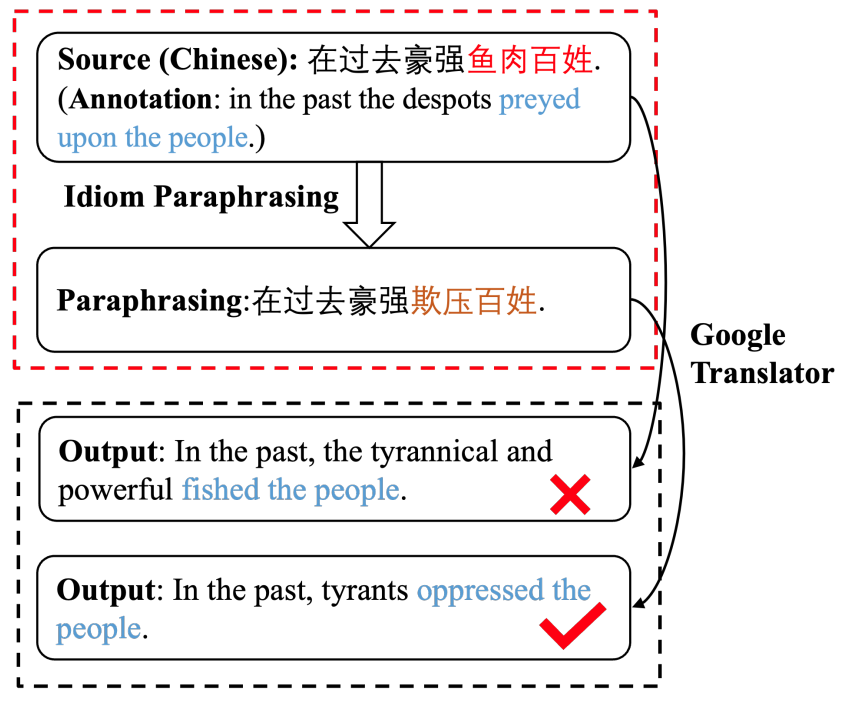

Chinese Idiom Paraphrasing (CIP) aims to rephrase the idioms in an input sentence to produce a fluent, meaning-preserving sentence that contains no idioms.

This repository provides the dataset and several approaches:

- LSTM approach
- Transformer approach
- mT5 approach
- Infill approach

- Python>=3.6

- torch>=1.7.1

- transformers==4.8.0

- fairseq==0.10.2
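The version pins above can be checked before training with a short script. This is only a minimal sketch: the helper names (`parse_version`, `satisfies`, `check`, `installed_versions`) are hypothetical and not part of this repository, and the version comparison is simplified (it ignores pre-release tags).

```python
import re

# Version pins copied from the requirements list above.
REQUIREMENTS = {
    "torch": ">=1.7.1",
    "transformers": "==4.8.0",
    "fairseq": "==0.10.2",
}


def parse_version(text):
    """Turn '1.7.1+cu110' into (1, 7, 1); build tags after '+' are ignored."""
    parts = [int(p) for p in re.findall(r"\d+", text.split("+")[0])[:3]]
    return tuple(parts + [0] * (3 - len(parts)))


def satisfies(installed, spec):
    """Check one installed version against a '>=x.y.z' or '==x.y.z' pin."""
    op, wanted = spec[:2], parse_version(spec[2:])
    have = parse_version(installed)
    return have >= wanted if op == ">=" else have == wanted


def check(installed):
    """Return a list of problems; an empty list means every pin is met."""
    problems = []
    for name, spec in REQUIREMENTS.items():
        have = installed.get(name)
        if have is None:
            problems.append(f"{name} is not installed")
        elif not satisfies(have, spec):
            problems.append(f"{name} {have} does not satisfy {spec}")
    return problems


def installed_versions():
    """Look up installed versions; importlib.metadata needs Python 3.8+."""
    from importlib.metadata import PackageNotFoundError, version

    found = {}
    for name in REQUIREMENTS:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            pass  # left out of the dict, so check() reports it as missing
    return found


if __name__ == "__main__":
    for problem in check(installed_versions()):
        print(problem)
```

Running the script prints one line per unmet requirement and prints nothing when the environment matches the pins.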

You can download all pre-trained models here (4s9n) and put them into the `model` directory.

If you want to train the models from scratch, download the pre-trained language model t5-pegasus (ZhuiyiTechnology) and place it under the `model` directory.
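The fairseq-based models described next are trained on text that has been word-segmented with jieba and then split into BPE subwords. As a rough illustration of what the BPE step does, the toy sketch below learns merge rules from a handful of already segmented words and applies them. It is not the Subword-nmt implementation, the function names are hypothetical, and the example words are made up for illustration rather than taken from the dataset.

```python
from collections import Counter


def merge_pair(symbols, pair):
    """Replace every adjacent occurrence of `pair` in `symbols` with one symbol."""
    out, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            out.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            out.append(symbols[i])
            i += 1
    return tuple(out)


def learn_bpe(words, num_merges):
    """Learn up to `num_merges` merge rules from a list of segmented words."""
    # Every word starts out as a sequence of single characters.
    vocab = Counter(tuple(word) for word in words)
    merges = []
    for _ in range(num_merges):
        # Count how often each adjacent symbol pair occurs, weighted by word frequency.
        pairs = Counter()
        for symbols, freq in vocab.items():
            for a, b in zip(symbols, symbols[1:]):
                pairs[(a, b)] += freq
        if not pairs:
            break
        best = max(pairs, key=pairs.get)
        merges.append(best)
        # Rewrite the vocabulary with the most frequent pair merged.
        merged = Counter()
        for symbols, freq in vocab.items():
            merged[merge_pair(symbols, best)] += freq
        vocab = merged
    return merges


def apply_bpe(word, merges):
    """Split a word into subwords by replaying the learned merges in order."""
    symbols = tuple(word)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return list(symbols)


# Hypothetical output of a word segmenter such as jieba.
segmented = ["半途而废", "半途", "半途", "而且"]
merges = learn_bpe(segmented, num_merges=1)
print(apply_bpe("半途而废", merges))
```

With a single merge, the most frequent character pair becomes one subword while rarer characters stay separate, which is how BPE keeps the vocabulary small while still covering rare words.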

To train the LSTM and Transformer models with fairseq, first tokenize the data with jieba and BPE. We use the scripts from Subword-nmt:

git clone https://github.com/rsennrich/subword-nmt

Then run:

sh prepare.sh

Train the LSTM, Transformer, t5-pegasus, and infill models:

sh train_lstm.sh

sh train_transformer.sh

sh train_t5_pegasus.sh

sh train_infill.sh

Run the following commands to generate paraphrases:

sh fairseq_generate.sh

sh generate_t5_pegasus.sh

sh generate_infill.sh

@article{qiang2022chinese,
  title={Chinese Idiom Paraphrasing},
  author={Qiang, Jipeng and Li, Yang and Zhang, Chaowei and Li, Yun and Yuan, Yunhao and Zhu, Yi and Wu, Xindong},
  journal={arXiv preprint arXiv:2204.07555},
  year={2022}
}