Note

This repository supplements the Medium story: Streaming Text Embeddings for Retrieval Augmented Generation (RAG)

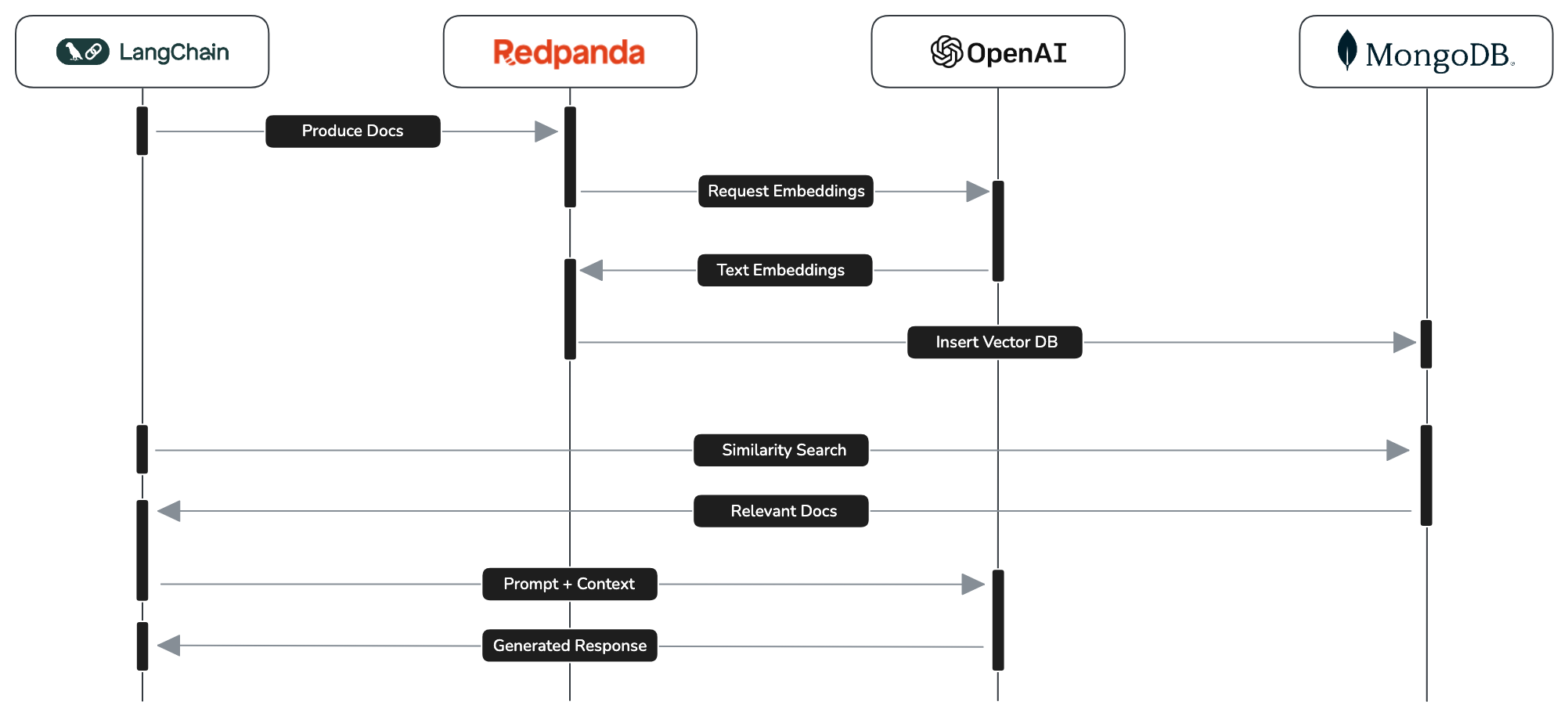

How to use Redpanda and Redpanda Connect to generate vector embeddings on streaming text.

Retrieval Augmented Generation (RAG) is best described by OpenAI as "the process of retrieving relevant contextual information from a data source and passing that information to a large language model alongside the user's prompt. This information is used to improve the model's output by augmenting the model's base knowledge".

RAG consists of two parts:

- The acquisition and persistence of new information that the model has no prior knowledge of. This new information (documents, webpages, etc.) is split into small chunks of text, and each chunk is stored in a vector database along with its vector embedding. This is the part we're going to do in near real time using Redpanda.

Note

Vector embeddings are numerical representations of text that encode its semantic meaning as a point in a vector space. Words with similar meanings are clustered closer together in this multidimensional space. Vector databases, such as MongoDB Atlas, can query vector embeddings to retrieve texts with a similar semantic meaning. A famous example of this structure is the word-vector analogy king - man + woman ≈ queen.

- The retrieval of relevant contextual information from a vector database (semantic search), and the passing of that information alongside a user's question (prompt) to the large language model to improve the model's generated answer.
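Semantic search ranks stored chunks by how close their embeddings are to the embedding of the question. The sketch below illustrates that ranking, assuming cosine similarity as the metric and using tiny hand-made 3-dimensional vectors in place of real OpenAI embeddings. The names `cosine_similarity`, `top_k` and the sample texts are hypothetical. In this demo the search itself runs inside MongoDB Atlas, not in Python.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means they point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query: list[float], store: dict[str, list[float]], k: int = 2) -> list[str]:
    """Return the k stored texts whose embeddings are most similar to the query."""
    ranked = sorted(store, key=lambda text: cosine_similarity(query, store[text]), reverse=True)
    return ranked[:k]


# Toy 3-dimensional "embeddings" (made-up values for illustration only).
store = {
    "100m final: medal results": [0.9, 0.1, 0.0],
    "Sprinters warm up before the 100m": [0.8, 0.3, 0.1],
    "Recipe for banana bread": [0.0, 0.2, 0.9],
}
query = [0.9, 0.15, 0.0]  # pretend embedding of "Who won the 100m?"

print(top_k(query, store))
# → ['100m final: medal results', 'Sprinters warm up before the 100m']
```

The unrelated banana bread text points in a very different direction, so it ranks last even though all three vectors share some components.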

In this demo, we'll build a stream processing pipeline on the Redpanda Platform that adds OpenAI text embeddings to messages as they stream through Redpanda on their way to a MongoDB Atlas vector database. We'll also use the LangChain framework to acquire new information and send it to Redpanda, and to build a prompt that retrieves relevant texts from the vector database and uses them as context when querying OpenAI's gpt-4o model.

Complete the setup prerequisites to prepare a local .env file that contains connection details for Redpanda Cloud, OpenAI, and MongoDB Atlas. The environment variables included in the .env file are used in this demo by the Python scripts and by Redpanda Connect for configuration interpolation:

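```bash
# Export the variables defined in .env into the current shell
export $(grep -v '^#' .env | xargs)

# Create and activate a Python virtual environment, then install the dependencies
python3 -m venv env
source env/bin/activate
pip install -r requirements.txt
```

To install Redpanda Connect, see: https://docs.redpanda.com/redpanda-connect/guides/getting_started/#install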
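The `export $(grep -v '^#' .env | xargs)` one-liner skips comment lines and exports each `KEY=VALUE` pair into the shell. If you would rather load the same file from Python, here is a minimal sketch. `load_env` and `export_env` are hypothetical helpers: they ignore blank lines and lines starting with `#`, and they do not handle quoting or variable expansion the way a shell would (the `python-dotenv` package is a more complete option). The variable names in the sample are illustrative only; your `.env` defines its own.

```python
import os


def load_env(text: str) -> dict[str, str]:
    """Parse KEY=VALUE lines, skipping blank lines and lines starting with '#'."""
    values = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")  # split on the first '=' only
        if sep:  # ignore lines that have no '=' at all
            values[key.strip()] = value.strip()
    return values


def export_env(path: str = ".env") -> None:
    """Read a .env file and copy its values into os.environ for this process."""
    with open(path, encoding="utf-8") as f:
        os.environ.update(load_env(f.read()))


# Hypothetical sample contents, not the demo's real variable names.
sample = """
# OpenAI credentials
OPENAI_API_KEY=sk-example
REDPANDA_BROKERS=localhost:9092
"""
print(load_env(sample))
# → {'OPENAI_API_KEY': 'sk-example', 'REDPANDA_BROKERS': 'localhost:9092'}
```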

The demo consists of four parts:

Ask OpenAI's gpt-4o model to answer a question about something that happened after its training cutoff date of October 2023 (i.e. something it hasn't learned about).

```bash
# Activate the Python environment
source env/bin/activate

# Prompt OpenAI without any context
python openai_rag.py -v -q "Name the atheletes and their nations that won Gold, Silver, and Bronze in the mens 100m at the Paris olympic games."

# gpt-4o doesn't know the answer
Question: Name the atheletes and their nations that won Gold, Silver, and Bronze in the mens 100m at the Paris olympic games.

Answer: I don't know.
```

Run a Redpanda Connect pipeline that consumes messages from a Redpanda topic named `documents` and passes each message to OpenAI's embeddings API to retrieve the vector embeddings for its text. The pipeline then inserts the enriched messages into a MongoDB Atlas database collection that has a vector search index.
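Conceptually, the pipeline maps each incoming message to the same message plus an embedding. The Python sketch below shows that per-message transform, assuming JSON messages with a `text` field and writing the vector to a field named `embedding`; both field names are assumptions, since the real mapping lives in `openai_rpcn.yaml`. A fake embedding function stands in for the OpenAI embeddings API so the sketch runs offline.

```python
import json
from typing import Callable

# An embedder turns a piece of text into a vector of floats.
Embedder = Callable[[str], list[float]]


def enrich(raw_message: bytes, embed: Embedder) -> dict:
    """Decode a JSON message and attach the embedding of its text (hypothetical schema)."""
    doc = json.loads(raw_message)
    doc["embedding"] = embed(doc["text"])
    return doc


def fake_embed(text: str) -> list[float]:
    """Offline stand-in for an embeddings API; NOT a real semantic embedding."""
    return [float(len(text)), float(text.count(" ") + 1)]


message = json.dumps({"text": "hello vector world", "source": "example"}).encode()
print(enrich(message, fake_embed))
# → {'text': 'hello vector world', 'source': 'example', 'embedding': [18.0, 3.0]}
```

Keeping the original fields alongside the vector means the document stored in MongoDB still carries the text that the similarity search will later return as context.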

```bash
rpk connect run --log.level debug openai_rpcn.yaml
```

```
INFO Running main config from specified file @service=redpanda-connect benthos_version=4.34.0 path=openai_rpcn.yaml
INFO Listening for HTTP requests at: http://0.0.0.0:4195 @service=redpanda-connect
INFO Launching a Redpanda Connect instance, use CTRL+C to close @service=redpanda-connect
INFO Input type kafka is now active @service=redpanda-connect label="" path=root.input
DEBU Starting consumer group @service=redpanda-connect label="" path=root.input
INFO Output type mongodb is now active @service=redpanda-connect label="" path=root.output
DEBU Consuming messages from topic 'documents' partition '0' @service=redpanda-connect label="" path=root.input
```

Download some relevant information from a reputable source, such as the BBC website. Split the text into smaller chunks using LangChain, and send the chunks to the `documents` topic.
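LangChain provides ready-made text splitters for this step; which splitter and settings `produce_documents.py` uses isn't shown here. As a dependency-free illustration of the idea, and not LangChain's algorithm, here is a fixed-size character splitter with overlap, so that text near a chunk boundary appears in two adjacent chunks and keeps some of its surrounding context. The function name and the `chunk_size`/`overlap` values are arbitrary assumptions.

```python
def split_text(text: str, chunk_size: int = 500, overlap: int = 50) -> list[str]:
    """Split text into fixed-size character chunks; consecutive chunks share `overlap` characters."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this chunk already reaches the end of the text
    return chunks


print(split_text("abcdefghij", chunk_size=4, overlap=1))
# → ['abcd', 'defg', 'ghij']
```

Each resulting chunk would then be sent to the `documents` topic as its own message.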

```bash
python produce_documents.py -u https://www.bbc.co.uk/sport/olympics/articles/clwyy8jwp2go
```

At this point the messages are picked up by the Redpanda Connect pipeline, enriched with vector embeddings, and inserted into MongoDB, ready for searching.

To complete the RAG demo, use LangChain to retrieve the information stored in MongoDB by performing a similarity search. The retrieved context is added to a prompt that asks OpenAI the same question as in the first step. The gpt-4o model can now use the additional context to generate a better response to the original question.
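The final step places the retrieved chunks into the prompt next to the question. Below is a minimal sketch of how such a prompt might be assembled; the template wording and the `build_prompt` name are assumptions for illustration, not the exact prompt used by `openai_rag.py` or by LangChain.

```python
def build_prompt(question: str, contexts: list[str]) -> str:
    """Combine retrieved context chunks and the user's question into a single prompt."""
    # Number each chunk so the model (and a human reading logs) can tell them apart.
    context_block = "\n\n".join(f"[{i}] {chunk}" for i, chunk in enumerate(contexts, start=1))
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say \"I don't know.\"\n\n"
        f"Context:\n{context_block}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


prompt = build_prompt(
    "Who won the men's 100m?",
    ["First retrieved chunk.", "Second retrieved chunk."],
)
print(prompt)
```

Telling the model to rely on the supplied context, and to say so when the context is insufficient, tends to reduce answers invented from its base knowledge.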

```bash
python openai_rag.py -v -q "Name the atheletes and their nations that won Gold, Silver, and Bronze in the mens 100m at the Paris olympic games."
```

```
Question: Name the atheletes and their nations that won Gold, Silver, and Bronze in the mens 100m at the Paris olympic games.

Answer: The athletes who won medals in the men's 100m at the Paris Olympic Games are:
- Gold: Noah Lyles (USA)
- Silver: Kishane Thompson (Jamaica)
- Bronze: Fred Kerley (USA)
```