In recent years King County, WA, has seen cost of living climb well above state and national averages, and the high cost of housing is a major factor in this increase. A news article published by the University of Washington in 2017 reported that the cost of basic living essentials for a family of four in Seattle had increased by $30,000 (62%) over the previous decade, with housing and childcare eating up half of a family's budget.

For middle-income families, home ownership can be a path to building wealth. How can a family maximize the return on their investment in home ownership? What factors affect the selling prices of midrange homes? What can homeowners do to increase the value of their homes while they occupy them?

This project addresses these questions using data from the King County Housing dataset. To make the results more relevant to middle-income families, my focus is on houses with two to five bedrooms and with a median price of $425,000.* The top 10% most expensive houses are omitted. The resulting multiple linear regression model can help middle-income families understand how changes within their reach, like adding a bedroom or remodeling a bathroom, can increase the sale price of their homes.

*Assuming excellent credit, a down-payment of 14%, and the ability to spend about 1/3 of monthly gross income on a mortgage payment, $425,000 would be an affordable home price for a family making King County's median household income of $89,675. I used NerdWallet's mortgage calculator to estimate this.

(see Interpretation below for deeper discussion)

- Adding a bathroom or increasing square footage by 100 square feet could raise the price of a home by over $8,300.

- Improving the condition of a home by one level (e.g., from 3 to 4) could raise the price of the home by over $21,000.

- Improving the grade of a home by one level (e.g., from 7 to 8) could raise the price of the home by over $42,000.

My model explains 82% of the variability in home prices.

Here's what you'll find in this repository:

- pricing-midrange-homes-king-co-wa.ipynb: Jupyter Notebook containing project code

- column_names.md: Markdown file containing the data dictionary for the dataset provided by Flatiron School

- kc_house_data.csv: CSV file containing the dataset provided by Flatiron School

- presentation.pdf: PDF file containing slides from a non-technical presentation of project results

For more technical details, see the Appendix below.

To get a sense for what is in the King County Housing dataset, first I will do some basic exploration of the entire dataset. After cleaning, another round of exploration will help clarify trends in the data specific to midrange housing.

First, I import the packages and data I need and take a look at the first few rows to see what's there.

# Import needed packages and read in the data

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

%matplotlib inline

data = pd.read_csv('kc_house_data.csv')# View first few rows of the dataset

data.head().dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

| id | date | price | bedrooms | bathrooms | sqft_living | sqft_lot | floors | waterfront | view | ... | grade | sqft_above | sqft_basement | yr_built | yr_renovated | zipcode | lat | long | sqft_living15 | sqft_lot15 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 7129300520 | 10/13/2014 | 221900.0 | 3 | 1.00 | 1180 | 5650 | 1.0 | NaN | 0.0 | ... | 7 | 1180 | 0.0 | 1955 | 0.0 | 98178 | 47.5112 | -122.257 | 1340 | 5650 |

| 1 | 6414100192 | 12/9/2014 | 538000.0 | 3 | 2.25 | 2570 | 7242 | 2.0 | 0.0 | 0.0 | ... | 7 | 2170 | 400.0 | 1951 | 1991.0 | 98125 | 47.7210 | -122.319 | 1690 | 7639 |

| 2 | 5631500400 | 2/25/2015 | 180000.0 | 2 | 1.00 | 770 | 10000 | 1.0 | 0.0 | 0.0 | ... | 6 | 770 | 0.0 | 1933 | NaN | 98028 | 47.7379 | -122.233 | 2720 | 8062 |

| 3 | 2487200875 | 12/9/2014 | 604000.0 | 4 | 3.00 | 1960 | 5000 | 1.0 | 0.0 | 0.0 | ... | 7 | 1050 | 910.0 | 1965 | 0.0 | 98136 | 47.5208 | -122.393 | 1360 | 5000 |

| 4 | 1954400510 | 2/18/2015 | 510000.0 | 3 | 2.00 | 1680 | 8080 | 1.0 | 0.0 | 0.0 | ... | 8 | 1680 | 0.0 | 1987 | 0.0 | 98074 | 47.6168 | -122.045 | 1800 | 7503 |

5 rows × 21 columns

Already I can see that there is some data that may not be useful at all. id appears to be a unique identifying number for each sale record. sqft_living appears to be the sum of sqft_above and sqft_basement. I can also see some missing values.

Let's check for missing values throughout the dataset.

Missing values can cause problems when it comes time to build my regression model, so I want to be sure to find and resolve them. If there are any duplicate observations, I will want to remove those.

First, let's check for missing values in each column.

# View counts and data types by column

data.info()<class 'pandas.core.frame.DataFrame'>

RangeIndex: 21597 entries, 0 to 21596

Data columns (total 21 columns):

id 21597 non-null int64

date 21597 non-null object

price 21597 non-null float64

bedrooms 21597 non-null int64

bathrooms 21597 non-null float64

sqft_living 21597 non-null int64

sqft_lot 21597 non-null int64

floors 21597 non-null float64

waterfront 19221 non-null float64

view 21534 non-null float64

condition 21597 non-null int64

grade 21597 non-null int64

sqft_above 21597 non-null int64

sqft_basement 21597 non-null object

yr_built 21597 non-null int64

yr_renovated 17755 non-null float64

zipcode 21597 non-null int64

lat 21597 non-null float64

long 21597 non-null float64

sqft_living15 21597 non-null int64

sqft_lot15 21597 non-null int64

dtypes: float64(8), int64(11), object(2)

memory usage: 3.5+ MB

Above I can see that there are a few columns with missing values (and some whose data types may need to be changed).

There's a more direct way to see how many missing values there are and where:

# Check for missing values by column

data.isna().sum()id 0

date 0

price 0

bedrooms 0

bathrooms 0

sqft_living 0

sqft_lot 0

floors 0

waterfront 2376

view 63

condition 0

grade 0

sqft_above 0

sqft_basement 0

yr_built 0

yr_renovated 3842

zipcode 0

lat 0

long 0

sqft_living15 0

sqft_lot15 0

dtype: int64

It looks like there are a lot of missing values in waterfront and yr_renovated and a few in view. These can be filled during the cleaning phase.

Let's check for duplicate observations. I'll do this two ways: first by searching for observations that are completely identical, and then by searching for duplicate values in the id column, since I believe that to be a unique identifier for each home sale.

# Check for duplicate records

print('Number of duplicate records: ', sum(data.duplicated()))Number of duplicate records: 0

# Check for duplicate IDs

display(data['id'].value_counts().head())

# Count non-unique IDs

id_value_counts = data['id'].value_counts()

num_repeat_ids = len(id_value_counts[id_value_counts > 1])*2 + 1

print('Number of non-unique IDs: ', num_repeat_ids)795000620 3

1825069031 2

2019200220 2

7129304540 2

1781500435 2

Name: id, dtype: int64

Number of non-unique IDs: 353

I can see both from the value counts and from my calculation that there are a number of non-unique values in the id column, but these are few in relation to the overall size of the dataset.

If the records are not identical, and just the ids are repeated, how are these records different from one another?

# Inspect a few of the records with duplicate IDs

display(data[data['id'] == 795000620])

display(data[data['id'] == 1825069031])

# display(data[data['id'] == 2019200220])

# display(data[data['id'] == 7129304540])

# display(data[data['id'] == 1781500435]).dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

| id | date | price | bedrooms | bathrooms | sqft_living | sqft_lot | floors | waterfront | view | ... | grade | sqft_above | sqft_basement | yr_built | yr_renovated | zipcode | lat | long | sqft_living15 | sqft_lot15 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 17588 | 795000620 | 9/24/2014 | 115000.0 | 3 | 1.0 | 1080 | 6250 | 1.0 | 0.0 | 0.0 | ... | 5 | 1080 | 0.0 | 1950 | 0.0 | 98168 | 47.5045 | -122.33 | 1070 | 6250 |

| 17589 | 795000620 | 12/15/2014 | 124000.0 | 3 | 1.0 | 1080 | 6250 | 1.0 | 0.0 | 0.0 | ... | 5 | 1080 | 0.0 | 1950 | 0.0 | 98168 | 47.5045 | -122.33 | 1070 | 6250 |

| 17590 | 795000620 | 3/11/2015 | 157000.0 | 3 | 1.0 | 1080 | 6250 | 1.0 | NaN | 0.0 | ... | 5 | 1080 | 0.0 | 1950 | NaN | 98168 | 47.5045 | -122.33 | 1070 | 6250 |

3 rows × 21 columns

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

| id | date | price | bedrooms | bathrooms | sqft_living | sqft_lot | floors | waterfront | view | ... | grade | sqft_above | sqft_basement | yr_built | yr_renovated | zipcode | lat | long | sqft_living15 | sqft_lot15 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 3946 | 1825069031 | 8/14/2014 | 550000.0 | 4 | 1.75 | 2410 | 8447 | 2.0 | 0.0 | 3.0 | ... | 8 | 2060 | 350.0 | 1936 | 1980.0 | 98074 | 47.6499 | -122.088 | 2520 | 14789 |

| 3947 | 1825069031 | 10/16/2014 | 550000.0 | 4 | 1.75 | 2410 | 8447 | 2.0 | NaN | 3.0 | ... | 8 | 2060 | 350.0 | 1936 | 1980.0 | 98074 | 47.6499 | -122.088 | 2520 | 14789 |

2 rows × 21 columns

On inspection, I can see that the observations really are different even when the id value is the same: different dates, different prices, etc. Since I expect to drop the id column before modeling anyway, I will leave these observations in the dataset and assume that they represent different sales of the same house or some other meaningful difference.

Categorical values can interfere with modeling if they are not handled correctly, so it is important to identify them early on. I'll use four approaches: viewing summary statistics, counting unique values, looking at histograms, and looking at scatter plots.

First, let's view summary statistics for each of the numeric columns and see if any of them have particularly small ranges, perhaps made up of mostly integers. (This is also a good opportunity to get a feel for the distributions of all the numeric variables.)

# Check summary statistics of numeric columns

data.describe().dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

| id | price | bedrooms | bathrooms | sqft_living | sqft_lot | floors | waterfront | view | condition | grade | sqft_above | yr_built | yr_renovated | zipcode | lat | long | sqft_living15 | sqft_lot15 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| count | 2.159700e+04 | 2.159700e+04 | 21597.000000 | 21597.000000 | 21597.000000 | 2.159700e+04 | 21597.000000 | 19221.000000 | 21534.000000 | 21597.000000 | 21597.000000 | 21597.000000 | 21597.000000 | 17755.000000 | 21597.000000 | 21597.000000 | 21597.000000 | 21597.000000 | 21597.000000 |

| mean | 4.580474e+09 | 5.402966e+05 | 3.373200 | 2.115826 | 2080.321850 | 1.509941e+04 | 1.494096 | 0.007596 | 0.233863 | 3.409825 | 7.657915 | 1788.596842 | 1970.999676 | 83.636778 | 98077.951845 | 47.560093 | -122.213982 | 1986.620318 | 12758.283512 |

| std | 2.876736e+09 | 3.673681e+05 | 0.926299 | 0.768984 | 918.106125 | 4.141264e+04 | 0.539683 | 0.086825 | 0.765686 | 0.650546 | 1.173200 | 827.759761 | 29.375234 | 399.946414 | 53.513072 | 0.138552 | 0.140724 | 685.230472 | 27274.441950 |

| min | 1.000102e+06 | 7.800000e+04 | 1.000000 | 0.500000 | 370.000000 | 5.200000e+02 | 1.000000 | 0.000000 | 0.000000 | 1.000000 | 3.000000 | 370.000000 | 1900.000000 | 0.000000 | 98001.000000 | 47.155900 | -122.519000 | 399.000000 | 651.000000 |

| 25% | 2.123049e+09 | 3.220000e+05 | 3.000000 | 1.750000 | 1430.000000 | 5.040000e+03 | 1.000000 | 0.000000 | 0.000000 | 3.000000 | 7.000000 | 1190.000000 | 1951.000000 | 0.000000 | 98033.000000 | 47.471100 | -122.328000 | 1490.000000 | 5100.000000 |

| 50% | 3.904930e+09 | 4.500000e+05 | 3.000000 | 2.250000 | 1910.000000 | 7.618000e+03 | 1.500000 | 0.000000 | 0.000000 | 3.000000 | 7.000000 | 1560.000000 | 1975.000000 | 0.000000 | 98065.000000 | 47.571800 | -122.231000 | 1840.000000 | 7620.000000 |

| 75% | 7.308900e+09 | 6.450000e+05 | 4.000000 | 2.500000 | 2550.000000 | 1.068500e+04 | 2.000000 | 0.000000 | 0.000000 | 4.000000 | 8.000000 | 2210.000000 | 1997.000000 | 0.000000 | 98118.000000 | 47.678000 | -122.125000 | 2360.000000 | 10083.000000 |

| max | 9.900000e+09 | 7.700000e+06 | 33.000000 | 8.000000 | 13540.000000 | 1.651359e+06 | 3.500000 | 1.000000 | 4.000000 | 5.000000 | 13.000000 | 9410.000000 | 2015.000000 | 2015.000000 | 98199.000000 | 47.777600 | -121.315000 | 6210.000000 | 871200.000000 |

It is clear from the results that there are some wide ranges in some columns. price, for example, ranges from $78,000 to over \$7 million, and sqft_living from 370 to over 13,000 square feet. Other variables could be categorical, such as view and condition, where there seem to be few unique values representing levels of quality rather than some truly quantitative measure.

Let's check the number of unique values in each column to see if we find any other potential categorical variables.

# Check the number of unique values in each column

unique_vals_list = []

for col in data.columns:

unique_vals_list.append({'column': col, 'unique values': len(data[col].unique())})

pd.DataFrame(unique_vals_list).dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

| column | unique values | |

|---|---|---|

| 0 | id | 21420 |

| 1 | date | 372 |

| 2 | price | 3622 |

| 3 | bedrooms | 12 |

| 4 | bathrooms | 29 |

| 5 | sqft_living | 1034 |

| 6 | sqft_lot | 9776 |

| 7 | floors | 6 |

| 8 | waterfront | 3 |

| 9 | view | 6 |

| 10 | condition | 5 |

| 11 | grade | 11 |

| 12 | sqft_above | 942 |

| 13 | sqft_basement | 304 |

| 14 | yr_built | 116 |

| 15 | yr_renovated | 71 |

| 16 | zipcode | 70 |

| 17 | lat | 5033 |

| 18 | long | 751 |

| 19 | sqft_living15 | 777 |

| 20 | sqft_lot15 | 8682 |

It is clear that floors, waterfront, view, and condition have few unique values (and we have already seen that some of those values are missing anyway). bedrooms and grade might also be good candidates for one-hot encoding after we have narrowed the dataset to just the midrange homes.

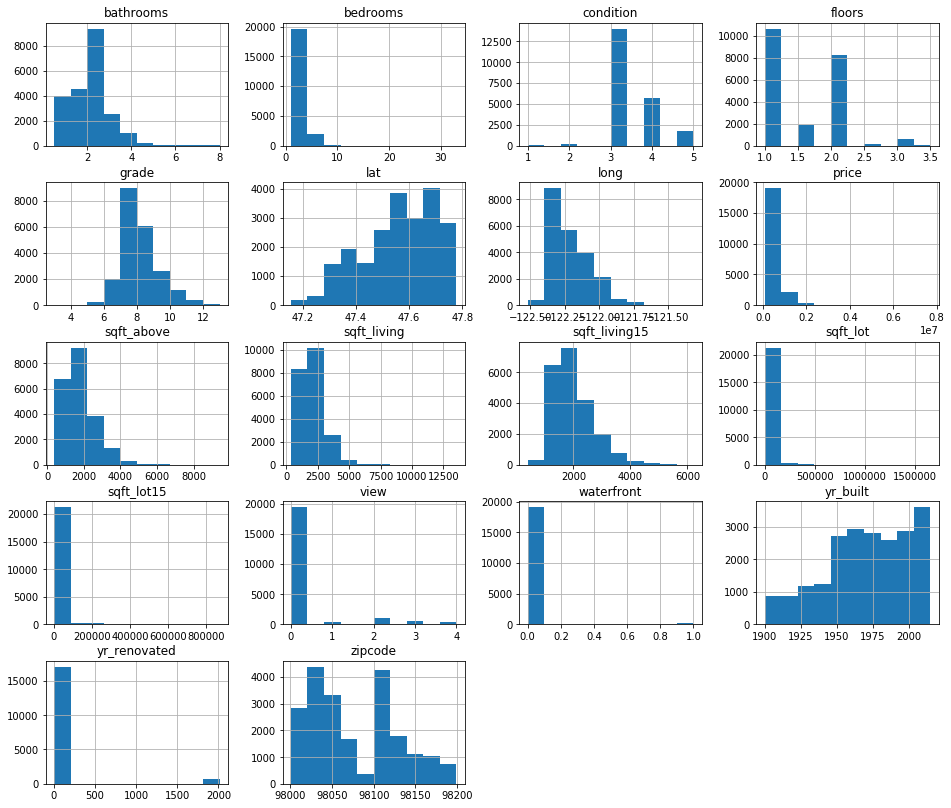

Now let's check the histograms:

# Define a function to create histograms

def hist_it(data):

"""Creates histograms of all numeric columns in a DataFrame"""

data.hist(figsize=(16,14))# Create histograms for numerical variables

data_for_hist = data.drop(['id'], axis=1)

hist_it(data_for_hist)Let's also take a look at scatter plots for each numeric variable. Coincidentally, the scatter matrix below will let us do an initial check for linear relationships between the independent variables and the target of our analysis, price.

# Create a scatter_matrix to look for categorical variables and linear relationships

pd.plotting.scatter_matrix(data.drop(['id'], axis=1), figsize=[16,14]);Based on the plots, value counts, and summary statistics, I think that floors, waterfront, view, condition, and grade may all be candidates for one-hot encoding in the final dataset. There is also zipcode to consider; although there are many unique values in that column, a home's zipcode is more like a category it belongs to than a quantitative measure of some kind.

I choose to continue to treat all variables as continuous for the first round of modeling, but below I will create a copy of my dataset with zipcode one-hot encoded to try as well.

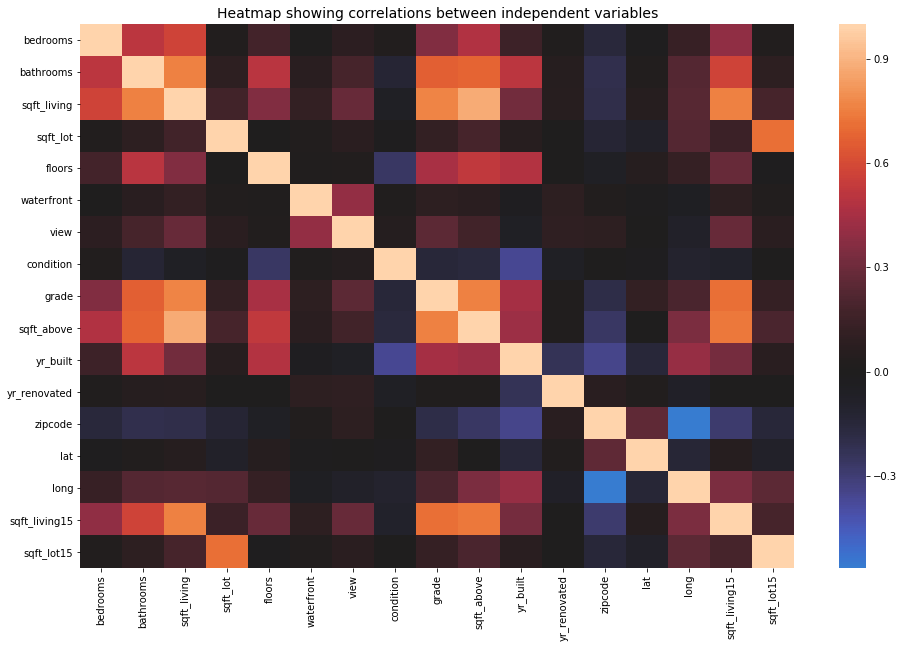

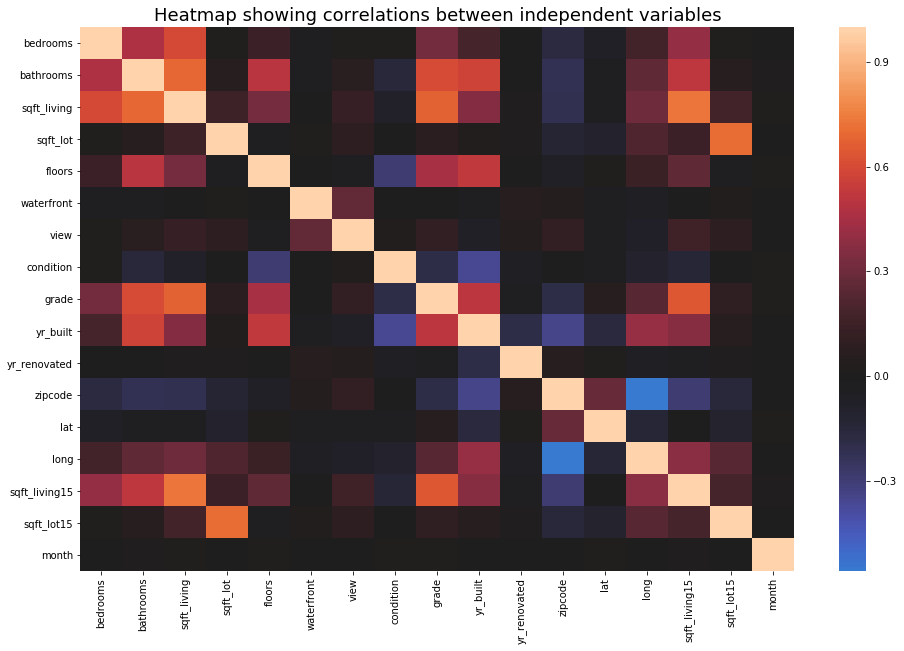

Multicollinearity (when two or more independent variables are strongly correlated with each other) can cause a model to appear better than it really is; those correlations amplify the effects of the correlated features. If such correlations are found, one variable from each correlated pair can be dropped from the model to counteract the effect of multicollinearity.

I will check for correlations among the features using a correlation matrix and a heatmap, below, and using 0.8 as the threshold for "strong" correlation.

# Create a boolean correlation matrix to highlight strong correlations

abs(data.corr()) > 0.8.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

| id | price | bedrooms | bathrooms | sqft_living | sqft_lot | floors | waterfront | view | condition | grade | sqft_above | yr_built | yr_renovated | zipcode | lat | long | sqft_living15 | sqft_lot15 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| id | True | False | False | False | False | False | False | False | False | False | False | False | False | False | False | False | False | False | False |

| price | False | True | False | False | False | False | False | False | False | False | False | False | False | False | False | False | False | False | False |

| bedrooms | False | False | True | False | False | False | False | False | False | False | False | False | False | False | False | False | False | False | False |

| bathrooms | False | False | False | True | False | False | False | False | False | False | False | False | False | False | False | False | False | False | False |

| sqft_living | False | False | False | False | True | False | False | False | False | False | False | True | False | False | False | False | False | False | False |

| sqft_lot | False | False | False | False | False | True | False | False | False | False | False | False | False | False | False | False | False | False | False |

| floors | False | False | False | False | False | False | True | False | False | False | False | False | False | False | False | False | False | False | False |

| waterfront | False | False | False | False | False | False | False | True | False | False | False | False | False | False | False | False | False | False | False |

| view | False | False | False | False | False | False | False | False | True | False | False | False | False | False | False | False | False | False | False |

| condition | False | False | False | False | False | False | False | False | False | True | False | False | False | False | False | False | False | False | False |

| grade | False | False | False | False | False | False | False | False | False | False | True | False | False | False | False | False | False | False | False |

| sqft_above | False | False | False | False | True | False | False | False | False | False | False | True | False | False | False | False | False | False | False |

| yr_built | False | False | False | False | False | False | False | False | False | False | False | False | True | False | False | False | False | False | False |

| yr_renovated | False | False | False | False | False | False | False | False | False | False | False | False | False | True | False | False | False | False | False |

| zipcode | False | False | False | False | False | False | False | False | False | False | False | False | False | False | True | False | False | False | False |

| lat | False | False | False | False | False | False | False | False | False | False | False | False | False | False | False | True | False | False | False |

| long | False | False | False | False | False | False | False | False | False | False | False | False | False | False | False | False | True | False | False |

| sqft_living15 | False | False | False | False | False | False | False | False | False | False | False | False | False | False | False | False | False | True | False |

| sqft_lot15 | False | False | False | False | False | False | False | False | False | False | False | False | False | False | False | False | False | False | True |

# Create the correlation heatmap

data_for_scatter_matrix = data.drop(['price', 'id'], axis=1)

plt.figure(figsize=(16,10))

sns.heatmap(data_for_scatter_matrix.corr(), center=0)

plt.title('Heatmap showing correlations between independent variables', fontsize=14)

plt.show();The only pair of features with a correlation coefficient of 0.8 or above is sqft_living and sqft_above. As stated earlier, sqft_living is the sum of sqft_above and sqft_basement, so sqft_above will be dropped from the dataset anyway, avoiding any potential effects caused by multicollinearity.

Now that we have seen the basic characteristics of the whole dataset, it is time to trim it down to the part that is most relevant to middle-income families: houses with 2 to 5 bedrooms and not in the top 10% by price. This is also the time to resolve any missing values, drop unneeded columns, and then reexamine the data visually to understand the characteristics of our more focused dataset.

These are the main cleaning steps I will take:

- Filter the dataset to observations with 2-5 bedrooms.

- Filter the dataset to observations with price below the 90th percentile.

- Resolve missing values in the

waterfront,view, andyr_renovatedcolumns. - Calculate a new column,

month, from the date column. - Drop the following columns:

id,date,sqft_above,sqft_basement.

First, let's focus in on the midrange homes by filtering out the biggest and most expensive ones.

# Filter the dataset

midrange_homes = data[(data['price'] < np.quantile(data['price'], 0.9))

& (data['bedrooms'].isin(range(2, 6)))]

# View the first few rows

midrange_homes.head().dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

| id | date | price | bedrooms | bathrooms | sqft_living | sqft_lot | floors | waterfront | view | ... | grade | sqft_above | sqft_basement | yr_built | yr_renovated | zipcode | lat | long | sqft_living15 | sqft_lot15 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 7129300520 | 10/13/2014 | 221900.0 | 3 | 1.00 | 1180 | 5650 | 1.0 | NaN | 0.0 | ... | 7 | 1180 | 0.0 | 1955 | 0.0 | 98178 | 47.5112 | -122.257 | 1340 | 5650 |

| 1 | 6414100192 | 12/9/2014 | 538000.0 | 3 | 2.25 | 2570 | 7242 | 2.0 | 0.0 | 0.0 | ... | 7 | 2170 | 400.0 | 1951 | 1991.0 | 98125 | 47.7210 | -122.319 | 1690 | 7639 |

| 2 | 5631500400 | 2/25/2015 | 180000.0 | 2 | 1.00 | 770 | 10000 | 1.0 | 0.0 | 0.0 | ... | 6 | 770 | 0.0 | 1933 | NaN | 98028 | 47.7379 | -122.233 | 2720 | 8062 |

| 3 | 2487200875 | 12/9/2014 | 604000.0 | 4 | 3.00 | 1960 | 5000 | 1.0 | 0.0 | 0.0 | ... | 7 | 1050 | 910.0 | 1965 | 0.0 | 98136 | 47.5208 | -122.393 | 1360 | 5000 |

| 4 | 1954400510 | 2/18/2015 | 510000.0 | 3 | 2.00 | 1680 | 8080 | 1.0 | 0.0 | 0.0 | ... | 8 | 1680 | 0.0 | 1987 | 0.0 | 98074 | 47.6168 | -122.045 | 1800 | 7503 |

5 rows × 21 columns

Let's check the effect of our filtering by looking at the summary statistics again:

# View summary statistics

midrange_homes.describe().dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

| id | price | bedrooms | bathrooms | sqft_living | sqft_lot | floors | waterfront | view | condition | grade | sqft_above | yr_built | yr_renovated | zipcode | lat | long | sqft_living15 | sqft_lot15 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| count | 1.899900e+04 | 18999.000000 | 18999.000000 | 18999.000000 | 18999.000000 | 1.899900e+04 | 18999.000000 | 16906.000000 | 18943.000000 | 18999.000000 | 18999.000000 | 18999.000000 | 18999.000000 | 15614.000000 | 18999.000000 | 18999.000000 | 18999.000000 | 18999.000000 | 18999.000000 |

| mean | 4.623793e+09 | 451033.600453 | 3.285910 | 2.012816 | 1912.888047 | 1.413348e+04 | 1.463682 | 0.002189 | 0.145331 | 3.405127 | 7.464498 | 1659.656298 | 1971.048424 | 66.947931 | 98078.543344 | 47.553219 | -122.212729 | 1882.645929 | 12188.540871 |

| std | 2.878978e+09 | 177148.927445 | 0.782848 | 0.672443 | 706.241075 | 3.852339e+04 | 0.536461 | 0.046732 | 0.582127 | 0.643411 | 0.959870 | 671.456125 | 28.847826 | 359.290326 | 53.294178 | 0.143337 | 0.142935 | 574.261538 | 25473.821194 |

| min | 1.200019e+06 | 78000.000000 | 2.000000 | 0.500000 | 440.000000 | 5.200000e+02 | 1.000000 | 0.000000 | 0.000000 | 1.000000 | 4.000000 | 440.000000 | 1900.000000 | 0.000000 | 98001.000000 | 47.155900 | -122.519000 | 399.000000 | 651.000000 |

| 25% | 2.154950e+09 | 310000.000000 | 3.000000 | 1.500000 | 1380.000000 | 5.000000e+03 | 1.000000 | 0.000000 | 0.000000 | 3.000000 | 7.000000 | 1160.000000 | 1952.000000 | 0.000000 | 98033.000000 | 47.451150 | -122.330000 | 1460.000000 | 5040.000000 |

| 50% | 3.943600e+09 | 425000.000000 | 3.000000 | 2.000000 | 1810.000000 | 7.486000e+03 | 1.000000 | 0.000000 | 0.000000 | 3.000000 | 7.000000 | 1480.000000 | 1975.000000 | 0.000000 | 98065.000000 | 47.562200 | -122.233000 | 1780.000000 | 7515.000000 |

| 75% | 7.350400e+09 | 570000.000000 | 4.000000 | 2.500000 | 2340.000000 | 1.015000e+04 | 2.000000 | 0.000000 | 0.000000 | 4.000000 | 8.000000 | 2030.000000 | 1996.000000 | 0.000000 | 98118.000000 | 47.680700 | -122.122000 | 2230.000000 | 9750.000000 |

| max | 9.900000e+09 | 886000.000000 | 5.000000 | 5.000000 | 5820.000000 | 1.651359e+06 | 3.500000 | 1.000000 | 4.000000 | 5.000000 | 12.000000 | 5450.000000 | 2015.000000 | 2015.000000 | 98199.000000 | 47.777600 | -121.315000 | 4670.000000 | 560617.000000 |

Bedrooms now range from 2 to 5, and the median price is now $425,000.

Let's check again for missing values; some may have been eliminated by the filtering.

# Check for missing values by column

midrange_homes.isna().sum()id 0

date 0

price 0

bedrooms 0

bathrooms 0

sqft_living 0

sqft_lot 0

floors 0

waterfront 2093

view 56

condition 0

grade 0

sqft_above 0

sqft_basement 0

yr_built 0

yr_renovated 3385

zipcode 0

lat 0

long 0

sqft_living15 0

sqft_lot15 0

dtype: int64

I can see above that there are still 2,093 missing values in the waterfront column. I'll investigate further.

# View value counts for 'waterfront'

midrange_homes['waterfront'].value_counts()0.0 16869

1.0 37

Name: waterfront, dtype: int64

Out of about 19,000 observations, only 37 have a value of 1 (presumably meaning "yes") in the waterfront column. If we take a quick look at median prices of homes with waterfront == 1 versus those where it equals 0...

# Print medians of homes with and without 'waterfront'

print(midrange_homes[midrange_homes['waterfront'] == 1]['price'].median())

print(midrange_homes[midrange_homes['waterfront'] == 0]['price'].median())635000.0

425000.0

...it is clear that waterfront probably has a pretty strong effect on price, but the vast majority of houses are not experiencing this effect. For this reason, I will fill missing waterfront values with 0, assuming that when the creators of this dataset left a value blank, the property was probably not on the waterfront anyway.

# Fill NaNs with 0.0 because it is the mode

midrange_homes['waterfront'] = midrange_homes['waterfront'].fillna(0.0)

midrange_homes['waterfront'] = midrange_homes['waterfront'].astype('int64')

midrange_homes.info()<class 'pandas.core.frame.DataFrame'>

Int64Index: 18999 entries, 0 to 21596

Data columns (total 21 columns):

id 18999 non-null int64

date 18999 non-null object

price 18999 non-null float64

bedrooms 18999 non-null int64

bathrooms 18999 non-null float64

sqft_living 18999 non-null int64

sqft_lot 18999 non-null int64

floors 18999 non-null float64

waterfront 18999 non-null int64

view 18943 non-null float64

condition 18999 non-null int64

grade 18999 non-null int64

sqft_above 18999 non-null int64

sqft_basement 18999 non-null object

yr_built 18999 non-null int64

yr_renovated 15614 non-null float64

zipcode 18999 non-null int64

lat 18999 non-null float64

long 18999 non-null float64

sqft_living15 18999 non-null int64

sqft_lot15 18999 non-null int64

dtypes: float64(7), int64(12), object(2)

memory usage: 3.2+ MB

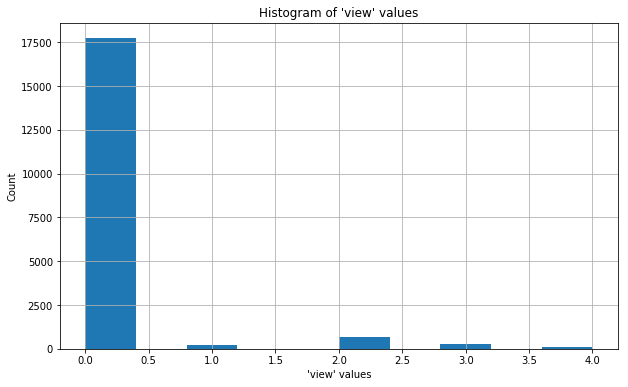

The documentation for this dataset is not very clear on what view represents. It seems likely, given what little has been said about it, that view gives some indication of whether or how much a home was viewed prior to sale. Because the maximum value of view is only 4, it seems likely that this is not an absolute count, but perhaps some kind of "index" or "tier" of how much viewing went on (i.e., a categorical variable). If we take a quick look at the distribution of values in view...

# Create a histogram of 'view' values

plt.figure(figsize=(10,6))

midrange_homes['view'].hist()

plt.title('Histogram of \'view\' values')

plt.xlabel('\'view\' values')

plt.ylabel('Count')

plt.show();...we can see that mode of values in this column is 0 by a long shot. We can see from the summary statistics table (above) that 0 is also the minimum value, the 25th percentile, the median, and the 75% percentile. For these reasons, I will reassign all missing values to 0, since this will not change the overall distribution of the data.

# Fill NaNs with 0.0 and check that missing `view` values are now resolved

midrange_homes['view'] = midrange_homes['view'].fillna(0.0).astype('int64')

midrange_homes.info()<class 'pandas.core.frame.DataFrame'>

Int64Index: 18999 entries, 0 to 21596

Data columns (total 21 columns):

id 18999 non-null int64

date 18999 non-null object

price 18999 non-null float64

bedrooms 18999 non-null int64

bathrooms 18999 non-null float64

sqft_living 18999 non-null int64

sqft_lot 18999 non-null int64

floors 18999 non-null float64

waterfront 18999 non-null int64

view 18999 non-null int64

condition 18999 non-null int64

grade 18999 non-null int64

sqft_above 18999 non-null int64

sqft_basement 18999 non-null object

yr_built 18999 non-null int64

yr_renovated 15614 non-null float64

zipcode 18999 non-null int64

lat 18999 non-null float64

long 18999 non-null float64

sqft_living15 18999 non-null int64

sqft_lot15 18999 non-null int64

dtypes: float64(6), int64(13), object(2)

memory usage: 3.2+ MB

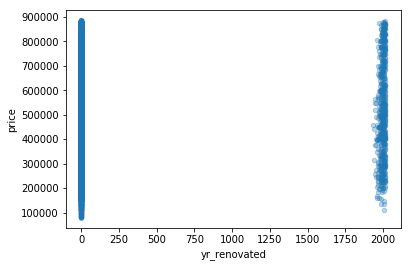

From the histogram below, it is clear that most yr_renovated values are 0. I assume this means that a house has not had substantial renovation since its construction.

# Create a histogram of 'yr_renovated' values

plt.figure(figsize=(10,6))

midrange_homes['yr_renovated'].hist()

plt.title('Histogram of \'yr_renovated\' values')

plt.xlabel('\'yr_renovated\' values')

plt.ylabel('Count')

plt.show();Since 0 is the mode, I will replace the missing values with 0:

# Fill NaNs with 0 and cast to dtype 'int64'

midrange_homes['yr_renovated'] = midrange_homes['yr_renovated'].fillna(0)

midrange_homes['yr_renovated'] = midrange_homes['yr_renovated'].astype('int64')

# Confirm that all missing values have been resolved

midrange_homes.info()<class 'pandas.core.frame.DataFrame'>

Int64Index: 18999 entries, 0 to 21596

Data columns (total 21 columns):

id 18999 non-null int64

date 18999 non-null object

price 18999 non-null float64

bedrooms 18999 non-null int64

bathrooms 18999 non-null float64

sqft_living 18999 non-null int64

sqft_lot 18999 non-null int64

floors 18999 non-null float64

waterfront 18999 non-null int64

view 18999 non-null int64

condition 18999 non-null int64

grade 18999 non-null int64

sqft_above 18999 non-null int64

sqft_basement 18999 non-null object

yr_built 18999 non-null int64

yr_renovated 18999 non-null int64

zipcode 18999 non-null int64

lat 18999 non-null float64

long 18999 non-null float64

sqft_living15 18999 non-null int64

sqft_lot15 18999 non-null int64

dtypes: float64(5), int64(14), object(2)

memory usage: 3.2+ MB

I want to have the option to explore seasonality in sale prices. Since the dataset comes from 2014-2015 and I want to be able to generalize to other years, I will focus on the month in which each sale was made. I will extract the number of the month from each date into a new column.

# Create 'month' column

midrange_homes['month'] = [x.split('/')[0] for x in midrange_homes['date']]

midrange_homes['month'] = pd.to_numeric(midrange_homes['month'],

errors='coerce')

# Confirm that 'month' exists and is numeric

midrange_homes.info()<class 'pandas.core.frame.DataFrame'>

Int64Index: 18999 entries, 0 to 21596

Data columns (total 22 columns):

id 18999 non-null int64

date 18999 non-null object

price 18999 non-null float64

bedrooms 18999 non-null int64

bathrooms 18999 non-null float64

sqft_living 18999 non-null int64

sqft_lot 18999 non-null int64

floors 18999 non-null float64

waterfront 18999 non-null int64

view 18999 non-null int64

condition 18999 non-null int64

grade 18999 non-null int64

sqft_above 18999 non-null int64

sqft_basement 18999 non-null object

yr_built 18999 non-null int64

yr_renovated 18999 non-null int64

zipcode 18999 non-null int64

lat 18999 non-null float64

long 18999 non-null float64

sqft_living15 18999 non-null int64

sqft_lot15 18999 non-null int64

month 18999 non-null int64

dtypes: float64(5), int64(15), object(2)

memory usage: 3.3+ MB

I have decided to drop the following columns for the reasons given below:

-

id: The values in this column simply identify each observation, and I don't expect them to be useful for modeling. -

date: The whole date is not analytically interesting to me, but the month is, so I extracted that data to a separate column. -

sqft_aboveandsqft_basement: As stated above,sqft_livingappears to be the sum of these two values. Since there is not much a homeowner could do to shift the balance of 'above' space to basement, I don't expect these features to be useful for my model.

# Drop unneeded columns

midrange_homes.drop(['id', 'date', 'sqft_above', 'sqft_basement'],

axis=1, inplace=True)

# Review the remaining columns

midrange_homes.info()<class 'pandas.core.frame.DataFrame'>

Int64Index: 18999 entries, 0 to 21596

Data columns (total 18 columns):

price 18999 non-null float64

bedrooms 18999 non-null int64

bathrooms 18999 non-null float64

sqft_living 18999 non-null int64

sqft_lot 18999 non-null int64

floors 18999 non-null float64

waterfront 18999 non-null int64

view 18999 non-null int64

condition 18999 non-null int64

grade 18999 non-null int64

yr_built 18999 non-null int64

yr_renovated 18999 non-null int64

zipcode 18999 non-null int64

lat 18999 non-null float64

long 18999 non-null float64

sqft_living15 18999 non-null int64

sqft_lot15 18999 non-null int64

month 18999 non-null int64

dtypes: float64(5), int64(13)

memory usage: 2.8 MB

A look at the .info() above shows that we now have 18,999 rows and 18 columns. All columns are of numeric data types, and there are no missing values.

Let's revisit our histograms now that the data has been cleaned.

# Create histograms of numeric features

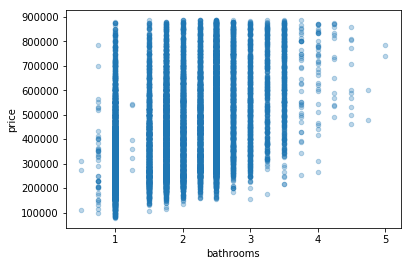

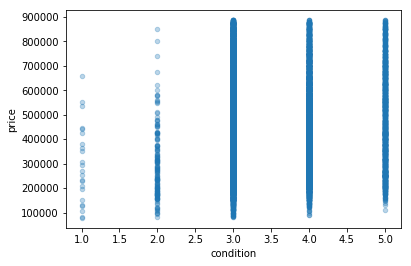

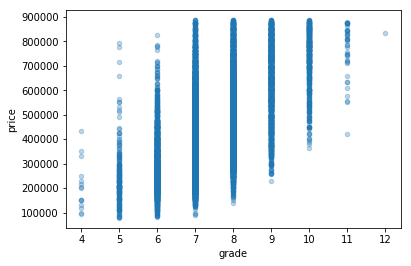

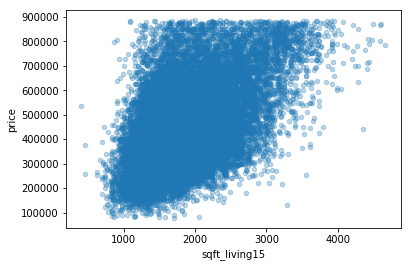

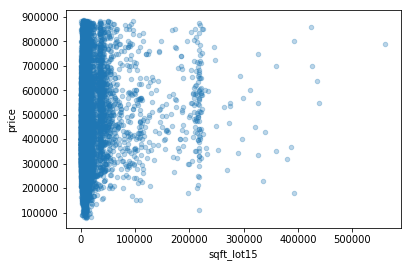

hist_it(midrange_homes)Note that selecting the homes with lower prices and 2-5 bedrooms has also caused the ranges of some other variables to shrink. grade, for instance, now only goes up to 10, sqft_living to about 5,000, and bathrooms up to 5.

Now let's examine scatterplots of each feature against price:

# Create scatterplots of all columns v. price

for column in midrange_homes.drop('price', axis=1):

midrange_homes.plot(kind='scatter', x=column, y='price', alpha=0.3)

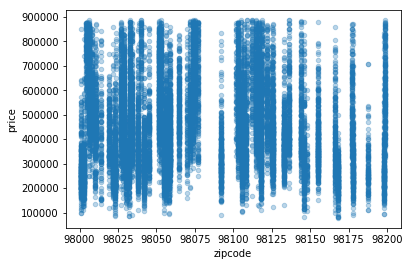

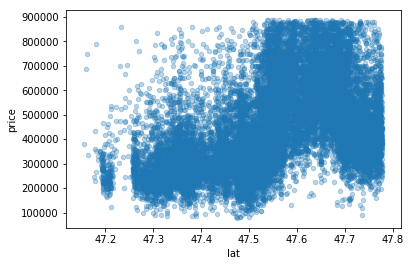

plt.show();Most features appear to have a more or less linear relationship with price. The distribution of points in zipcode makes me wonder if there are actually two subsets in this data: homes in the Seattle area (with zipcodes of 98100 and up), and homes elsewhere in the county. Depending on the results of my first attempt at modeling, I may decide to reexamine the effect that location may be having on the model.

Now that the dataset has been trimmed down a bit, let's re-check for multicollinearity.

# Create a boolean correlation matrix to highlight strong correlations

abs(midrange_homes.corr()) > 0.8.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

| price | bedrooms | bathrooms | sqft_living | sqft_lot | floors | waterfront | view | condition | grade | yr_built | yr_renovated | zipcode | lat | long | sqft_living15 | sqft_lot15 | month | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| price | True | False | False | False | False | False | False | False | False | False | False | False | False | False | False | False | False | False |

| bedrooms | False | True | False | False | False | False | False | False | False | False | False | False | False | False | False | False | False | False |

| bathrooms | False | False | True | False | False | False | False | False | False | False | False | False | False | False | False | False | False | False |

| sqft_living | False | False | False | True | False | False | False | False | False | False | False | False | False | False | False | False | False | False |

| sqft_lot | False | False | False | False | True | False | False | False | False | False | False | False | False | False | False | False | False | False |

| floors | False | False | False | False | False | True | False | False | False | False | False | False | False | False | False | False | False | False |

| waterfront | False | False | False | False | False | False | True | False | False | False | False | False | False | False | False | False | False | False |

| view | False | False | False | False | False | False | False | True | False | False | False | False | False | False | False | False | False | False |

| condition | False | False | False | False | False | False | False | False | True | False | False | False | False | False | False | False | False | False |

| grade | False | False | False | False | False | False | False | False | False | True | False | False | False | False | False | False | False | False |

| yr_built | False | False | False | False | False | False | False | False | False | False | True | False | False | False | False | False | False | False |

| yr_renovated | False | False | False | False | False | False | False | False | False | False | False | True | False | False | False | False | False | False |

| zipcode | False | False | False | False | False | False | False | False | False | False | False | False | True | False | False | False | False | False |

| lat | False | False | False | False | False | False | False | False | False | False | False | False | False | True | False | False | False | False |

| long | False | False | False | False | False | False | False | False | False | False | False | False | False | False | True | False | False | False |

| sqft_living15 | False | False | False | False | False | False | False | False | False | False | False | False | False | False | False | True | False | False |

| sqft_lot15 | False | False | False | False | False | False | False | False | False | False | False | False | False | False | False | False | True | False |

| month | False | False | False | False | False | False | False | False | False | False | False | False | False | False | False | False | False | True |

# Create the correlation heatmap

data_for_scatter_matrix = midrange_homes.drop(['price'], axis=1)

plt.figure(figsize=(16,10))

sns.heatmap(data_for_scatter_matrix.corr(), center=0)

plt.title('Heatmap showing correlations between independent variables',

fontsize=18)

plt.show();Good news: there appear to be no correlations with coefficients higher than my chosen threshold of 0.8.

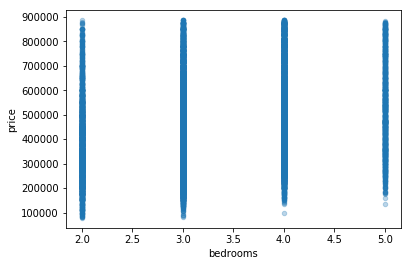

Having seen the histograms and scatterplots, I now want to examine a few features in detail to answer the following questions:

-

Is there a number of bedrooms that causes a big jump up in price (e.g., three versus two, or four versus three)?

-

Are there times of year when prices tend to be lower or higher?

-

What is the difference in price between a home of a middling grade (e.g., 7) and one of a higher grade (e.g., 10)?

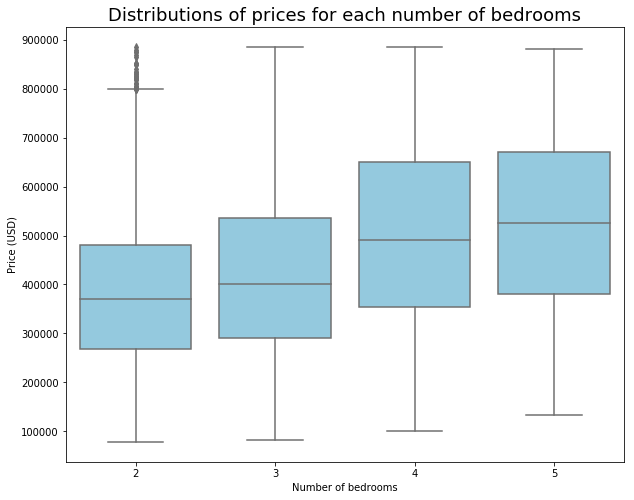

Is there a number of bedrooms that causes a big jump up in price (e.g., three versus two, or four versus three)? Let's examine some boxplots to find out.

# Create boxplots for 'bedrooms' v. 'price'

plt.figure(figsize=(10,8))

sns.boxplot(midrange_homes['bedrooms'], midrange_homes['price'], color='skyblue')

plt.title('Distributions of prices for each number of bedrooms', fontsize=18)

plt.xlabel('Number of bedrooms')

plt.ylabel('Price (USD)')

plt.show();# Calculate percent differences in median prices

medians = []

for n in range(2,6):

medians.append(midrange_homes[midrange_homes['bedrooms'] == n]['price'].median())

percent_differences = []

for m in range(0,len(medians)-1):

percent_differences.append(round(((medians[m+1] - medians[m]) / medians[m]),2))

percent_differences [0.08, 0.22, 0.07]

The biggest difference in median price is between four and three bedrooms, where there is an increase of 22%.

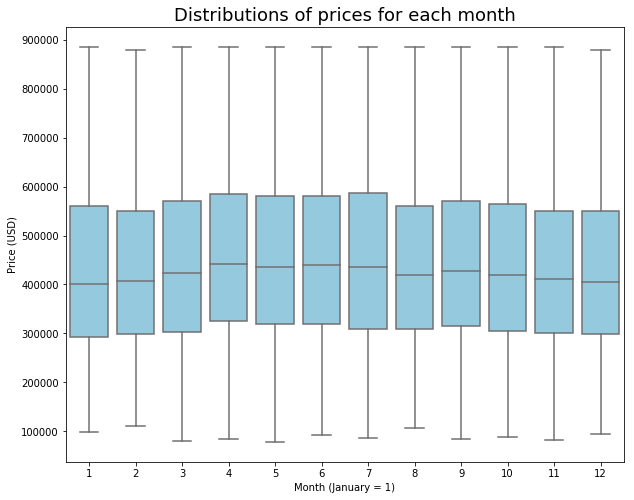

Are there times of year when prices tend to be lower or higher?

# Create a boxplot to compare 'month' and 'price'

plt.figure(figsize=(10,8))

sns.boxplot(midrange_homes['month'], midrange_homes['price'], color='skyblue')

plt.title('Distributions of prices for each month', fontsize=18)

plt.xlabel('Month (January = 1)')

plt.ylabel('Price (USD)')

plt.show();Although houses sell at a wide range of prices every month, median prices appear to be higher in April through July than in November through February.

Let's compare April and January median prices directly:

april_med = midrange_homes[midrange_homes['month'] == 4]['price'].median()

january_med = midrange_homes[midrange_homes['month'] == 1]['price'].median()

april_med - january_med41000.0

There is a difference in median house price of $41,000 between April and January! Although this analysis doesn't consider any other factors (like whether houses sold in April are bigger than those sold in January), there could be an advantage to timing the sale of one's home for the spring/summer as opposed to winter.

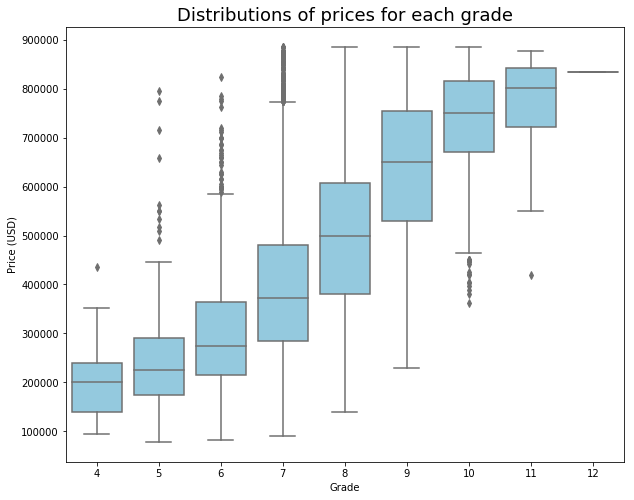

What is the difference in price between a home of a middling grade (e.g., 7) and one of a higher grade (e.g., 10)?

# Create boxplots to compare 'grade' and 'price'

plt.figure(figsize=(10,8))

sns.boxplot(midrange_homes['grade'], midrange_homes['price'], color='skyblue')

plt.title('Distributions of prices for each grade', fontsize=18)

plt.xlabel('Grade')

plt.ylabel('Price (USD)')

plt.show();It looks like there could be substantial differences in price based on the grade of a house. For instance, only the outliers of grade-5 houses fall within the price range of grade-11 houses.

Let's make a direct comparison between the median prices of grade-7 and grade-10 homes:

grade_7_med = midrange_homes[midrange_homes['grade'] == 7]['price'].median()

grade_10_med = midrange_homes[midrange_homes['grade'] == 10]['price'].median()

grade_10_med - grade_7_med378805.0

There is a huge difference (almost $379,000) between the median prices of grade-7 and grade-10 homes. Improving the grade of a home by that much is probably outside the reach of most homeowners. What if a homeowner could improve the grade of their home from 7 to 8?

grade_8_med = midrange_homes[midrange_homes['grade'] == 8]['price'].median()

grade_8_med - grade_7_med126500.0

Based on the boxplots above, we can see that the jump in median price from grade 7 to grade 8 is a big one, but if a homeowner could manage it, it could pay off. The median price of a grade-8 home is $126,500 higher than the median price of a grade-7 home. Again, this is without considering any other factors, like the size or condition of these homes.

Because King County is home to Seattle, one of the most expensive housing markets in the US, there is probably some spatial patterning in home price, size, and quality across the county. Although a home-owning family probably cannot move their house to a new location, a family shopping for a home would want to know where they might find homes in their price range, or how much more house they could get by living a little farther from central Seattle.

Let's use lat and long to take a look at where midrange homes can be found in King County.

# Define a function to create map-like scatter plots with color code

def locate_it(data, latitude, longitude, feature):

"""Create a scatterplot from lat/long data with color code.

Parameters:

data: a DataFrame

latitude: the name of the column in your DataFrame that contains

the latitude values. Pass this as a string.

longitude: the name of the column in your DataFrame that contains

the longitude values. Pass this as a string.

feature: the name of the column whose values you want to use as

the values for your color code. Pass this as a string.

Dependencies: matplotlib

Returns: scatterplot"""

plt.figure(figsize=(16,12))

cmap = plt.cm.get_cmap('RdYlBu')

sc = plt.scatter(data[longitude], data[latitude],

c=data[feature], vmin=min(data[feature]),

vmax=max(data[feature]), alpha=0.5, s=5, cmap=cmap)

plt.colorbar(sc)

plt.xlabel('Longitude')

plt.ylabel('Latitude')

plt.title('House {} by location'.format(feature), fontsize=18)

plt.show();# Call locate_it for price by location

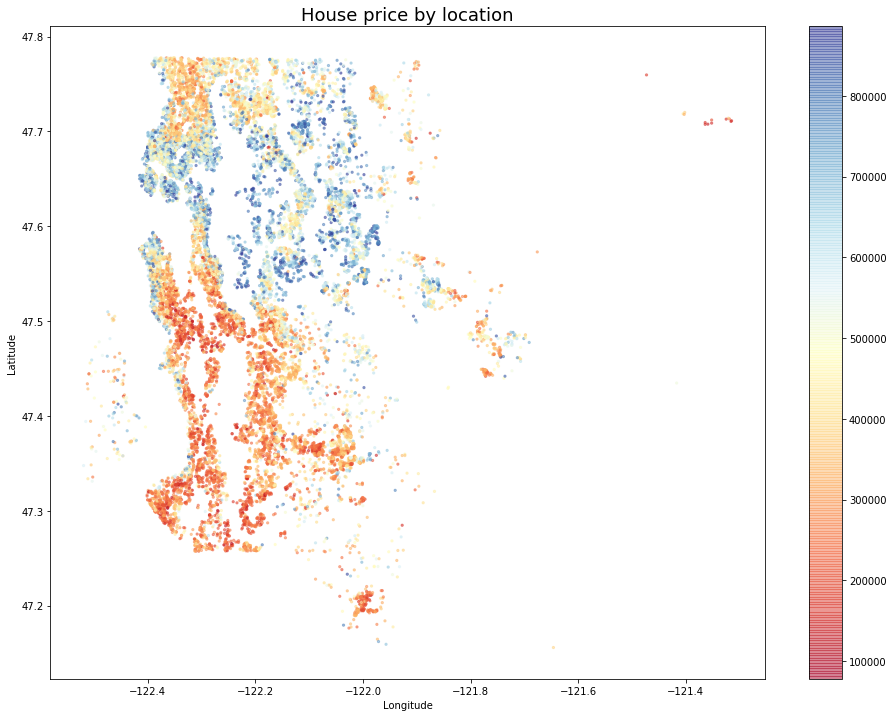

locate_it(midrange_homes, 'lat', 'long', 'price')This scatterplot confirms that the most expensive midrange homes (blue dots) can be found in Seattle, around Lake Washington, and in some other waterfront locations. Less expensive homes (red dots) are concentrated in the southwest part of the county.

# Call locate_it for sqft_living by location

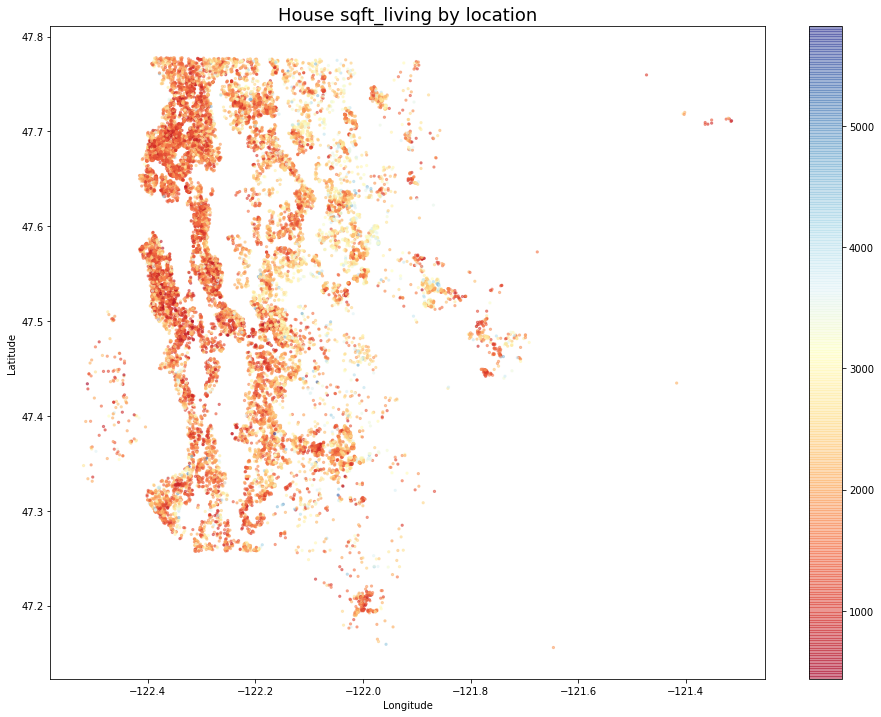

locate_it(midrange_homes, 'lat', 'long', 'sqft_living')Midrange homes tend to be smaller, which is why there are so few blue dots on this plot. It's hard to understand the range of home sizes represented here, so I'll plot it again with some adjustments to the color scale.

# Customize the plot for sqft_living by location

plt.figure(figsize=(16,12))

cmap = plt.cm.get_cmap('RdYlBu')

sc = plt.scatter(midrange_homes['long'], midrange_homes['lat'],

c=midrange_homes['sqft_living'],

vmin=min(midrange_homes['sqft_living']),

vmax=np.percentile(midrange_homes['sqft_living'], 90),

alpha=0.5, s=5, cmap=cmap)

plt.colorbar(sc)

plt.xlabel('Longitude')

plt.ylabel('Latitude')

plt.title('House square footage by location\n(Darkest blue = 90th percentile of size)', fontsize=14)

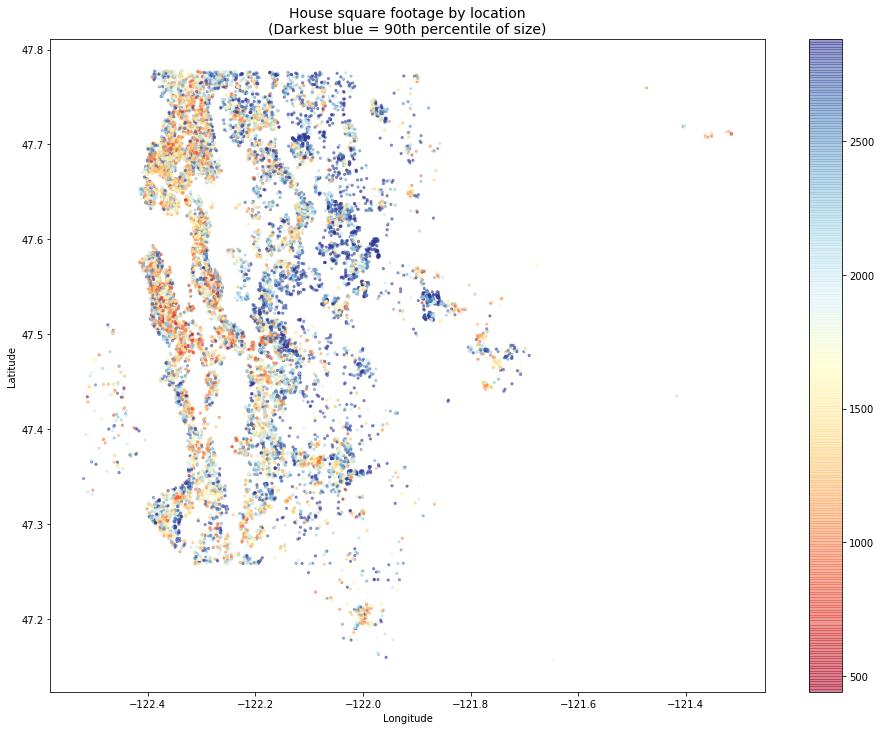

plt.show();That's a little easier to understand. Midrange homes over 2,000 square feet can be found across much of western King County except in Seattle proper, where they are less common.

What about house quality? Let's visualize homes by grade.

# Call locate_it for grade by location

locate_it(midrange_homes, 'lat', 'long', 'grade')This plot shows that the highest quality midrange homes by grade can be found to the east of Lake Washington, while there seem to be more low-quality homes in the central and southwestern parts of the county. Overall, midrange homes tend to have grades in the middle of the range of possible values, from 6 to 10.

Finally, let's look at the condition of midrange homes.

locate_it(midrange_homes, 'lat', 'long', 'condition')This plot contains some good news for middle-income home buyers: homes in good condition (blue dots) can be found all over King County, and midrange homes are almost all of middling condition or better.

I anticipate that I may want to examine the effect that location (expressed through zipcode) has on price, so I'll create a copy of my midrange_homes DataFrame with dummy variables for the various zipcodes.

# Generate dummy variables

zip_dummies = pd.get_dummies(midrange_homes['zipcode'], prefix='zip')# Drop the original 'zipcode' column

mh_no_zips = midrange_homes.drop('zipcode', axis=1)

# Concatenate the dummies to the copy of 'midrange_homes'

mh_zips_encoded = pd.concat([mh_no_zips, zip_dummies], axis=1)

# Preview the new DataFrame

mh_zips_encoded.head().dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

| price | bedrooms | bathrooms | sqft_living | sqft_lot | floors | waterfront | view | condition | grade | ... | zip_98146 | zip_98148 | zip_98155 | zip_98166 | zip_98168 | zip_98177 | zip_98178 | zip_98188 | zip_98198 | zip_98199 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 221900.0 | 3 | 1.00 | 1180 | 5650 | 1.0 | 0 | 0 | 3 | 7 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 |

| 1 | 538000.0 | 3 | 2.25 | 2570 | 7242 | 2.0 | 0 | 0 | 3 | 7 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 2 | 180000.0 | 2 | 1.00 | 770 | 10000 | 1.0 | 0 | 0 | 3 | 6 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 3 | 604000.0 | 4 | 3.00 | 1960 | 5000 | 1.0 | 0 | 0 | 5 | 7 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 4 | 510000.0 | 3 | 2.00 | 1680 | 8080 | 1.0 | 0 | 0 | 3 | 8 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

5 rows × 87 columns

# Drop one of the dummy variables

mh_zips_encoded.drop('zip_98168', axis=1, inplace=True)

# Check the head again

mh_zips_encoded.head().dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

| price | bedrooms | bathrooms | sqft_living | sqft_lot | floors | waterfront | view | condition | grade | ... | zip_98144 | zip_98146 | zip_98148 | zip_98155 | zip_98166 | zip_98177 | zip_98178 | zip_98188 | zip_98198 | zip_98199 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 221900.0 | 3 | 1.00 | 1180 | 5650 | 1.0 | 0 | 0 | 3 | 7 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 |

| 1 | 538000.0 | 3 | 2.25 | 2570 | 7242 | 2.0 | 0 | 0 | 3 | 7 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 2 | 180000.0 | 2 | 1.00 | 770 | 10000 | 1.0 | 0 | 0 | 3 | 6 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 3 | 604000.0 | 4 | 3.00 | 1960 | 5000 | 1.0 | 0 | 0 | 5 | 7 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 4 | 510000.0 | 3 | 2.00 | 1680 | 8080 | 1.0 | 0 | 0 | 3 | 8 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

5 rows × 86 columns

Once I have seen how my model performs on midrange_homes, I can try again with mh_zips_encoded to see if treating zipcode as a categorical variable improves model performance.

Working on the data in midrange_homes, I will use multiple linear regression to try to create a model that explains at least 70% of the variability in price.

# Import statsmodels

import statsmodels.api as sm

from statsmodels.formula.api import ols# Split the cleaned data into features and target

mh_features = midrange_homes.drop(['price'], axis=1)

mh_target = midrange_homes['price']# Define a function to run OLS and return model summary

def model_it(data, features):

"""Fit an OLS model and return model summary

data: a DataFrame containing both features and target

features: identical to 'data', but with the target dropped"""

features_sum = '+'.join(features.columns)

formula = 'price' + '~' + features_sum

model = ols(formula=formula, data=data).fit()

return model.summary()# Fit the model and return summary

model_it(midrange_homes, mh_features)OLS Regression Results*

R-squared: 0.675

Adj. R-squared: 0.675

(See project notebook for full printout of model results.)

The first attempt at this model yields some interesting results. An adjusted R-squared value of 0.675 means that the model explains 67.5% of the variability in price, which is close to my goal of 70%. Near the bottom of the model summary, the skew and kurtosis are not too far from the target values (skew = 0, kurtosis = 3) that would mean that the residuals are normally distributed. The Jarque-Bera score of 866 is very high, however, and that suggests that the residuals are not normally distributed--not a good thing for linear regression!

Let's see if the model can be improved by dropping the two features with p-values over my chosen threshold of 0.05: long and sqft_lot15. Those high p-values indicate that the effects of those features are not significant, and reducing the overall number of features could improve the adjusted R-squared value.

I'll run the model again, this time minus the two features whose p-values were above my significance threshold of 0.05 (long and sqft_lot15).

# Drop unwanted features and rerun the modeling function

mh_features_fewer = mh_features.drop(['long', 'sqft_lot15'], axis=1)

model_it(midrange_homes, mh_features_fewer)OLS Regression Results*

R-squared: 0.675

Adj. R-squared: 0.675

(See project notebook for full printout of model results.)

Dropping those features did not change the adjusted R-squared at all, but now all of the feature coefficients are significant. At least we can be certain that the impacts those features have on price are significant.

From the coefficients, we can get a few important insights:

- For every additional square foot of living space, we can expect the price of a home to go up by $62.99.

- A step up in condition could raise the price by over $23,900.

- A step up in grade could raise the price by over $65,300.

This time I will try to fit a model to my alternate DataFrame, mh_zips_encoded, which uses one-hot encoding on the original zipcode feature.

# Split the data into features and target

mh_zips_encoded_features = mh_zips_encoded.drop(['price'], axis=1)

mh_zips_encoded_target = mh_zips_encoded['price']model_it(mh_zips_encoded, mh_zips_encoded_features)OLS Regression Results

R-squared: 0.821

Adj. R-squared: 0.821

(See project notebook for full printout of model results.)

That's a huge improvement in model performance, with an adjusted R-squared value of 0.821 compared to 0.675 without one-hot encoding of zipcodes. Many of the features, however, are not having impacts that are statistically significant (their p-values are over my chosen threshold of 0.05). Now it's time to see if I can eliminate some of the less significant features while maintaining or improving model performance.

I will use stepwise feature selection to try to narrow my list of features to only those that are really affecting price. I got the code for stepwise_selection below from the Flatiron School data science curriculum.

# Paste in code for 'stepwise_selection' function

def stepwise_selection(X, y,

initial_list=[],

threshold_in=0.01,

threshold_out = 0.05,

verbose=True):

""" Perform a forward-backward feature selection

based on p-value from statsmodels.api.OLS

Parameters:

X: pandas.DataFrame with candidate features

y: list-like with the target

initial_list: list of features to start with (column names of X)

threshold_in: include a feature if its p-value < threshold_in

threshold_out: exclude a feature if its p-value > threshold_out

verbose: whether to print the sequence of inclusions and exclusions

Returns: list of selected features

Always set threshold_in < threshold_out to avoid infinite looping.

See https://en.wikipedia.org/wiki/Stepwise_regression for the details

"""

included = list(initial_list)

while True:

changed=False

# forward step

excluded = list(set(X.columns)-set(included))

new_pval = pd.Series(index=excluded)

for new_column in excluded:

model = sm.OLS(y, sm.add_constant(pd.DataFrame(X[included+[new_column]]))).fit()

new_pval[new_column] = model.pvalues[new_column]

best_pval = new_pval.min()

if best_pval < threshold_in:

best_feature = new_pval.idxmin()

included.append(best_feature)

changed=True

if verbose:

print('Add {:30} with p-value {:.6}'.format(best_feature, best_pval))

# backward step

model = sm.OLS(y, sm.add_constant(pd.DataFrame(X[included]))).fit()

# use all coefs except intercept

pvalues = model.pvalues.iloc[1:]

worst_pval = pvalues.max() # null if pvalues is empty

if worst_pval > threshold_out:

changed=True

worst_feature = pvalues.argmax()

included.remove(worst_feature)

if verbose:

print('Drop {:30} with p-value {:.6}'.format(worst_feature, worst_pval))

if not changed:

break

return includedresult = stepwise_selection(mh_zips_encoded_features, mh_zips_encoded_target, verbose = True)

print('resulting features:')

print(result)resulting features:

['bathrooms', 'sqft_living15', 'grade', 'yr_built', 'lat', 'sqft_living', 'zip_98155', 'zip_98028', 'zip_98004', 'view', 'zip_98019', 'zip_98040', 'zip_98011', 'condition', 'zip_98022', 'zip_98014', 'sqft_lot', 'zip_98178', 'zip_98112', 'zip_98116', 'zip_98119', 'zip_98058', 'zip_98006', 'zip_98136', 'waterfront', 'zip_98005', 'zip_98199', 'zip_98109', 'zip_98010', 'zip_98122', 'zip_98029', 'zip_98055', 'month', 'zip_98102', 'zip_98105', 'zip_98027', 'zip_98075', 'zip_98103', 'zip_98031', 'zip_98107', 'zip_98188', 'zip_98039', 'yr_renovated', 'zip_98115', 'zip_98117', 'zip_98033', 'zip_98126', 'zip_98144', 'zip_98008', 'zip_98070', 'long', 'zip_98007', 'zip_98052', 'zip_98074', 'zip_98118', 'zip_98053', 'zip_98166', 'zip_98042', 'zip_98032', 'zip_98146', 'zip_98030', 'zip_98106', 'zip_98059', 'zip_98092', 'zip_98023', 'zip_98177', 'zip_98125', 'zip_98056', 'zip_98034', 'zip_98108', 'zip_98065', 'zip_98024']

(See project notebook for full printout.)

Now I will rerun the model using just the features selected by stepwise_selection.

Note that I am using the list of features selected when I applied stepwise selection the first time; rerunning this notebook may produce slightly different results in the list above than what you see in the cell below.

# Make a new DataFrame using just the selected features

stepwise_selections = ['grade', 'yr_built', 'lat', 'sqft_living15',

'sqft_living', 'zip_98155', 'zip_98028', 'zip_98004',

'view', 'zip_98019', 'zip_98040', 'zip_98011',

'condition', 'zip_98022', 'zip_98014', 'sqft_lot',

'zip_98178', 'zip_98112', 'zip_98116', 'zip_98119',

'zip_98058', 'zip_98136', 'zip_98006', 'waterfront',

'zip_98109', 'zip_98005', 'zip_98199', 'zip_98122',

'zip_98010', 'zip_98029', 'zip_98102', 'month',

'zip_98055', 'zip_98105', 'zip_98027', 'zip_98103',

'zip_98107', 'zip_98075', 'zip_98115', 'zip_98117',

'zip_98033', 'zip_98126', 'zip_98144', 'zip_98008',

'yr_renovated', 'zip_98039', 'long', 'zip_98070',

'zip_98007', 'zip_98052', 'zip_98118', 'zip_98074',

'zip_98053', 'zip_98166', 'zip_98031', 'bathrooms',

'zip_98042', 'zip_98146', 'zip_98106', 'zip_98032',

'zip_98030', 'zip_98188', 'zip_98059', 'zip_98092',

'zip_98023', 'zip_98177', 'zip_98125', 'zip_98056',

'zip_98034', 'zip_98108', 'zip_98065', 'zip_98024' ]

cols_to_drop = ([col for col in mh_zips_encoded.columns

if not col in stepwise_selections])

mh_selected = mh_zips_encoded.drop(cols_to_drop, axis=1)# Preview the new DataFrame

mh_selected.head().dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

| bathrooms | sqft_living | sqft_lot | waterfront | view | condition | grade | yr_built | yr_renovated | lat | ... | zip_98126 | zip_98136 | zip_98144 | zip_98146 | zip_98155 | zip_98166 | zip_98177 | zip_98178 | zip_98188 | zip_98199 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1.00 | 1180 | 5650 | 0 | 0 | 3 | 7 | 1955 | 0 | 47.5112 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 |

| 1 | 2.25 | 2570 | 7242 | 0 | 0 | 3 | 7 | 1951 | 1991 | 47.7210 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 2 | 1.00 | 770 | 10000 | 0 | 0 | 3 | 6 | 1933 | 0 | 47.7379 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 3 | 3.00 | 1960 | 5000 | 0 | 0 | 5 | 7 | 1965 | 0 | 47.5208 | ... | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 4 | 2.00 | 1680 | 8080 | 0 | 0 | 3 | 8 | 1987 | 0 | 47.6168 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

5 rows × 72 columns

Finally, I will try to fit the multiple linear regression model again, this time using my new DataFrame, which contains only the features chosen by stepwise selection.

# Prepare the inputs and call model_it

model_it(mh_zips_encoded, mh_selected)OLS Regression Results

R-squared: 0.821

Adj. R-squared: 0.820

(See project notebook for full printout of model results.)

The model summary above shows that eliminating some features through stepwise selection caused the adjusted R-squared value to drop by 0.001 while eliminating those features with p-values over 0.05. Not bad! This model summary can give us some important insights about home pricing.

According to this model:

-

Adding a bathroom or increasing square footage by 100 square feet could raise the price of a home by over $8,300.

-

Improving the condition of a home by one level (e.g., from 3 to 4) could raise the price of the home by over $21,000.

-

Improving the grade of a home by one level (e.g., from 7 to 8) could raise the price of the home by over $42,000.

Finally, some interpretation of the many zipcode features:

The zipcodes that had a significant positive impact on price are numerous, and they come from both the Seattle metro area (zipcodes over 98100) and elsewhere in King County. This means that there are places throughout King County where location will add to the value of a home. This is probably because there are different ways that location can add value, especially in conjunction with other features. If a home is in the Seattle metro area, the location could be adding value because many people want to live in the city or within commuting distance to their jobs in the city center. If a home is outside of the Seattle metro area, it's possible to have a bigger lot, more square footage, etc., so location can add value through its relationship to other features. Homeowners can't do much to change the location of the home they own, but an awareness of how location is effecting their home's value could help them make decisions about what sorts of improvements to make. Location awareness could also help prospective home buyers understand the tradeoffs of buying a home of this or that size or quality in different locations.

I think that this final model delivers the best and most helpful results for my middle-income family audience, so I will proceed with analyzing this one using Q-Q plots.

Now that I have settled on my best model, I want to make sure that the three main assumptions for linear models hold true:

-

Each feature has a linear relationship with the target.

-

The residuals are normally distributed.

-

The residuals are homoscedastic (i.e., there is an equal chance of having small or large residuals along the range of each feature).

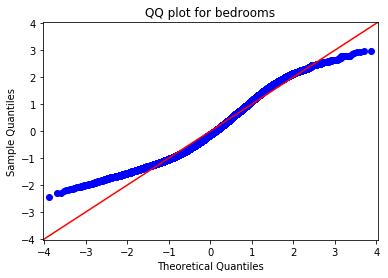

I already decided that most of my features had linear relationships to the target when I inspected the scatterplots above. Now I'll use Q-Q plots to check the residuals for normality and homoscedasticity.

# Import needed package, prep input, and generate Q-Q plots

from scipy import stats

columns_for_qq = mh_zips_encoded_features.columns

for column in columns_for_qq:

formula = 'price' + '~' + column

model = ols(formula=formula, data=mh_zips_encoded).fit()

sm.graphics.qqplot(model.resid, dist=stats.norm, line='45', fit=True)

plt.title('QQ plot for ' + str(column))

plt.show();

fig = plt.figure(figsize=(16,14))

fig = sm.graphics.plot_regress_exog(model, column, fig=fig)(See the project notebook in this repository for a full printout of the Q-Q plots.)

My residuals definitely aren't perfectly normal, but they aren't too bad for some of the features, like bedrooms, bathrooms, sqft_living, and grade. Although there is plenty of room for improvement in my model (and lots that it can't explain, simply because the original data are limited), I think it is worth proceeding to the validation stage with this model.

To ensure that my model could be used to generalize to houses outside my dataset, I will validate it using k-fold cross-validation. Because I am going to use a function from Scikit-Learn for validation, I will first duplicate my model in Scikit-Learn to make things a little easier.

# Import needed sklearn packages

from sklearn.linear_model import LinearRegression

from sklearn.metrics import mean_squared_error

from sklearn.model_selection import cross_val_score

# Initialize and run the regression model using sklearn

linreg = LinearRegression()

linreg.fit(mh_selected, mh_zips_encoded['price'])LinearRegression(copy_X=True, fit_intercept=True, n_jobs=None, normalize=False)

# Define a function to call cross_val_score for various k values

def crossval_it(model, features, target, k):

"""Find mean of MSE for k folds in cross-validation

model: your linear model, preferably a LinearRegression() object

features: a DataFrame of just your features

target: a DataFrame of just your target

k: number of folds for cross-validation

Dependencies: numpy, sklearn.metrics.mean_squared_error,

sklearn.metrics.cross_val_score"""

result = np.mean(cross_val_score(model, features, target, cv=k,

scoring='neg_mean_squared_error'))

return result# Define a function to compare k-fold cross-validation results at various

# values of k

def compare_k_folds(model, features, target, folds_list):

"""Print MSE for k-fold cross-validation with various k values

model: your linear model, preferably a LinearRegression() object

features: a DataFrame of just your features

target: a DataFrame of just your target

folds_list: a list of numbers of folds (k) to use in cross-

validation

Dependencies: numpy, sklearn.metrics.mean_squared_error,

sklearn.metrics.cross_val_score"""

for fold in folds_list:

mean_mse = crossval_it(model, features, target, fold)

print('{} folds: {}'.format(fold, mean_mse))# Call compare_k_folds for 5, 10, and 20 folds

folds = [5, 10, 20]

compare_k_folds(linreg, mh_selected, mh_zips_encoded['price'], folds)5 folds: -5729117966.714569

10 folds: -5722862137.233953

20 folds: -5698285685.955608

The results for 5, 10, and 20-fold cross-validation above are mean squared errors. Taking the square root of each of these (root mean squared error or RMSE), I can see that, while my model doesn't predict price perfectly, at least it is pretty consistent.

# Define a function to compare RMSE for k-fold cross-validation at

# various k values

def compare_rmse(model, features, target, folds_list):

"""Compare RMSE for k-fold cross-validation results at various k values

model: your linear model, preferably a LinearRegression() object

features: a DataFrame of just your features

target: a DataFrame of just your target

folds_list: a list of numbers of folds (k) to use in cross-

validation

Dependencies: numpy, sklearn.metrics.mean_squared_error,

sklearn.metrics.cross_val_score"""

for fold in folds_list:

rmse = np.sqrt(abs(crossval_it(model, features, target, fold)))

print('{} folds: {}'.format(fold, rmse))# Call compare_rmse for 5, 10, and 20 folds

compare_rmse(linreg, mh_selected, mh_zips_encoded['price'], folds)5 folds: 75690.9371504579

10 folds: 75649.60103816776

20 folds: 75486.99017682191

The benefit to looking at RMSE is that is it in the same units as the target; since our target is price and it hasn't been transformed in any way, RMSE can be expressed in dollars. As we cross-validate with more and more folds, RMSE stays pretty consistent, varying by only $204 from 5 to 20 folds. From this validation process I conclude that, although my model doesn't explain all the variation in price, it does its job consistently; its successes are not all random or lucky.

In the "Interpretation" section below, I will wrap up with a summary of how my model can help middle-income families understand the factors that influence the price of a home they want to buy or sell.

My purpose in analyzing the King County Housing dataset was to extract insights that would help middle-income families understand the factors influencing the prices of homes they want to buy or sell.

From the original dataset I eliminated the top 10% most expensive homes and then selected only homes with two to five bedrooms. This filtering left 18,999 observations for my analysis, with a median home price of $425,000, an affordable price for a family earning King County's median household income.

Examining a few features in isolation, I was able to observe the following:

-

The time of year may have an impact on sale price. Median sale prices in the months of April, May, June, and July tend to be higher than those at other times of year. For instance, April's median home price is $41,000 higher than January's.

-

The number of bedrooms may be a good indicator of sale price, and adding a bedroom could cause a substantial increase in the sale price of a home. For instance, adding a fourth bedroom to a three-bedroom house could cause up to a 22% increase in sale price.

-

Similarly, improving the grade of a house could have a substantial impact on price. For instance, raising a house's grade from 7 to 8 could raise the sale price by over $126,000.

It is important to note that the above observations are based on considering features in isolation, but in reality, the features in this dataset are probably related to one another in ways that affect the target (price). For instance, adding a bedroom probably means increasing a home's square footage at the same time, and if a new addition improves the design of the home, the grade may also increase.

My multiple linear regression model can offer more precise insights on the impacts of various features on price. I fit the model to a version of my dataset that used one-hot encoding on zipcodes, and I used stepwise feature selection to eliminate features that were not having a significant impact on price. The resulting model explains 82% of the variability in price.

Specifically, the model coefficients show that:

-

Adding a bathroom or increasing square footage by 100 square feet could raise the price of a home by over $8,300.

-

Improving the condition of a home by one level (e.g., from 3 to 4) could raise the price of the home by over $21,000.

-

Improving the grade of a home by one level (e.g., from 7 to 8) could raise the price of the home by over $42,000.

The dollar values cited here are likely to be much more accurate than those above because they take into account the effects of many features on price, not just one at a time.

There are many factors influencing the price of a home, not all of which are within a homeowner's control. There are also many potential factors affecting price that the original dataset did not consider, such as a home's school district, or the walkability of the neighborhood, or the distance to major employment centers. My model indicates, however, that there are some concrete steps a homeowner can take to raise the potential sale price of a home, including improvements to amenities and design.

This repository (https://github.com/jrkreiger/pricing-midrange-homes) contains files from a project assigned to me as part of Flatiron School's Online Data Science Bootcamp. The purpose of the project was to demonstrate skills in data cleaning and multiple linear regression modeling.

Here's what you'll find inside:

-

pricing-midrange-homes-king-co-wa.ipynb: Jupyter Notebook containing project code

-

column_names.md: Markdown file containing the data dictionary for the dataset provided by Flatiron School

-

kc_house_data.csv: CSV file containing the dataset provided by Flatiron School

-

presentation.pdf: PDF file containing slides from a non-technical presentation of project results

I created the Jupyter Notebook in a virtual environment provided to me by Flatiron School. Things may work a little differently for you if you're running different versions of Python or the packages I used.

If you download the notebook to view locally, note that I formatted it using Jupyter Notebook extensions (https://github.com/ipython-contrib/jupyter_contrib_nbextensions). The extensions let me easily create a table of contents and collapse groups of cells to make the notebook easier to read and navigate. If you don't have the extensions installed locally, the notebook will look a little different for you.

You can read my blog post about the project at this link: http://jrkreiger.net/uncategorized/cleaning-house-data/.

View a video of my non-technical presentation here: https://youtu.be/yvaGRdNtPNI.

Flatiron School provided me the dataset, the data dictionary, a Jupyter Notebook containing only a rubric for recording my identifying information, and the code I used for stepwise selection. I wrote the rest of the code in the Jupyter Notebook, the text of this README, my blog post linked above, and the content of my non-technical presentation (with the exception of some images whose creators I credit in the slides).