This is the code for:

CIAN: Cross-Image Affinity Net for Weakly Supervised Semantic Segmentation, Junsong Fan, Zhaoxiang Zhang, Tieniu Tan, Chunfeng Song, Jun Xiao, AAAI 2020 [paper].

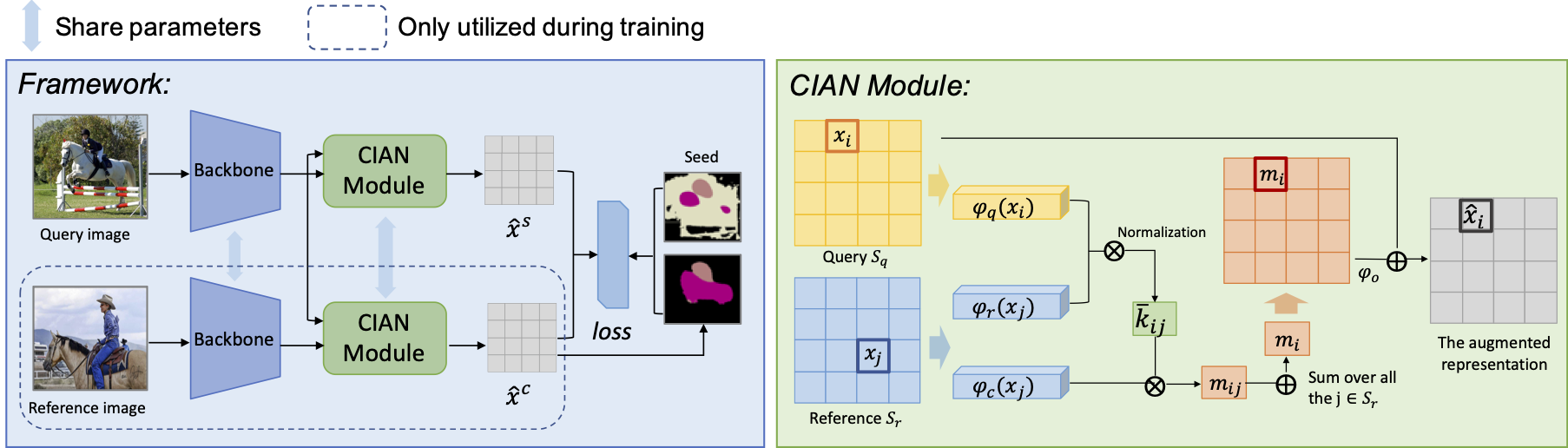

Framework of the approach. Our approach learns cross-image relationships to help weakly supervised semantic segmentation. The CIAN module takes features from two different images as input, then extracts and exchanges information across them to augment the original features.
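To make the idea of exchanging information across two images concrete, here is a minimal NumPy sketch. It is not the implementation in this repository: the function name `cross_image_augment`, the dot-product affinity, the softmax normalisation, the `sqrt(C)` scaling, and the residual addition are all assumptions chosen for illustration.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def cross_image_augment(query_feat, ref_feat):
    """Augment query features with information gathered from a reference image.

    query_feat: array of shape (C, H, W) from the first image.
    ref_feat:   array of shape (C, H2, W2) from the second image.
    Returns (augmented, affinity), with shapes (C, H, W) and (H*W, H2*W2).
    """
    c, h, w = query_feat.shape
    q = query_feat.reshape(c, -1).T          # (H*W, C)
    r = ref_feat.reshape(c, -1).T            # (H2*W2, C)
    # Assumed affinity: scaled dot product, normalised over reference positions.
    affinity = softmax(q @ r.T / np.sqrt(c), axis=1)
    message = affinity @ r                   # (H*W, C), gathered from the reference
    augmented = (q + message).T.reshape(c, h, w)
    return augmented, affinity
```

Each row of `affinity` sums to one, so every query position receives a weighted average of the reference features, which is then added back to the original query feature.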

- Python 3.7, MXNet 1.3.1, NumPy, OpenCV, pydensecrf.

- NVIDIA GPUs
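Before running anything, it can help to confirm that the Python packages listed above are importable. The following is a small sketch, not part of the repository; the helper name `find_missing` is hypothetical, and the import names (`mxnet`, `numpy`, `cv2`, `pydensecrf`) are the usual ones for these packages but are assumed here. It does not check versions or GPU availability.

```python
import importlib.util
import sys

# Display name -> import name (import names are assumed, see the note above).
REQUIRED_MODULES = {
    "MXNet": "mxnet",
    "NumPy": "numpy",
    "OpenCV": "cv2",
    "pydensecrf": "pydensecrf",
}


def find_missing(modules):
    """Return the display names of modules that cannot be found for import."""
    return [
        label
        for label, name in modules.items()
        if importlib.util.find_spec(name) is None
    ]


if __name__ == "__main__":
    print("Python", sys.version.split()[0])
    missing = find_missing(REQUIRED_MODULES)
    print("Missing packages:", ", ".join(missing) if missing else "none")
```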

- Download the VOC 2012 dataset, and configure the path accordingly in `run_cian.sh`.

- Download the seeds here, untar them, and put the folder `CIAN_SEEDS` into `CIAN/data/Seeds/`. We use the VGG16-based CAM to generate the foreground and the saliency model DRFI to generate the background. You can also generate the seeds yourself.

- Download the ImageNet pretrained parameters and put them into the folder `CIAN/data/pretrained`. We adopt the pretrained parameters provided by the official MXNet model-zoo.
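The folder layout described in the steps above can be checked with a short script before starting a run. This is a sketch under stated assumptions: the helper `missing_dirs` is hypothetical, the repository root is assumed to be a folder named `CIAN` in the current working directory, and the VOC 2012 path is not checked because it is configured separately in `run_cian.sh`.

```python
from pathlib import Path

# Folders named in the setup steps, relative to the repository root.
EXPECTED_DIRS = ("data/Seeds/CIAN_SEEDS", "data/pretrained")


def missing_dirs(repo_root, expected=EXPECTED_DIRS):
    """Return the expected sub-folders that do not exist under repo_root."""
    root = Path(repo_root)
    return [rel for rel in expected if not (root / rel).is_dir()]


if __name__ == "__main__":
    # "CIAN" as the repository root is an assumption; adjust as needed.
    problems = missing_dirs("CIAN")
    for rel in problems:
        print("missing:", rel)
    if not problems:
        print("all expected folders are present")
```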

Run `./run_cian.sh`. This script automatically runs the training, testing (on the val set), and retraining pipeline. Checkpoints and predictions are saved in the folder `CIAN/snapshot/CIAN`.

If you find the code useful, please consider citing:

@inproceedings{fan2020cian,

title={CIAN: Cross-Image Affinity Net for Weakly Supervised Semantic Segmentation},

author={Fan, Junsong and Zhang, Zhaoxiang and Tan, Tieniu and Song, Chunfeng and Xiao, Jun},

booktitle={Proceedings of the AAAI Conference on Artificial Intelligence},

year={2020}

}