An implementation of Rainbow in PyTorch. Much of the code is borrowed from baselines, NoisyNet-A3C, and RL-Adventure.

The implementation is based on the following papers:

- Mnih, V., Kavukcuoglu, K., Silver, D., Rusu, A. A., Veness, J., Bellemare, M. G., et al. (2015). Human-level control through deep reinforcement learning. Nature, 518(7540), 529. http://doi.org/10.1038/nature14236
- van Hasselt, H., Guez, A., & Silver, D. (2015, September 22). Deep Reinforcement Learning with Double Q-learning. arXiv.org.
- Schaul, T., Quan, J., Antonoglou, I., & Silver, D. (2015, November 19). Prioritized Experience Replay. arXiv.org.
- Wang, Z., Schaul, T., Hessel, M., van Hasselt, H., Lanctot, M., & de Freitas, N. (2015, November 20). Dueling Network Architectures for Deep Reinforcement Learning. arXiv.org.
- Fortunato, M., Azar, M. G., Piot, B., Menick, J., Osband, I., Graves, A., et al. (2017, July 1). Noisy Networks for Exploration. arXiv.org.
- Hessel, M., Modayil, J., van Hasselt, H., Schaul, T., Ostrovski, G., Dabney, W., et al. (2017, October 6). Rainbow: Combining Improvements in Deep Reinforcement Learning. arXiv.org.
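To make the prioritized replay idea from Schaul et al. concrete, here is a minimal sketch of proportional prioritization in plain Python rather than PyTorch. Transitions are sampled with probability P(i) = p_i^alpha / sum_k p_k^alpha, where p_i = |TD error| + eps, and each sample gets an importance-sampling weight (N * P(i))^(-beta) normalized by the largest weight in the buffer. The function names and the default `alpha=0.6`, `beta=0.4`, `eps=1e-6` are illustrative assumptions, not this repository's API or configuration.

```python
import random


def per_probabilities(td_errors, alpha=0.6, eps=1e-6):
    """Proportional prioritization: p_i = |delta_i| + eps, P(i) = p_i^alpha / sum_k p_k^alpha."""
    scaled = [(abs(d) + eps) ** alpha for d in td_errors]
    total = sum(scaled)
    return [s / total for s in scaled]


def importance_weights(probs, indices, beta=0.4):
    """w_i = (N * P(i))^(-beta), divided by the largest weight in the buffer so w_i <= 1."""
    n = len(probs)
    # The least likely transition has the largest weight, so it sets the normalizer.
    max_w = (n * min(probs)) ** (-beta)
    return [(n * probs[i]) ** (-beta) / max_w for i in indices]


def sample_batch(td_errors, batch_size, alpha=0.6, beta=0.4, rng=random):
    """Sample indices with probability P(i) and return them with their IS weights."""
    probs = per_probabilities(td_errors, alpha)
    indices = rng.choices(range(len(probs)), weights=probs, k=batch_size)
    return indices, importance_weights(probs, indices, beta)


if __name__ == "__main__":
    rng = random.Random(0)  # seeded for reproducibility
    idx, w = sample_batch([0.1, 2.0, 0.5, 1.0], batch_size=4, rng=rng)
    print(idx, [round(x, 3) for x in w])
```

This version is O(N) per sample for clarity; efficient replay buffers typically store priorities in a sum tree so that sampling and priority updates take O(log N).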

Requirements:

- torch
- torchvision
- numpy
- tensorboardX

I tested the code on torch v1.0 built from source with CUDA 10.0.

You can specify the environment with `--env`:

`python main.py --env PongNoFrameskip-v4`

You can enable the individual Rainbow components with the arguments below:

`python main.py --multi-step 3 --double --dueling --noisy --c51 --prioritized-replay`
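The `--multi-step` and `--double` flags change how the bootstrap target is built. The sketch below, written in plain Python with per-action Q-values as lists, computes an n-step Double DQN target for a single transition: the first n discounted rewards are summed, and if the episode has not ended, the online network selects the next action while the target network evaluates it. The function names and argument layout are hypothetical and only illustrate the technique; they are not taken from this repository.

```python
def n_step_return(rewards, gamma):
    """Discounted sum r_t + gamma*r_{t+1} + ... + gamma^(n-1)*r_{t+n-1}."""
    return sum(gamma ** k * r for k, r in enumerate(rewards))


def double_dqn_n_step_target(rewards, gamma, done, q_online_next, q_target_next):
    """n-step Double DQN target for one transition.

    rewards:       the n rewards after taking a_t (fewer if the episode ended early)
    done:          True if the episode terminated within those steps
    q_online_next: online-network Q-values for s_{t+n}, one per action
    q_target_next: target-network Q-values for s_{t+n}, one per action
    """
    g = n_step_return(rewards, gamma)
    if done:
        return g  # never bootstrap past a terminal state
    # Double Q-learning: the online network selects the action...
    a_star = max(range(len(q_online_next)), key=q_online_next.__getitem__)
    # ...and the target network evaluates it, discounted by gamma^n.
    return g + gamma ** len(rewards) * q_target_next[a_star]


if __name__ == "__main__":
    print(double_dqn_n_step_target([1.0, 1.0, 1.0], 0.5, False, [0.1, 0.9], [5.0, 4.0]))  # 2.25
```

In this example the online network prefers action 1, so the target is 1.75 + 0.5^3 * 4.0 = 2.25. A vanilla DQN target would take max(q_target_next) = 5.0 and give 2.375, which shows how decoupling action selection from evaluation reduces overestimation.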

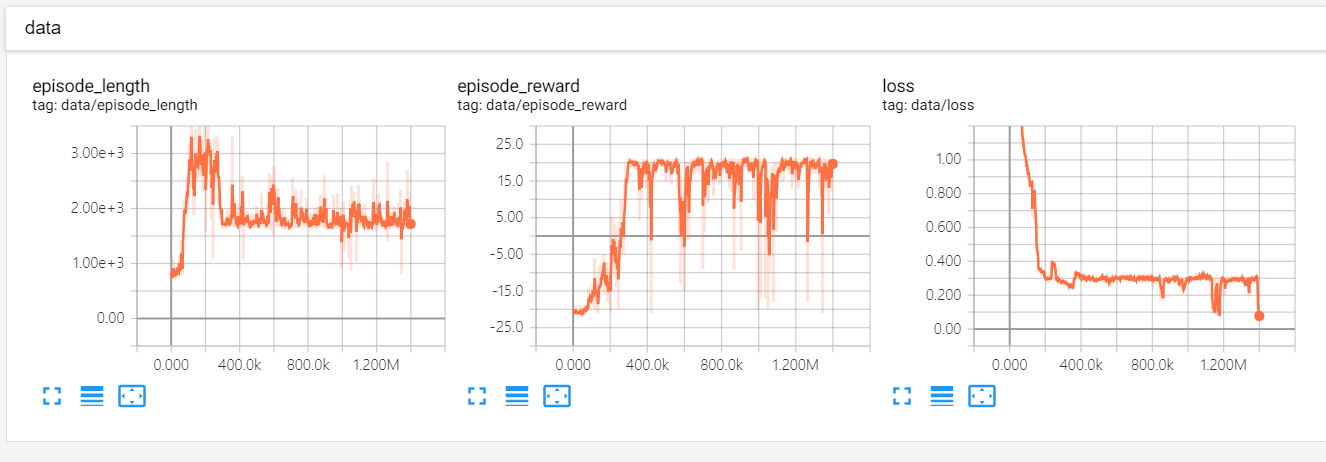

TensorBoard scalars for Rainbow without multi-step learning (`--double --dueling --noisy --c51 --prioritized-replay`).

You can run the pretrained model with the following command:

`python main.py --evaluate --render --multi-step 3 --double --dueling --noisy --c51 --prioritized-replay`
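With `--c51`, the network outputs a categorical distribution over a fixed set of return atoms for each action instead of a single Q-value, so acting greedily means choosing the action whose distribution has the highest mean. The plain-Python sketch below shows that step. The defaults `v_min=-10`, `v_max=10`, `num_atoms=51` follow the settings reported in the Rainbow paper and are assumptions about this repository's configuration. The helper names are hypothetical.

```python
def make_support(v_min=-10.0, v_max=10.0, num_atoms=51):
    """Fixed, evenly spaced return atoms z_0 ... z_{N-1} (defaults are assumptions)."""
    delta = (v_max - v_min) / (num_atoms - 1)
    return [v_min + i * delta for i in range(num_atoms)]


def expected_q_values(dist_per_action, support):
    """Q(s, a) = sum_i z_i * p_i(s, a) for each action's categorical distribution."""
    return [sum(z * p for z, p in zip(support, probs)) for probs in dist_per_action]


def greedy_action(dist_per_action, support):
    """Pick the action whose return distribution has the highest mean."""
    q = expected_q_values(dist_per_action, support)
    return max(range(len(q)), key=q.__getitem__)


if __name__ == "__main__":
    z = make_support(-1.0, 1.0, 3)  # [-1.0, 0.0, 1.0]
    dists = [[0.5, 0.5, 0.0],   # action 0: mean -0.5
             [0.0, 0.5, 0.5]]   # action 1: mean  0.5
    print(greedy_action(dists, z))  # 1
```

Only the mean is used for action selection; the full distribution matters during training, where the projected target distribution is matched with a cross-entropy loss.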

Acknowledgments:

- Kaixhin (https://github.com/Kaixhin/NoisyNet-A3C)
- higgsfield (https://github.com/higgsfield)
- openai (https://github.com/openai/baselines)