This repository holds the code to create the press briefing claim dataset. The main modules can be found in the src directory. Three notebooks in the root directory interface with these modules and guide you through the dataset creation process.

This repository is part of my bachelor thesis, titled Automated statement extraction from press briefings. For more in-depth information, see the Statement Extractor repository.

This repository uses Pipenv to manage a virtual environment with all Python packages. Information about how to install Pipenv can be found in the Pipenv documentation.

To create a virtual environment and install all packages needed, call pipenv install from the root directory.

Default directories and parameters can be defined in config.py.

The wikification module relies on two wikification services, Dandelion and TagMe. API keys for these services can be created for free. The wikify module expects the environment variables DANDELION_TOKEN and TAGME_TOKEN.
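Before running the wikification step, it can help to confirm that both tokens are visible to Python. The following is a minimal sketch and not part of the repository: the variable names come from the paragraph above, while the helper `missing_tokens` is a hypothetical name introduced here for illustration.

```python
import os

# Variable names as given in this README; the helper below is hypothetical.
REQUIRED_TOKENS = ("DANDELION_TOKEN", "TAGME_TOKEN")


def missing_tokens(env=None):
    """Return the names of required tokens that are unset or empty."""
    env = os.environ if env is None else env
    return [name for name in REQUIRED_TOKENS if not env.get(name)]


if __name__ == "__main__":
    missing = missing_tokens()
    if missing:
        print("Missing environment variables: " + ", ".join(missing))
    else:
        print("Both wikification tokens are set.")
```

Passing a plain dictionary instead of relying on `os.environ` keeps the check easy to try out without changing the shell environment.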

Data:

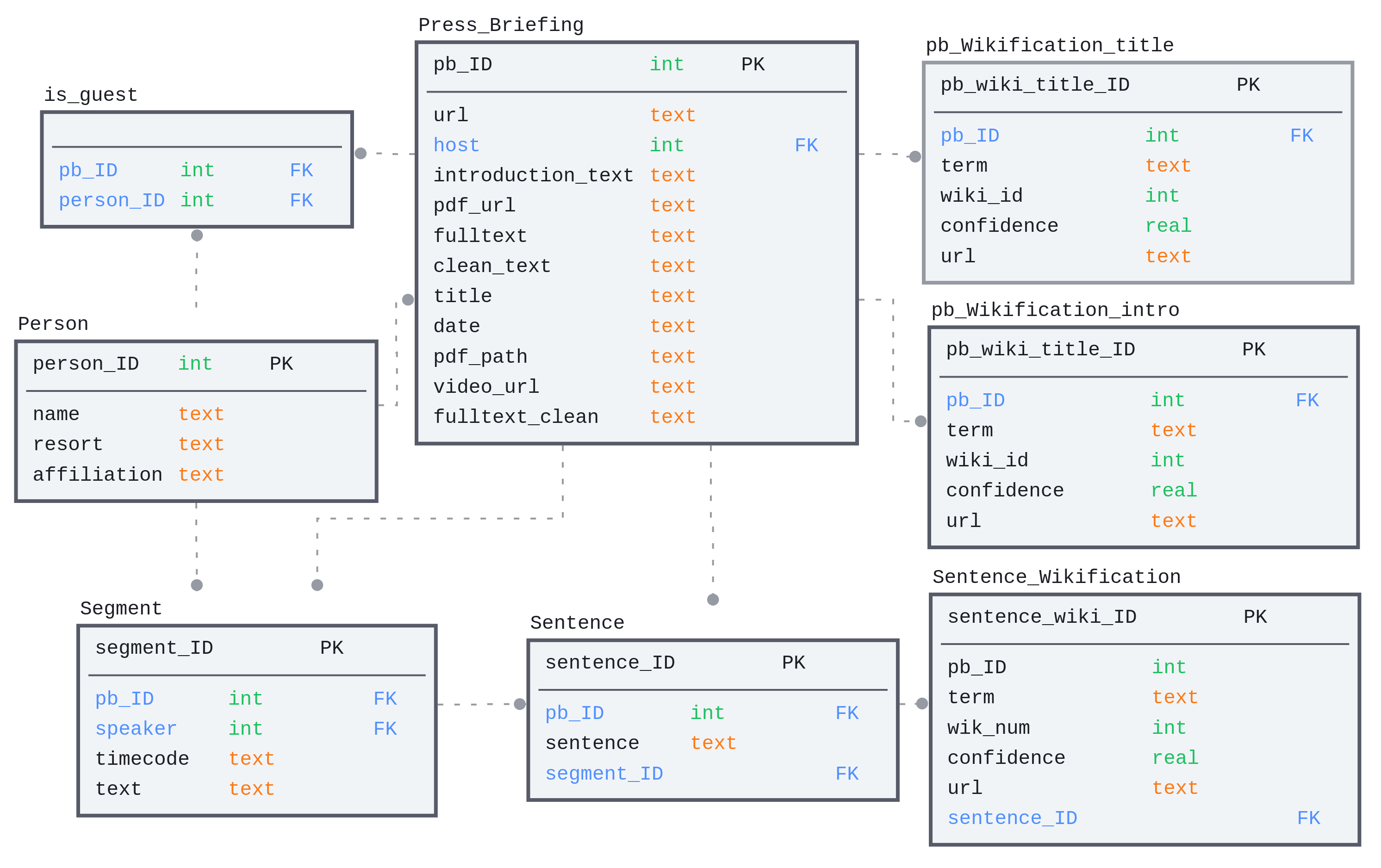

- data/SMC_dataset holds the full dataset as an SQLite database and CSV tables.

- data/SMC_dataset/pre_labeled holds pre-labeled dataset slices that are ready for labeling.

- data/SMC_dataset/labeled holds labeled dataset slices.
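To get a first look at the SQLite database, the standard library `sqlite3` module is enough. The sketch below lists the tables in a database file; the function name `list_tables` is hypothetical, and the database file name is not stated in this README, so the path has to be supplied by the caller.

```python
import sqlite3
from contextlib import closing


def list_tables(db_path):
    """Return the names of all tables in the SQLite file at db_path."""
    # closing() makes sure the connection is closed after the query.
    with closing(sqlite3.connect(db_path)) as conn:
        rows = conn.execute(
            "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
        ).fetchall()
    return [name for (name,) in rows]
```

Note that `sqlite3.connect` creates an empty database file if the path does not exist, so an empty result may simply mean the path is wrong.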

Code:

- src contains the main modules to scrape, parse and import the data.

Notebooks:

- create_dataset.ipynb guides through the database creation process.

- create_tables.ipynb guides through the table creation process.

- dataset_analysis.ipynb holds the analysis of the dataset.

Besides the dataset from the SMC press briefings, a translated version of the IBM Debater® - Claim Sentences Search (IBM_Debater_(R)_claim_sentences_search) dataset from the claim model comparison is used to balance the dataset. To create the training data, the Claim Sentences Search dataset needs to be preprocessed as in the claim model comparison repo and translated into German.