This is the third project in the Udacity Data Engineer Nanodegree.

The virtual startup 'Sparkify' provides a music streaming service. In this project we imagine that Sparkify has grown and we are shifting to using cloud services for our data warehousing needs.

Here we create an ETL pipeline that extracts JSON log data of user activity & song metadata from s3 buckets and stage them on Redshift, transforming the data into a star-schema to optimize queries that have been specified by the analytics team.

We also support Sparkify's IaC scalability goals by automating the ETL pipeline infrastructure management using the AWS Python SDK (Boto3).

- Clone this repository and install the requirements.

$ pip3 install -r requirements.txt

- You will need an AWS user with rights to create IAM roles and Redshift clusters. Export your AWS Account credentials as environment variables in your terminal window:

$ export AWS_ACCESS_KEY_ID=<YOUR_AWS_ACCESS_KEY_ID>

$ export AWS_SECRET_ACCESS_KEY=<YOUR_AWS_ACCESS_KEY_ID>

- Run the script with

-cto to create the necessary IAM role, permissions and Redshift cluster if you do not already have those set up. You can adjust the configuration details (e.g. AWS region, DB credentials) by editingdwh_config.jsonbefore running the setup script.

If you already have the cluster set up, run the script without the optional argument.

$ python3 sparkify_redshift.py [-c]

- RECOMMENDED: Run the teardown script to clean up your AWS resources.

$ python3 scripts/cleanup_redshift.py

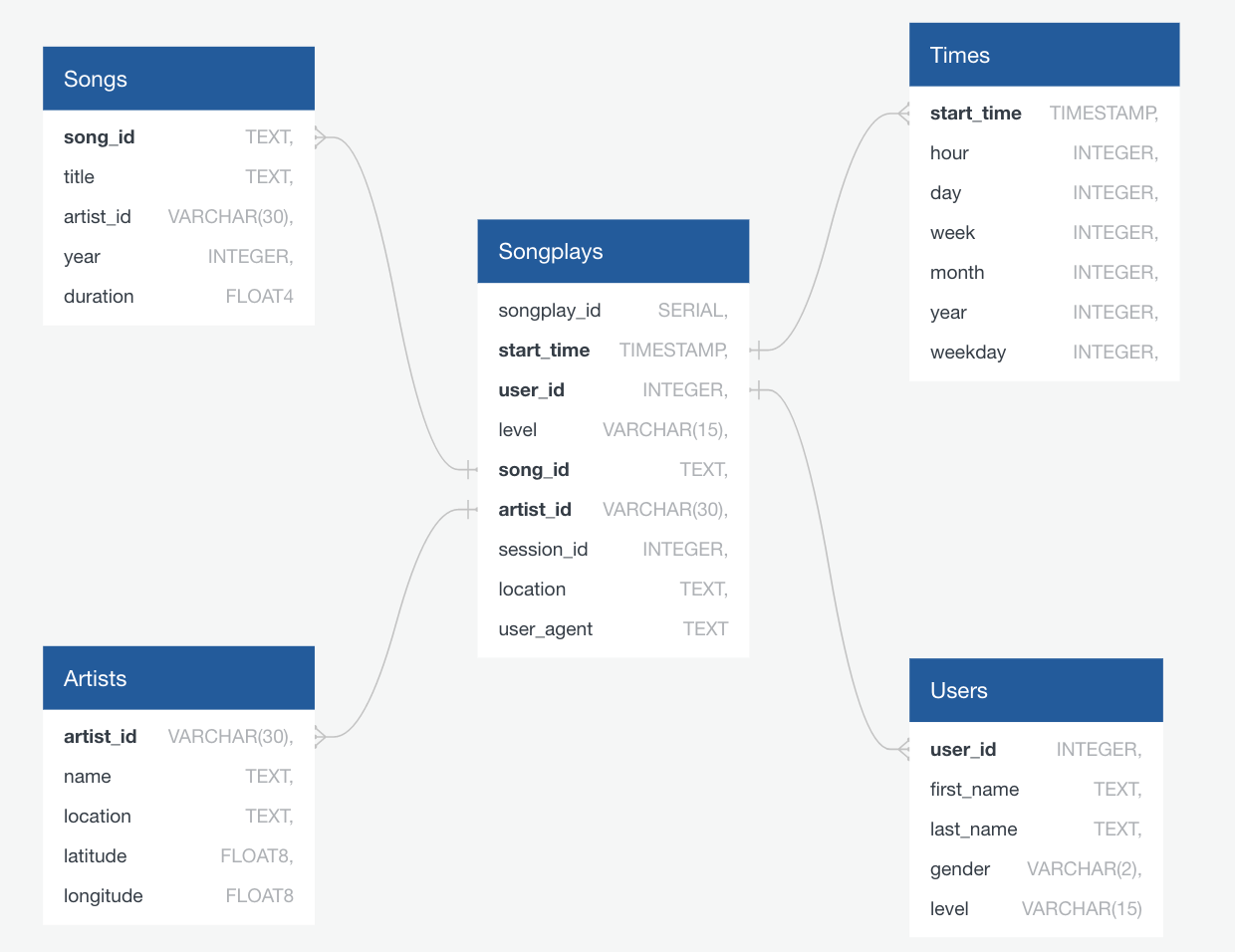

The data is loaded into a star-schema DB optimized on queries related to

songplays in the app. As they are small, each of the dimension tables is

distributed equally across each of the redshift nodes to optimize joins on

the fact table, songplays.

Image created with QuickDBD

Image created with QuickDBD

The dashboard.ipynb Juptyer Notebook contains some sample analytical

queries that demonstrate potential use-cases for this DB, as well as some

graphs of the data/tables.

- etl.py - The main script that runs the ETL Pipeline from S3 to Redshift.

- setup_redshift.py - A script that sets up the required AWS resources including IAM role, permissions, Redshift cluster and DB.

- cleanup_redshift.py - A script that removes up created AWS resources including IAM role, permissions, Redshift cluster and DB.

- create_tables.py - Creates the tables on Redshift DB.

- sql_queries.py - Queries specified by the Sparkify Analytics team.

- dwh_config.json - Configuration file defining constants related to AWS resources.

- dashboard.ipynb - Some graphs of the DB plus some sample analytical queries.

The first dataset is a subset of real data from the Million Song Dataset. Each file is in JSON format and contains metadata about a song and the artist of that song. The files are partitioned by the first three letters of each song's track ID.

For example:

song_data/A/B/C/TRABCEI128F424C983.json

song_data/A/A/B/TRAABJL12903CDCF1A.json

The second dataset consists of log files in JSON format generated by an event simulator based on the songs in the dataset above. These simulate app activity logs from an imaginary music streaming app based on configuration settings.

The log files are partitioned by year and month, for example:

log_data/2018/11/2018-11-12-events.json

log_data/2018/11/2018-11-13-events.json

Thierry Bertin-Mahieux, Daniel P.W. Ellis, Brian Whitman, and Paul Lamere. The Million Song Dataset. In Proceedings of the 12th International Society for Music Information Retrieval Conference (ISMIR 2011), 2011.