Our survey aims to provide a comprehensive understanding of multi-task learning (MTL), covering its definition, taxonomy, and applications, as well as the connections and trends among them. We examine MTL methods from several angles, including loss functions, network architectures, and optimization methods, and explain each from the perspective of its technical details. For each method, we provide a link to the corresponding paper and, where available, to the code repository of the MTL method it describes. We sincerely hope that this survey helps you understand MTL and its associated methods. If you have any questions or suggestions, please feel free to contact us.

@article{yu2024unleashing,
  title={Unleashing the Power of Multi-Task Learning: A Comprehensive Survey Spanning Traditional, Deep, and Pre-Trained Foundation Model Eras},
  author={Yu, Jun and Dai, Yutong and Liu, Xiaokang and Huang, Jin and Shen, Yishan and Zhang, Ke and Zhou, Rong and Adhikarla, Eashan and Ye, Wenxuan and Liu, Yixin and others},
  journal={arXiv preprint arXiv:2404.18961},
  year={2024}
}

- Awesome-Multitask-Learning

- A survey on multi-task learning

Yu Zhang and Qiang Yang

IEEE Transactions on Knowledge and Data Engineering 2022. [Paper]

March 31, 2021

- Multi-task learning for dense prediction tasks: A survey

Simon Vandenhende, Stamatios Georgoulis, Marc Proesmans, Dengxin Dai and Luc Van Gool

IEEE Transactions on Pattern Analysis and Machine Intelligence 2021. [Paper]

Jan 26, 2021

- A Brief Review of Deep Multi-task Learning and Auxiliary Task Learning

Partoo Vafaeikia, Khashayar Namdar and Farzad Khalvati

arXiv 2020. [Paper]

Jul 02, 2020

- Multi-Task Learning with Deep Neural Networks: A Survey

Michael Crawshaw

arXiv 2020. [Paper]

Sep 10, 2020

- A brief review on multi-task learning

Kim-Han Thung and Chong-Yaw Wee

Multimedia Tools and Applications 2018. [Paper]

Aug 08, 2018

- An overview of multi-task learning in deep neural networks

Sebastian Ruder

arXiv 2017. [Paper]

Jun 15, 2017

- Synthetic Data: This dataset is usually defined by the researchers themselves, so synthetic datasets differ from one study to another. The features are typically generated by drawing random variables from a shared distribution and adding irrelevant variables drawn from other distributions, and the corresponding responses are produced by a specific computational procedure.

- School Data: This dataset comes from the Inner London Education Authority (ILEA) and contains 15,362 student examination records from 139 secondary schools, described by 27 student- and school-specific features. The goal is to predict exam scores from these 27 features.

- SARCOS Data: This humanoid-robotics dataset consists of 44,484 training examples and 4,449 test examples. The learning goal is to estimate the inverse dynamics model of a 7-degrees-of-freedom (DOF) SARCOS anthropomorphic robot arm.

- Computer Survey Data: This dataset comes from a survey on the likelihood (on an 11-point scale from 0 to 10) of purchasing personal computers. There are 20 computer models as examples, each described by 13 computer attributes (e.g., price, CPU speed, and screen size) and 6 subject-level covariates (e.g., gender, computer knowledge, and work experience) as features; the ratings of the 179 subjects serve as targets, i.e., tasks.

- Climate Dataset: This real-time dataset is collected from a sensor network (e.g., anemometers, thermistors, and pressure transducers) at four climate stations in the south of England (Cambermet, Chimet, Sotonmet, and Bramblemet), which can represent 4 tasks as needed. The archived data are reported at 5-minute intervals and include about 10 climate signals (e.g., wind speed, wave period, barometric pressure, and water temperature).

- 20 Newsgroups: This dataset is a collection of approximately 19,000 netnews articles organized by topic into 20 hierarchical newsgroups, with root categories (e.g., comp, rec, sci, and talk) and sub-categories (e.g., comp.graphics, sci.electronics, and talk.politics.guns). Users can combine these categories in different ways to design multiple text classification tasks.

- Reuters-21578 Collection: This text collection contains 21,578 documents from the Reuters newswire dating back to 1987. The documents were assembled and indexed with more than 90 correlated categories under 5 top categories (i.e., exchanges, orgs, people, place, topic), each of which includes a variable number of sub-categories.

- MultiMNIST: This dataset is an MTL version of the MNIST dataset. By overlaying multiple digit images, traditional digit classification is converted into an MTL problem in which classifying the digits at different positions is treated as distinct tasks.

- ImageCLEF-2014: This dataset is a benchmark for the domain adaptation challenge and contains 2,400 images of 12 common categories selected from 4 domains: Caltech 256, ImageNet 2012, Pascal VOC 2012, and Bing.

- Office-Caltech: This dataset is a standard benchmark for domain adaptation in computer vision, consisting of real-world images of 10 common categories from the Office and Caltech-256 datasets. There are 2,533 images from 4 distinct domains/tasks: Amazon, DSLR, Webcam, and Caltech.

- Office-31: This dataset consists of 4,110 images from 31 object categories across 3 domains/tasks: Amazon, DSLR, and Webcam.

- Office-Home Dataset: This dataset was collected for object recognition to validate domain adaptation models in the era of deep learning. It includes 15,588 images of objects in office and home settings (e.g., alarm clock, chair, eraser, keyboard, and telephone) organized into 4 domains/tasks: Art (paintings, sketches, and artistic depictions), Clipart (clipart images), Product (product images from www.amazon.com), and Real-World (real-world objects captured with a regular camera).

- DomainNet: This dataset is annotated for multi-source unsupervised domain adaptation (UDA) research. It contains about 0.6 million images from 345 categories across 6 distinct domains, e.g., sketch, infograph, quickdraw, and real.

- EMMa: This dataset comprises more than 2.8 million objects from Amazon product listings, each annotated with images, listing text, mass, price, product ratings, and its position in Amazon's product-category taxonomy. It also includes a taxonomy of 182 physical materials, and each object is annotated with one or more materials from it. EMMa offers a new benchmark for multi-task learning in computer vision and NLP that allows new tasks and object attributes to be added at scale.

- SYNTHIA: This is a synthetic dataset created to meet the need for a large and diverse collection of images with pixel-level annotations for vision-based semantic segmentation in urban scenarios, particularly for autonomous driving. It provides precise pixel-level semantic annotations for 13 classes: sky, building, road, sidewalk, fence, vegetation, lane-marking, pole, car, traffic signs, pedestrians, cyclists, and miscellaneous objects.

- Cityscapes: This dataset consists of 5,000 images with high-quality annotations and 20,000 images with coarse annotations from 50 different cities, covering 19 classes for semantic urban scene understanding.

- NYU-Depth Dataset V2: This dataset comprises 1,449 images from 464 indoor scenes across 3 cities, containing 35,064 distinct objects of 894 different classes. Its dense per-pixel labels of class, instance, and depth are used in many computer vision tasks, e.g., semantic segmentation, depth prediction, and surface normal estimation.

- PASCAL VOC Project: This project provides standardized image datasets for object class recognition and ran challenges evaluating object class recognition performance from 2005 to 2012; VOC07, VOC08, and VOC12 are commonly used in MTL research. The tasks cover classification, detection (e.g., body part, saliency, semantic edge), segmentation, attribute prediction, surface normal prediction, etc.

- Taskonomy: This dataset is currently the most diverse computer vision dataset for MTL, consisting of 4 million samples from 3D scans of about 600 buildings. It provides a dictionary of 26 tasks (e.g., 2D, 2.5D, 3D, and semantic tasks) that serves as a computational taxonomic map for task transfer learning.
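To make the Synthetic Data description concrete, the following is a minimal sketch of one way such a dataset could be generated for multi-task regression. The function name `make_synthetic_mtl`, the Gaussian and uniform distributions, the dimensions, and the linear response are all illustrative assumptions; published synthetic benchmarks each define their own recipe.

```python
import random

def make_synthetic_mtl(num_tasks=3, n_samples=50, d_relevant=5, d_irrelevant=3,
                       noise=0.1, seed=0):
    """Hypothetical generator for a toy multi-task regression dataset.

    Returns a list of (X, y) pairs, one per task. Each row of X has
    d_relevant + d_irrelevant columns; only the first d_relevant columns
    influence y.
    """
    rng = random.Random(seed)
    # Shared component of the task weights: what the tasks have in common.
    shared_w = [rng.gauss(0.0, 1.0) for _ in range(d_relevant)]
    tasks = []
    for _ in range(num_tasks):
        # Task-specific weights = shared weights + a small perturbation.
        w = [s + rng.gauss(0.0, 0.3) for s in shared_w]
        X, y = [], []
        for _ in range(n_samples):
            # Relevant features drawn from a shared N(0, 1) distribution.
            relevant = [rng.gauss(0.0, 1.0) for _ in range(d_relevant)]
            # Irrelevant features drawn from a different (uniform) distribution.
            irrelevant = [rng.uniform(-1.0, 1.0) for _ in range(d_irrelevant)]
            # Linear response on the relevant features plus Gaussian noise.
            target = sum(wi * xi for wi, xi in zip(w, relevant)) + rng.gauss(0.0, noise)
            X.append(relevant + irrelevant)
            y.append(target)
        tasks.append((X, y))
    return tasks

data = make_synthetic_mtl()
print(len(data), len(data[0][0]), len(data[0][0][0]))  # 3 50 8
```

Because every task's weights are perturbations of `shared_w`, the tasks are related by construction, which is the property MTL methods are meant to exploit.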

- Adaptive multi-task sparse learning with an application to fMRI study [Paper]

Xi Chen, Jinghui He, Rick Lawrence and Jaime G Carbonell

Proceedings of the 2012 SIAM International Conference on Data Mining 2012.

- Multi-stage multi-task feature learning [Paper]

Pinghua Gong, Jieping Ye and Changshui Zhang

Advances in neural information processing systems 2012.

- Sparse Multi-Task Lasso

Aurelie C Lozano and Grzegorz Swirszcz

Proceedings of the 29th International Conference on Machine Learning 2012. [Paper]

- Modeling disease progression via fused sparse group lasso

Jiayu Zhou, Jun Liu, Vaibhav A Narayan and Jieping Ye

Proceedings of the 18th ACM SIGKDD international conference on Knowledge discovery and data mining 2012. [Paper]

- A multi-task learning formulation for predicting disease progression

Jiayu Zhou, Lei Yuan, Jun Liu and Jieping Ye

Proceedings of the 17th ACM SIGKDD international conference on Knowledge discovery and data mining 2011. [Paper]

- Dirty Block-Sparse Model

Ali Jalali, Sujay Sanghavi, Chao Ruan and Pradeep Ravikumar

Advances in neural information processing systems 2010. [Paper]

- Adaptive Sparse Multi-Task Lasso

Seunghak Lee, Jun Zhu and Eric Xing

Advances in neural information processing systems 2010. [Paper]

- Multi-Task Feature Selection

Guillaume Obozinski, Ben Taskar and Michael Jordan

ResearchGate 2006. [Paper]

- A probabilistic framework for multi-task learning

Jian Zhang

Ph.D. Thesis 2006. [Paper]
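Several entries in this group encourage joint feature selection by penalizing the feature-by-task weight matrix with a row-wise ℓ2,1 (group lasso) norm, so that a feature is either used by all tasks or discarded for all of them; multi-task feature selection is one example. The sketch below shows the proximal operator of λ‖W‖2,1, a generic building block of proximal-gradient solvers for such objectives. It is not the specific algorithm of any paper listed here, and the function name `prox_l21` is hypothetical.

```python
import math

def prox_l21(W, lam):
    """Proximal operator of lam * sum_j ||W[j, :]||_2 (row-wise group soft-thresholding).

    W is a list of rows; row j holds feature j's weights across all tasks.
    Rows whose Euclidean norm is <= lam are zeroed (the feature is dropped
    for every task); other rows are shrunk toward zero by lam in norm.
    """
    out = []
    for row in W:
        norm = math.sqrt(sum(v * v for v in row))
        if norm <= lam:
            out.append([0.0] * len(row))
        else:
            scale = 1.0 - lam / norm
            out.append([scale * v for v in row])
    return out

W = [[3.0, 4.0],   # norm 5.0: shrunk to norm 4.0
     [0.3, 0.4]]   # norm 0.5: zeroed for both tasks
print(prox_l21(W, lam=1.0))
```

The first row comes back as approximately [2.4, 3.2] and the second as [0.0, 0.0]; inside a proximal-gradient loop this step would follow a gradient step on the summed task losses.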

- Multi-task learning for multiple language translation [Paper]

Daxiang Dong, Hua Wu, Wei He, Dianhai Yu and Haifeng Wang

Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics and the 7th International Joint Conference on Natural Language Processing 2015.

- A convex formulation for learning shared structures from multiple tasks [Paper]

Jianhui Chen, Lei Tang, Jun Liu and Jieping Ye

Proceedings of the 26th annual international conference on machine learning 2009.

- Multi-task feature learning [Paper]

Andreas Argyriou, Theodoros Evgeniou and Massimiliano Pontil

Advances in neural information processing systems 2006.

- A framework for learning predictive structures from multiple tasks and unlabeled data [Paper]

Rie Kubota Ando, Tong Zhang and Peter Bartlett

Journal of Machine Learning Research 2005.

- Learning Linear and Nonlinear Low-Rank Structure in Multi-Task Learning [Paper]

Yi Zhang, Yu Zhang and Wei Wang

IEEE Transactions on Knowledge and Data Engineering 2023.

- Multi-stage multi-task learning with reduced rank [Paper]

Lei Han and Yu Zhang

Proceedings of the AAAI Conference on Artificial Intelligence 2016.

- Multitask learning meets tensor factorization: task imputation via convex optimization [Paper]

Kishan Wimalawarne, Masashi Sugiyama and Ryota Tomioka

Advances in neural information processing systems 2014.

- Multilinear multitask learning [Paper]

Bernardino Romera-Paredes, Hane Aung, Nadia Bianchi-Berthouze and Massimiliano Pontil

Proceedings of the 30th International Conference on Machine Learning 2013.

- An accelerated gradient method for trace norm minimization [Paper]

Shuiwang Ji and Jieping Ye

Proceedings of the 26th annual international conference on machine learning 2009.

- Learning incoherent sparse and low-rank patterns from multiple tasks [Paper]

Jianhui Chen, Ji Liu and Jieping Ye

ACM Transactions on Knowledge Discovery from Data 2012.

- Multi-level lasso for sparse multi-task regression [Paper]

Aurelie C Lozano and Grzegorz Swirszcz

Proceedings of the 29th International Conference on Machine Learning 2012.

- Robust multi-task feature learning [Paper]

Pinghua Gong, Jieping Ye and Changshui Zhang

Proceedings of the 18th ACM SIGKDD international conference on Knowledge discovery and data mining 2012.

- Integrating low-rank and group-sparse structures for robust multi-task learning [Paper]

Jianhui Chen, Jiayu Zhou and Jieping Ye

Proceedings of the 17th ACM SIGKDD international conference on Knowledge discovery and data mining 2011.

- A dirty model for multi-task learning [Paper]

Ali Jalali, Sujay Sanghavi, Chao Ruan and Pradeep Ravikumar

Advances in neural information processing systems 2010.

- A framework for learning predictive structures from multiple tasks and unlabeled data. [Paper]

Rie Kubota Ando, Tong Zhang and Peter Bartlett

Journal of Machine Learning Research 2005

- A convex formulation for learning task relationships in multi-task learning. [Paper]

Yu Zhang and Dit-Yan Yeung

arXiv preprint arXiv:1203.3536 2012

- Hierarchical multitask structured output learning for large-scale sequence segmentation. [Paper]

Nico Görnitz, Christian Widmer, Georg Zeller, André Kahles, Gunnar Rätsch and Sören Sonnenburg

Advances in Neural Information Processing Systems 2011

- Large margin multi-task metric learning. [Paper]

Shibin Parameswaran and Kilian Q Weinberger

Advances in neural information processing systems 2010

- Multi-task learning using generalized t process. [Paper]

Yu Zhang and Dit-Yan Yeung

JMLR Workshop and Conference Proceedings 2010

- Multi-task learning via conic programming. [Paper]

Tsuyoshi Kato, Hisashi Kashima, Masashi Sugiyama and Kiyoshi Asai

Advances in Neural Information Processing Systems 2007

- Multi-task Gaussian process prediction. [Paper]

Edwin V Bonilla, Kian Chai and Christopher Williams

Advances in Neural Information Processing Systems 2007

- Learning multiple tasks with kernel methods. [Paper]

Theodoros Evgeniou, Charles A Micchelli, Massimiliano Pontil and John Shawe-Taylor

Journal of machine learning research 2005

- Regularized multi-task learning. [Paper]

Theodoros Evgeniou and Massimiliano Pontil

Proceedings of the tenth ACM SIGKDD international conference on Knowledge discovery and data mining 2004
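The regularized multi-task learning entry above (KDD 2004) is commonly summarized as writing each task's weight vector as a shared component plus a task-specific offset, w_t = w_0 + v_t, and penalizing the two parts separately. The sketch below only evaluates a penalty of the form (λ1/T)·Σ_t‖v_t‖² + λ2‖w_0‖²; the helper names are hypothetical, and the loss term and exact constants of the original formulation are left out.

```python
def task_weights(w0, offsets):
    """Hypothetical helper: build w_t = w0 + v_t for every task t."""
    return [[a + b for a, b in zip(w0, v)] for v in offsets]

def regularized_mtl_penalty(w0, offsets, lam1, lam2):
    """Evaluate (lam1 / T) * sum_t ||v_t||^2 + lam2 * ||w0||^2.

    A large lam1 pulls every task toward the shared w0 (tasks treated as similar);
    a large lam2 shrinks w0, so the tasks are effectively learned independently.
    """
    T = len(offsets)
    offset_term = sum(sum(x * x for x in v) for v in offsets) / T
    shared_term = sum(x * x for x in w0)
    return lam1 * offset_term + lam2 * shared_term

w0 = [1.0, 2.0]
offsets = [[0.5, 0.0], [-0.5, 1.0]]
print(task_weights(w0, offsets))                     # [[1.5, 2.0], [0.5, 3.0]]
print(regularized_mtl_penalty(w0, offsets, 1.0, 0.1))  # 1.25
```

In a full model this penalty would be added to the sum of per-task losses; the ratio of λ1 to λ2 controls how strongly tasks are tied together.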

- Learning tree structure in multi-task learning. [Paper]

Lei Han and Yu Zhang

Proceedings of the 21st ACM SIGKDD International Conference on Knowledge Discovery and Data Mining 2015

- Clustered Multi-Task Learning Via Alternating Structure Optimization. [Paper]

Jiayu Zhou, Jianhui Chen and Jieping Ye

Advances in Neural Information Processing Systems 2011

- A Framework for Learning Predictive Structures from Multiple Tasks and Unlabeled Data. [Paper]

Rie Kubota Ando and Tong Zhang

The Journal of Machine Learning Research 2005


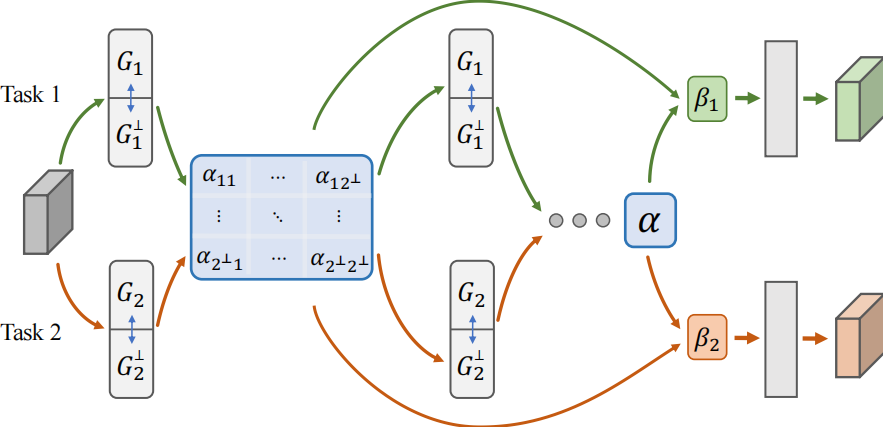

Latent multi-task architecture learning [Paper]

Authors: Sebastian Ruder, Joachim Bingel, Isabelle Augenstein and Anders Sogaard Publisher: Proceedings of the AAAI Conference on Artificial Intelligence Year: 2019

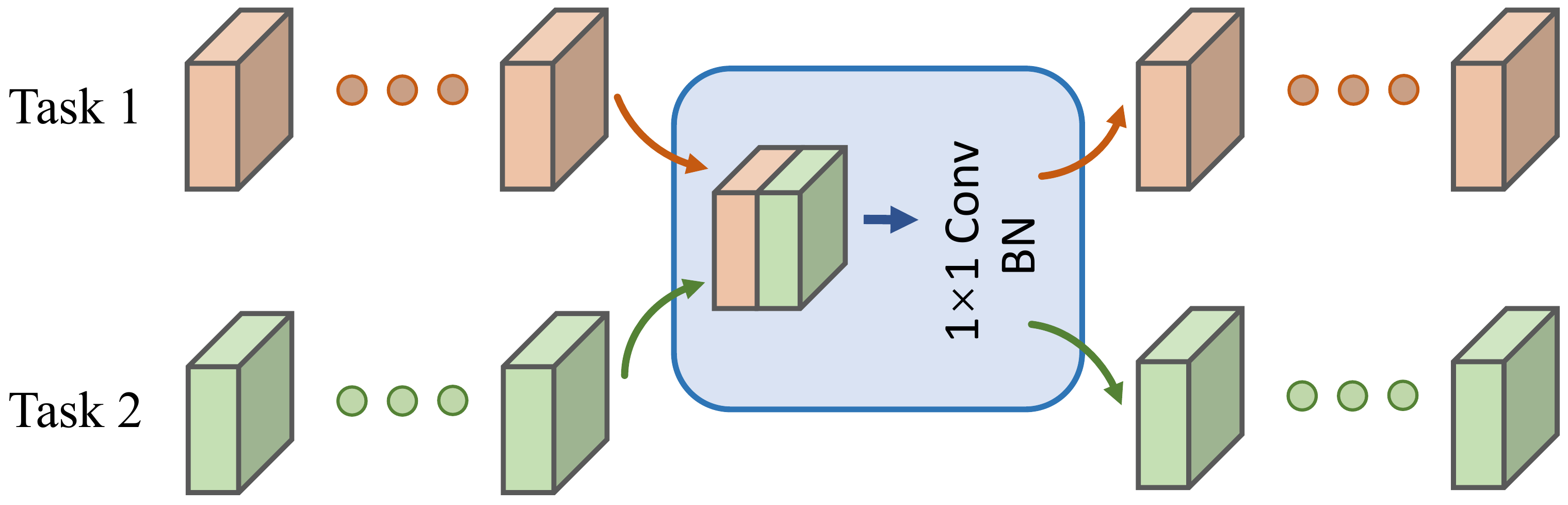

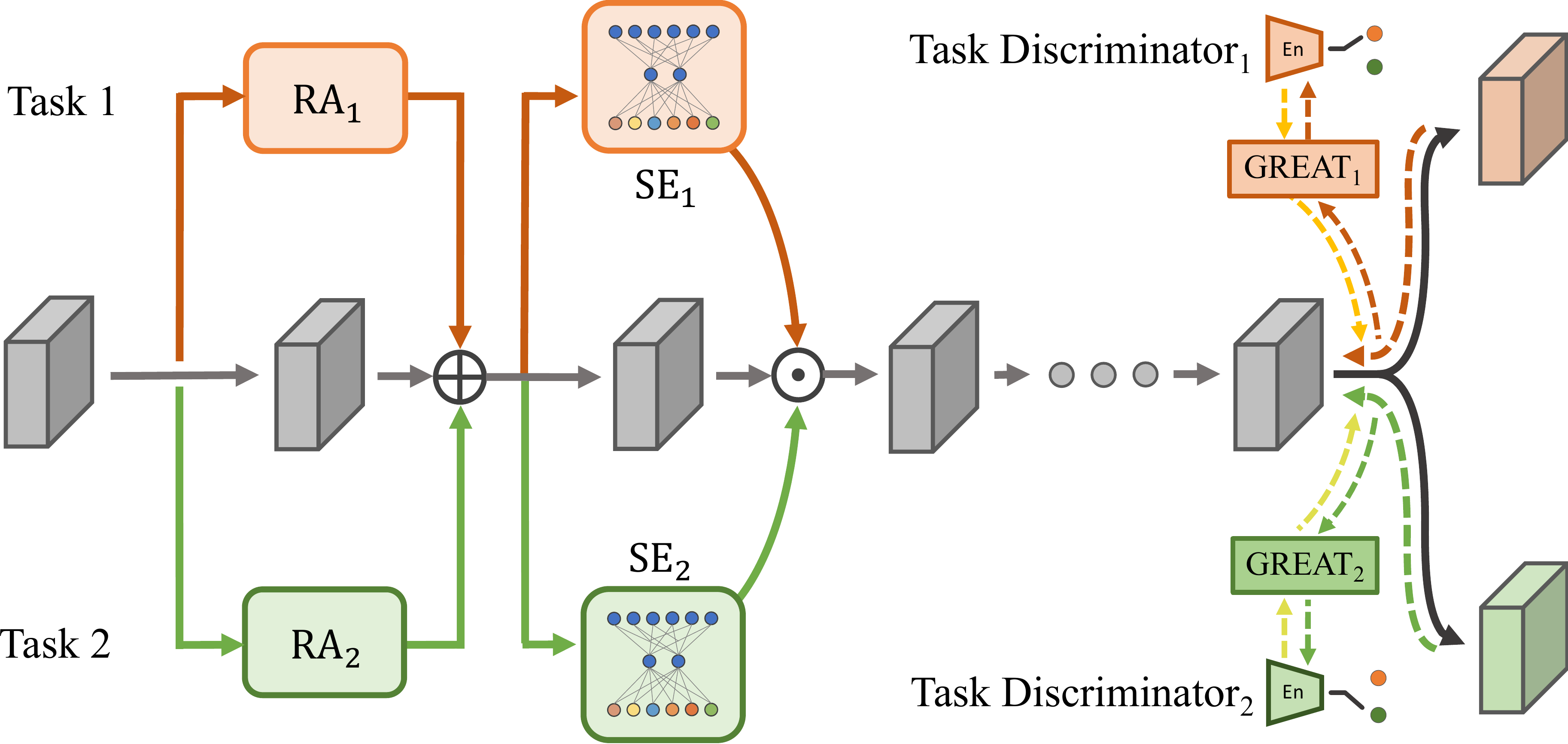

NDDR-CNN: Layerwise feature fusing in multi-task CNNs by neural discriminative dimensionality reduction [Paper]

[Code]

Authors: Yuan Gao, Jiayi Ma, Mingbo Zhao, Wei Liu and Alan L Yuille Publisher: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition Year: 2019

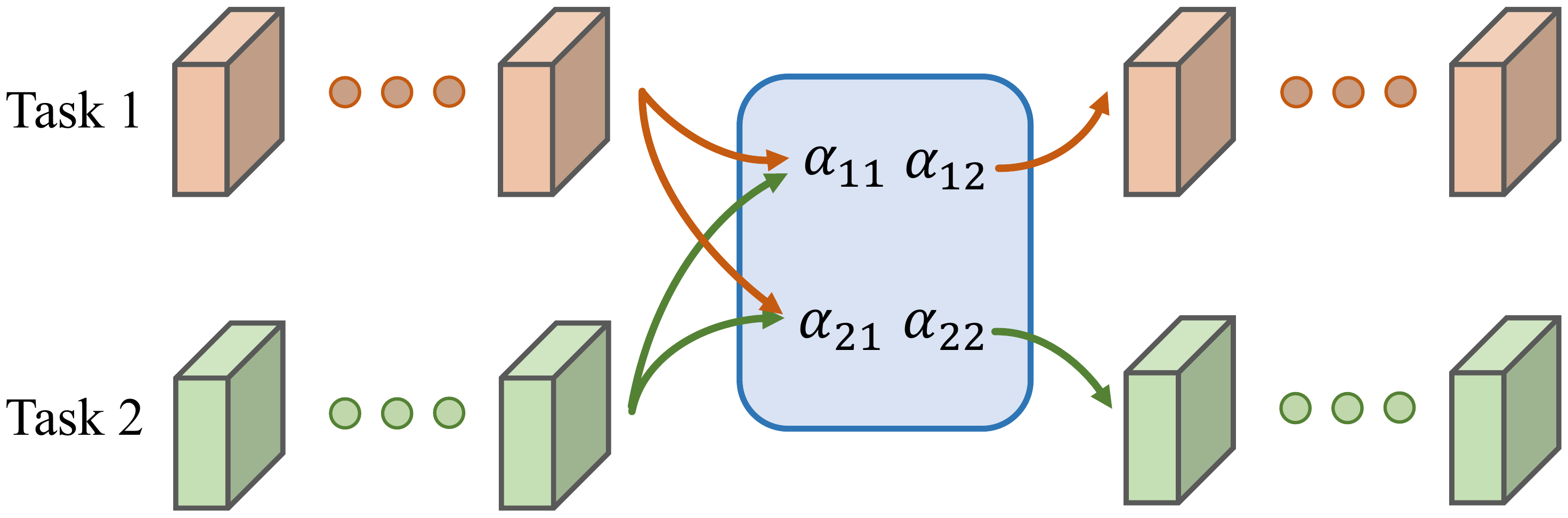

Cross-stitch networks for multi-task learning [Paper]

[Code]

Authors: Ishan Misra, Abhinav Shrivastava, Abhinav Gupta and Martial Hebert Publisher: CVPR Year: 2016
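A cross-stitch unit, as described for the cross-stitch networks entry above, linearly combines the activations of two task-specific networks at a given layer using a learnable 2×2 matrix α: x̃_A = α_AA·x_A + α_AB·x_B and x̃_B = α_BA·x_A + α_BB·x_B. The minimal sketch below shows only this forward mixing on flat activation vectors with a single α shared by all units; the α values are made up, and learning α by backpropagation is omitted.

```python
def cross_stitch(x_a, x_b, alpha):
    """Mix two task activations with alpha = [[a_AA, a_AB], [a_BA, a_BB]].

    The same 2x2 mixing is applied element-wise across the activation vectors.
    Returns the new (task A, task B) activations.
    """
    (a_aa, a_ab), (a_ba, a_bb) = alpha
    new_a = [a_aa * a + a_ab * b for a, b in zip(x_a, x_b)]
    new_b = [a_ba * a + a_bb * b for a, b in zip(x_a, x_b)]
    return new_a, new_b

# A diagonal-heavy alpha keeps each task mostly task-specific,
# while still letting a little information flow between the two networks.
alpha = [[0.9, 0.1], [0.1, 0.9]]
print(cross_stitch([1.0, 2.0], [3.0, 4.0], alpha))
```

With an identity α the two networks stay fully separate, and with all entries equal to 0.5 both tasks receive the same shared activation, so α interpolates between separate and fully shared layers.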

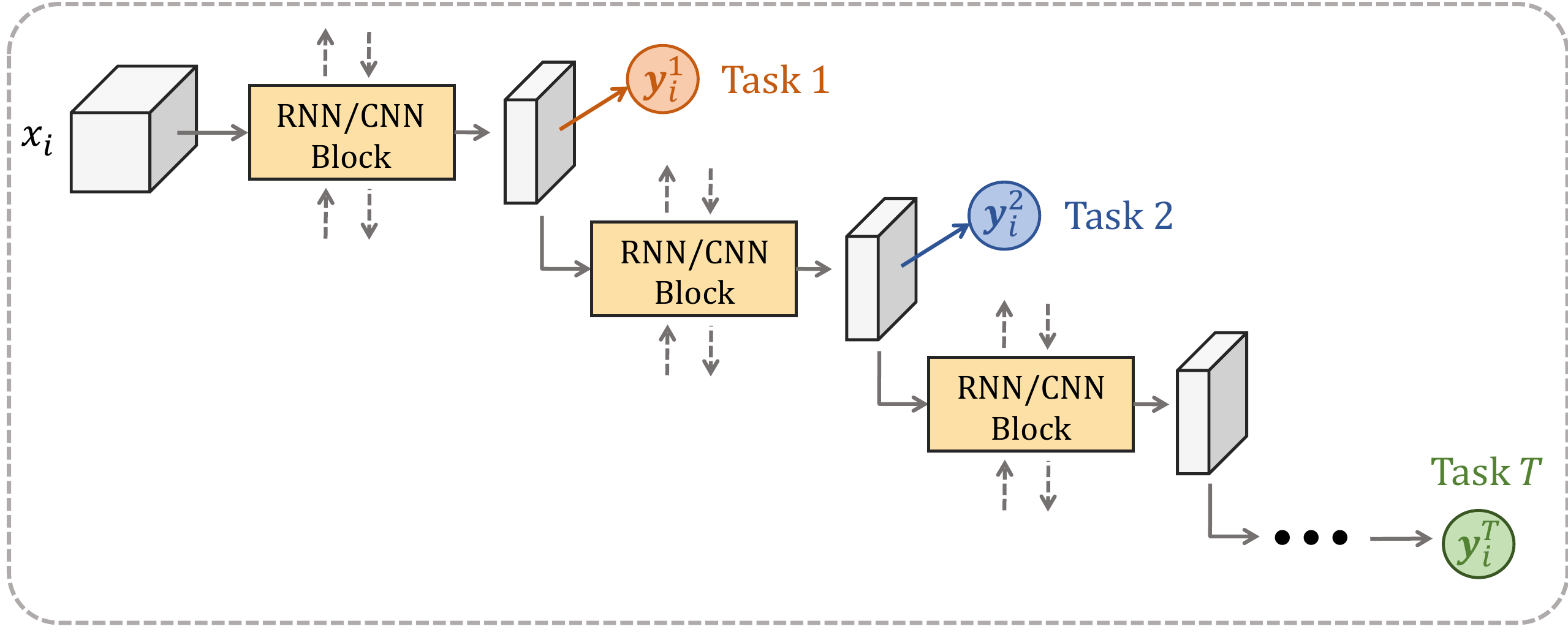

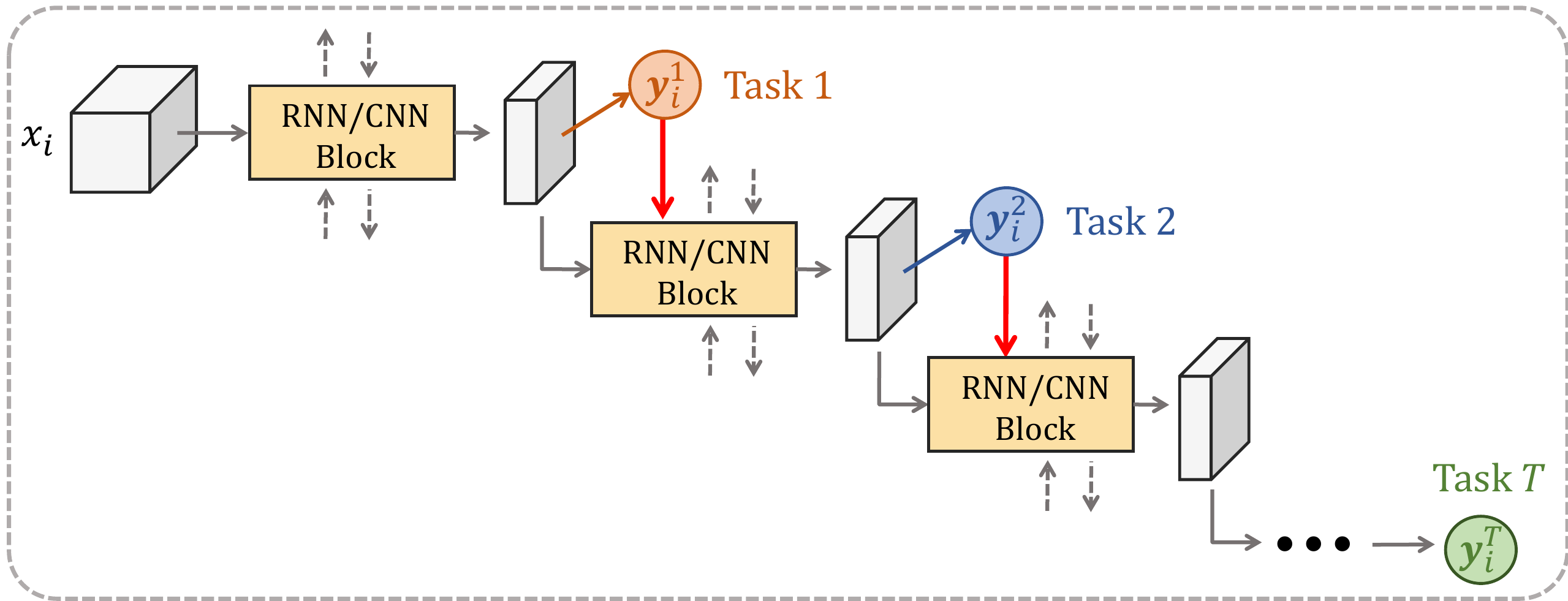

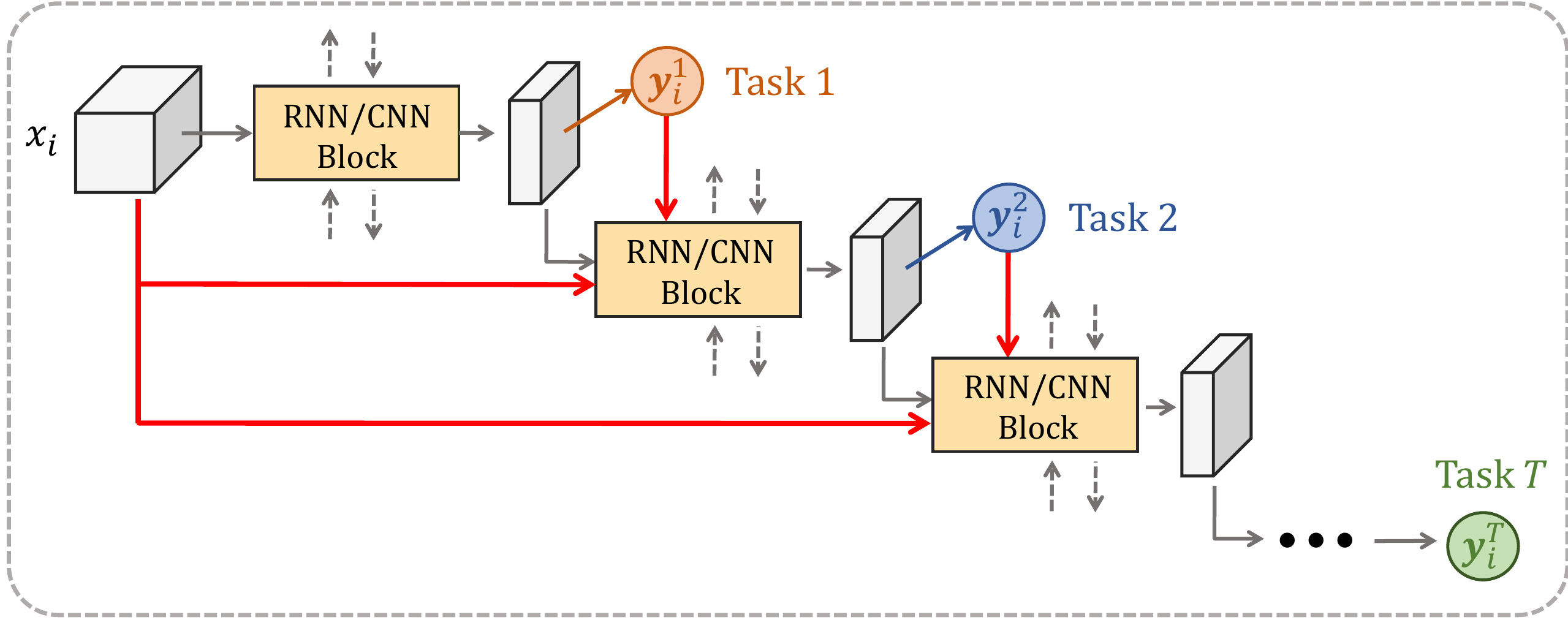

Deep cascade multi-task learning for slot filling in online shopping assistant [Paper]

[Code]

Authors: Yu Gong, Xusheng Luo, Yu Zhu, Wenwu Ou, Zhao Li, Muhua Zhu, Kenny Q. Zhu, Lu Duan and Xi Chen Publisher: Proceedings of the AAAI conference on artificial intelligence Year: 2019

A hierarchical multi-task approach for learning embeddings from semantic tasks [Paper]

[Code]

Authors: Victor Sanh, Thomas Wolf and Sebastian Ruder Publisher: Proceedings of the AAAI conference on artificial intelligence Year: 2019

Deep multi-task learning with low level tasks supervised at lower layers [Paper]

Authors: Anders Søgaard and Yoav Goldberg Publisher: Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (Volume 2: Short Papers) Year: 2016

Instance-aware semantic segmentation via multi-task network cascades [Paper]

[Code]

Authors: Jifeng Dai, Kaiming He and Jian Sun Publisher: Proceedings of the IEEE conference on computer vision and pattern recognition Year: 2016

A Joint Many-Task Model: Growing a Neural Network for Multiple NLP Tasks [Paper]

[Code]

Authors: Kazuma Hashimoto, Caiming Xiong, Yoshimasa Tsuruoka, Richard Socher Publisher: arXiv preprint arXiv:1611.01587 Year: 2016

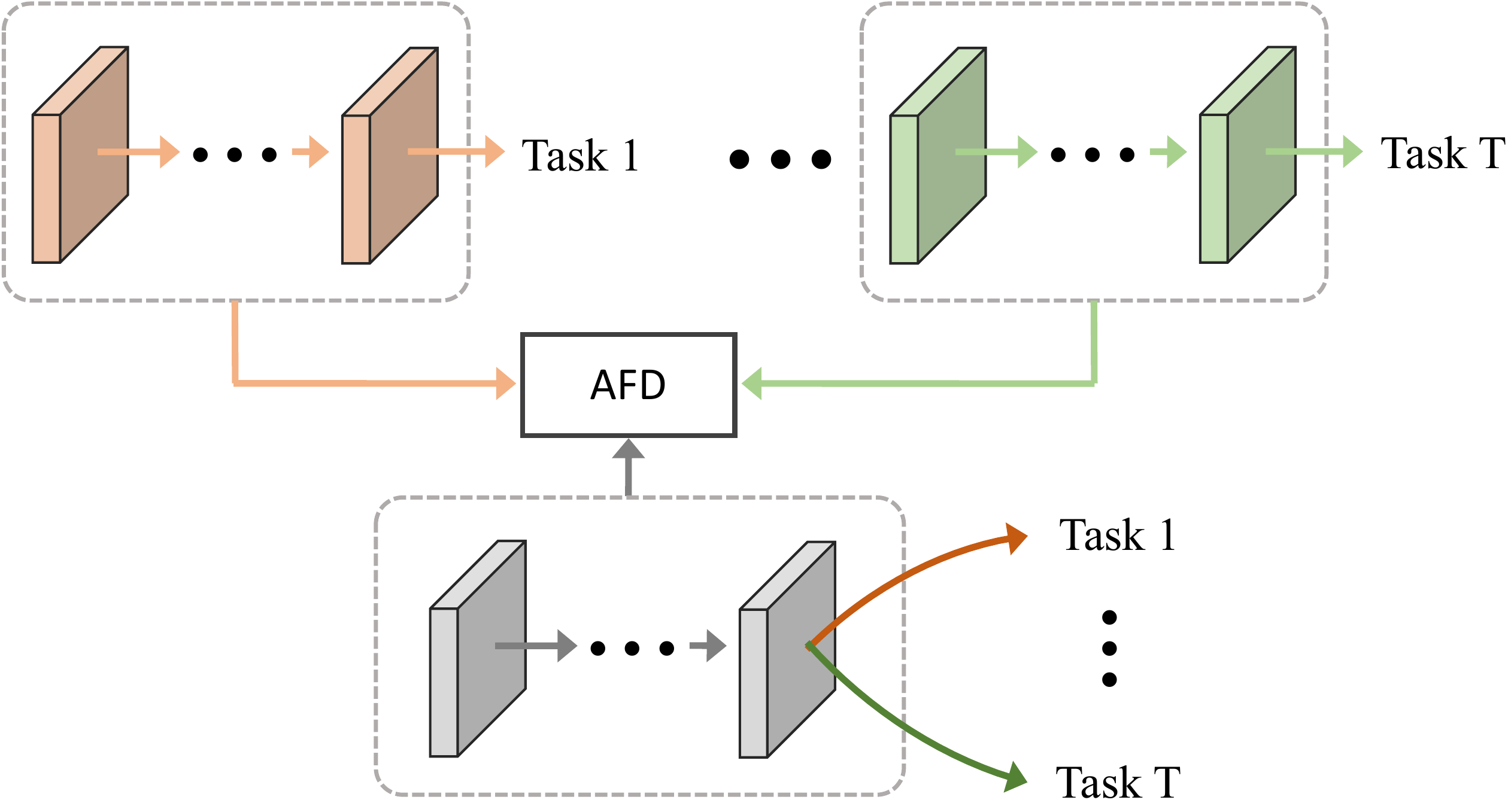

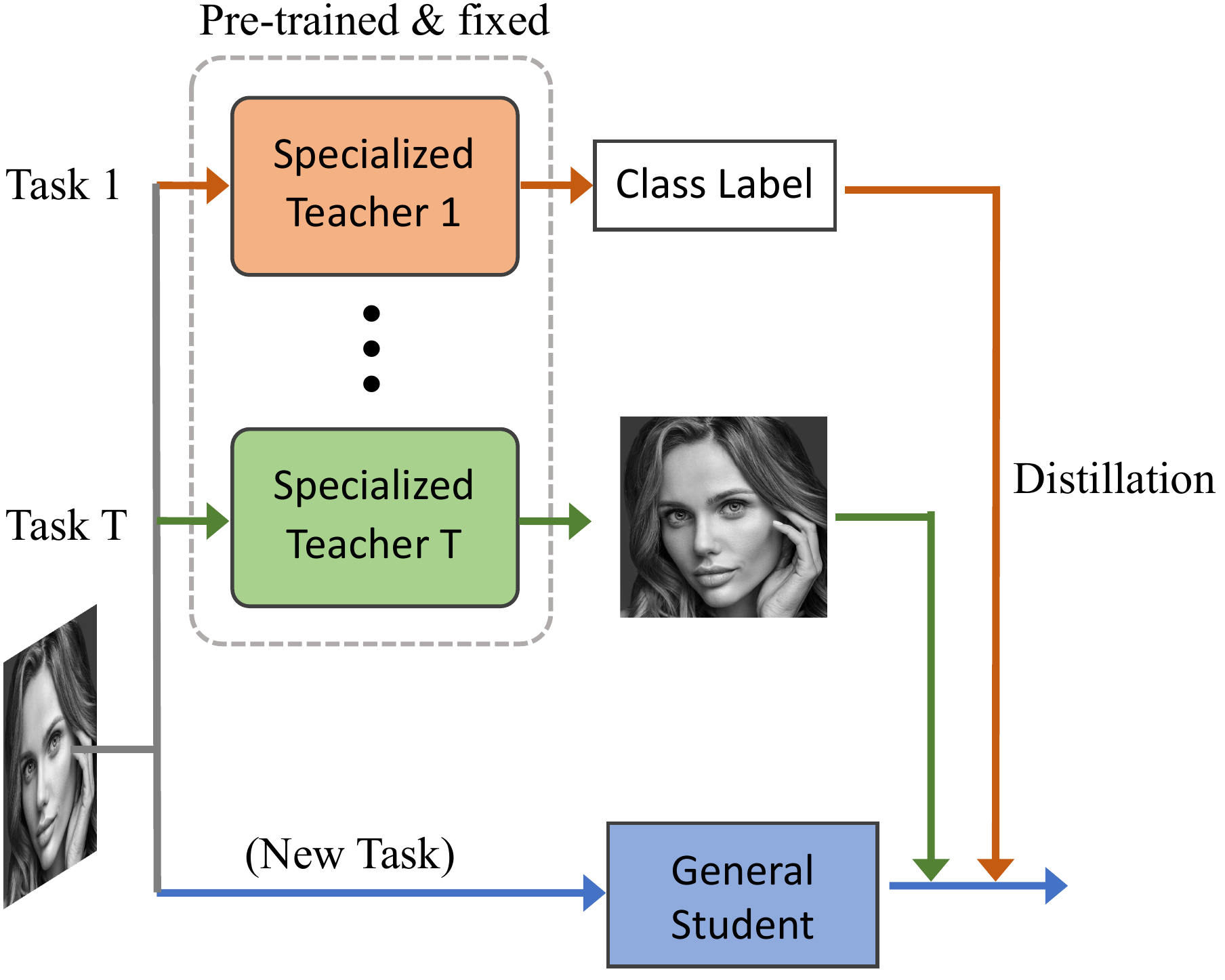

Online Knowledge Distillation for Multi-Task Learning [Paper]

Authors: Geethu Miriam Jacob and Vishal Agarwal Publisher: Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision Year: 2023

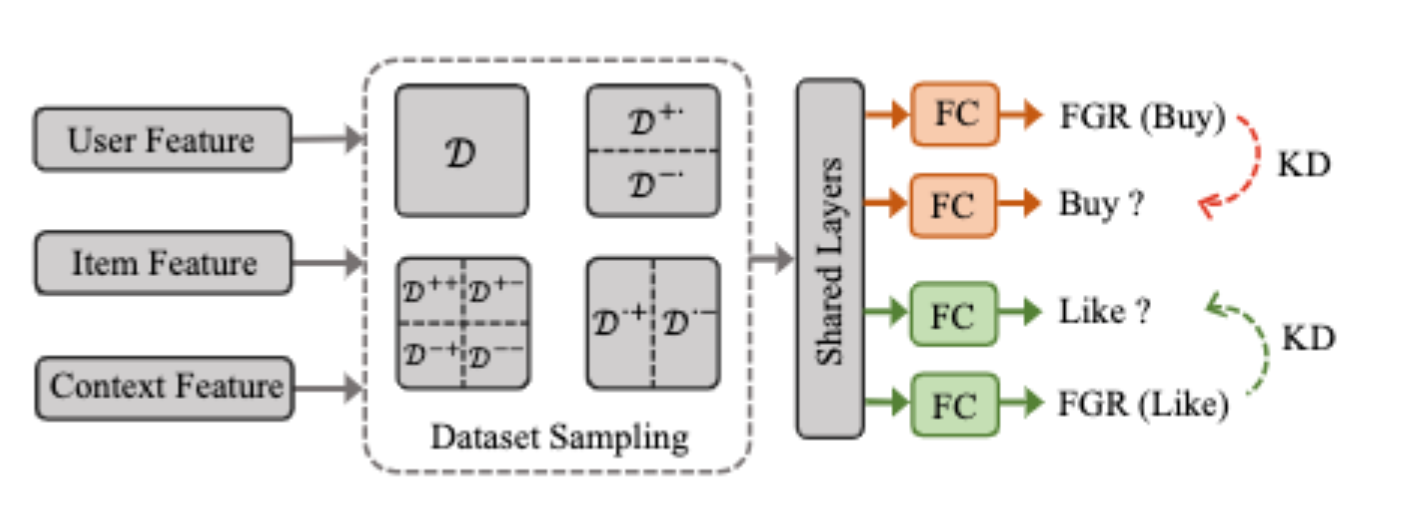

Cross-task knowledge distillation in multi-task recommendation [Paper]

Authors: Chenxiao Yang, Junwei Pan, Xiaofeng Gao, Tingyu Jiang, Dapeng Liu and Guihai Chen Publisher: Proceedings of the AAAI Conference on Artificial Intelligence Year: 2022

Multi-task self-training for learning general representations [Paper]

Authors: Golnaz Ghiasi, Barret Zoph, Ekin D Cubuk, Quoc V Le and Tsung-Yi Lin Publisher: Proceedings of the IEEE/CVF International Conference on Computer Vision Year: 2021

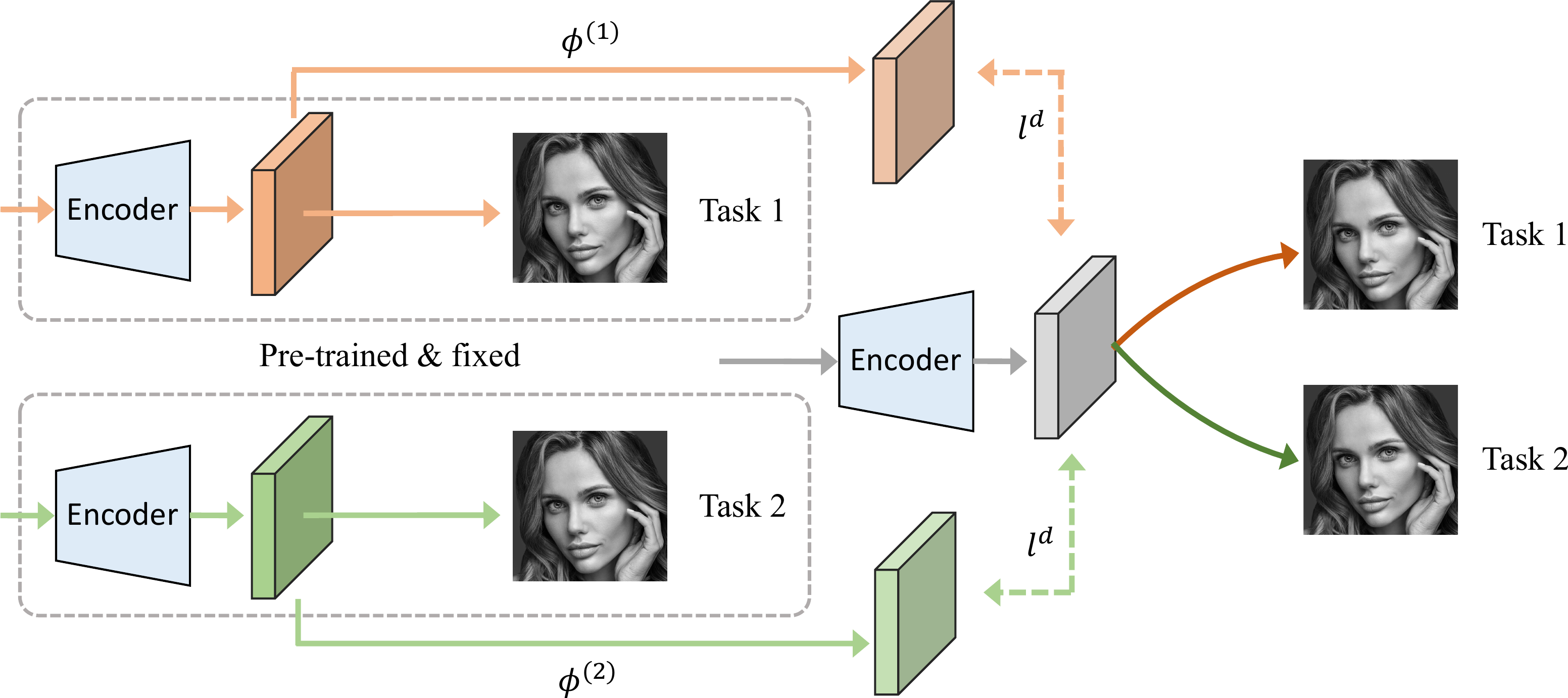

Knowledge distillation for multi-task learning [Paper]

[Code]

Authors: Wei-Hong Li and Hakan Bilen Publisher: Computer Vision - ECCV Year: 2020

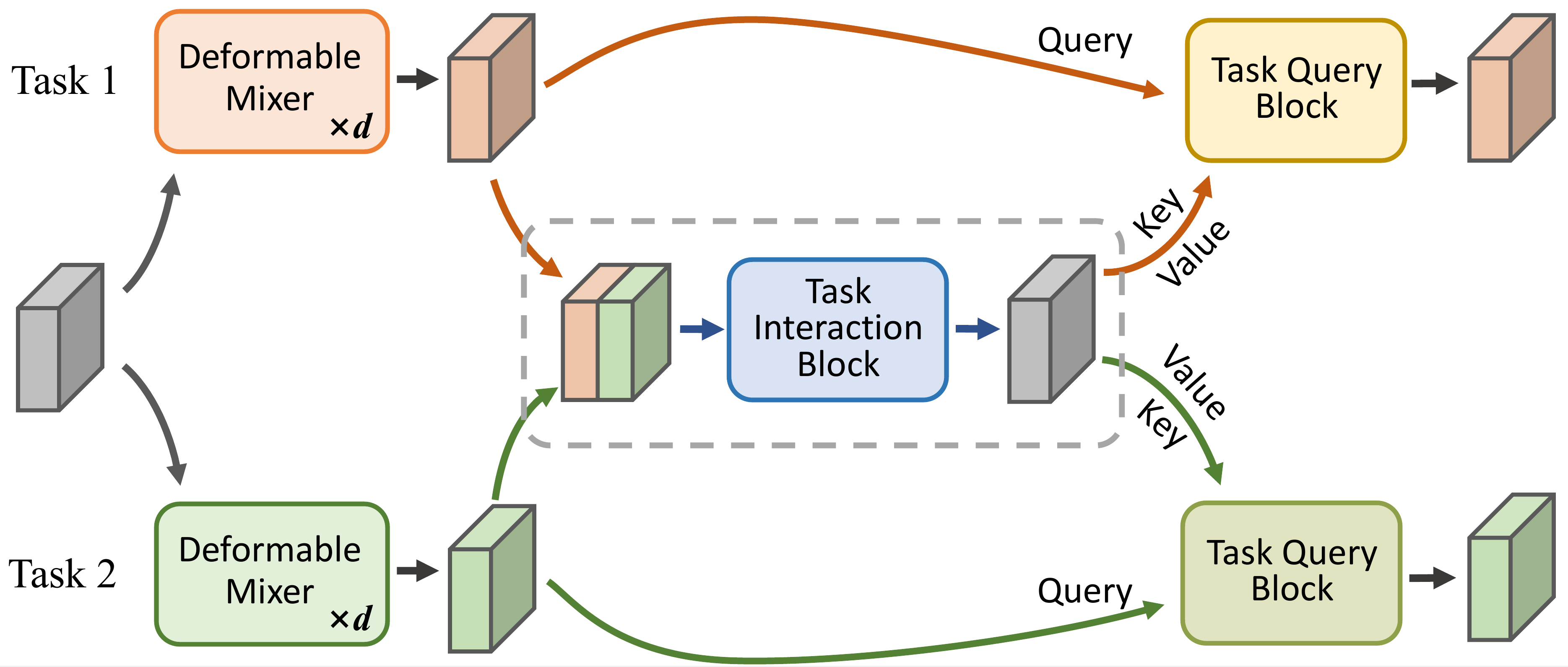

DeMT: Deformable mixer transformer for multi-task learning of dense prediction

[Paper]

[Code]

Authors: Yangyang Xu, Yibo Yang and Lefei Zhang Publisher: arXiv e-prints Year: 2023

Exploring relational context for multi-task dense prediction

[Paper]

[Code]

Authors: David Bruggemann, Menelaos Kanakis, Anton Obukhov, Stamatios Georgoulis and Luc Van Gool Publisher: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Year: 2021

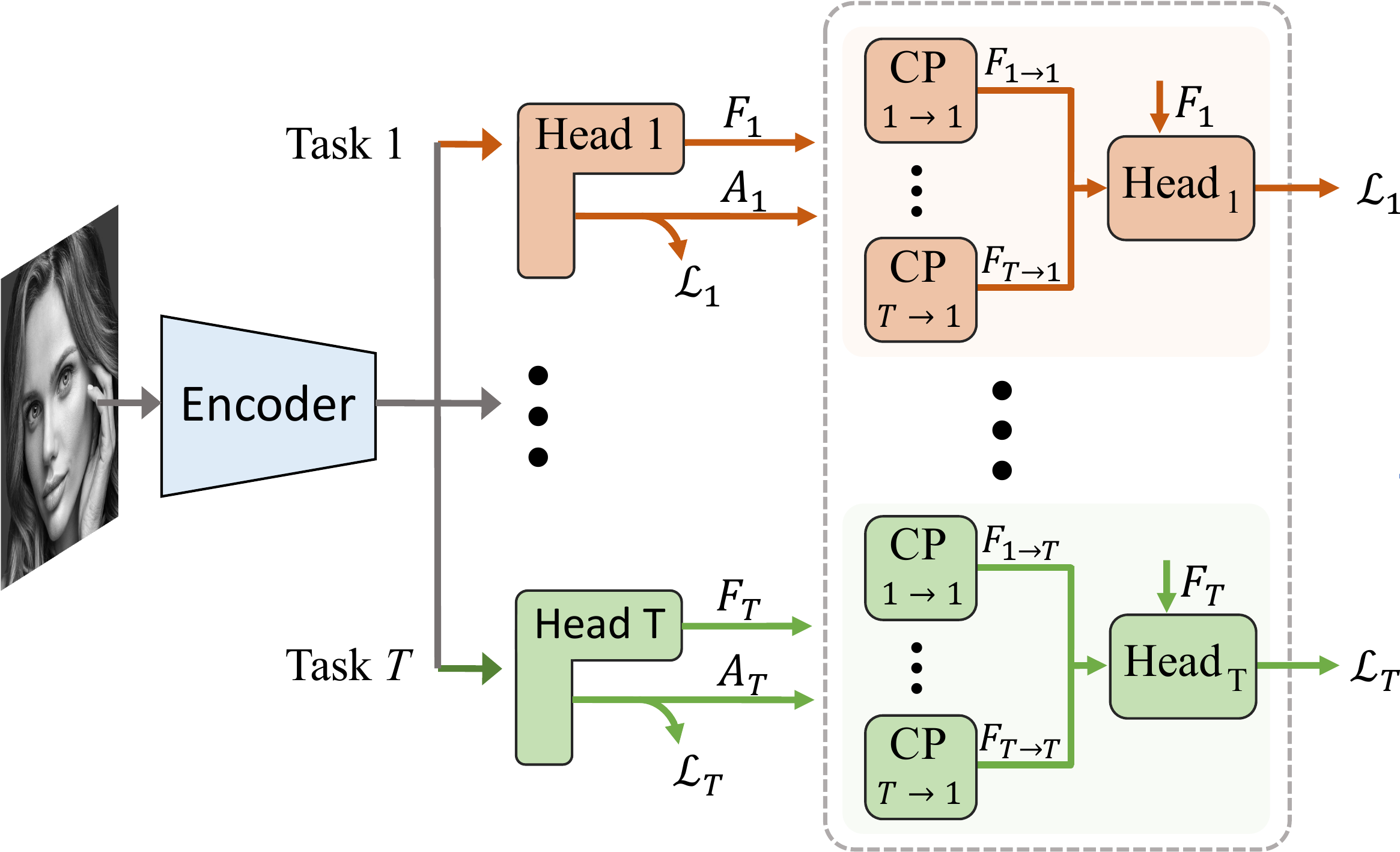

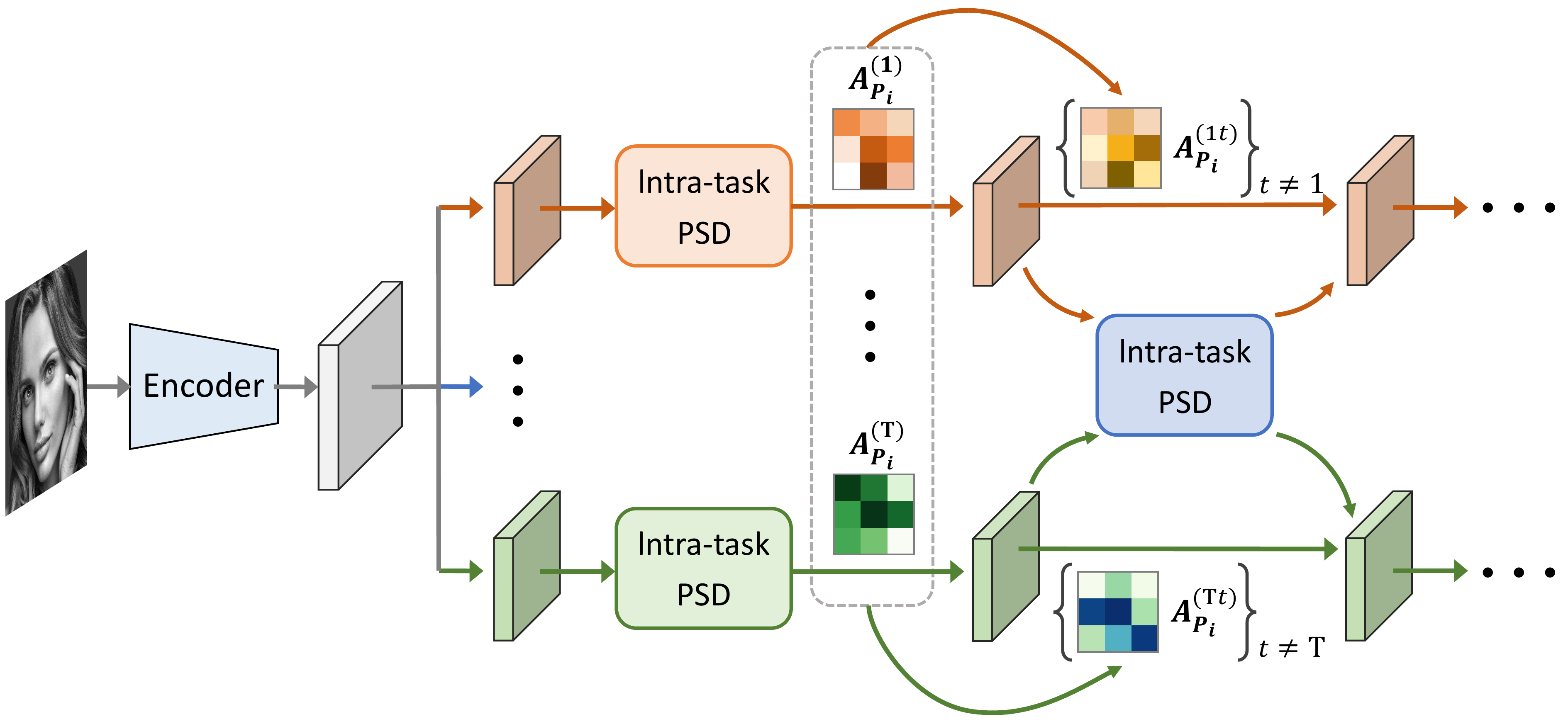

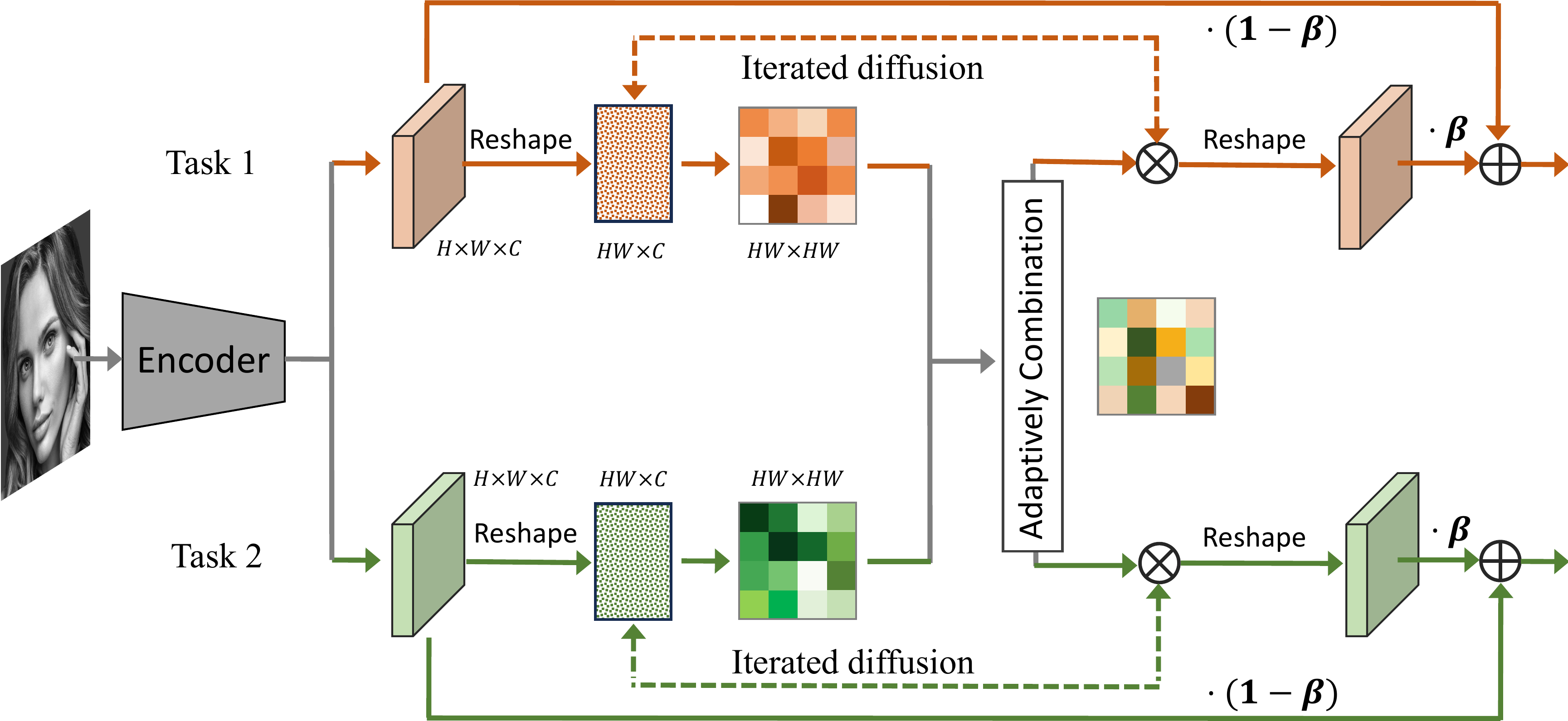

Pattern-structure diffusion for multi-task learning [Paper]

Authors: Ling Zhou, Zhen Cui, Chunyan Xu, Zhenyu Zhang, Chaoqun Wang, Tong Zhang and Jian Yang Publisher: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Year: 2020

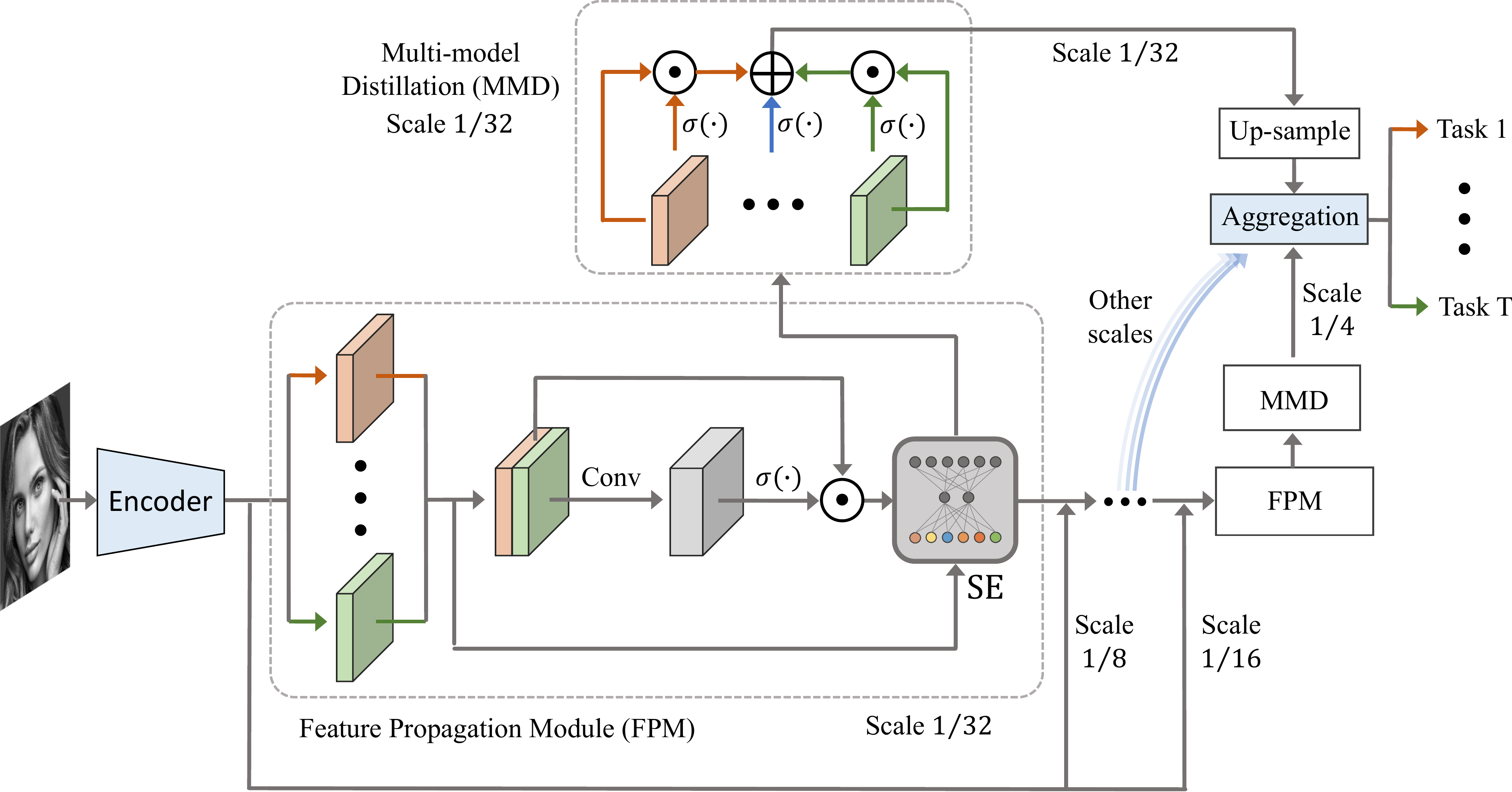

MTI-Net: Multi-scale task interaction networks for multi-task learning [Paper]

[Code]

Authors: Simon Vandenhende, Stamatios Georgoulis and Luc Van Gool Publisher: Computer Vision - ECCV 2020: 16th European Conference, Glasgow, UK, August 23-28, 2020, Proceedings, Part IV Year: 2020

End-to-end multi-task learning with attention [Paper]

[Code]

Authors: Shikun Liu, Edward Johns and Andrew J Davison Publisher: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Year: 2019

Pattern-affinitive propagation across depth, surface normal and semantic segmentation [Paper]

Authors: Zhenyu Zhang, Zhen Cui, Chunyan Xu, Yan Yan, Nicu Sebe and Jian Yang Publisher: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Year: 2019

Attentive single-tasking of multiple tasks

[Paper]

[Code]

Authors: Kevis-Kokitsi Maninis, Ilija Radosavovic and Iasonas Kokkinos Publisher: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Year: 2019

PAD-Net: Multi-tasks guided prediction-and-distillation network for simultaneous depth estimation and scene parsing [Paper]

Authors: Dan Xu, Wanli Ouyang, Xiaogang Wang and Nicu Sebe Publisher: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Year: 2018

Recon: Reducing Conflicting Gradients From the Root For Multi-Task Learning [Paper]

[Code]

Authors: Guangyuan Shi, Qimai Li, Wenlong Zhang, Jiaxin Chen, Xiao-Ming Wu Publisher: The Eleventh International Conference on Learning Representations Year: 2022

Towards impartial multi-task learning [Paper]

[Code]

Authors: Liyang Liu, Yi Li, Zhanghui Kuang, Jing-Hao Xue, Yimin Chen, Wenming Yang, Qingmin Liao, Wayne Zhang Publisher: ICLR Year: 2021

Gradient surgery for multi-task learning [Paper]

[Code]

Authors: Tianhe Yu, Saurabh Kumar, Abhishek Gupta, Sergey Levine, Karol Hausman, Chelsea Finn Publisher: Advances in Neural Information Processing Systems Year: 2020
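Gradient surgery (PCGrad) targets conflicting task gradients: when a task gradient has a negative inner product with another task's gradient, it is projected onto the normal plane of that gradient before the task gradients are summed. The sketch below follows that description for flat gradient vectors. The paper visits the other tasks in random order; this sketch uses a fixed order for reproducibility, and the helper names are hypothetical.

```python
def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def pcgrad(grads):
    """Combine per-task gradients by projecting out pairwise conflicts.

    For each task gradient g_i, every other ORIGINAL gradient g_j that conflicts
    with the current projected g_i (negative dot product) is removed via
    g_i <- g_i - (g_i . g_j) / ||g_j||^2 * g_j. The projected gradients are summed.
    """
    projected = []
    for i, g in enumerate(grads):
        g_pc = list(g)
        for j, g_j in enumerate(grads):
            if i == j:
                continue
            d = dot(g_pc, g_j)
            norm_sq = dot(g_j, g_j)
            if d < 0 and norm_sq > 0:
                coef = d / norm_sq
                g_pc = [a - coef * b for a, b in zip(g_pc, g_j)]
        projected.append(g_pc)
    # Sum the projected task gradients component-wise.
    return [sum(col) for col in zip(*projected)]

g1 = [1.0, 0.0]
g2 = [-1.0, 1.0]   # conflicts with g1: dot product is -1
print(pcgrad([g1, g2]))  # [0.5, 1.5]
```

Non-conflicting gradients (non-negative inner product) pass through unchanged, so the method reduces to plain gradient summation when tasks agree.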

Multi-task learning using uncertainty to weigh losses for scene geometry and semantics [Paper]

[Code]

Authors: Alex Kendall, Yarin Gal and Roberto Cipolla Publisher: Proceedings of the IEEE conference on computer vision and pattern recognition Year: 2018
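The uncertainty-weighting entry above learns one noise parameter per task and uses it to scale that task's loss. A common way to implement the regression form is to learn s_i = log σ_i² and minimize Σ_i ½·exp(−s_i)·L_i + ½·s_i; the sketch below evaluates that combined loss. Treating every task with the regression form (rather than also using the paper's classification form) and the function name are assumptions of this sketch.

```python
import math

def uncertainty_weighted_loss(task_losses, log_vars):
    """Combine task losses with learned homoscedastic-uncertainty weights.

    Computes sum_i 0.5 * exp(-s_i) * L_i + 0.5 * s_i, where s_i = log(sigma_i^2)
    is a learnable scalar per task. A larger s_i (more estimated noise) lowers
    the weight on L_i, while the 0.5 * s_i term keeps s_i from growing unboundedly.
    """
    total = 0.0
    for loss, s in zip(task_losses, log_vars):
        total += 0.5 * math.exp(-s) * loss + 0.5 * s
    return total

print(uncertainty_weighted_loss([2.0, 4.0], [0.0, 0.0]))  # 3.0
```

For a fixed L_i the expression is minimized at s_i = log L_i, so tasks with larger losses end up with smaller effective weights exp(−s_i).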

GradNorm: Gradient normalization for adaptive loss balancing in deep multitask networks [Paper]

[Code]

Authors: Zhao Chen, Vijay Badrinarayanan, Chen-Yu Lee and Andrew Rabinovich Publisher: International conference on machine learning Year: 2018

Mitigating gradient bias in multi-objective learning: A provably convergent approach

[Paper]

[Code]

Authors: Heshan Devaka Fernando, Han Shen, Miao Liu, Subhajit Chaudhury, Keerthiram Murugesan and Tianyi Chen Publisher: The Eleventh International Conference on Learning Representations Year: 2022

Multi-task learning as a bargaining game

[Paper]

[Code]

Authors: Aviv Navon, Aviv Shamsian, Idan Achituve, Haggai Maron, Kenji Kawaguchi, Gal Chechik and Ethan Fetaya Publisher: arXiv preprint arXiv:2202.01017 Year: 2022

Multi-task learning with user preferences: Gradient descent with controlled ascent in Pareto optimization

[Paper]

[Code]

Authors: Debabrata Mahapatra and Vaibhav Rajan Publisher: International Conference on Machine Learning Year: 2020

Pareto multi-task learning

[Paper]

[Code]

Authors: Xi Lin, Hui-Ling Zhen, Zhenhua Li, Qing-Fu Zhang and Sam Kwong Publisher: Advances in neural information processing systems Year: 2019

Multi-task learning as multi-objective optimization

[Paper]

[Code]

Authors: Ozan Sener and Vladlen Koltun Publisher: Advances in neural information processing systems Year: 2018
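The multi-objective entry above (Sener and Koltun) seeks a common descent direction as the minimum-norm point in the convex hull of the task gradients. For two tasks this reduces to a one-dimensional problem with the closed form α* = clip(((g2 − g1)·g2) / ‖g1 − g2‖², 0, 1), which the sketch below implements. The general many-task solver and the paper's efficiency tricks are not shown, and the function name is hypothetical.

```python
def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def min_norm_two_tasks(g1, g2):
    """Closed-form min-norm combination of two task gradients.

    Finds alpha in [0, 1] minimizing ||alpha * g1 + (1 - alpha) * g2||^2
    and returns (alpha, combined_direction).
    """
    diff = [a - b for a, b in zip(g1, g2)]
    denom = dot(diff, diff)
    if denom == 0.0:
        alpha = 0.5  # identical gradients: every alpha gives the same point
    else:
        alpha = dot([b - a for a, b in zip(g1, g2)], g2) / denom
        alpha = min(1.0, max(0.0, alpha))  # clip to the segment [g2, g1]
    combined = [alpha * a + (1.0 - alpha) * b for a, b in zip(g1, g2)]
    return alpha, combined

print(min_norm_two_tasks([1.0, 0.0], [0.0, 1.0]))  # (0.5, [0.5, 0.5])
```

A returned direction of all zeros means the two gradients cancel, i.e., the current point is Pareto-stationary for the two tasks.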

Multicriteria optimization

[Paper]

Authors: Matthias Ehrgott Publisher: Springer Science & Business Media Year: 2005

Steepest descent methods for multicriteria optimization

[Paper]

Authors: Jörg Fliege and Benar Fux Svaiter Publisher: Springer Year: 2000

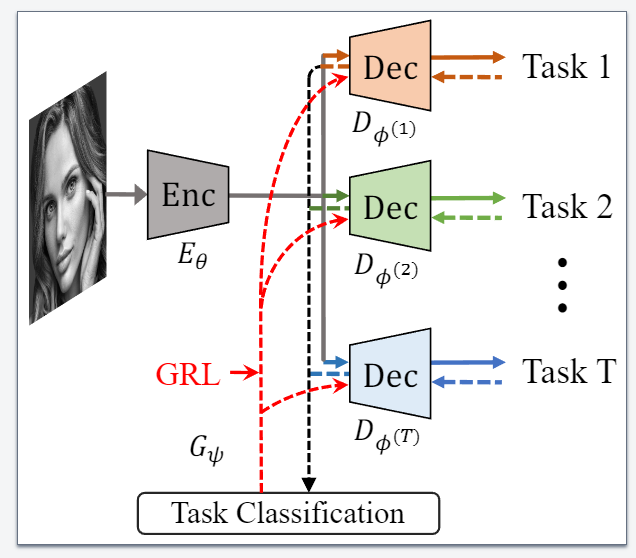

Representation disentanglement for multi-task learning with application to fetal ultrasound

[Paper]

[Code]

Authors: Qingjie Meng, Nick Pawlowski, Daniel Rueckert, Bernhard Kainz Publisher: Springer Year: 2019

Multi-task adversarial network for disentangled feature learning

[Paper]

Authors: Yang Liu, Zhaowen Wang, Hailin Jin, Ian Wassell Publisher: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Year: 2018

Gradient adversarial training of neural networks

[Paper]

Authors: Ayan Sinha, Zhao Chen, Vijay Badrinarayanan, Andrew Rabinovich Publisher: arXiv preprint arXiv:1806.08028 Year: 2018

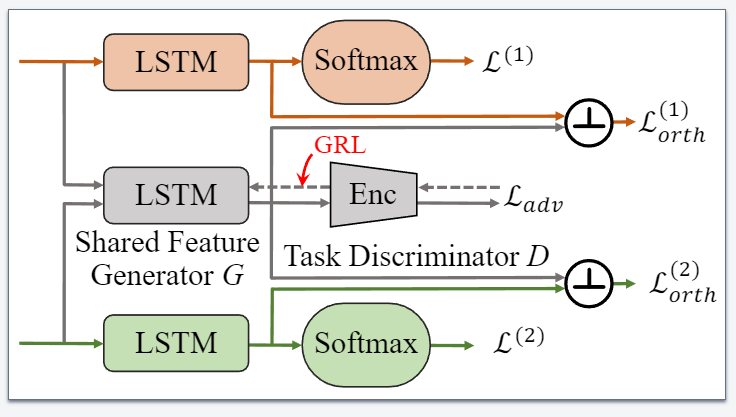

Adversarial Multi-task Learning for Text Classification

[Paper]

[Code]

Authors: Pengfei Liu, Xipeng Qiu, Xuanjing Huang Publisher: arXiv preprint arXiv:1704.05742 Year: 2017

Mod-Squad: Designing Mixtures of Experts As Modular Multi-Task Learners

[Paper]

Authors: Zitian Chen, Yikang Shen, Mingyu Ding, Zhenfang Chen, Hengshuang Zhao, Erik Learned-Miller, Chuang Gan Publisher: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Year: 2023

AdaMV-MoE: Adaptive Multi-Task Vision Mixture-of-Experts

[Paper]

[Code]

Authors: Tianlong Chen, Xuxi Chen, Xianzhi Du, Abdullah Rashwan, Fan Yang, Huizhong Chen, Zhangyang Wang, Yeqing Li Publisher: Proceedings of the IEEE/CVF International Conference on Computer Vision Year: 2023

SummaReranker: A multi-task mixture-of-experts re-ranking framework for abstractive summarization

[Paper]

Authors: Mathieu Ravaut, Shafiq Joty, Nancy F. Chen Publisher: arXiv preprint arXiv:2203.06569 Year: 2022

Multi-task learning with calibrated mixture of insightful experts

[Paper]

Authors: Sinan Wang, Yumeng Li, Hongyan Li, Tanchao Zhu, Zhao Li, Wenwu Ou Publisher: 2022 IEEE 38th International Conference on Data Engineering (ICDE) Year: 2022

Eliciting transferability in multi-task learning with task-level mixture-of-experts

[Paper]

[Code]

Authors: Qinyuan Ye, Juan Zha, Xiang Ren Publisher: arXiv preprint arXiv:2205.12701 Year: 2022

Eliciting and Understanding Cross-Task Skills with Task-Level Mixture-of-Experts

[Paper]

[Code]

Authors: Qinyuan Ye, Juan Zha, Xiang Ren Publisher: arXiv preprint arXiv:2205.12701 Year: 2022

M³ViT: Mixture-of-Experts Vision Transformer for Efficient Multi-task Learning with Model-Accelerator Co-design

[Paper]

[Code]

Authors: Hanxue Liang, Zhiwen Fan, Rishov Sarkar, Ziyu Jiang, Tianlong Chen, Kai Zou, Yu Cheng, Cong Hao, Zhangyang Wang Publisher: Advances in Neural Information Processing Systems Year: 2022

DSelect-k: Differentiable selection in the mixture of experts with applications to multi-task learning

[Paper]

[Code]

Authors: Hussein Hazimeh, Zhe Zhao, Aakanksha Chowdhery, Maheswaran Sathiamoorthy, Yihua Chen, Rahul Mazumder, Lichan Hong, Ed H. Chi Publisher: Advances in Neural Information Processing Systems Year: 2021

Sparsely Activated Mixture-of-Experts are Robust Multi-Task Learners

[Paper]

Authors: Shashank Gupta, Subhabrata Mukherjee, Krishan Subudhi, Eduardo Gonzalez, Damien Jose, Ahmed H. Awadallah, Jianfeng Gao Publisher: arXiv preprint arXiv:2204.07689 Year: 2022

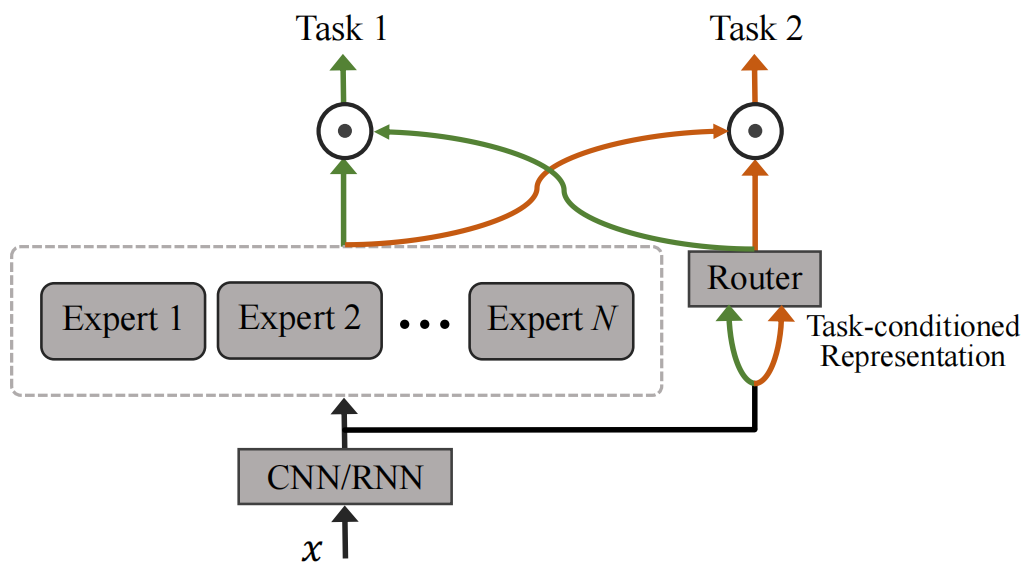

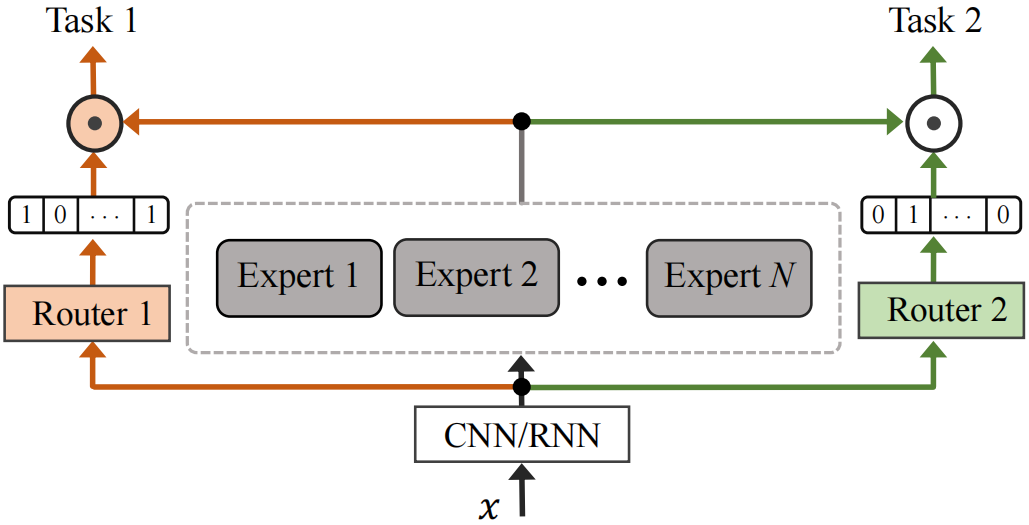

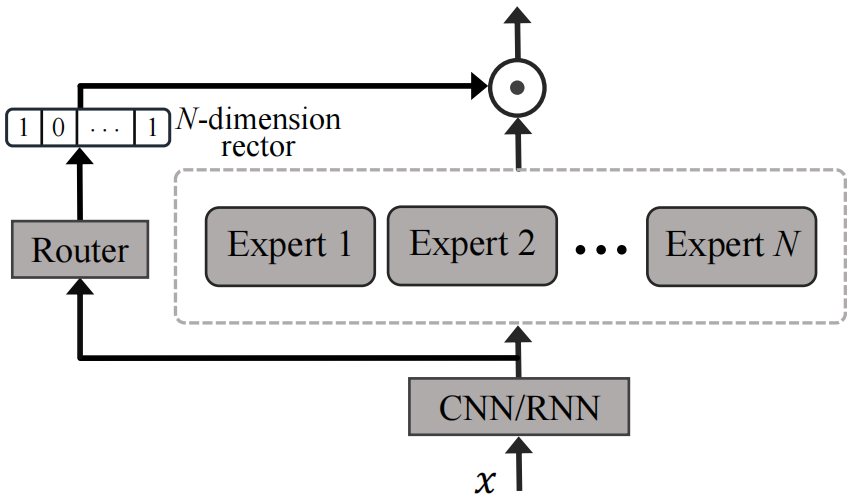

Modeling task relationships in multi-task learning with multi-gate mixture-of-experts

[Paper]

[Code]

Authors: Jiaqi Ma, Zhe Zhao, Xinyang Yi, Jilin Chen, Lichan Hong and Ed H Chi Publisher: Proceedings of the 24th ACM SIGKDD international conference on knowledge discovery & data mining Year: 2018
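In the multi-gate mixture-of-experts (MMoE) entry above, all tasks share a set of experts, and each task has its own softmax gate that decides how to weight the expert outputs before they are passed to a task-specific tower. The sketch below shows that forward computation with linear experts and linear gates; the nonlinear expert networks and the towers are omitted, and all helper names are hypothetical.

```python
import math

def softmax(z):
    """Numerically stable softmax over a list of floats."""
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]

def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def mmoe_forward(x, experts, gates):
    """Multi-gate mixture-of-experts forward pass (task towers omitted).

    experts: list of weight matrices; expert i outputs matvec(experts[i], x).
    gates:   one weight matrix per task, producing one logit per expert.
    Returns one gate-weighted mixture of expert outputs per task.
    """
    expert_outs = [matvec(E, x) for E in experts]  # computed once, shared by all tasks
    dim = len(expert_outs[0])
    task_inputs = []
    for G in gates:
        weights = softmax(matvec(G, x))            # task-specific gate over experts
        mixed = [sum(w * out[d] for w, out in zip(weights, expert_outs))
                 for d in range(dim)]
        task_inputs.append(mixed)
    return task_inputs

x = [1.0, 0.0]
experts = [[[1.0, 0.0], [0.0, 1.0]],   # expert 0: identity
           [[0.0, 1.0], [1.0, 0.0]]]   # expert 1: swaps the two inputs
gates = [[[0.0, 0.0], [0.0, 0.0]],     # task 0: uniform gate over both experts
         [[10.0, 0.0], [-10.0, 0.0]]]  # task 1: strongly prefers expert 0
print(mmoe_forward(x, experts, gates))
```

Task 0 receives an even blend of both experts, while task 1 receives almost exactly expert 0's output, illustrating how separate gates let related and less related tasks share experts to different degrees.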

Outrageously large neural networks: The sparsely-gated mixture-of-experts layer

[Paper]

Authors: Noam Shazeer, Azalia Mirhoseini, Krzysztof Maziarz, Andy Davis, Quoc Le, Geoffrey Hinton and Jeff Dean Publisher: arXiv preprint arXiv:1701.06538 Year: 2017
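The sparsely-gated mixture-of-experts layer above routes each input to only a few experts: gate logits outside the top k are set to −∞ before the softmax, so the remaining experts receive exactly zero weight and need not be evaluated. The sketch below implements that keep-top-k softmax; the trainable noise added to the logits in the original layer and its load-balancing losses are omitted, and the function name is an assumption.

```python
import math

def top_k_gating(logits, k):
    """Keep the k largest gate logits, treat the rest as -inf, then softmax.

    Experts outside the top k get exactly zero weight, so their forward pass
    can be skipped. Noise on the logits is intentionally omitted here.
    """
    if not 1 <= k <= len(logits):
        raise ValueError("k must be between 1 and the number of experts")
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    m = max(logits[i] for i in top)  # subtract the max for numerical stability
    exps = [math.exp(logits[i] - m) if i in top else 0.0 for i in range(len(logits))]
    total = sum(exps)
    return [e / total for e in exps]

print(top_k_gating([2.0, 0.5, 1.0, -1.0], k=2))
```

Here experts 0 and 2 share all of the weight (about 0.73 and 0.27), and experts 1 and 3 are skipped entirely, which is what keeps the layer's compute roughly constant as the number of experts grows.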

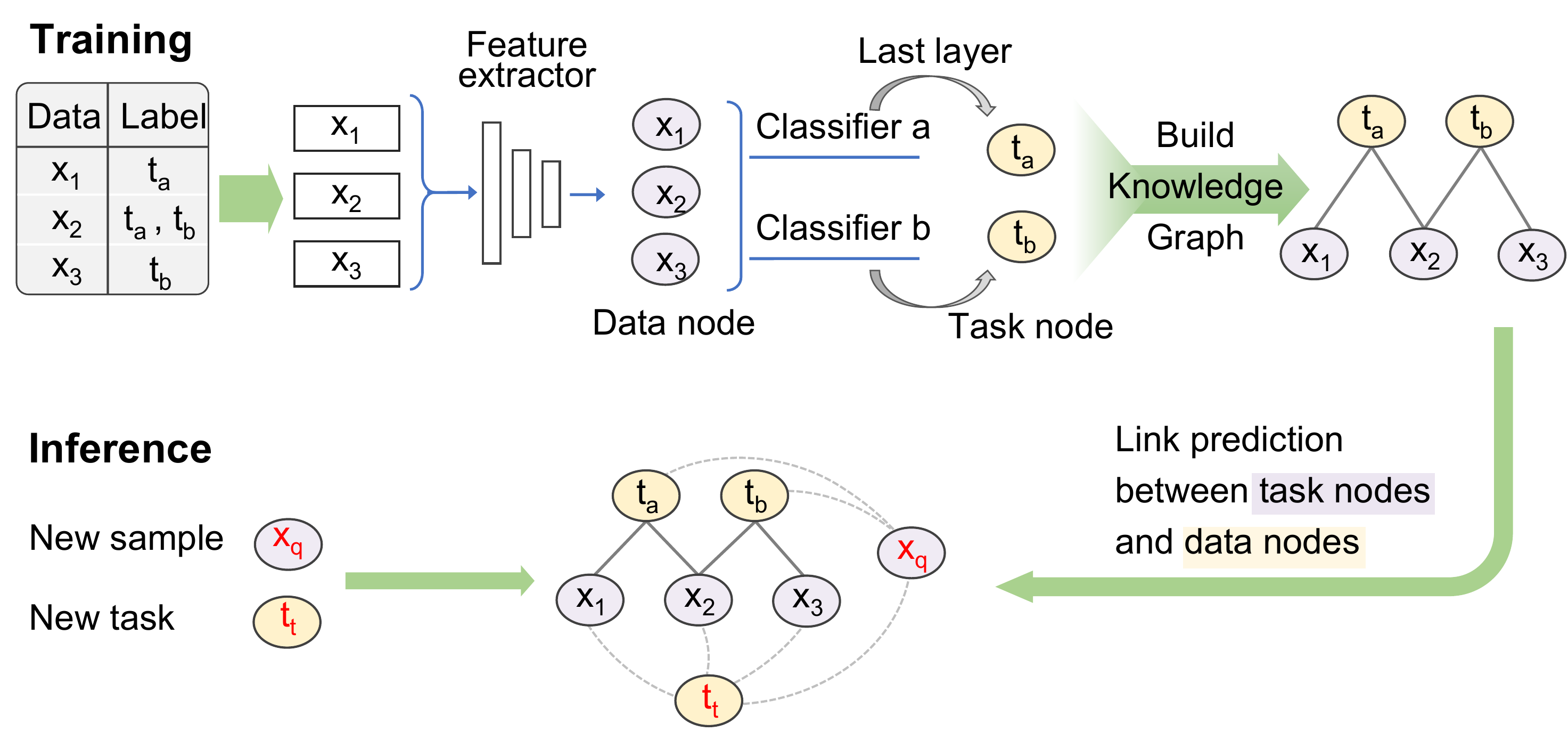

Relational Multi-Task Learning: Modeling Relations between Data and Tasks

[Paper]

[Code]

Authors: Kaidi Cao, Jiaxuan You, Jure Leskovec Publisher: arXiv preprint arXiv:2303.07666 Year: 2023

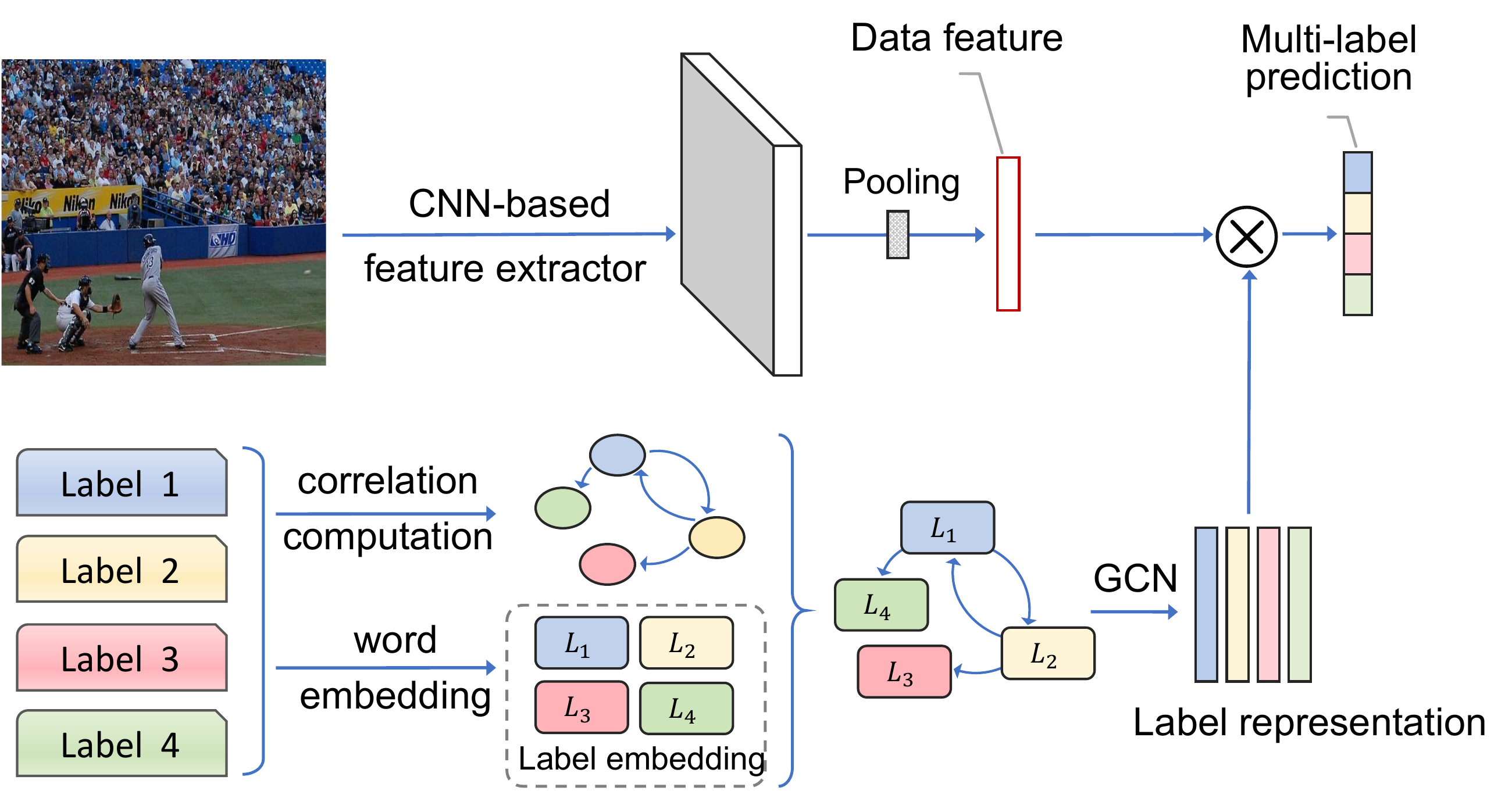

Multi-label image recognition with graph convolutional networks

[Paper]

[Code]

Authors: Zhao-Min Chen, Xiu-Shen Wei, Peng Wang, Yanwen Guo Publisher: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition Year: 2019

Leveraging sequence classification by taxonomy-based multitask learning

[Paper]

Authors: Christian Widmer, Jose Leiva, Yasemin Altun, Gunnar Rätsch Publisher: Research in Computational Molecular Biology: 14th Annual International Conference, RECOMB 2010, Lisbon, Portugal, April 25-28, 2010. Proceedings Year: 2010