Yuanfudao at SemEval-2018 Task 11: Three-way Attention and Relational Knowledge for Commonsense Machine Comprehension

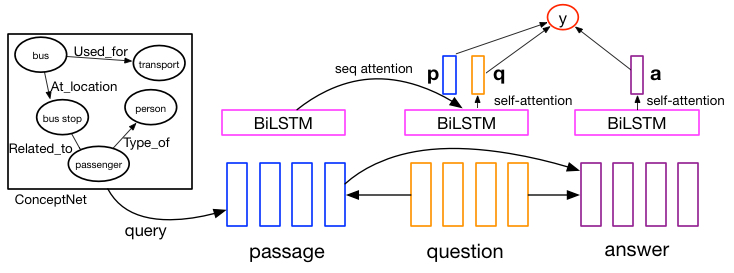

We use attention-based LSTM networks.
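The attention step that gives the paper its "three-way attention" title can be illustrated in isolation. The sketch below is hypothetical and simplified. It assumes plain dot-product scores over word vectors, leaves out the learned projection, the nonlinearity and the LSTM layers, and reflects our reading of the three directions: passage attends to question, answer attends to passage, and answer attends to question.

```python
import math
from typing import Dict, List

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    # Subtract the max score before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u: Vector, v: Vector) -> float:
    return sum(a * b for a, b in zip(u, v))


def attend(queries: List[Vector], keys: List[Vector]) -> List[Vector]:
    """For each query vector, return the attention-weighted sum of the key vectors."""
    dim = len(keys[0])
    out = []
    for q in queries:
        weights = softmax([dot(q, k) for k in keys])
        out.append([sum(w * k[d] for w, k in zip(weights, keys)) for d in range(dim)])
    return out


def three_way_attention(passage: List[Vector], question: List[Vector],
                        answer: List[Vector]) -> Dict[str, List[Vector]]:
    """Hypothetical helper: one attended sequence per attention direction."""
    return {
        "passage_to_question": attend(passage, question),
        "answer_to_passage": attend(answer, passage),
        "answer_to_question": attend(answer, question),
    }
```

Each output sequence has one vector per query word, so it can be concatenated with the original word embeddings before the LSTM encoder.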

For more technical details, please refer to our paper at https://arxiv.org/abs/1803.00191

The official leaderboard is available at https://competitions.codalab.org/competitions/17184#results (Evaluation Phase).

The overall model architecture is shown in the figure above.

Requirements:

- pytorch >= 0.2
- spacy >= 2.0

A GPU machine is preferred; training on CPU will be much slower.
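A quick way to confirm the environment before training is a small check script. This is a sketch, not part of the repository. It assumes Python 3.8+ (for `importlib.metadata`) and that PyTorch is installed under the distribution name `torch`; the version parser is a simplified stand-in for a full PEP 440 comparison.

```python
from importlib import metadata

# Minimum versions from the requirements list; "torch" is assumed to be
# the installed distribution name of PyTorch.
REQUIREMENTS = {"torch": "0.2", "spacy": "2.0"}


def version_tuple(version: str) -> tuple:
    """Turn '0.4.1+cu90' into (0, 4, 1); stops at the first non-numeric component."""
    parts = []
    for piece in version.split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
    return tuple(parts)


def meets_minimum(installed: str, minimum: str) -> bool:
    return version_tuple(installed) >= version_tuple(minimum)


def check_environment() -> None:
    for dist, minimum in REQUIREMENTS.items():
        try:
            installed = metadata.version(dist)
        except metadata.PackageNotFoundError:
            print(f"{dist}: not installed (need >= {minimum})")
            continue
        status = "ok" if meets_minimum(installed, minimum) else f"too old, need >= {minimum}"
        print(f"{dist} {installed}: {status}")
    # Report whether a GPU can be used; training falls back to a much slower CPU run otherwise.
    try:
        import torch
        print("CUDA available:", torch.cuda.is_available())
    except ImportError:
        print("CUDA check skipped: torch is not importable")


if __name__ == "__main__":
    check_environment()
```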

Download the preprocessed data from Google Drive or Baidu Cloud Disk, unzip it, and put the files under the folder `data/`.

If you choose to preprocess the dataset yourself, preprocess the official dataset with `python3 src/preprocess.py`, download the GloVe embeddings, and also download ConceptNet and preprocess it with `python3 src/preprocess.py conceptnet`.
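The ConceptNet step supplies the "relational knowledge" from the title: a relation type that links a passage word to a word in the question or answer. The sketch below is hypothetical and does not reproduce `src/preprocess.py`. It assumes the ConceptNet 5 assertion dump is tab-separated as (edge URI, relation, start node, end node, JSON metadata), keeps only English-to-English edges, checks one direction only, and takes the first matching relation when several exist.

```python
from typing import Dict, Iterable, List, Optional, Tuple

RelationTable = Dict[Tuple[str, str], str]


def parse_concept(uri: str) -> Optional[Tuple[str, str]]:
    """'/c/en/ice_cream/n' -> ('en', 'ice cream'); None for anything else."""
    parts = uri.split("/")
    if len(parts) < 4 or parts[1] != "c":
        return None
    return parts[2], parts[3].replace("_", " ")


def build_relation_table(lines: Iterable[str]) -> RelationTable:
    """Map (start term, end term) to a relation name such as 'RelatedTo'."""
    table: RelationTable = {}
    for line in lines:
        fields = line.rstrip("\n").split("\t")
        if len(fields) < 4:
            continue
        relation, start, end = fields[1], parse_concept(fields[2]), parse_concept(fields[3])
        if start is None or end is None:
            continue
        if start[0] == end[0] == "en":
            # '/r/RelatedTo' -> 'RelatedTo'; keep the first relation seen for a pair.
            table.setdefault((start[1], end[1]), relation.split("/", 2)[-1])
    return table


def relation_features(passage: List[str], other: List[str], table: RelationTable) -> List[str]:
    """For each passage token, the first relation to any token of `other`, else 'none'."""
    feats = []
    for p in passage:
        rel = "none"
        for o in other:
            key = (p.lower(), o.lower())
            if key in table:
                rel = table[key]
                break
        feats.append(rel)
    return feats
```

In a model, each relation name would then be mapped to a learned embedding and attached to the passage word.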

The official dataset can be downloaded from HiDrive.

We convert the original XML data to JSON with xml2json, e.g. for the test set: `./xml2json.py --pretty --strip_text -t xml2json -o test-data.json test-data.xml`
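For readers who want to inspect the data without xml2json, the standard library can do a similar conversion. This sketch assumes the MCScript-style layout `<instance id>` → `<text>` and `<questions>/<question id text>/<answer id text correct>`; its output schema is our own and is not what `src/preprocess.py` expects, so use the xml2json command above for the actual pipeline.

```python
import json
import sys
import xml.etree.ElementTree as ET


def convert(xml_text: str) -> list:
    """Flatten the assumed instance/question/answer layout into plain dicts."""
    root = ET.fromstring(xml_text)
    instances = []
    for inst in root.iter("instance"):
        text_el = inst.find("text")
        questions = []
        for q in inst.iter("question"):
            answers = []
            for a in q.findall("answer"):
                flag = a.get("correct")
                answers.append({
                    "id": a.get("id"),
                    "text": a.get("text"),
                    # None when the label is absent (e.g. unlabeled test data).
                    "correct": None if flag is None else flag == "True",
                })
            questions.append({"id": q.get("id"), "text": q.get("text"), "answers": answers})
        instances.append({
            "id": inst.get("id"),
            "text": (text_el.text or "").strip() if text_el is not None else "",
            "questions": questions,
        })
    return instances


if __name__ == "__main__" and len(sys.argv) == 3:
    # Usage: python3 convert_sketch.py test-data.xml test-data-sketch.json
    with open(sys.argv[1], encoding="utf-8") as f:
        data = convert(f.read())
    with open(sys.argv[2], "w", encoding="utf-8") as f:
        json.dump(data, f, indent=2)
```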

Train the model with `python3 src/main.py --gpu 0`. Accuracy on the development set will be approximately 83% after 50 epochs.
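The accuracy figures above count a question as correct when the highest-scoring candidate answer is the gold one. A minimal sketch of that computation, assuming per-question candidate scores and a gold answer index (the dictionary layout is hypothetical, not the repository's output format):

```python
from typing import Dict, List


def accuracy(scores: Dict[str, List[float]], gold: Dict[str, int]) -> float:
    """scores: question id -> one score per candidate; gold: question id -> correct index."""
    correct = 0
    for qid, cand_scores in scores.items():
        # Predict the candidate with the highest score (first one on ties).
        predicted = max(range(len(cand_scores)), key=cand_scores.__getitem__)
        correct += predicted == gold[qid]
    return correct / len(scores)
```

For example, three questions with one wrong prediction give an accuracy of 2/3.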

Following the above instructions, you will get a model with ~81.5% accuracy on the test set. For our official submission (~83.95% accuracy), we use two additional techniques:

- Pretrain our model on the RACE dataset for 10 epochs.
- Train 9 models with different random seeds and ensemble their outputs.
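One common way to ensemble several runs is to average their per-answer probabilities and then take the best candidate. The sketch below assumes simple probability averaging (majority voting is another option); the README does not specify the exact rule, and the input layout is hypothetical.

```python
from statistics import mean
from typing import Dict, List

Outputs = Dict[str, List[float]]  # question id -> probability per candidate answer


def ensemble(model_outputs: List[Outputs]) -> Outputs:
    """Average each candidate's probability over all models (e.g. 9 seeds)."""
    first = model_outputs[0]
    return {
        qid: [mean(run[qid][i] for run in model_outputs) for i in range(len(first[qid]))]
        for qid in first
    }


def predict(averaged: Outputs) -> Dict[str, int]:
    """Pick the candidate with the highest averaged probability."""
    return {qid: max(range(len(p)), key=p.__getitem__) for qid, p in averaged.items()}
```

Averaging probabilities lets a confident minority of models outweigh several uncertain ones, which plain voting cannot do.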