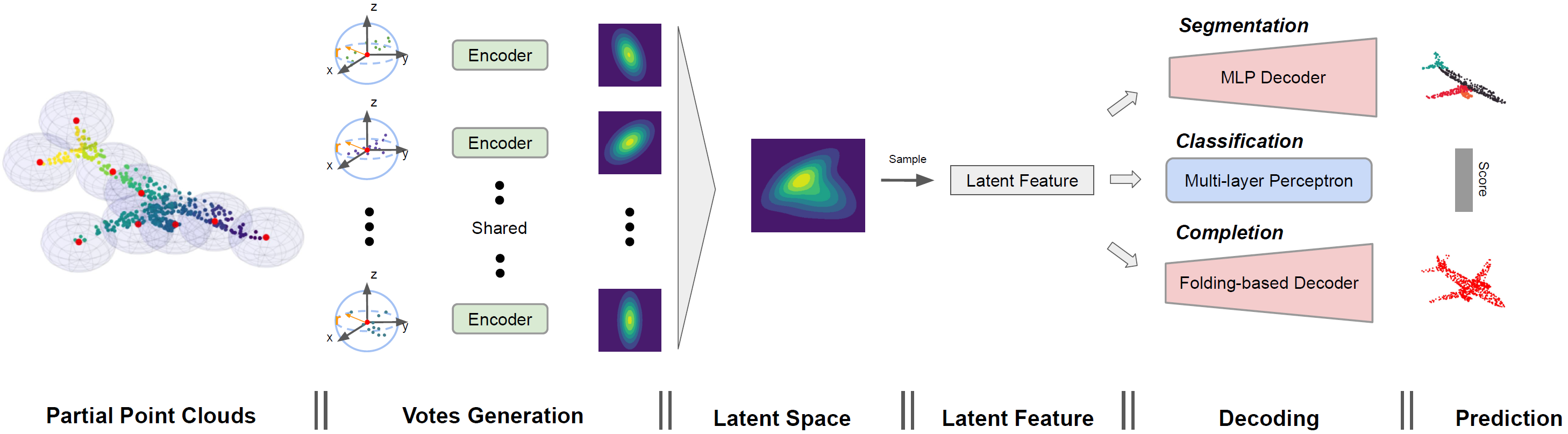

This paper proposes a general model for partial point cloud analysis in which the latent feature encoding a complete point cloud is inferred by applying a local point set voting strategy. In particular, each local point set constructs a vote that corresponds to a distribution in the latent space, and the optimal latent feature is the one with the highest probability. We show that the proposed method achieves state-of-the-art performance on shape classification, part segmentation, and point cloud completion.

[paper]

[suppl]
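To make the voting idea concrete, here is a minimal sketch. It assumes that each vote is an independent diagonal Gaussian over the latent space, which the summary above does not state. Under that assumption the product of the votes is again Gaussian, and its mode is the precision-weighted mean of the vote means. The function name `fuse_votes` is hypothetical and is not part of this repository.

```python
from typing import List, Sequence


def fuse_votes(means: Sequence[Sequence[float]],
               variances: Sequence[Sequence[float]]) -> List[float]:
    """Return the latent vector that maximizes the product of Gaussian votes.

    Assumption: vote i is a diagonal Gaussian N(means[i], variances[i]).
    The maximizer is the precision-weighted mean, computed per dimension.
    """
    if not means or len(means) != len(variances):
        raise ValueError("need one variance vector per mean vector")
    dim = len(means[0])
    fused = []
    for d in range(dim):
        # Precision (inverse variance) of every vote along dimension d.
        precisions = [1.0 / var[d] for var in variances]
        weighted = sum(p * mu[d] for p, mu in zip(precisions, means))
        fused.append(weighted / sum(precisions))
    return fused


# Two votes in a 2-D latent space. In the second dimension the second vote
# has the smaller variance, so it pulls the result toward its own mean.
votes_mu = [[0.0, 1.0], [1.0, 3.0]]
votes_var = [[1.0, 1.0], [1.0, 0.25]]
print(fuse_votes(votes_mu, votes_var))  # prints [0.5, 2.6]
```

Votes with small variance dominate the fused result, which is one way to read "the optimal latent feature is the one with the highest probability".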

- PyTorch 1.5.0

- PyTorch Geometric

- CUDA 10.1

- TensorBoard (optional, for visualizing the training process)

- Open3D (optional, for visualizing point clouds)
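A quick way to see which of the packages listed above are present is sketched below. It only checks whether each module can be located and does not verify the CUDA version. The import names (`torch`, `torch_geometric`, `tensorboard`, `open3d`) are the usual ones for these packages; the helper names are illustrative.

```python
import importlib.util
from importlib import metadata

# Import names of the listed packages (PyTorch Geometric imports as torch_geometric).
REQUIRED = ("torch", "torch_geometric")
OPTIONAL = ("tensorboard", "open3d")


def is_installed(module_name: str) -> bool:
    """True if the top-level module can be located, without importing it."""
    return importlib.util.find_spec(module_name) is not None


def torch_version():
    """Installed PyTorch version string, or None when PyTorch is absent."""
    try:
        return metadata.version("torch")
    except metadata.PackageNotFoundError:
        return None


def check_environment() -> dict:
    """Presence of each required/optional module plus the PyTorch version."""
    status = {name: is_installed(name) for name in REQUIRED + OPTIONAL}
    status["torch_version"] = torch_version()
    return status


for key, value in check_environment().items():
    print(f"{key}: {value}")
```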

.

├── data_root

│ ├── ModelNet40 (dataset)

│ ├── ShapeNet_normal (dataset)

│ └── completion3D (dataset)

│

├── modelnet

│ ├── train_modelnet.sh

│ ├── evaluate_modelnet.sh

│ └── tensorboard.sh

│

├── shapenet_seg

│ ├── train_shapenet.sh

│ ├── evaluate_shapenet.sh

│ └── tensorboard.sh

│

├── completion3D

│ ├── train_completion3D.sh

│ ├── evaluate_completion3D.sh

│ └── tensorboard.sh

│

├── utils

│ ├── class_completion3D.py

│ ├── main.py

│ ├── model_utils.py

│ └── models.py

│

├── visulaization

│ ├── visualize_part_segmentation.py

│ └── visualize_point_cloud_completion.py

│

├── demo

│ ├── point_cloud_completion_demo.py

│ └── visualize.py

│

└── readme.md

The ModelNet40 (415 MB) dataset is used for the shape classification task. We provide a pretrained model whose accuracy is 92.0% on complete point clouds and 85.8% on partial point clouds. To evaluate it, set the `--checkpoint` flag in `evaluate_modelnet.sh` to the path of the pretrained model.

- Enter `modelnet/`: `cd modelnet/`

- Train the model: `./train_modelnet.sh`

- Visualize the training process in TensorBoard: `./tensorboard.sh`

- Evaluate the trained model: `./evaluate_modelnet.sh`. Make sure that the parameters used for evaluation are consistent with those used during training.
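Since evaluation parameters must match the training ones, a small comparison of the two scripts can catch mismatches early. The sketch below assumes the scripts pass options as `--flag value` or `--flag=value`; `parse_flags` and `mismatched_flags` are hypothetical helpers, and `--example_size` is a made-up flag used only for illustration.

```python
import shlex


def parse_flags(script_text: str) -> dict:
    """Collect `--flag value` pairs from the text of a shell script.

    Flags without a value (switches) are stored as True.
    """
    # Join backslash-continued lines, then tokenize roughly like a shell,
    # dropping `#` comments such as the shebang line.
    tokens = shlex.split(script_text.replace("\\\n", " "), comments=True)
    flags = {}
    for i, token in enumerate(tokens):
        if not token.startswith("--"):
            continue
        if "=" in token:
            name, value = token.split("=", 1)
            flags[name] = value
            continue
        nxt = tokens[i + 1] if i + 1 < len(tokens) else None
        flags[token] = nxt if nxt is not None and not nxt.startswith("--") else True
    return flags


def mismatched_flags(train_text: str, eval_text: str) -> dict:
    """Flags present in both scripts whose values differ."""
    train, evaluate = parse_flags(train_text), parse_flags(eval_text)
    return {k: (train[k], evaluate[k])
            for k in train.keys() & evaluate.keys() if train[k] != evaluate[k]}


# Illustrative script contents; `--example_size` is not a real flag of this project.
train_script = "python3 script.py \\\n  --categories Chair \\\n  --example_size 8\n"
eval_script = "python3 script.py --categories Chair --example_size 16\n"
print(mismatched_flags(train_script, eval_script))
```

In practice the two strings would come from reading `train_modelnet.sh` and `evaluate_modelnet.sh`; flags that appear in only one script (such as `--checkpoint`) are ignored.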

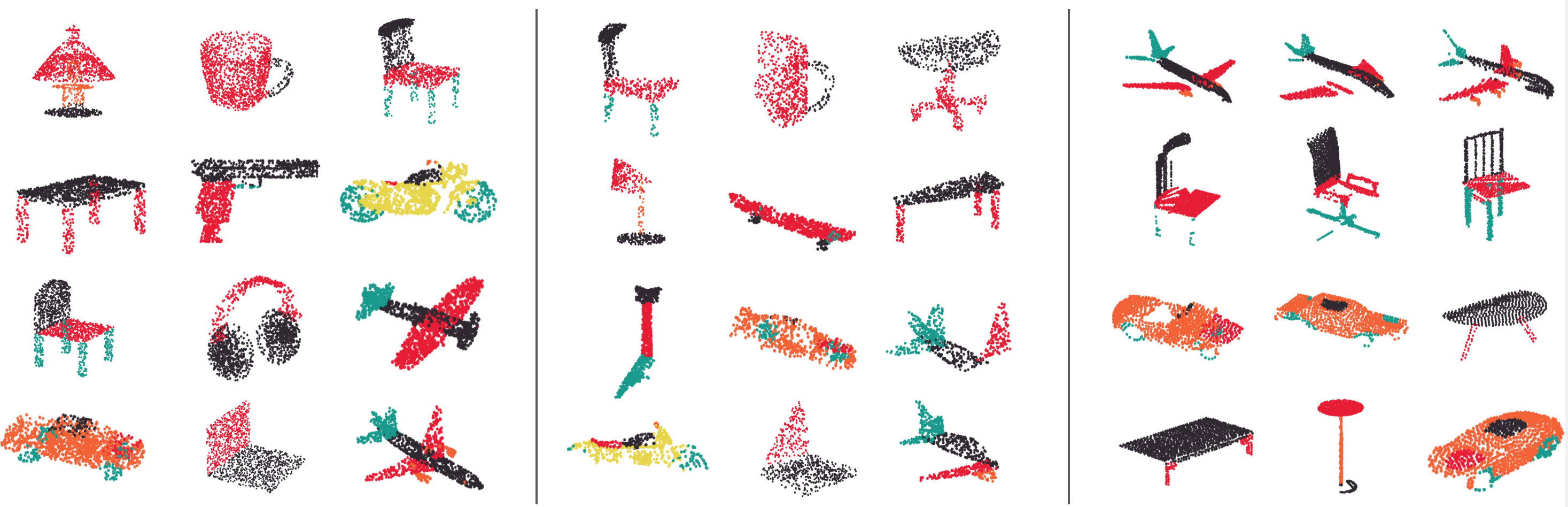

The ShapeNet (674 MB) dataset is used for the part segmentation experiments. You can set `--categories` in `train_shapenet.sh` to specify which object categories to train on.

We provide a pretrained model whose class mean IoU (mIoU) is 78.3% on complete point clouds and 78.3% on partial point clouds. To evaluate it, set the `--checkpoint` flag in `evaluate_shapenet.sh` to the path of the pretrained model.

- Enter `shapenet_seg/`: `cd shapenet_seg/`

- Train the model: `./train_shapenet.sh`

- Visualize the training process in TensorBoard: `./tensorboard.sh`

- Evaluate your trained model: `./evaluate_shapenet.sh`. Make sure the parameters in `evaluate_shapenet.sh` are consistent with those in `train_shapenet.sh`. Sample predicted part segmentation results are saved into `shapenet_seg/checkpoint/{model_name}/eval_sample_results/`.

- Visualize sample part segmentation results. After evaluation, three `.npy` files are saved for each sample: `pos_{idx}.npy` contains the input point cloud, `pred_{idx}.npy` contains the predicted part labels, and `label_{idx}.npy` contains the ground-truth labels. To view a sample, run `cd visulaization/` followed by `python3 visualize_part_segmentation.py --model_name {model_name} --idx {idx}`.
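The saved predictions and ground-truth labels can also be scored by hand. The sketch below computes the mean part IoU of a single shape under one common convention (a part absent from both prediction and ground truth counts as IoU 1.0). That convention, and the assumption that the files store integer part ids, are not confirmed by this repository, and `shape_iou` is a hypothetical helper.

```python
from typing import Sequence


def shape_iou(pred: Sequence[int], label: Sequence[int],
              parts: Sequence[int]) -> float:
    """Mean IoU over the part ids in `parts` for a single shape.

    Convention (an assumption): a part that appears in neither the
    prediction nor the ground truth contributes an IoU of 1.0.
    """
    if len(pred) != len(label):
        raise ValueError("pred and label must have the same number of points")
    ious = []
    for part in parts:
        inter = sum(1 for p, g in zip(pred, label) if p == part and g == part)
        union = sum(1 for p, g in zip(pred, label) if p == part or g == part)
        ious.append(1.0 if union == 0 else inter / union)
    return sum(ious) / len(ious)


# Four points, three candidate parts; part 2 is absent from both arrays.
pred = [0, 0, 1, 1]
label = [0, 1, 1, 1]
print(round(shape_iou(pred, label, parts=[0, 1, 2]), 4))
```

With NumPy available, the inputs could come from `numpy.load(...)` on `pred_{idx}.npy` and `label_{idx}.npy`, converted with `.tolist()`.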

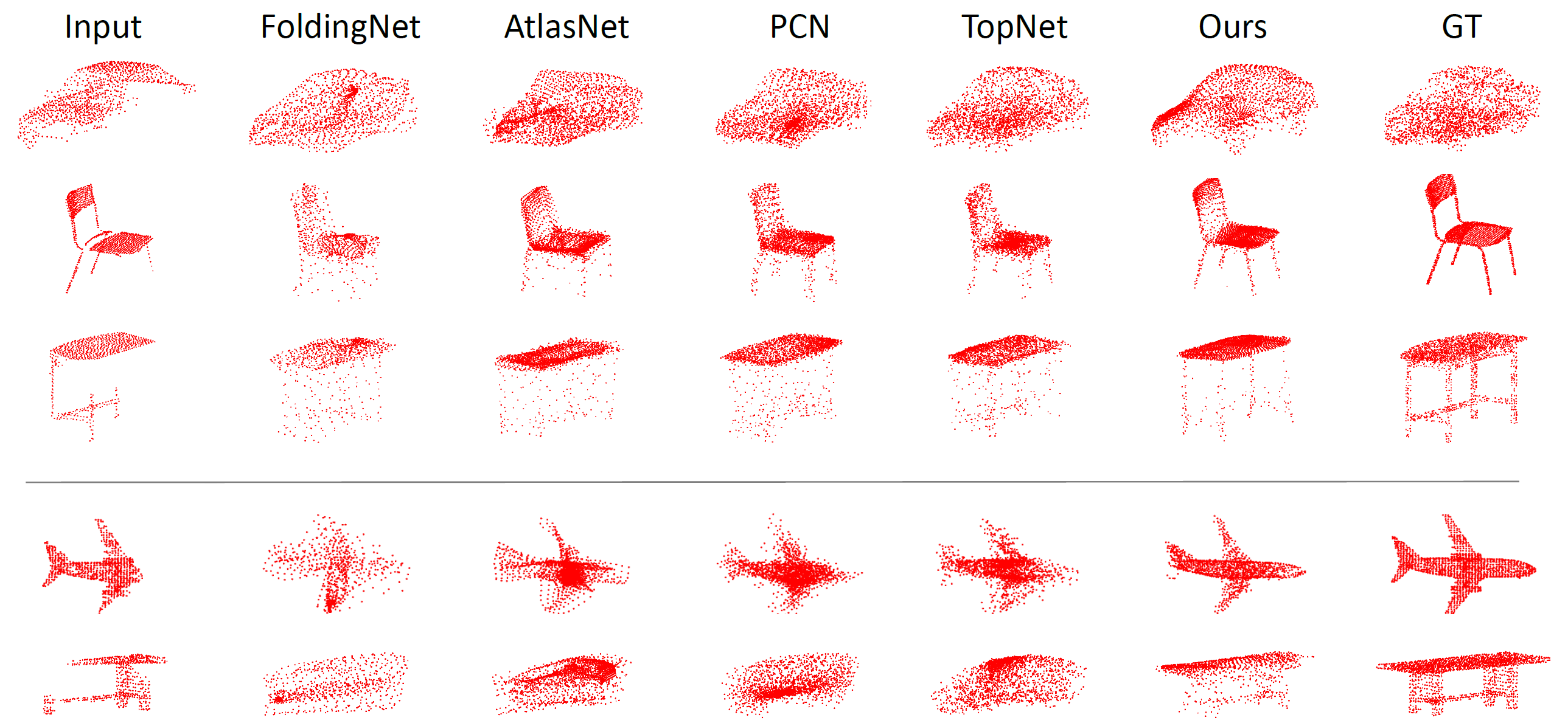

The Completion3D (1.5 GB) dataset is used for the point cloud completion experiments. Specifically, partial point clouds are taken as inputs and the goal is to infer complete point clouds. Note that synthetic partial point clouds are used during training. Download the dataset and save it to `data_root/`. You can set `--categories` in `train_completion3D.sh` to specify which object category or categories to train on.

- Enter `completion3D/`: `cd completion3D/`

- Train the model: `./train_completion3D.sh`

- Evaluate the trained model: `./evaluate_completion3D.sh`. Make sure the parameters used for evaluation are consistent with those used during training. Sample predicted completion results are saved into `completion3D/checkpoint/{model_name}/eval_sample_results/`.

- Visualize point cloud completion results. After evaluation, four `.npy` files are saved for each sample: `pos_{idx}.npy` contains the complete point cloud, `pred_{idx}.npy` contains the predicted complete point cloud, `pos_observed_{idx}.npy` contains the observed partial point cloud, and `pred_diverse_{idx}.npy` contains a diverse predicted completion. To view a sample, run `cd visulaization/` followed by `python3 visualize_point_cloud_completion.py --model_name {model_name} --idx {idx}`.
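Before visualizing a sample, it can help to confirm that all four result files exist. The following sketch builds the expected file names from the patterns described above and reports which ones are present. `sample_result_files` is a hypothetical helper, and the default root assumes the script is run from the repository root.

```python
from pathlib import Path

# File name patterns taken from the evaluation output described above.
RESULT_PATTERNS = (
    "pos_{idx}.npy",
    "pred_{idx}.npy",
    "pos_observed_{idx}.npy",
    "pred_diverse_{idx}.npy",
)


def sample_result_files(model_name: str, idx,
                        root: Path = Path("completion3D/checkpoint")) -> dict:
    """Map each expected result file name for one sample to whether it exists."""
    result_dir = Path(root) / model_name / "eval_sample_results"
    status = {}
    for pattern in RESULT_PATTERNS:
        name = pattern.format(idx=idx)
        status[name] = (result_dir / name).is_file()
    return status


# "my_model" is a placeholder for the {model_name} used during evaluation.
for name, present in sample_result_files("my_model", 0).items():
    print(f"{name}: {'found' if present else 'missing'}")
```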

Here we provide a quick demo for point cloud completion. Specifically, the pretrained model (pretrained only on cars from ShapeNet, with input cars transformed to the center of their bounding boxes) is used to complete partial point clouds of vehicles generated from KITTI. The partial point cloud generation process can be found here. Note that input point clouds should be in `.npy` format with shape (N, 3).

For example, if your input point clouds are in `demo/demo_inputs/*.npy` and the pretrained model is at `demo/model_car.pth`, run the following commands:

    cd demo/
    python3 point_cloud_completion_demo.py \
    --data_path demo_inputs \
    --checkpoint model_car.pth

After running, the predicted completion results will be saved in `demo/demo_results/`. Then visualize the results by running:

    python3 visualize.py \
    --data_path demo_inputs/{Input partial point clouds}.npy

`--data_path` can be set either to a single point cloud, such as `demo_inputs/000000_car_point_1.npy`, or to a directory containing input partial point clouds, such as `demo_inputs`. In the latter case, a random sample from `demo_inputs/` will be selected for visualization.
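Because the demo expects `.npy` inputs of shape (N, 3), a lightweight pre-check can read the shape from the file header without loading the point data. The sketch below relies on the documented NPY file layout and uses only the standard library; when NumPy is available, `numpy.load(path).shape` is the more usual route. The helper names are hypothetical.

```python
import ast
import struct

NPY_MAGIC = b"\x93NUMPY"


def npy_shape(path) -> tuple:
    """Read the array shape from a .npy header without loading the data."""
    with open(path, "rb") as f:
        if f.read(6) != NPY_MAGIC:
            raise ValueError(f"{path} is not a .npy file")
        major, _minor = f.read(2)
        # Format version 1 stores the header length in 2 bytes, later versions in 4.
        if major == 1:
            (header_len,) = struct.unpack("<H", f.read(2))
        else:
            (header_len,) = struct.unpack("<I", f.read(4))
        # The header is a Python dict literal with 'descr', 'fortran_order', 'shape'.
        header = ast.literal_eval(f.read(header_len).decode("latin1").strip())
    return tuple(header["shape"])


def is_valid_demo_input(path) -> bool:
    """True if the file holds a 2-D array of shape (N, 3) with N >= 1."""
    shape = npy_shape(path)
    return len(shape) == 2 and shape[0] >= 1 and shape[1] == 3


# Example call (the file must exist on disk):
# is_valid_demo_input("demo_inputs/000000_car_point_1.npy")
```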

If you find this project useful in your research, please consider citing:

@article{pointsetvoting,

title={Point Set Voting for Partial Point Cloud Analysis},

author={Zhang, Junming and Chen, Weijia and Wang, Yuping and Vasudevan, Ram and Johnson-Roberson, Matthew},

journal={IEEE Robotics and Automation Letters},

year={2021}

}