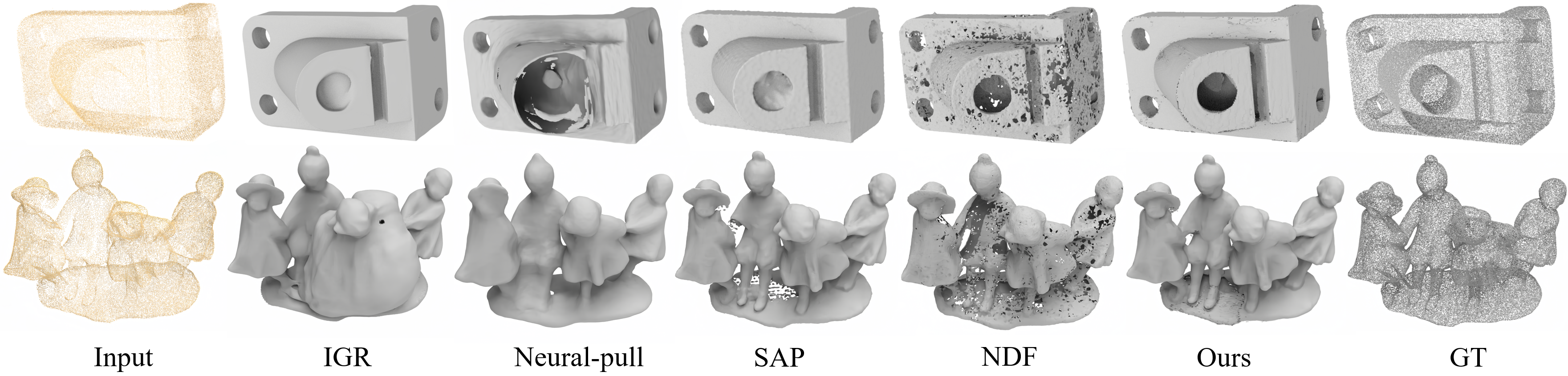

CAP-UDF: Learning Unsigned Distance Functions Progressively from Raw Point Clouds with Consistency-Aware Field Optimization

Junsheng Zhou* · Baorui Ma* · Shujuan Li · Yu-Shen Liu · Yi Fang · Zhizhong Han

(* Equal Contribution)

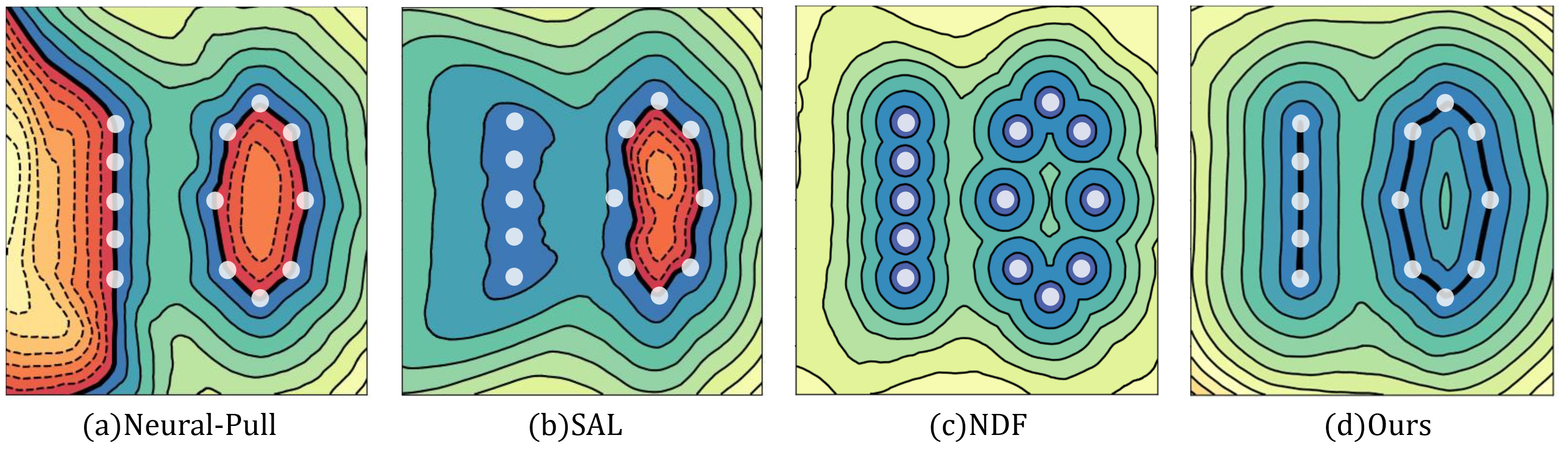

We propose to learn a consistency-aware unsigned distance field to represent shapes and scenes with arbitrary architecture. We show a 2D case of learning the distance field from a sparse 2D point cloud.
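To see why a learned field is useful, compare it with the unsigned distance to the raw point set. That distance is zero only at the samples themselves and has gaps between them. The sketch below computes it for a toy 2D cloud of 8 points on the unit circle. It is not CAP-UDF's learned field, only a brute-force baseline; `point_cloud_udf` is a hypothetical helper name.

```python
import numpy as np

def point_cloud_udf(queries: np.ndarray, points: np.ndarray) -> np.ndarray:
    """Unsigned distance from each query to its nearest point in `points`.

    queries: (M, D) array, points: (N, D) array -> (M,) array of distances.
    Brute force O(M * N), which is fine for a toy 2D example.
    """
    # Pairwise differences (M, N, D), then Euclidean norms (M, N).
    diff = queries[:, None, :] - points[None, :, :]
    dists = np.linalg.norm(diff, axis=-1)
    # An unsigned field only measures "how far", never "inside or outside".
    return dists.min(axis=1)

# A sparse 2D point cloud: 8 samples on the unit circle.
angles = np.linspace(0.0, 2.0 * np.pi, 8, endpoint=False)
cloud = np.stack([np.cos(angles), np.sin(angles)], axis=1)

# Evaluate on a 31 x 31 grid; the field vanishes only at the 8 samples.
xs = np.linspace(-1.5, 1.5, 31)
grid = np.stack(np.meshgrid(xs, xs), axis=-1).reshape(-1, 2)
field = point_cloud_udf(grid, cloud)
print(field.min(), field.max())
```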

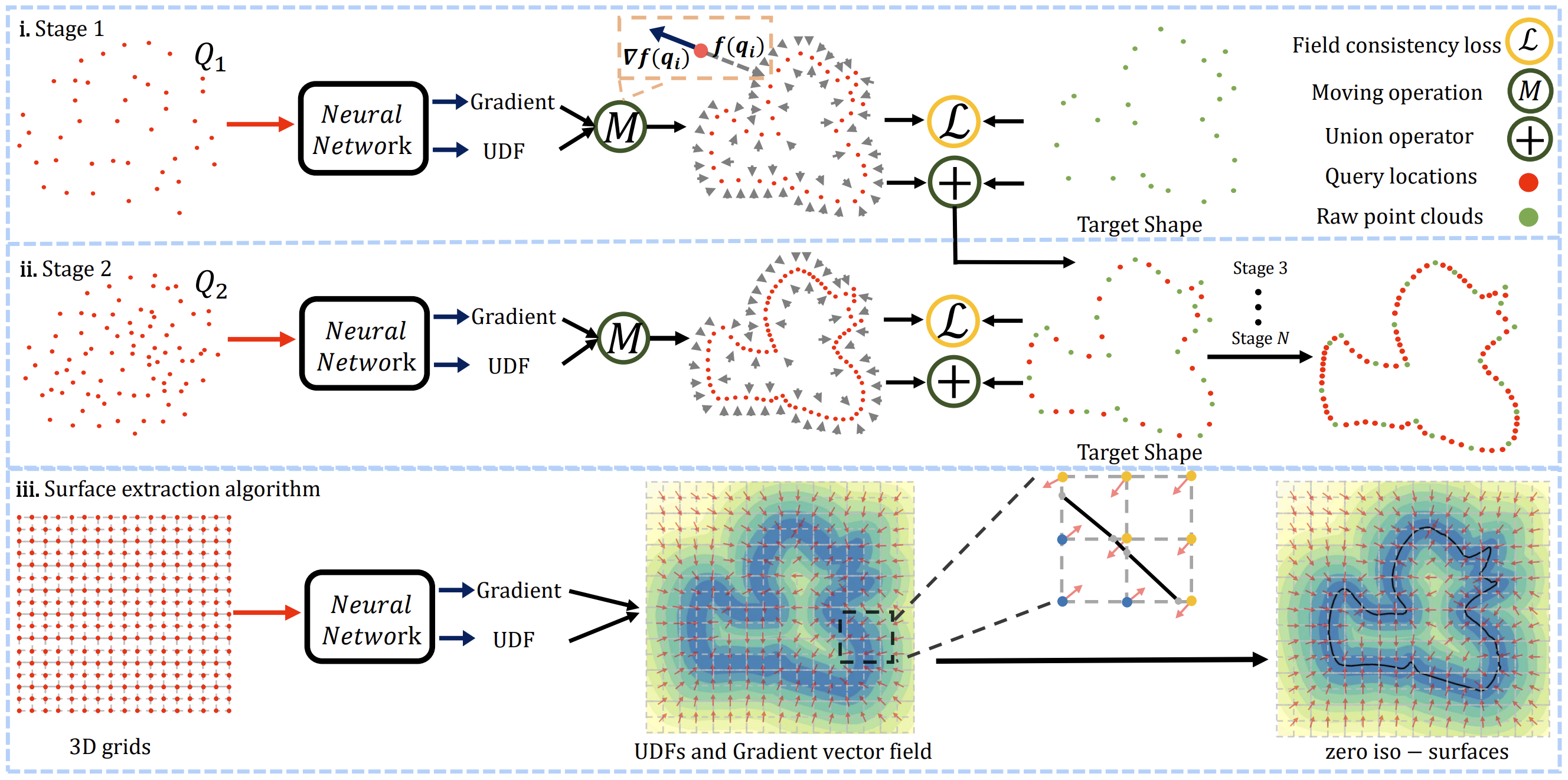

Overview of our method. The CAP-UDF is designed to reconstruct surfaces from raw point clouds by learning consistency-aware UDFs. Given a 3D query
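CAP-UDF learns the field by pulling query points onto the surface, in the spirit of the Neural-Pull work listed below. A query q is moved against the normalized gradient of the UDF f by a step of f(q), i.e. q' = q - f(q) ∇f(q) / ‖∇f(q)‖. The sketch below applies this update to an analytic UDF of the unit circle. Here that UDF stands in, as an assumption for illustration only, for the trained network. In the actual method, f is a neural network whose gradient comes from automatic differentiation, and the training losses are not shown here. The finite-difference gradient is a stand-in for autograd.

```python
import numpy as np

def circle_udf(q: np.ndarray) -> np.ndarray:
    """Analytic unsigned distance to the unit circle (stand-in for a trained UDF)."""
    return np.abs(np.linalg.norm(q, axis=-1) - 1.0)

def numerical_grad(f, q: np.ndarray, eps: float = 1e-5) -> np.ndarray:
    """Central finite-difference gradient of a scalar field f at points q (M, D)."""
    grad = np.zeros_like(q)
    for d in range(q.shape[1]):
        step = np.zeros(q.shape[1])
        step[d] = eps
        grad[:, d] = (f(q + step) - f(q - step)) / (2.0 * eps)
    return grad

def pull_to_surface(f, q: np.ndarray) -> np.ndarray:
    """Move each query against the normalized gradient by its predicted distance."""
    dist = f(q)[:, None]
    grad = numerical_grad(f, q)
    direction = grad / (np.linalg.norm(grad, axis=1, keepdims=True) + 1e-12)
    return q - dist * direction

queries = np.array([[2.0, 0.0], [0.0, 0.3], [-0.5, -0.5]])
pulled = pull_to_surface(circle_udf, queries)
print(np.linalg.norm(pulled, axis=1))  # all close to 1.0: queries land on the circle
```

The update is only well defined where the gradient exists. On the surface itself and at the circle's centre, the UDF is not differentiable, so the toy queries avoid those points.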

Please also check out the following works, which inspired us a lot:

- Baorui Ma et al. - Neural-Pull: Learning Signed Distance Functions from Point Clouds by Learning to Pull Space onto Surfaces (ICML 2021)

- Baorui Ma et al. - Surface Reconstruction from Point Clouds by Learning Predictive Context Priors (CVPR 2022)

- Baorui Ma et al. - Reconstructing Surfaces for Sparse Point Clouds with On-Surface Priors (CVPR 2022)

Our code is implemented in Python 3.8, PyTorch 1.11.0 and CUDA 11.3.

- Install Python dependencies

```
conda create -n capudf python=3.8
conda activate capudf
conda install pytorch torchvision torchaudio cudatoolkit=11.3 -c pytorch
pip install tqdm pyhocon==0.3.57 trimesh PyMCubes scipy point_cloud_utils==0.29.7
```

- Compile C++ extensions

```
cd extensions/chamfer_dist
python setup.py install
```
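You can optionally run a quick sanity check after installation to confirm that the Python packages from the install commands are visible in the active environment. This is a minimal sketch. The names in `REQUIRED` are the pip distribution names from those commands, and some Python versions may not normalize `_` and `-` when looking them up. The compiled `chamfer_dist` extension is not checked, because its distribution name is not stated here.

```python
from importlib import metadata

# Distribution names taken from the install commands above.
REQUIRED = ["torch", "tqdm", "pyhocon", "trimesh", "PyMCubes", "scipy", "point_cloud_utils"]

def installed_versions(packages):
    """Map each distribution name to its installed version, or None if missing."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions

if __name__ == "__main__":
    for name, version in installed_versions(REQUIRED).items():
        print(f"{name:20s} {version or 'NOT INSTALLED'}")
```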

We provide the processed data for the ShapeNetCars, 3DScenes and SRB datasets here. Unzip it to the ./data folder. The data is organised as follows:

```
data/
├── shapenetCars/
│   ├── input
│   ├── ground_truth
│   └── query_data
├── 3dscene/
│   ├── input
│   ├── ground_truth
│   └── query_data
└── srb/
    ├── input
    ├── ground_truth
    └── query_data
```

We provide the complete 3DScenes and SRB data, plus two demo shapes from ShapeNetCars. The full ShapeNetCars set will be uploaded soon.
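After unzipping, you can check that the expected folders are in place with a short script like the one below. It is a minimal sketch that only tests for the directory names in the layout above. `missing_folders` is a hypothetical helper, and it does not validate file contents.

```python
from pathlib import Path

DATASETS = ["shapenetCars", "3dscene", "srb"]
SUBFOLDERS = ["input", "ground_truth", "query_data"]

def missing_folders(root="data"):
    """Return the expected dataset sub-folders that do not exist under `root`."""
    root = Path(root)
    return [
        str(root / ds / sub)
        for ds in DATASETS
        for sub in SUBFOLDERS
        if not (root / ds / sub).is_dir()
    ]

if __name__ == "__main__":
    missing = missing_folders("data")
    print("layout OK" if not missing else "missing:\n" + "\n".join(missing))
```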

To train CAP-UDF to reconstruct surfaces from a single point cloud, run:

- ShapeNetCars

```
python run.py --gpu 0 --conf confs/shapenetCars.conf --dataname 3e5e4ff60c151baee9d84a67fdc5736 --dir 3e5e4ff60c151baee9d84a67fdc5736
```

- 3DScene

```
python run.py --gpu 0 --conf confs/3dscene.conf --dataname lounge_1000 --dir lounge_1000
```

- SRB

```
python run.py --gpu 0 --conf confs/srb.conf --dataname gargoyle --dir gargoyle
```

You can find the generated mesh and the log in ./outs.
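Each command above reconstructs one shape. To train on every shape in a dataset, you can generate the same commands in a loop. This sketch assumes that each file in the dataset's `input` folder is named `<dataname>.<ext>`, which is the convention stated for your own data below. It uses the file stem for both `--dataname` and `--dir`, as in the examples. `build_train_commands` and `DRY_RUN` are hypothetical names, and the script only prints the commands unless you switch `DRY_RUN` off.

```python
import subprocess
from pathlib import Path

SUPPORTED = {".ply", ".xyz", ".npy"}

def build_train_commands(conf, input_dir, gpu=0):
    """Build one run.py command per point cloud file in `input_dir`.

    Assumes each file is named <dataname>.<ext>; the file stem is used for
    both --dataname and --dir, matching the examples above.
    """
    commands = []
    for path in sorted(Path(input_dir).glob("*")):
        if path.suffix.lower() not in SUPPORTED:
            continue
        commands.append([
            "python", "run.py", "--gpu", str(gpu), "--conf", conf,
            "--dataname", path.stem, "--dir", path.stem,
        ])
    return commands

if __name__ == "__main__":
    DRY_RUN = True  # set to False to launch the runs one after another
    for cmd in build_train_commands("confs/srb.conf", "data/srb/input"):
        print(" ".join(cmd))
        if not DRY_RUN:
            subprocess.run(cmd, check=True)
```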

You can evaluate the reconstructed meshes and dense point clouds as follows:

- ShapeNetCars

```
python evaluation/shapenetCars/eval_mesh.py --conf confs/shapenetCars.conf --dataname 3e5e4ff60c151baee9d84a67fdc5736 --dir 3e5e4ff60c151baee9d84a67fdc5736
```

- 3DScene

```
python evaluation/3dscene/eval_mesh.py --conf confs/3dscene.conf --dataname lounge_1000 --dir lounge_1000
```

- SRB

```
python evaluation/srb/eval_mesh.py --conf confs/srb.conf --dataname gargoyle --dir gargoyle
```

To evaluate the generated dense point clouds, run each dataset's `eval_pc.py` instead of `eval_mesh.py`.
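A common metric for comparing a reconstruction with ground truth is the Chamfer distance. The sketch below implements one common definition, which averages nearest-neighbour Euclidean distances in both directions. The evaluation scripts may use a different normalisation, squared distances, or additional metrics, so numbers from this sketch are not necessarily comparable with theirs. The brute-force pairwise matrix is only practical for small point sets.

```python
import numpy as np

def chamfer_distance(a: np.ndarray, b: np.ndarray) -> float:
    """Symmetric Chamfer distance: mean nearest-neighbour L2 distance, both ways.

    a: (N, 3), b: (M, 3). Brute force, so keep N * M modest.
    """
    d = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)  # (N, M) distances
    # a -> b: for each point of a, its closest point in b; and vice versa.
    return float(0.5 * (d.min(axis=1).mean() + d.min(axis=0).mean()))

rng = np.random.default_rng(0)
pts = rng.random((100, 3))
print(chamfer_distance(pts, pts + 0.01))
```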

The following instructions explain how to train on your own data.

First, put your own point clouds in the ./data/owndata/input folder, organised as follows:

```
data/
└── owndata/
    └── input/
        └── (dataname).ply/xyz/npy
```

Supported point cloud formats are .ply, .xyz and .npy.
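Before training, you may want to check that your file loads as an (N, 3) array. The loader below is a minimal sketch for the three supported formats, not the repository's own reader. It assumes that .xyz files are whitespace-separated rows whose first three columns are x, y and z, and it drops any extra columns such as normals. It uses `trimesh` (already a dependency) for .ply. Some .ply files may load as a scene rather than a single point cloud or mesh, and this sketch does not handle that case.

```python
from pathlib import Path
import numpy as np

def load_point_cloud(path) -> np.ndarray:
    """Load an (N, 3) float array from a .npy, .xyz or .ply file."""
    path = Path(path)
    suffix = path.suffix.lower()
    if suffix == ".npy":
        points = np.load(path)
    elif suffix == ".xyz":
        # Whitespace-separated rows; ndmin=2 keeps a single point as shape (1, k).
        points = np.loadtxt(path, ndmin=2)
    elif suffix == ".ply":
        import trimesh  # installed with the dependencies above
        points = np.asarray(trimesh.load(path).vertices)
    else:
        raise ValueError(f"unsupported format: {suffix}")
    # Keep only x, y, z (drops extra columns such as normals).
    return np.asarray(points, dtype=np.float64)[:, :3]
```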

To train on your own data, run:

```
python run.py --gpu 0 --conf confs/base.conf --dataname (dataname) --dir (dataname)
```

Point cloud density varies across datasets and across your own data, so the hyperparameter `scale`, which controls the distance between the query points and the point cloud, has a very strong influence on the final result. To get better results, adjust this parameter for your data. As a reference, we use 0.25 * np.sqrt(POINT_NUM_GT / 20000), which works for most object-level reconstructions.
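The reference value grows with the square root of the point count, so quadrupling the number of points doubles `scale`. The sketch below evaluates the formula for a few point counts. It reads POINT_NUM_GT as the number of points in the point cloud, which is an assumption; check the code for its exact meaning. It uses `math.sqrt`, which is equivalent to `np.sqrt` for a single number. Where the value is set in the code or config is not stated here. `reference_scale` is a hypothetical helper name.

```python
import math

def reference_scale(num_points: int) -> float:
    """Reference value for `scale`: 0.25 * sqrt(POINT_NUM_GT / 20000)."""
    return 0.25 * math.sqrt(num_points / 20000)

for n in (20000, 80000, 5000):
    print(n, reference_scale(n))
# 20000 0.25
# 80000 0.5
# 5000 0.125
```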

If you find our code or paper useful, please consider citing:

```bibtex
@article{zhou2024cap-pami,
  title={CAP-UDF: Learning Unsigned Distance Functions Progressively from Raw Point Clouds with Consistency-Aware Field Optimization},
  author={Zhou, Junsheng and Ma, Baorui and Li, Shujuan and Liu, Yu-Shen and Fang, Yi and Han, Zhizhong},
  journal={IEEE Transactions on Pattern Analysis and Machine Intelligence},
  year={2024},
  publisher={IEEE}
}

@inproceedings{zhou2022capudf,
  title = {Learning Consistency-Aware Unsigned Distance Functions Progressively from Raw Point Clouds},
  author = {Zhou, Junsheng and Ma, Baorui and Liu, Yu-Shen and Fang, Yi and Han, Zhizhong},
  booktitle = {Advances in Neural Information Processing Systems (NeurIPS)},
  year = {2022}
}
```