This repo contains a finetuned CNN and a script for easy inference.

- install dependencies by either A) using the 'requirements.txt' or B) using the docker image.

- place new images in the folder 'VALIDATION_IMAGES/'. Note that images must not be in subfolders.

- run inference.py

- wait for the script to run - it'll keep you in the loop as to what it is currently doing.

- the results will be shown in the script output as well as in a file called 'validation_output.txt'. This file is formatted to comply with the instructions, thus no confidence etc. is provided.

Please note that the code is written to use a GPU if one is available and to fall back to the CPU otherwise. If this creates issues (there is a small chance that on macOS the specified PyTorch version might have trouble with CUDA), just replace line 20 in inference.py with "device = torch.device('cpu')".

Additionally, since solutions have to be shared on GitHub, and GitHub limits files to <100MB, I split the model weights into chunks using the code in "split_stitch.py". The chunks are stitched back together the first time "inference.py" is run. This should work without issue, but in case there is a problem, please contact me so I can provide a direct download link to the model on Google Drive.
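For readers curious how such a split-and-stitch step can work, here is a minimal sketch using only the standard library. It is not the code in "split_stitch.py"; the function names, the ".partNNN" naming scheme and the 90 MB chunk size are assumptions made for illustration.

```python
from pathlib import Path

# Assumed chunk size, chosen to stay below GitHub's 100 MB per-file limit.
CHUNK_SIZE = 90 * 1024 * 1024


def split_file(path, chunk_size=CHUNK_SIZE):
    """Write `path` as numbered chunk files (name.part000, ...) and return them."""
    path = Path(path)
    parts = []
    with path.open("rb") as src:
        index = 0
        while True:
            data = src.read(chunk_size)
            if not data:
                break
            part = path.with_name(f"{path.name}.part{index:03d}")
            part.write_bytes(data)
            parts.append(part)
            index += 1
    return parts


def stitch_file(path):
    """Rebuild `path` from its chunk files unless it already exists."""
    path = Path(path)
    if path.exists():
        return path  # already stitched on an earlier run
    # Zero-padded indices make lexicographic order equal to chunk order.
    parts = sorted(path.parent.glob(f"{path.name}.part*"))
    if not parts:
        raise FileNotFoundError(f"no chunks found for {path}")
    with path.open("wb") as dst:
        for part in parts:
            dst.write(part.read_bytes())
    return path
```

Checking for an existing file first is what makes the stitching a one-time cost: later runs simply reuse the rebuilt weights file.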

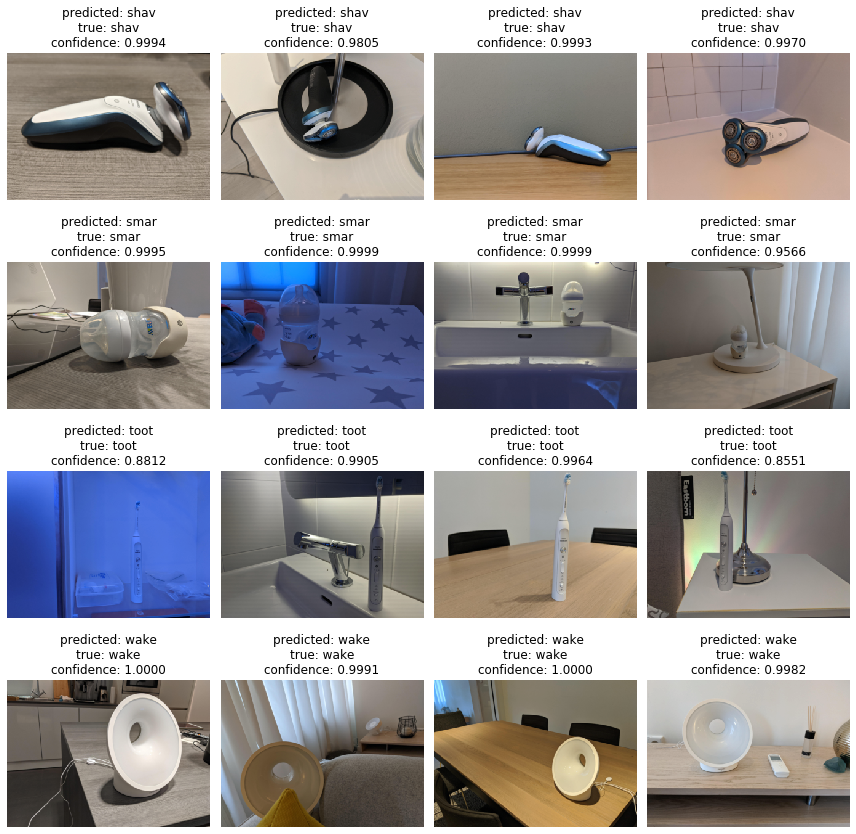

Finally, if you don't have any images of the four appliances ready, you can try out the code with the images in "EXAMPLE_VALIDATION_IMAGES/". Just copy them to "VALIDATION_IMAGES/" and run inference.py. Note that 3/4 of those images were used for training, so they do not reflect the model performance accurately.

Detailed information can be found here, but in a nutshell, 20 images each of 4 Philips appliances were provided as a training set to create an algorithm that can identify them in new photos. Each picture contains exactly one product, and the products are at different angles, appear in front of different backgrounds in different parts of the pictures, and take up varying amounts of space.

According to the material provided, the results will be evaluated in terms of accuracy (and not, say, log loss or AUC), and unlimited image augmentation was allowed.

I decided to finetune a CNN pre-trained on ImageNet. I tested a few VGG and ResNet versions, and VGG11 with batchnorm performed best on the validation data. Then I split off a held-out test set, and trained with a train/validation split. With the help of Google Images, I added a handful of extra images per class to the train set, although they differ noticeably from the original train set.
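A per-class split into train, validation and held-out test sets can be sketched as follows. The 60/20/20 fractions, the function name and the placeholder file names are assumptions for illustration; the actual proportions used are not stated here.

```python
import random
from collections import defaultdict


def stratified_split(samples, fractions=(0.6, 0.2, 0.2), seed=0):
    """Split (path, label) pairs into train/val/test, class by class.

    `fractions` is an assumed train/val/test ratio; the test set takes
    whatever remains after the train and validation shares.
    """
    by_class = defaultdict(list)
    for path, label in samples:
        by_class[label].append(path)

    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    train, val, test = [], [], []
    for label in sorted(by_class):
        paths = by_class[label]
        rng.shuffle(paths)
        n_train = round(fractions[0] * len(paths))
        n_val = round(fractions[1] * len(paths))
        train += [(p, label) for p in paths[:n_train]]
        val += [(p, label) for p in paths[n_train:n_train + n_val]]
        test += [(p, label) for p in paths[n_train + n_val:]]
    return train, val, test


# Placeholder data: 4 classes with 20 images each, as in the challenge.
samples = [(f"class_{c}/img_{i}.jpg", c) for c in range(4) for i in range(20)]
train, val, test = stratified_split(samples)
```

Splitting class by class keeps every product equally represented in each subset, which matters with only 20 images per class.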

For training, I added heavy image augmentation to the train set, and finetuned the CNN in multiple phases. In the first phase, the last fully connected layer was trained while the rest of the network was frozen. Then, the other fully connected layers were progressively added. Next, the last block of the VGG16 was progressively trained. And finally, both the last block and the fully connected layers were trained together. Additionally, for the last few epochs of each phase, the model was trained only on the original images and not on the extra images, to bias the model towards correctly recognising images that come from the original distribution rather than the noisier ones found online.
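The phase schedule described above can be sketched in plain Python as a list of "which layers are trainable" sets. The layer names are hypothetical, and the sketch assumes that the fully connected layers are frozen again while the last block is unfrozen on its own, which the description leaves open.

```python
# Hypothetical layer names; the real ones depend on the model definition.
FC_LAYERS = ["fc1", "fc2", "fc3"]                 # fully connected, first to last
LAST_BLOCK = ["conv_block5_a", "conv_block5_b"]   # last convolutional block


def finetuning_phases(fc_layers=FC_LAYERS, last_block=LAST_BLOCK):
    """Return, for each phase, the set of layer names that are trainable."""
    phases = []
    # Last FC layer first, then progressively add the earlier FC layers.
    for k in range(1, len(fc_layers) + 1):
        phases.append(set(fc_layers[-k:]))
    # Last conv block, unfrozen progressively from its final layer backwards
    # (assumption: the FC layers are frozen during these phases).
    for k in range(1, len(last_block) + 1):
        phases.append(set(last_block[-k:]))
    # Final phase: last block and FC layers trained together.
    phases.append(set(fc_layers) | set(last_block))
    return phases


def uses_extra_images(epoch, epochs_per_phase, original_only_epochs):
    """True while the extra web images are still part of the training set."""
    return epoch < epochs_per_phase - original_only_epochs
```

In PyTorch, applying one of these phases would typically mean setting `requires_grad` to `True` for the parameters of the listed layers and to `False` for all others before building the optimizer.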

According to my test set, this approach seemed to be surprisingly effective.

However, it appears that the background can often throw the model off. For instance, round features make the model think that it sees the bottle. Some of the strategies I discuss below might have been effective in mitigating this. One small thing I do at inference time is to also run the model on flipped versions of the images, since we know that products could appear in almost any orientation and that the products all look the same when mirrored.

The current approach seems to work well according to my test set. There were many cool ideas that I didn't pursue: approach 1 due to the small data set size, approach 2 because, although possible, I think it verges on cheating, and approaches 3 and 4 due to the computational and storage constraints I had.
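As an aside on the flipped-image inference mentioned above, here is a minimal sketch of the idea. It assumes horizontal mirroring only and that the class probabilities of both views are averaged; how inference.py actually combines the views is not stated here. Images are plain nested lists and `predict_proba` is a hypothetical stand-in for the model.

```python
def flip_horizontal(image):
    """Mirror an image given as a list of pixel rows."""
    return [list(reversed(row)) for row in image]


def predict_with_flip(predict_proba, image):
    """Average class probabilities over the image and its mirror image.

    `predict_proba` is any callable mapping an image to a list of class
    probabilities (a stand-in for the real model).
    """
    views = [image, flip_horizontal(image)]
    all_probs = [predict_proba(view) for view in views]
    n_classes = len(all_probs[0])
    mean = [
        sum(probs[c] for probs in all_probs) / len(all_probs)
        for c in range(n_classes)
    ]
    best = max(range(n_classes), key=mean.__getitem__)
    return best, mean
```

Averaging over both views makes the prediction independent of which way the product happens to face in the photo.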

Given the provided training images, we would expect that the objects in question might only make up a small part of the images and that they can appear in any location. Thus, finding the most interesting candidate regions first and then running a classification network on them might be more effective. However, I think such approaches would have required much more data. A paper that pursues such an approach for medical images can be found here, but they used more than 1 million images.

Generally, whoever did the most image augmentation will likely win the challenge. It would have been possible, for instance, to buy all four appliances and take a few dozen or a few hundred pictures of each, or to exhaustively search the internet.

Relatedly, since the products always look the same, it would have been possible to use the training images to cut out all the products and put them on a transparent background. Then, one could have written a script to take random background images (e.g. ImageNet, random Google searches, etc.) and superimpose the products on them, while adding a bit of noise to the product image to prevent overfitting.
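To make the compositing idea concrete, here is a toy sketch that pastes a cut-out onto a background at a random position. It was not part of this solution. It uses greyscale nested lists instead of a real image library, assumes the cut-out fits inside the background, and all names and the noise level are illustrative.

```python
import random


def composite(background, product, rng, noise=0.02):
    """Paste a product cut-out at a random position on a copy of `background`.

    background: 2D list of grey values in [0, 1].
    product:    2D list of (value, alpha) pairs; alpha 0 means transparent.
    Assumes the product is no larger than the background.
    """
    bg_h, bg_w = len(background), len(background[0])
    pr_h, pr_w = len(product), len(product[0])
    top = rng.randint(0, bg_h - pr_h)
    left = rng.randint(0, bg_w - pr_w)

    out = [row[:] for row in background]  # leave the input untouched
    for y in range(pr_h):
        for x in range(pr_w):
            value, alpha = product[y][x]
            # Jitter only the product pixels, then clamp to the valid range.
            value = min(1.0, max(0.0, value + rng.uniform(-noise, noise)))
            bg = out[top + y][left + x]
            # Standard alpha blend of product over background.
            out[top + y][left + x] = alpha * value + (1 - alpha) * bg
    return out, (top, left)
```

Calling this repeatedly with different backgrounds and a seeded `random.Random` would yield a reproducible stream of synthetic training images, with the paste position returned in case it is needed as a label.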

Those strategies would also have been quite effective, but given the file size restrictions of GitHub and my computational constraints, I had to forgo them.