This repository is the official PyTorch implementation of our AAAI-2022 paper, in which we propose DiffSinger (for Singing-Voice-Synthesis) and DiffSpeech (for Text-to-Speech).

In addition, a more detailed and improved code framework, which contains the implementations of FastSpeech 2, DiffSpeech, and our NeurIPS-2021 work PortaSpeech, is coming soon ✨ ✨ ✨.

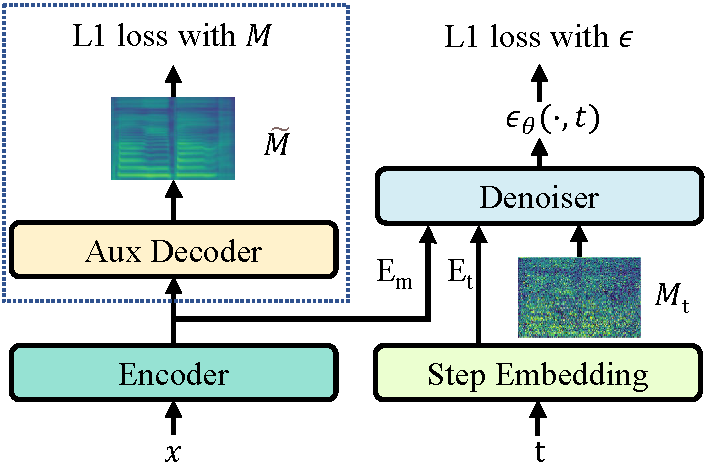

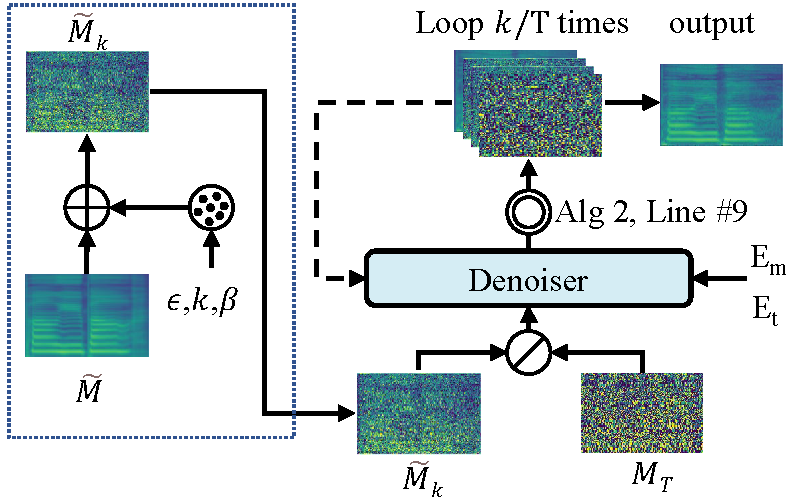

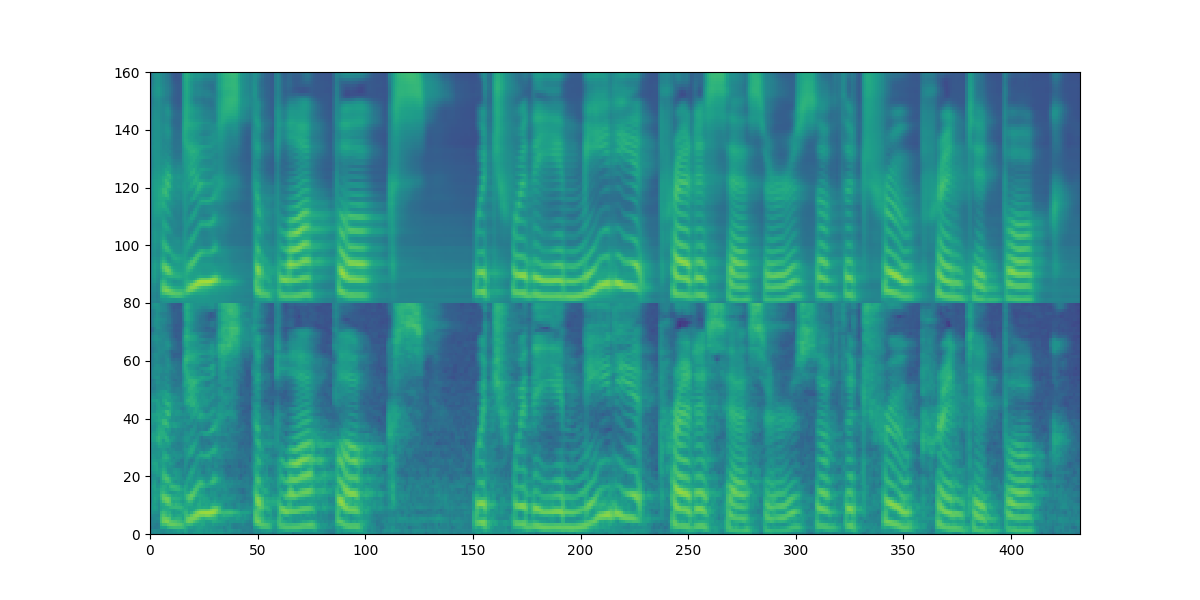

| DiffSinger/DiffSpeech at training | DiffSinger/DiffSpeech at inference |
|---|---|

🚀 News:

- Dec.01, 2021: DiffSinger was accepted by AAAI-2022.

- Sep.29, 2021: Our recent work PortaSpeech: Portable and High-Quality Generative Text-to-Speech was accepted by NeurIPS-2021.

- May.06, 2021: We submitted DiffSinger to arXiv.

🎉 🎉 🎉 New feature updates:

- Jan.29, 2022: support MIDI version SVS.

- Jan.13, 2022: support SVS, release PopCS dataset.

- Dec.19, 2021: support TTS.

conda create -n your_env_name python=3.8

source activate your_env_name

pip install -r requirements_2080.txt (GPU 2080Ti, CUDA 10.2)

or pip install -r requirements_3090.txt (GPU 3090, CUDA 11.4)

a) Download and extract the LJ Speech dataset, then create a link to the dataset folder: `ln -s /xxx/LJSpeech-1.1/ data/raw/`

b) Download and unzip the ground-truth durations extracted by MFA: `tar -xvf mfa_outputs.tar; mv mfa_outputs data/processed/ljspeech/`

c) Run the following scripts to pack the dataset for training/inference.

export PYTHONPATH=.

CUDA_VISIBLE_DEVICES=0 python data_gen/tts/bin/binarize.py --config configs/tts/lj/fs2.yaml

# `data/binary/ljspeech` will be generated.

Training:

CUDA_VISIBLE_DEVICES=0 python tasks/run.py --config usr/configs/lj_ds_beta6.yaml --exp_name lj_exp1 --reset

Inference:

CUDA_VISIBLE_DEVICES=0 python tasks/run.py --config usr/configs/lj_ds_beta6.yaml --exp_name lj_exp1 --reset --infer

We also provide:

- the pre-trained model of DiffSpeech;

- the pre-trained model of HifiGAN vocoder;

- the individual pre-trained model of FastSpeech 2 for the shallow diffusion mechanism in DiffSpeech.

Remember to put the pre-trained models in the `checkpoints` directory.
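The training and inference commands above differ only in the `--infer` flag. As a minimal sketch, a small helper can assemble both command lines from the same config and experiment name. The function `build_run_command` is hypothetical and not part of this repository; it only uses the flags that appear in the commands above.

```python
import shlex


def build_run_command(config, exp_name, infer=False, gpu="0"):
    """Build the tasks/run.py command line as a single shell string.

    Hypothetical helper for illustration; it only assembles the flags
    shown in this README (--config, --exp_name, --reset, --infer).
    """
    args = [
        "python", "tasks/run.py",
        "--config", config,
        "--exp_name", exp_name,
        "--reset",
    ]
    if infer:
        # Inference reuses the training command and appends --infer.
        args.append("--infer")
    return f"CUDA_VISIBLE_DEVICES={gpu} " + shlex.join(args)


train_cmd = build_run_command("usr/configs/lj_ds_beta6.yaml", "lj_exp1")
infer_cmd = build_run_command("usr/configs/lj_ds_beta6.yaml", "lj_exp1", infer=True)
print(train_cmd)
print(infer_cmd)
```

Keeping the config and `exp_name` in one place makes it harder to accidentally run inference against a different experiment than the one that was trained.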

- See apply_form.

- Dataset preview.

a) Download and extract PopCS, then create a link to the dataset folder: `ln -s /xxx/popcs/ data/processed/`

b) Run the following scripts to pack the dataset for training/inference.

export PYTHONPATH=.

CUDA_VISIBLE_DEVICES=0 python data_gen/tts/bin/binarize.py --config usr/configs/popcs_ds_beta6.yaml

# `data/binary/popcs-pmf0` will be generated.

Training:

# first run fs2 infer;

CUDA_VISIBLE_DEVICES=0 python tasks/run.py --config usr/configs/popcs_fs2.yaml --exp_name popcs_fs2_pmf0_1230 --reset --infer

# second run ds train;

CUDA_VISIBLE_DEVICES=0 python tasks/run.py --config usr/configs/popcs_ds_beta6_offline.yaml --exp_name popcs_exp2 --reset

Inference:

# first run fs2 infer; if you have already run 'fs2 infer' in the steps above, you can skip it.

CUDA_VISIBLE_DEVICES=0 python tasks/run.py --config usr/configs/popcs_fs2.yaml --exp_name popcs_fs2_pmf0_1230 --reset --infer

# second run ds infer;

CUDA_VISIBLE_DEVICES=0 python tasks/run.py --config usr/configs/popcs_ds_beta6_offline.yaml --exp_name popcs_exp2 --reset --infer

We also provide:

- the pre-trained model of DiffSinger;

- the pre-trained model of FFT-Singer for the shallow diffusion mechanism in DiffSinger;

- the pre-trained model of HifiGAN-Singing which is specially designed for SVS with NSF mechanism.
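The list above mentions the shallow diffusion mechanism, in which generation starts from a noised version of an auxiliary model's output rather than from pure Gaussian noise. The following is a minimal pure-Python sketch of the standard forward diffusion step used for that noising. The linear schedule values, the step counts, and the toy input are illustrative assumptions, not the settings in this repository's configs, and the function names are hypothetical.

```python
import math
import random


def linear_betas(num_steps, beta_min=1e-4, beta_max=0.06):
    """Linearly spaced noise schedule (values are illustrative assumptions)."""
    if num_steps == 1:
        return [beta_min]
    step = (beta_max - beta_min) / (num_steps - 1)
    return [beta_min + i * step for i in range(num_steps)]


def alpha_bar(betas, k):
    """Cumulative product of (1 - beta_t) over the first k steps."""
    prod = 1.0
    for beta in betas[:k]:
        prod *= 1.0 - beta
    return prod


def diffuse_to_step(x0, betas, k, rng):
    """Sample x_k = sqrt(a_bar_k) * x0 + sqrt(1 - a_bar_k) * eps, eps ~ N(0, 1)."""
    a_bar = alpha_bar(betas, k)
    scale = math.sqrt(a_bar)
    sigma = math.sqrt(1.0 - a_bar)
    return [scale * v + sigma * rng.gauss(0.0, 1.0) for v in x0]


betas = linear_betas(100)                 # assumed total number of steps
coarse_frame = [0.5, -0.2, 0.1, 0.8]      # toy stand-in for an auxiliary model's output
rng = random.Random(0)
x_k = diffuse_to_step(coarse_frame, betas, k=50, rng=rng)  # assumed shallow step
print(len(x_k), alpha_bar(betas, 50))
```

In a shallow diffusion setup, the denoiser would then run the reverse process from step `k` down to 0, instead of from the last step of the schedule.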

Note that:

- the original PWG vocoder we used in the paper has been put into commercial use, so we provide this HifiGAN vocoder as a substitute.

- we assume the ground-truth F0 is given as the pitch information, following [1][2][3]. To run experiments on MIDI data (with an external F0 predictor or joint prediction with spectrograms), turn on the `pe_enable` option; otherwise, the vocoder with NSF may not work well.

[1] Adversarially Trained Multi-Singer Sequence-to-Sequence Singing Synthesizer. Interspeech 2020.

[2] Sequence-to-Sequence Singing Synthesis Using the Feed-Forward Transformer. ICASSP 2020.

[3] DeepSinger: Singing Voice Synthesis with Data Mined From the Web. KDD 2020.

tensorboard --logdir_spec exp_name

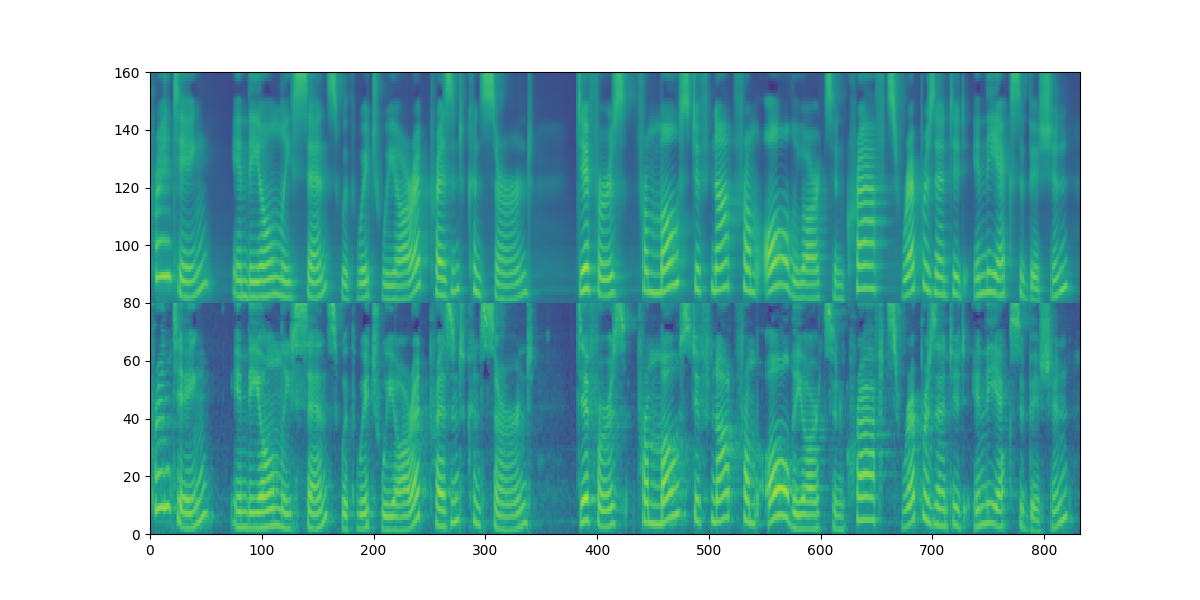

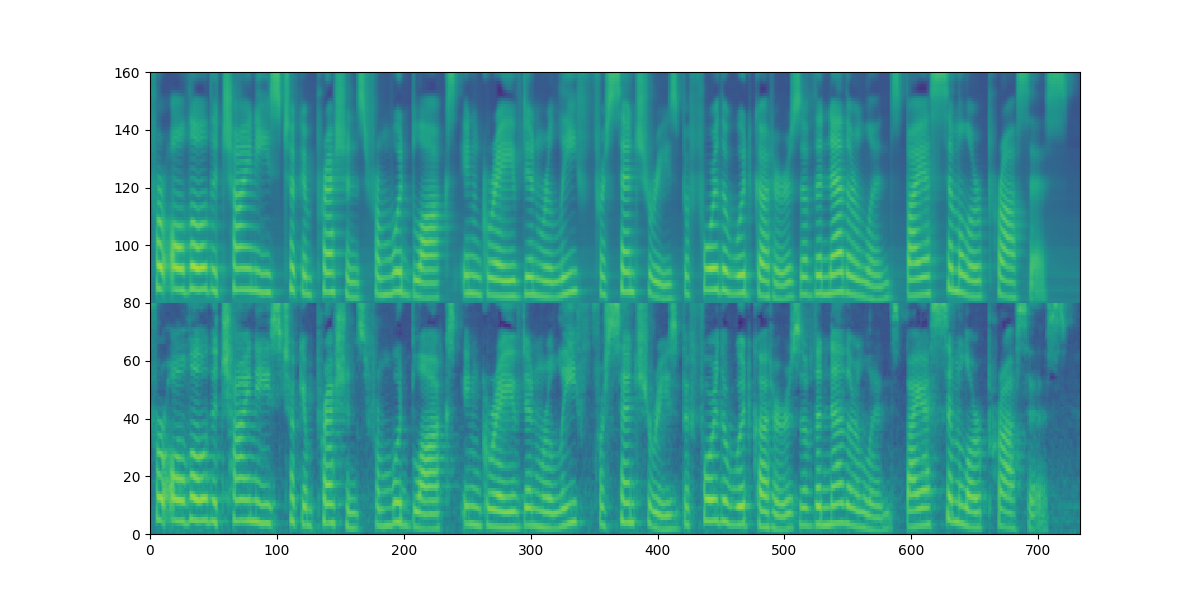

Along the vertical axis, DiffSpeech: [0-80]; FastSpeech 2: [80-160].

| DiffSpeech vs. FastSpeech 2 |
|---|
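The caption above says the two models share one vertical axis, with DiffSpeech in [0-80] and FastSpeech 2 in [80-160]. As a minimal sketch, assuming the plot stacks two 80-bin mel-spectrograms row-wise, the two halves can be separated as follows. The helper `split_stacked_mels` and the toy data are hypothetical and only illustrate the indexing.

```python
def split_stacked_mels(stacked, bins_per_model=80):
    """Split a row-stacked spectrogram into its two per-model halves.

    `stacked` is a list of rows (one row per frequency bin); the first
    `bins_per_model` rows are assumed to be DiffSpeech, the rest FastSpeech 2.
    """
    if len(stacked) != 2 * bins_per_model:
        raise ValueError(
            f"expected {2 * bins_per_model} rows, got {len(stacked)}"
        )
    return stacked[:bins_per_model], stacked[bins_per_model:]


# Toy data: 160 rows, 3 frames each; every row is filled with its own index.
num_frames = 3
stacked = [[float(row)] * num_frames for row in range(160)]

diffspeech_mel, fastspeech2_mel = split_stacked_mels(stacked)
print(len(diffspeech_mel), len(fastspeech2_mel))
```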

Audio samples can be found in our demo page.

We also provide some test-set audio samples generated by DiffSpeech+HifiGAN (marked as [P]) and GTmel+HifiGAN (marked as [G]) in resources/demos_1213 (corresponding to the pre-trained DiffSpeech model).

🚀 🚀 🚀 Update:

New singing samples can be found in resources/demos_0112.

@article{liu2021diffsinger,

title={Diffsinger: Singing voice synthesis via shallow diffusion mechanism},

author={Liu, Jinglin and Li, Chengxi and Ren, Yi and Chen, Feiyang and Liu, Peng and Zhao, Zhou},

journal={arXiv preprint arXiv:2105.02446},

volume={2},

year={2021}}

Our code is based on the following repos:

We also thank Keon Lee for the fast implementation of our work.