Autonomous Web Browsing AI Agent with Vision - powered by MultiOn

As of May 6, MultiOnChat is now MultiAgent, and the frontend and backend repositories have been merged!

- git

- Python 3.11

- pipenv

- Node 21

- pnpm

- Clone this GitHub repository:

git clone https://github.com/justinsunyt/multiagent.git

- Create an account on Supabase if you don't have one already.

- Create a project.

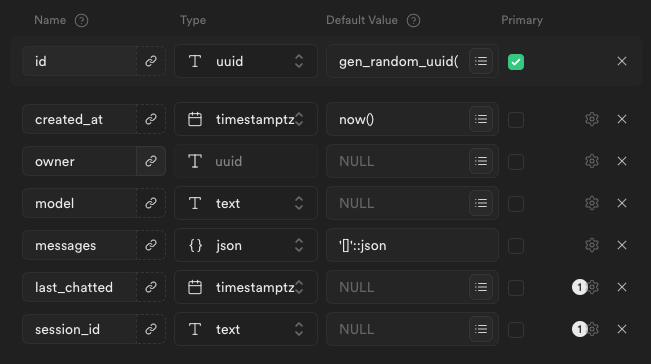

- In Table Editor, create a table called chats with the following columns:

Make sure last_chatted and session_id are nullable.

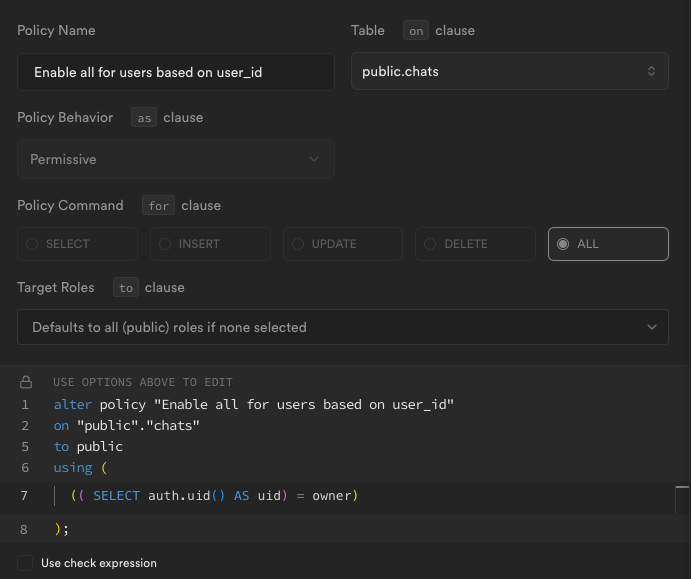

- Create the following auth policy for chats:

- In Authentication -> Providers, enable Email as an auth provider.

- Navigate to the backend/ folder:

cd backend

- Create a .env file in the backend/ folder and store the following variables:

SUPABASE_URL="<Supabase project URL>"

SUPABASE_KEY="<Supabase anon key>"

SUPABASE_JWT_SECRET="<Supabase JWT secret>"

SUPABASE_JWT_ISSUER="<Supabase project URL>/auth/v1"

REPLICATE_API_TOKEN="<Replicate API token>"

GROQ_API_KEY="<Groq API key>"

MULTION_API_KEY="<MultiOn API key>"

- Launch pipenv environment:

pipenv shell

- Install required packages:

pipenv install

- Run the FastAPI development server:

uvicorn main:app --reload

- In a new terminal, navigate to the frontend/ folder from the repository root:

cd frontend

- Create a .env.local file in the frontend/ folder and store the following variables:

NEXT_PUBLIC_SUPABASE_URL="<Supabase project URL>"

NEXT_PUBLIC_SUPABASE_ANON_KEY="<Supabase anon key>"

NEXT_PUBLIC_PLATFORM_URL="<deployed backend URL, will only be used in production>"

- Install required packages:

pnpm install

- Run the Next.js development server:

pnpm dev

Finally, open http://localhost:3000 with your browser to start using MultiAgent!
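If either server fails to start, a common cause is a variable that is missing or still set to its placeholder. The following is a minimal sketch of a check for that, not part of the repository: the helper names (parse_env, missing_vars, check_file) are hypothetical, the parser only handles simple KEY="value" lines, and it assumes it is run from the repository root. The variable names are the ones listed in the steps above.

```python
from pathlib import Path

# Variable names taken from the setup steps above.
BACKEND_VARS = [
    "SUPABASE_URL",
    "SUPABASE_KEY",
    "SUPABASE_JWT_SECRET",
    "SUPABASE_JWT_ISSUER",
    "REPLICATE_API_TOKEN",
    "GROQ_API_KEY",
    "MULTION_API_KEY",
]
# NEXT_PUBLIC_PLATFORM_URL is only used in production, so it is not checked here.
FRONTEND_VARS = [
    "NEXT_PUBLIC_SUPABASE_URL",
    "NEXT_PUBLIC_SUPABASE_ANON_KEY",
]


def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY="value" lines, skipping blank lines and # comments."""
    values = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def missing_vars(text: str, required: list[str]) -> list[str]:
    """Return required names that are absent, empty, or still a <placeholder>."""
    values = parse_env(text)
    return [
        name
        for name in required
        if not values.get(name) or values[name].startswith("<")
    ]


def check_file(path: str, required: list[str]) -> list[str]:
    """Treat a missing file as if every required variable were missing."""
    file = Path(path)
    if not file.exists():
        return list(required)
    return missing_vars(file.read_text(), required)


if __name__ == "__main__":
    checks = (("backend/.env", BACKEND_VARS), ("frontend/.env.local", FRONTEND_VARS))
    for path, required in checks:
        missing = check_file(path, required)
        status = "ok" if not missing else "missing " + ", ".join(missing)
        print(f"{path}: {status}")
```

Saved under any name in the repository root and run with python, it prints one status line per env file.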

- Client: Next.js, TanStack Query

- UI: Tailwind, shadcn/ui, Framer Motion, Lucide, Sonner, Spline

- Server: FastAPI

- Database: Supabase

- AI: MultiOn, Replicate, Groq

- Autonomously browse the internet with your own AI agent using only an image and a command - order a Big Mac, schedule events, and shop for outfits!

- Supabase database and email authentication with JWT token verification for RLS storage

- Currently supports llama3-70b, llava-13b, llava-v1.6-34b, qwen-vl-chat

- Llama tool calling to activate agent whenever appropriate

- Refine image prompt recursively with Llama

- Chat selection menu to choose between any combination of LLMs and VLMs

- Toggle MultiOn local mode

- Deploy! (You will have to use your own API keys)

Special thanks to MultiOn for the epic agent package and auroregmbt for the Spline animation!