Cross-View Regularization for Domain Adaptive Panoptic Segmentation

Jiaxing Huang, Dayan Guan, Aoran Xiao, Shijian Lu

School of Computer Science and Engineering, Nanyang Technological University, Singapore

IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2021 (Oral)

If you find this code useful for your research, please cite our paper:

@InProceedings{Huang_2021_CVPR,
  author    = {Huang, Jiaxing and Guan, Dayan and Xiao, Aoran and Lu, Shijian},
  title     = {Cross-View Regularization for Domain Adaptive Panoptic Segmentation},
  booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
  month     = {June},
  year      = {2021},
  pages     = {10133-10144}
}

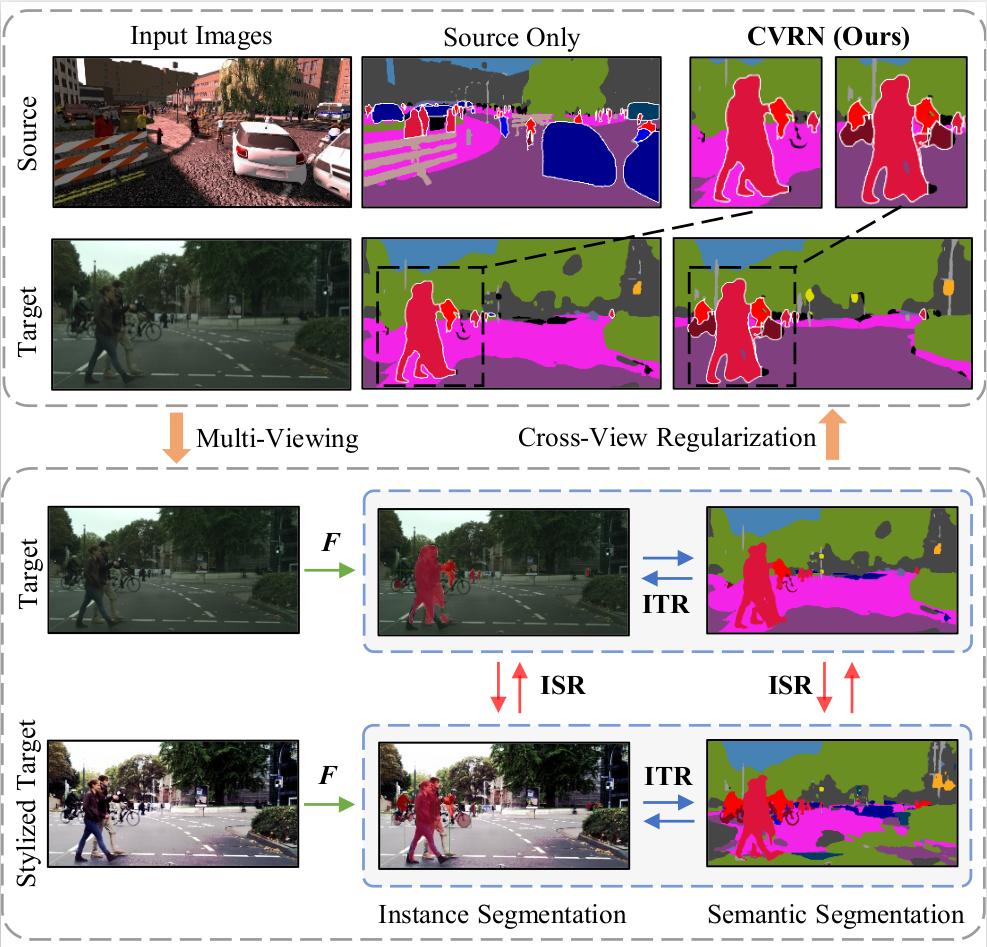

Panoptic segmentation unifies semantic segmentation and instance segmentation and has attracted increasing attention in recent years. However, most existing research has been conducted under a supervised learning setup, whereas unsupervised domain adaptive panoptic segmentation, which is critical in many tasks and applications, is largely neglected. We design a domain adaptive panoptic segmentation network that exploits inter-style consistency and inter-task regularization for optimal domain adaptive panoptic segmentation. The inter-style consistency leverages the semantic invariance across differently styled versions of the same image, which provides self-supervision that guides the network to learn domain-invariant features. The inter-task regularization exploits the complementary nature of instance segmentation and semantic segmentation and uses it as a constraint for better feature alignment across domains. Extensive experiments over multiple domain adaptive panoptic segmentation tasks (e.g., synthetic-to-real and real-to-real) show that our proposed network achieves superior segmentation performance compared with the state of the art.
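For readers who want a concrete picture of the inter-style consistency idea, here is a minimal sketch, not the loss implemented in this repository. It assumes the network outputs per-pixel class probabilities for an image and for a style-transferred copy of the same image, and it penalizes disagreement with a symmetric KL divergence. The function names and the choice of symmetric KL are assumptions for illustration; the actual code works on PyTorch tensors.

```python
import math
from typing import List

# Hypothetical sketch: predictions are plain lists of shape [num_pixels][num_classes].
# The CVRN code uses PyTorch tensors and may use a different divergence.
Probs = List[List[float]]


def kl(p: List[float], q: List[float], eps: float = 1e-8) -> float:
    """KL(p || q) for a single pixel's class distribution."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))


def inter_style_consistency(pred_a: Probs, pred_b: Probs) -> float:
    """Mean symmetric KL between predictions on two styles of the same image.

    Style transfer changes appearance but not geometry, so pixel i in one view
    corresponds to pixel i in the other and should receive the same class.
    """
    if len(pred_a) != len(pred_b):
        raise ValueError("both views must have the same number of pixels")
    total = sum(0.5 * (kl(p, q) + kl(q, p)) for p, q in zip(pred_a, pred_b))
    return total / len(pred_a)


if __name__ == "__main__":
    original = [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1]]
    restyled = [[0.2, 0.7, 0.1], [0.1, 0.8, 0.1]]
    print(inter_style_consistency(original, original))  # 0.0
    print(inter_style_consistency(original, restyled))  # positive: the views disagree
```

Because both views come from the same source image, the consistency target needs no labels, which is what makes it usable on the unlabeled target domain.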

- Python 3.7

- PyTorch >= 0.4.1

- CUDA 9.0 or higher

- Clone the repo:

$ git clone https://github.com/jxhuang0508/CVRN.git

$ cd CVRN

- Create the conda environment:

$ conda env create -f environment.yaml

- Clone UPSNet:

$ cd CVRN

$ git clone https://github.com/uber-research/UPSNet.git

- Initialization:

$ cd UPSNet

$ sh init.sh

$ cp -r lib/dataset_devkit/panopticapi/panopticapi/ .

$ cd CVRN

$ git clone https://github.com/yzou2/CRST.git

$ cd UPSNet

$ sh init_cityscapes.sh

$ cd ..

$ python cvrn/init_citiscapes_19cls_to_16cls.py

$ cp cvrn/models/* UPSNet/upsnet/models

$ cp cvrn/dataset/* UPSNet/upsnet/dataset

$ cp cvrn/upsnet/* UPSNet/upsnet

- Pre-trained models can be downloaded here; put them in CVRN/pretrained_models.
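The preparation steps above run cvrn/init_citiscapes_19cls_to_16cls.py, which converts Cityscapes labels from 19 to 16 classes. The sketch below shows what such a conversion typically looks like under the common SYNTHIA-to-Cityscapes protocol, which drops terrain, truck, and train. It assumes the standard Cityscapes train IDs and an ignore label of 255; it is an illustration, not the script's actual code.

```python
from typing import Dict, List

IGNORE = 255  # assumed ignore label

# Standard Cityscapes train IDs 0-18, in order.
CITYSCAPES_19 = [
    "road", "sidewalk", "building", "wall", "fence", "pole", "traffic light",
    "traffic sign", "vegetation", "terrain", "sky", "person", "rider", "car",
    "truck", "bus", "train", "motorcycle", "bicycle",
]
# Classes missing from SYNTHIA under the common 16-class protocol.
DROPPED = {"terrain", "truck", "train"}


def build_19_to_16() -> Dict[int, int]:
    """Map each 19-class train ID to a contiguous 16-class ID, or to IGNORE."""
    mapping, next_id = {}, 0
    for old_id, name in enumerate(CITYSCAPES_19):
        if name in DROPPED:
            mapping[old_id] = IGNORE
        else:
            mapping[old_id] = next_id
            next_id += 1
    return mapping


def convert_labels(label_row: List[int], mapping: Dict[int, int]) -> List[int]:
    """Remap one row of a label map; unknown values (e.g. 255) become IGNORE."""
    return [mapping.get(v, IGNORE) for v in label_row]


if __name__ == "__main__":
    m = build_19_to_16()
    print(sorted(v for v in m.values() if v != IGNORE))  # [0, 1, ..., 15]
    print(convert_labels([0, 9, 10, 14, 18, 255], m))     # [0, 255, 9, 255, 15, 255]
```

Under this assumption the 16 classes split into 6 things (person, rider, car, bus, motorcycle, bicycle) and 10 stuff classes, consistent with the N column in the evaluation logs below.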

$ cd UPSNet

$ python upsnet/test_cvrn_upsnet.py --cfg ../config/cvrn_upsnet.yaml --weight_path ../pretrained_models/cvrn_upsnet.pth

2021-06-10 14:20:09,688 | base_dataset.py | line 499: | PQ SQ RQ N
2021-06-10 14:20:09,688 | base_dataset.py | line 500: --------------------------------------
2021-06-10 14:20:09,688 | base_dataset.py | line 505: All | 34.0 68.2 43.4 16
2021-06-10 14:20:09,688 | base_dataset.py | line 505: Things | 27.9 73.6 37.3 6
2021-06-10 14:20:09,688 | base_dataset.py | line 505: Stuff | 37.7 65.0 47.1 10

$ python upsnet/test_cvrn_pfpn.py --cfg ../config/cvrn_pfpn.yaml --weight_path ../pretrained_models/cvrn_pfpn.pth

2021-06-10 14:27:36,841 | base_dataset.py | line 361: | PQ SQ RQ N
2021-06-10 14:27:36,842 | base_dataset.py | line 362: --------------------------------------
2021-06-10 14:27:36,842 | base_dataset.py | line 367: All | 31.4 66.4 40.0 16
2021-06-10 14:27:36,842 | base_dataset.py | line 367: Things | 20.7 68.1 28.2 6
2021-06-10 14:27:36,842 | base_dataset.py | line 367: Stuff | 37.9 65.4 47.0 10

$ python upsnet/test_cvrn_psn.py --cfg ../config/cvrn_psn.yaml --weight_path ../pretrained_models/cvrn_psn_maskrcnn_branch.pth

2021-06-10 23:18:22,662 | test_cvrn_psn.py | line 240: combined pano result:
2021-06-10 23:20:32,259 | base_dataset.py | line 361: | PQ SQ RQ N
2021-06-10 23:20:32,261 | base_dataset.py | line 362: --------------------------------------
2021-06-10 23:20:32,261 | base_dataset.py | line 367: All | 32.1 66.6 41.1 16
2021-06-10 23:20:32,261 | base_dataset.py | line 367: Things | 21.6 68.7 30.2 6
2021-06-10 23:20:32,261 | base_dataset.py | line 367: Stuff | 38.4 65.3 47.6 10
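The logs report panoptic quality (PQ), segmentation quality (SQ), and recognition quality (RQ) over N classes. For a single class, SQ is the mean IoU of matched segments, RQ = TP / (TP + 0.5 FP + 0.5 FN), and PQ = SQ × RQ. The All row, however, is a per-class average over 16 classes, so it equals the class-count-weighted mean of the Things and Stuff rows rather than SQ × RQ of the All row (68.2 × 43.4 / 100 ≈ 29.6, not 34.0). The sketch below illustrates both points; the per-class counts in the first example are made up.

```python
from typing import List, Tuple


def class_pq(tp_ious: List[float], num_fp: int, num_fn: int) -> Tuple[float, float, float]:
    """Return (PQ, SQ, RQ) for a single class.

    tp_ious: IoU of every matched (true positive) prediction/ground-truth pair.
    num_fp, num_fn: counts of unmatched predicted and unmatched ground-truth segments.
    """
    if any(iou <= 0.5 for iou in tp_ious):
        raise ValueError("a true positive match requires IoU > 0.5")
    tp = len(tp_ious)
    denom = tp + 0.5 * num_fp + 0.5 * num_fn
    if denom == 0:
        # Class absent from both prediction and ground truth: leave it out of the average.
        raise ValueError("class has no segments; exclude it from the average")
    sq = sum(tp_ious) / tp if tp else 0.0
    rq = tp / denom
    return sq * rq, sq, rq


def average_over_groups(groups: List[Tuple[float, int]]) -> float:
    """Combine per-group mean values (value, num_classes), e.g. Things and Stuff."""
    num_classes = sum(n for _, n in groups)
    return sum(value * n for value, n in groups) / num_classes


if __name__ == "__main__":
    pq, sq, rq = class_pq([0.8, 0.6], num_fp=1, num_fn=1)  # made-up counts
    print(f"PQ={pq:.3f} SQ={sq:.3f} RQ={rq:.3f}")  # PQ=0.467 SQ=0.700 RQ=0.667
    # Things/Stuff PQ from the UPSNet log: 27.9 over 6 classes, 37.7 over 10.
    print(round(average_over_groups([(27.9, 6), (37.7, 10)]), 1))  # 34.0
```

The same weighting reproduces the All column for SQ and RQ as well, because every column is averaged over the same 16 classes.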

- Download the SYNTHIA dataset (SYNTHIA-RAND-CITYSCAPES, CVPR16). The data folder is structured as follows:

CVRN/UPSNet/data/
└── synthia/
    ├── images/
    ├── labels/
    └── ...
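A quick layout check before preprocessing can catch a misplaced download. The helper below is hypothetical and not part of the repository; it only checks the two directories named in the SYNTHIA folder tree (images/ and labels/ under CVRN/UPSNet/data/synthia), since the entries elided with "..." are not specified.

```python
from pathlib import Path
from typing import List

# Only the entries named in the README tree are checked; "..." entries are unknown.
EXPECTED_SUBDIRS = ["synthia/images", "synthia/labels"]


def missing_dirs(data_root: str) -> List[str]:
    """Return the expected subdirectories that do not exist under data_root."""
    root = Path(data_root)
    return [d for d in EXPECTED_SUBDIRS if not (root / d).is_dir()]


if __name__ == "__main__":
    missing = missing_dirs("CVRN/UPSNet/data")
    if missing:
        print("Missing:", ", ".join(missing))
    else:
        print("SYNTHIA layout looks complete")
```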

- Dataset preprocessing

$ cd CVRN

$ cp cvrn_train_r/UPSNet/crop640_synthia_publish.py UPSNet/crop640_synthia_publish.py

$ cd UPSNet

$ python crop640_synthia_publish.py

- Download the converted instance labels and put the two Cityscapes instance label files ('instancesonly_gtFine_train.json' and 'instancesonly_gtFine_val.json') under 'CVRN/UPSNet/data/cityscapes/annotations_7cls'.

- Download the SYNTHIA instance label file ('instancesonly_train.json') and put it under 'CVRN/UPSNet/data/synthia_crop640/annotations_7cls_filtered'.
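Before training, it can help to confirm that a downloaded label file is complete. Judging by their names, the instance label files presumably follow the COCO annotation layout (top-level "images", "annotations", and "categories" lists); that layout is an assumption here. The sketch below counts annotations per category name.

```python
import json
from collections import Counter
from typing import Dict


def count_instances(coco: dict) -> Dict[str, int]:
    """Count annotations per category name in a COCO-style dict (assumed layout)."""
    id_to_name = {c["id"]: c["name"] for c in coco.get("categories", [])}
    counts = Counter(
        id_to_name.get(a["category_id"], "unknown") for a in coco.get("annotations", [])
    )
    return dict(counts)


def load_and_count(path: str) -> Dict[str, int]:
    """Load a JSON annotation file and count its instances per category."""
    with open(path) as f:
        return count_instances(json.load(f))


if __name__ == "__main__":
    path = "CVRN/UPSNet/data/cityscapes/annotations_7cls/instancesonly_gtFine_val.json"
    try:
        for name, n in sorted(load_and_count(path).items()):
            print(f"{name}: {n}")
    except FileNotFoundError:
        print("Annotation file not found:", path)
```

An empty or very small count for a category usually means the wrong file was downloaded or it was placed in the wrong folder.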

- Create the conda environments:

$ cd CVRN/cvrn_train_r/cvrn_env

$ conda env create -f seg_cross_style_reg.yml

$ conda env create -f seg_st.yml

$ conda env create -f instance_seg.yml

- Move the files into UPSNet (if the commands below do not work, manually copy all files from 'cvrn_train_r/UPSNet' into the cloned 'UPSNet'):

$ cd CVRN

$ cp -r cvrn_train_r/UPSNet/data/cityscapes/annotations_7cls UPSNet/data/cityscapes/

$ cp -r cvrn_train_r/UPSNet/data/synthia_crop640 UPSNet/data/

$ cp cvrn_train_r/UPSNet/upsnet/dataset/* UPSNet/upsnet/dataset

$ cp cvrn_train_r/UPSNet/upsnet/models/* UPSNet/upsnet/models

$ cp cvrn_train_r/UPSNet/upsnet/panoptic_uda_experiments/* UPSNet/upsnet/panoptic_uda_experiments

$ cp cvrn_train_r/UPSNet/upsnet/* UPSNet/upsnet

- Clone ADVENT and CRST:

$ cd CVRN

$ git clone https://github.com/valeoai/ADVENT.git

$ cd ADVENT

$ git clone https://github.com/yzou2/CRST.git

- Move the files into ADVENT and CRST (if the commands below do not work, manually copy all files from 'cvrn_train_r/ADVENT' into the cloned 'ADVENT', and likewise from 'cvrn_train_r/ADVENT/CRST' into the cloned 'ADVENT/CRST'):

$ cd CVRN

$ cp cvrn_train_r/ADVENT/advent/dataset/* ADVENT/advent/dataset

$ cp cvrn_train_r/ADVENT/advent/domain_adaptation/* ADVENT/advent/domain_adaptation

$ cp cvrn_train_r/ADVENT/advent/scripts/configs/* ADVENT/advent/scripts/configs

$ cp cvrn_train_r/ADVENT/advent/scripts/* ADVENT/advent/scripts

$ cp cvrn_train_r/ADVENT/CRST/deeplab/* ADVENT/CRST/deeplab

$ cp cvrn_train_r/ADVENT/CRST/* ADVENT/CRST

- Step 1: Instance segmentation: cross-style regularization pre-training:

$ cd CVRN/UPSNet

$ conda activate instance_seg

$ CUDA_VISIBLE_DEVICES=7 python upsnet/train_seed1234_co_training_cross_style.py --cfg upsnet/da_maskrcnn_cross_style.yaml

- Step 2: Instance segmentation: evaluate the cross-style pre-trained models:

(a) Evaluate on the validation set (bounding boxes):

$ cd CVRN/UPSNet

$ conda activate instance_seg

$ CUDA_VISIBLE_DEVICES=1 python upsnet/test_resnet101_dense_detection.py --iter 1000 --cfg upsnet/da_maskrcnn_cross_style.yaml

(b) Evaluate on the validation set (instance masks):

$ cd CVRN/UPSNet

$ conda activate instance_seg

$ CUDA_VISIBLE_DEVICES=2 python upsnet/test_resnet101_maskrcnn.py --iter 23000 --cfg upsnet/da_maskrcnn_cross_style.yaml

(c) Evaluate on the training set (instance masks):

$ cd CVRN/UPSNet

$ conda activate instance_seg

$ CUDA_VISIBLE_DEVICES=7 python upsnet/cvrn_test_resnet101_maskrcnn_PL.py --iter 23000 --cfg upsnet/da_maskrcnn_cross_style.yaml

- Step 3: Instance segmentation: generate instance pseudo labels and fuse them with the pre-trained model:

$ cd CVRN/UPSNet

$ conda activate instance_seg

$ python upsnet/cvrn_st_psudolabeling_fuse.py --iter 23000 --cfg upsnet/da_maskrcnn_cross_style.yaml

- Step 4: Instance segmentation: retrain the model on the generated and fused instance pseudo labels:

$ cd CVRN/UPSNet

$ conda activate instance_seg

$ CUDA_VISIBLE_DEVICES=4 python upsnet/train_seed1234_co_training_cross_style_stage2_ST_w_PL.py --cfg upsnet/panoptic_uda_experiments/da_maskrcnn_cross_style_ST.yaml

- Step 5: Instance segmentation: evaluate the retrained models:

(a) Evaluate on the validation set (bounding boxes):

$ cd CVRN/UPSNet

$ conda activate instance_seg

$ CUDA_VISIBLE_DEVICES=1 python upsnet/test_resnet101_dense_detection.py --iter 1000 --cfg upsnet/da_maskrcnn_cross_style_ST.yaml

(b) Evaluate on the validation set (instance masks):

$ cd CVRN/UPSNet

$ conda activate instance_seg

$ CUDA_VISIBLE_DEVICES=2 python upsnet/test_resnet101_maskrcnn.py --iter 6000 --cfg upsnet/da_maskrcnn_cross_style_ST.yaml

- Step 6: Panoptic segmentation evaluation: follow the evaluation instructions in the earlier section.
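Instance Step 3 and, later, Semantic Step 3 (b) fuse instance and semantic pseudo labels, which is where the inter-task regularization from the abstract comes into play. The exact fusion rule lives in cvrn_st_psudolabeling_fuse.py and is not reproduced here. The sketch below shows one plausible form of the idea, under the assumption that an instance pseudo label is kept only if it is confident and the semantic pseudo label inside its mask mostly agrees with its class. The InstancePL type, the thresholds, and the flat-pixel data layout are all hypothetical.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List, Sequence

IGNORE = 255  # assumed ignore label in the semantic pseudo label map


@dataclass
class InstancePL:
    """Hypothetical instance pseudo label."""
    class_id: int      # class in the shared 16-class label space (assumed)
    score: float       # detector confidence
    pixels: List[int]  # flat indices of the instance mask


def keep_consistent(instances: Sequence[InstancePL], semantic_pl: Sequence[int],
                    min_score: float = 0.5, min_agreement: float = 0.5) -> List[InstancePL]:
    """Keep confident instances whose mask mostly agrees with the semantic pseudo label."""
    kept = []
    for inst in instances:
        if inst.score < min_score or not inst.pixels:
            continue
        # Vote over the semantic labels inside the mask, skipping ignored pixels.
        votes = Counter(semantic_pl[p] for p in inst.pixels if semantic_pl[p] != IGNORE)
        labeled = sum(votes.values())
        if labeled and votes[inst.class_id] / labeled >= min_agreement:
            kept.append(inst)
    return kept


if __name__ == "__main__":
    sem = [12, 12, 12, 10, 10, IGNORE]  # toy 6-pixel semantic pseudo label
    candidates = [InstancePL(12, 0.9, [0, 1, 2]), InstancePL(10, 0.9, [0, 1, 3]),
                  InstancePL(12, 0.3, [0, 1, 2]), InstancePL(10, 0.8, [3, 4, 5])]
    print([(i.class_id, i.score) for i in keep_consistent(candidates, sem)])
    # [(12, 0.9), (10, 0.8)]
```

The second candidate is dropped because two of its three pixels are labeled with a different class by the semantic branch, and the third because its confidence is below the threshold.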

- Step 1: Semantic segmentation: cross-style regularization pre-training:

$ cd CVRN/ADVENT/advent/scripts

$ conda activate seg_cross_style_reg

$ CUDA_VISIBLE_DEVICES=7 python train_synthia.py --cfg configs/synthia_self_supervised_aux_cross_style.yml

- Step 2: Semantic segmentation: evaluate the cross-style pre-trained models:

$ cd CVRN/ADVENT/advent/scripts

$ conda activate seg_cross_style_reg

$ CUDA_VISIBLE_DEVICES=7 python test.py --cfg configs/synthia_self_supervised_aux_cross_style.yml

- Step 3: Semantic segmentation: pseudo-label generation, fusion, and retraining:

(a) Generate pseudo labels for the 2975 Cityscapes training images with the best model (replace {best_model_dir} with the path to the best pre-trained model):

$ cd CVRN/ADVENT/CRST/

$ conda activate seg_st

$ CUDA_VISIBLE_DEVICES=7 python2 crst_seg_aux_ss_trg_cross_style_pseudo_label_generation.py --random-mirror --random-scale --test-flipping \
--num-classes 16 --data-src synthia --data-tgt-train-list ./dataset/list/cityscapes/train.lst \
--save results/synthia_cross_style_ep6 --data-tgt-dir dataset/Cityscapes --mr-weight-kld 0.1 \
--eval-scale 0.5 --test-scale '0.5' --restore-from {best_model_dir} \
--num-rounds 1 --test-scale '0.5,0.8,1.0' --weight-sil 0.1 --epr 6

(b) Fuse the pseudo labels for the 2975 images:

$ cd CVRN/UPSNet

$ conda activate instance_seg

$ python upsnet/cvrn_st_psudolabeling_fuse_for_semantic_seg.py --iter 23000 --cfg upsnet/da_maskrcnn_cross_style.yaml

(c) Retrain on the pseudo labels for the 2975 images (replace {best_model_dir} with the path to the best pre-trained model):

$ cd CVRN/ADVENT/CRST/

$ conda activate seg_st

$ CUDA_VISIBLE_DEVICES=7 python2 crst_seg_aux_ss_trg_cross_style_pseudo_label_retrain.py --random-mirror --random-scale --test-flipping \
--num-classes 16 --data-src synthia --data-tgt-train-list ./dataset/list/cityscapes/train.lst \
--save results/synthia_cross_style_ep6 --data-tgt-dir dataset/Cityscapes --mr-weight-kld 0.1 \
--eval-scale 0.5 --test-scale '0.5' --restore-from {best_model_dir} \
--num-rounds 1 --test-scale '0.5,0.8,1.0' --weight-sil 0.1 --epr 6

The full pipeline runs the steps in the following order:

1. Run Instance segmentation Step 1
2. Run Instance segmentation Step 2
3. Run Semantic segmentation Step 1
4. Run Semantic segmentation Step 2
5. Run Semantic segmentation Step 3 (a)
6. Run Instance segmentation Step 3
7. Run Instance segmentation Step 4
8. Run Instance segmentation Step 5
9. Run Semantic segmentation Step 3 (b)
10. Run Semantic segmentation Step 3 (c)
11. Run Panoptic segmentation evaluation

This codebase borrows heavily from UPSNet, CRST, and ADVENT.

If you have any questions, please contact: jiaxing.huang@ntu.edu.sg