Point Transformer Diffusion (PTD) integrates the standard denoising diffusion probabilistic model (DDPM), adapted to 3D data, with Point Transformer, a local self-attention network designed specifically for 3D point clouds.
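In the standard DDPM formulation, training corrupts each shape with a fixed Gaussian forward process, and the network learns to predict the added noise. Below is a minimal NumPy sketch of the closed-form forward step q(x_t | x_0) for a single point cloud. The linear schedule (1e-4 to 0.02 over 1000 steps) is the original DDPM default and is assumed here rather than taken from PTD's configuration; the random cloud is a placeholder for a ShapeNet shape.

```python
import numpy as np

def linear_beta_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Linearly spaced noise variances beta_1..beta_T (DDPM defaults, assumed)."""
    return np.linspace(beta_start, beta_end, num_steps)

def q_sample(x0, t, alpha_bars, rng):
    """Sample x_t ~ q(x_t | x_0) = N(sqrt(abar_t) * x_0, (1 - abar_t) * I).

    x0: (N, 3) point cloud; t: 0-based timestep index.
    Returns the noisy cloud and the Gaussian noise that was added.
    """
    noise = rng.standard_normal(x0.shape)
    abar = alpha_bars[t]
    xt = np.sqrt(abar) * x0 + np.sqrt(1.0 - abar) * noise
    return xt, noise

betas = linear_beta_schedule()
alpha_bars = np.cumprod(1.0 - betas)  # abar_t = prod_{s <= t} (1 - beta_s)

rng = np.random.default_rng(0)
x0 = rng.uniform(-1.0, 1.0, size=(2048, 3))  # placeholder for a real shape
xt, eps = q_sample(x0, t=500, alpha_bars=alpha_bars, rng=rng)
print(xt.shape)  # (2048, 3)
```

The pair `(xt, eps)` is what an epsilon-prediction network would be trained on: given `xt` and `t`, regress `eps` with a mean-squared error.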

- Thesis report: 3D Shape Generation through Point Transformer Diffusion.

- It achieves strong results on the unconditional shape generation task on the ShapeNet dataset (airplane, chair, and car categories; see the evaluation table below).
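Point Transformer applies vector self-attention within each point's local k-nearest-neighbour neighbourhood: y_i = sum_j softmax_j(gamma(phi(x_i) - psi(x_j) + delta_ij)) * (alpha(x_j) + delta_ij), with positional encoding delta_ij = theta(p_i - p_j). The NumPy sketch below implements one such layer under simplifying assumptions: random weights, single linear maps (plus ReLU) in place of the MLPs, and brute-force k-NN. The function and parameter names are hypothetical; the repository builds the `pointops` CUDA extension, which presumably handles operations such as neighbour queries far more efficiently.

```python
import numpy as np

def knn(points, k):
    """Brute-force k nearest neighbours; each point is its own first neighbour."""
    d2 = ((points[:, None, :] - points[None, :, :]) ** 2).sum(-1)  # (N, N)
    return np.argsort(d2, axis=1)[:, :k]                             # (N, k)

def softmax(x, axis):
    x = x - x.max(axis=axis, keepdims=True)  # numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def relu(x):
    return np.maximum(x, 0.0)

def point_transformer_layer(points, feats, params, k=16):
    """Local vector self-attention over each point's k-NN neighbourhood."""
    idx = knn(points, k)                                   # (N, k)
    q = feats @ params["phi"]                              # (N, C) queries
    key = feats @ params["psi"]                            # (N, C) keys
    val = feats @ params["alpha"]                          # (N, C) values
    rel = points[:, None, :] - points[idx]                 # (N, k, 3): p_i - p_j
    delta = relu(rel @ params["theta1"]) @ params["theta2"]  # (N, k, C) positional encoding
    logits = relu((q[:, None, :] - key[idx] + delta) @ params["gamma1"]) @ params["gamma2"]
    weights = softmax(logits, axis=1)                      # normalise over neighbours, per channel
    return (weights * (val[idx] + delta)).sum(axis=1)      # (N, C)

rng = np.random.default_rng(0)
N, C_in, C = 256, 32, 32
shapes = {"phi": (C_in, C), "psi": (C_in, C), "alpha": (C_in, C),
          "theta1": (3, C), "theta2": (C, C), "gamma1": (C, C), "gamma2": (C, C)}
params = {name: rng.standard_normal(s) / np.sqrt(s[0]) for name, s in shapes.items()}
points = rng.uniform(-1.0, 1.0, size=(N, 3))
feats = rng.standard_normal((N, C_in))
out = point_transformer_layer(points, feats, params, k=16)
print(out.shape)  # (256, 32)
```

The softmax is taken per channel (vector attention), so each feature channel gets its own weighting of the neighbours rather than a single scalar weight per neighbour.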

Make sure the following packages are installed:

- python
- torch
- tqdm
- matplotlib
- trimesh
- scipy
- open3d
- einops
- pytorch_warmup

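Alternatively, create the conda environment from the YAML file in the `requirements` folder (this can take a long time):

```bash
conda env create -f ptd_env.yml
conda activate ptd_env
```

Then build and install `pointops`:

```bash
cd lib/pointops/
python setup.py install
cp build/lib.linux-x86_64-cpython-39/pointops_cuda.cpython-39-x86_64-linux-gnu.so .
```

The build directory and `.so` file names depend on your platform and Python version (the paths above are for Linux x86_64 with Python 3.9); adjust them to match what `setup.py` produced.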
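Instead of editing the hardcoded `cp` path by hand, the copy step can be scripted. The sketch below uses a hypothetical helper, `copy_built_extension`, which assumes the usual setuptools layout `build/lib.<platform>-<python-tag>/` next to `setup.py` and copies the single matching `.so` file into the source directory. The same helper applies to the EMD build.

```python
import glob
import os
import shutil

def copy_built_extension(src_dir, module_prefix):
    """Copy a compiled extension from src_dir/build/lib.*/ into src_dir.

    Hypothetical helper mirroring the manual `cp build/lib.../*.so .` step,
    but matching whatever platform/Python tag setuptools used.
    """
    pattern = os.path.join(src_dir, "build", "lib.*", module_prefix + "*.so")
    matches = glob.glob(pattern)
    if not matches:
        raise FileNotFoundError(f"no built extension matches {pattern}")
    if len(matches) > 1:
        raise RuntimeError(f"ambiguous build outputs for {pattern}: {matches}")
    dest = os.path.join(src_dir, os.path.basename(matches[0]))
    shutil.copy2(matches[0], dest)
    return dest
```

From the repository root, after running `python setup.py install` in each directory, call `copy_built_extension("lib/pointops", "pointops_cuda")` and `copy_built_extension("metrics/PyTorchEMD", "emd_cuda")`.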

Also build and install EMD (Earth Mover's Distance), which is used for evaluation, starting from the repository root:

```bash
cd metrics/PyTorchEMD/
python setup.py install
cp build/lib.linux-x86_64-cpython-39/emd_cuda.cpython-39-x86_64-linux-gnu.so .
```
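For intuition about the EMD metric: between two point clouds of equal size with uniform weights, EMD reduces to an optimal one-to-one matching. The reference sketch below solves that matching exactly with SciPy's `linear_sum_assignment` (roughly cubic in the number of points, so only practical for small clouds) rather than with the CUDA extension built above. Averaging the matched Euclidean distances is an assumed normalization, so values need not match the repository's evaluation code.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment
from scipy.spatial.distance import cdist

def earth_movers_distance(a, b):
    """Exact EMD between two equal-size point clouds of shape (N, 3).

    Finds the optimal one-to-one matching and returns the mean Euclidean
    distance between matched points.
    """
    if a.shape != b.shape:
        raise ValueError("this sketch assumes point clouds of equal size")
    cost = cdist(a, b)                        # (N, N) pairwise Euclidean distances
    rows, cols = linear_sum_assignment(cost)  # optimal assignment
    return cost[rows, cols].mean()

rng = np.random.default_rng(0)
a = rng.uniform(size=(256, 3))
b = a + np.array([0.1, 0.0, 0.0])   # pure translation by 0.1 along x
print(earth_movers_distance(a, b))  # approximately 0.1
```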

The code was tested on Ubuntu 22.04 LTS with a GeForce RTX 3090.

For generation, we use the ShapeNet point cloud dataset, which can be downloaded here.

Pretrained models can be downloaded here.
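With a trained noise-prediction network, a new shape is generated by running the DDPM reverse chain from Gaussian noise. The sketch below uses the standard ancestral sampling update with sigma_t^2 = beta_t. `zero_noise_predictor` is a hypothetical placeholder for a trained PTD network (loading a checkpoint is not shown), and the schedule defaults are assumptions, not PTD's settings.

```python
import numpy as np

def ddpm_sample(predict_noise, num_points=2048, num_steps=1000,
                beta_start=1e-4, beta_end=0.02, seed=0):
    """Ancestral DDPM sampling of an (num_points, 3) point cloud."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)
    rng = np.random.default_rng(seed)
    x = rng.standard_normal((num_points, 3))  # x_T ~ N(0, I)
    for t in reversed(range(num_steps)):
        eps = predict_noise(x, t)             # network's estimate of the noise in x_t
        mean = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps) / np.sqrt(alphas[t])
        if t > 0:
            x = mean + np.sqrt(betas[t]) * rng.standard_normal(x.shape)  # sigma_t^2 = beta_t
        else:
            x = mean                          # no noise is added at the final step
    return x

def zero_noise_predictor(x, t):
    """Hypothetical stand-in for a trained PTD noise-prediction network."""
    return np.zeros_like(x)

shape = ddpm_sample(zero_noise_predictor, num_points=512, num_steps=100)
print(shape.shape)  # (512, 3)
```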

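To train a model, set CATEGORY to one of `car`, `chair`, or `airplane`:

```bash
python train_generation.py --category CATEGORY
```

Refer to `train_generation.py` for the optimal training parameters.

To load an existing checkpoint (for example, a pretrained model), pass its path with `--model`:

```bash
python train_generation.py --category CATEGORY --model MODEL_PATH
```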
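To train every category in turn, the commands above can be scripted. The hypothetical launcher below uses only the two flags shown above (`--category` and `--model`). It prints the commands by default and runs them only when `dry_run=False`.

```python
import subprocess
import sys

CATEGORIES = ("car", "chair", "airplane")

def train_command(category, model_path=None):
    """Build the train_generation.py command line for one category."""
    if category not in CATEGORIES:
        raise ValueError(f"unknown category {category!r}; expected one of {CATEGORIES}")
    cmd = [sys.executable, "train_generation.py", "--category", category]
    if model_path is not None:
        cmd += ["--model", model_path]
    return cmd

def train_all(dry_run=True):
    """Print, and optionally run, one training job per category, sequentially."""
    for category in CATEGORIES:
        cmd = train_command(category)
        print(" ".join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)  # stop on the first failing job
```

Running the categories sequentially keeps a single GPU from being oversubscribed; launch them in parallel only if you have one GPU per job.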

Evaluation results of the trained PTD models:

| Category | Model | CD | EMD |
|---|---|---|---|
| airplane | airplane_2799.pth | 74.19 | 61.48 |
| chair | chair_1499.pth | 56.11 | 53.39 |
| car | car_2799.pth | 56.39 | 52.69 |
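The CD column is based on the Chamfer distance between point clouds. The sketch below shows only that underlying distance, computed with SciPy's `cKDTree` for nearest-neighbour queries. It does not reproduce the numbers in the table, which come from the repository's evaluation code. The squared-distance, mean-reduced convention used here is an assumption, since conventions vary (sum vs. mean, squared vs. unsquared).

```python
import numpy as np
from scipy.spatial import cKDTree

def chamfer_distance(a, b):
    """Symmetric Chamfer distance between point clouds a (N, 3) and b (M, 3).

    Mean squared distance from each point in a to its nearest neighbour in b,
    plus the same quantity from b to a.
    """
    d_ab, _ = cKDTree(b).query(a)  # nearest-neighbour distances a -> b
    d_ba, _ = cKDTree(a).query(b)  # nearest-neighbour distances b -> a
    return np.mean(d_ab ** 2) + np.mean(d_ba ** 2)

a = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]])
b = np.array([[0.0, 0.0, 0.0]])
print(chamfer_distance(a, b))  # 0.5
```

Unlike EMD, Chamfer distance does not require the two clouds to have the same number of points, and it only penalizes each point's nearest match, so it is cheaper but less sensitive to uneven point density.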

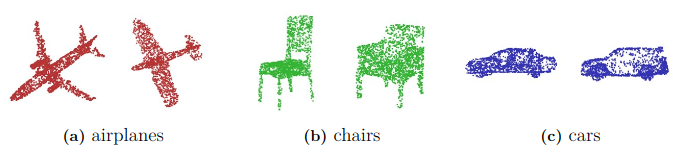

Visualization of several generated results:

DDPM for 3D point clouds: Point-Voxel Diffusion.

Local self-attention for 3D point clouds: Point Transformer.