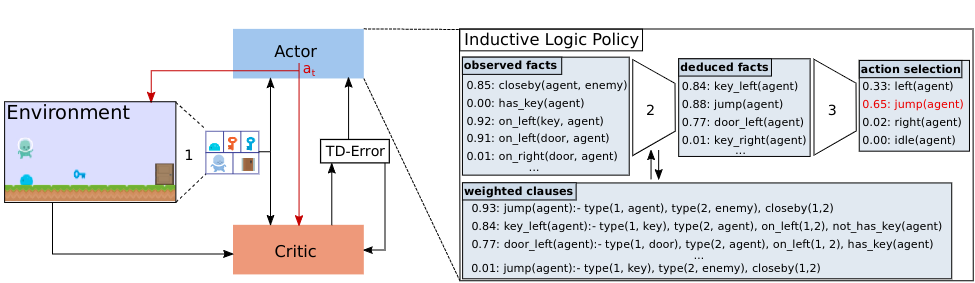

This is the implementation of Neurally gUided Differentiable loGic policiEs (NUDGE), a framework for logic RL agents based on differentiable forward reasoning with first-order logic (FOL).

- Install all requirements via `pip install -r requirements.txt`.
- On project level, simply run `python train.py` to start a new training run.

To train a new agent, run `python train.py`. The training process is controlled by the hyperparameters specified in `in/config/default.yaml`. You can specify a different configuration by providing the corresponding YAML file path as an argument, e.g., `python train.py -c in/config/my_config.yaml`. The `-c` argument is optional and defaults to `in/config/default.yaml`.

You can also override the game environment by providing the `-g` argument, e.g., `python train.py -g freeway`.
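As a rough illustration of how the two options described above could be parsed, here is a minimal sketch using `argparse`. It is not taken from `train.py`: the long option names `--config` and `--game` and the helper `build_parser` are assumptions made for this example, and only `-c`, `-g`, and the default path come from the text above.

```python
import argparse


def build_parser():
    """Hypothetical parser mirroring the documented -c and -g options."""
    parser = argparse.ArgumentParser(description="Start a training run (sketch).")
    # -c is optional and falls back to the default config file.
    parser.add_argument("-c", "--config", default="in/config/default.yaml",
                        help="path to a YAML file with hyperparameters")
    # -g overrides the game environment; None means "use the one from the config".
    parser.add_argument("-g", "--game", default=None,
                        help="name of the game environment, e.g. freeway")
    return parser


# Equivalent to running: python train.py -g freeway
args = build_parser().parse_args(["-g", "freeway"])
print(args.config, args.game)
```

With no `-c` given, `args.config` keeps the default path, which matches the behaviour described above.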

The hyperparameters are configured inside `in/config/default.yaml`, which is loaded by default. A description of all hyperparameters can be found in `train.py`.

Inside `in/envs/[env_name]/logic/[ruleset_name]/`, you find the logic rules that are used as a starting point for training. You can change them or create new rule sets. The rule set to use is specified with the hyperparameter `rules`.

If you want to use NUDGE within other projects, you can install NUDGE locally as follows:

- Inside `nsfr/`, run `python setup.py develop`.
- Inside `nudge/`, run `python setup.py develop`.

In case you want to use the Threefish or the Loot environment, you also need to install Qt 5 via `apt-get install qt5-default`.

To start the GUI for a game, run, e.g., `python3 play_gui.py -g seaquest`.

- `getout` contains a key, a door, and one enemy.
- `getoutplus` has one more enemy.

- `threefish` contains one bigger fish and one smaller fish.
- `threefishcolor` contains one red fish and one green fish. The agent needs to avoid the red fish and eat the green fish.

- `loot` contains 2 pairs of key and door.
- `lootcolor` contains 2 pairs of key and door with different colors than in `loot`.
- `lootplus` contains 3 pairs of key and door.

You add a new environment inside `in/envs/[new_env_name]/`. There, you need to define a `NudgeEnv` class that wraps the original environment in order to do

- logic state extraction: translates raw env states into logic representations
- valuation: Each relation (like `closeby`) has a corresponding valuation function which maps the (logic) game state to a probability that the relation is true. Each valuation function is defined as a simple Python function. The function's name must match the name of the corresponding relation.
- action mapping: action predicates predicted by the agent need to be mapped to the actual env actions

See the `freeway` env for an example of how this is done.
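The three responsibilities listed above can be sketched in plain Python as follows. This is only an illustration under stated assumptions, not the actual NUDGE interface: the class name `NudgeEnvSketch`, the method names, the action ids, the `(x, y)` state layout, and the sigmoid-shaped `closeby` are all hypothetical, and the real framework may well operate on tensors instead of tuples.

```python
import math


def closeby(player, obj, threshold=2.0):
    """Hypothetical valuation function for the relation `closeby`.

    `player` and `obj` are assumed to be (x, y) positions. Returns a value
    in (0, 1): 0.5 at distance == threshold, larger when closer.
    """
    dist = math.hypot(player[0] - obj[0], player[1] - obj[1])
    return 1.0 / (1.0 + math.exp(dist - threshold))


class NudgeEnvSketch:
    """Illustrative wrapper; names and structure are assumptions."""

    # Action mapping: action predicate -> raw env action id (ids are made up).
    pred2action = {"noop": 0, "up": 1, "down": 2}

    def extract_logic_state(self, raw_state):
        # Logic state extraction: turn a raw state (assumed here to be a dict
        # of object name -> (x, y)) into a sorted list of object facts.
        return [(name, float(x), float(y))
                for name, (x, y) in sorted(raw_state.items())]

    def map_action(self, predicate):
        # Translate a predicted action predicate into a raw env action.
        return self.pred2action[predicate]


env = NudgeEnvSketch()
logic_state = env.extract_logic_state({"player": (0, 0), "car": (3, 4)})
print(logic_state, env.map_action("up"), closeby((0, 0), (3, 4)))
```

Note that the valuation function carries the same name as the relation it evaluates, as required above.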

TODO Using Beam Search to find a set of rules

With scoring:

`python3 beam_search.py -m getout -r getout_root -t 3 -n 8 --scoring True -d getout.json`

Without scoring:

`python3 beam_search.py -m threefish -r threefishm_root -t 3 -n 8`

- `-t`: Number of rule expansion steps during clause generation.
- `-n`: The size of the beam.
- `--scoring`: Whether to score the searched rules. Scoring requires a dataset of state information.
- `-d`: The name of the dataset to be used for scoring.
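To make the roles of the expansion steps (`-t`) and the beam size (`-n`) concrete, here is a generic beam search sketch over toy rules. It does not reproduce `beam_search.py`: the functions `beam_search`, `expand`, and `score`, the atom strings, and the scoring criterion are all invented for this illustration.

```python
def beam_search(root, expand, score, steps, beam_size):
    """Generic beam search: expand every rule in the beam, keep the best ones."""
    beam = [root]
    for _ in range(steps):
        # Generate all refinements and drop duplicates while keeping order.
        candidates = list(dict.fromkeys(c for rule in beam for c in expand(rule)))
        if not candidates:
            break
        # Keep only the `beam_size` highest-scoring candidates.
        candidates.sort(key=score, reverse=True)
        beam = candidates[:beam_size]
    return beam


# Toy setting: a rule is a sorted tuple of body atoms (all names hypothetical).
ATOMS = ["closeby(P,K)", "have_key(P)", "on_left(K,P)"]
USEFUL = {"closeby(P,K)", "have_key(P)"}


def expand(rule):
    # Refine a rule by adding one body atom it does not contain yet.
    return [tuple(sorted(rule + (a,))) for a in ATOMS if a not in rule]


def score(rule):
    # Stand-in for dataset-based scoring: count the "useful" atoms.
    return sum(atom in USEFUL for atom in rule)


best = beam_search(root=(), expand=expand, score=score, steps=2, beam_size=2)
print(best[0])
```

A larger beam keeps more candidate rules alive per step at a higher cost, while more steps allow longer rule bodies.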