Project Page | Paper | arXiv | Bibtex

Katherine Xu

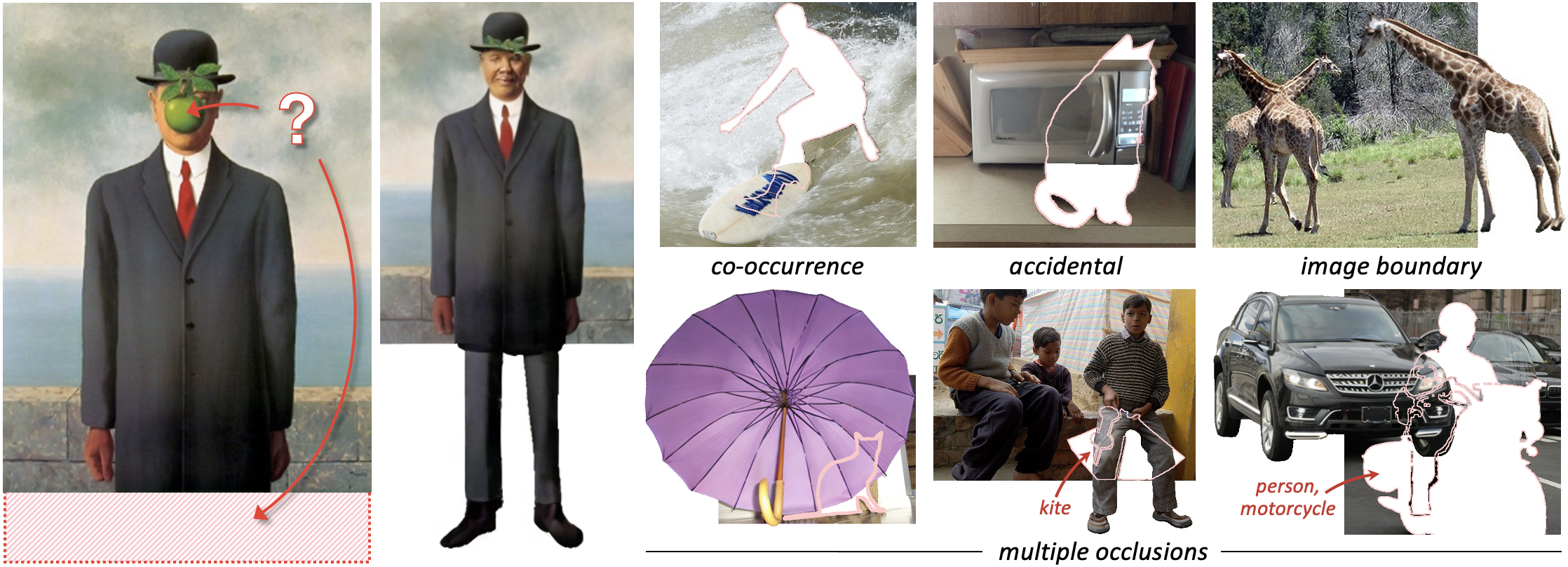

Our method can recover the hidden pixels of objects in diverse images. Occluders may be co-occurring (a person on a surfboard), accidental (a cat in front of a microwave), the image boundary (giraffe), or a combination of these scenarios.

The pink outline indicates an occluder object.

We use pretrained diffusion inpainting models, and no additional training is required!

- Stay tuned for our code release!

- Python 3.10

- Docker

- Clone this `amodal` repository, and run `cd Grounded-Segment-Anything`.
- In the Dockerfile, change all instances of `/home/appuser` to your path for the `amodal` repository.
- Run `make build-image`.
- Start and attach to a Docker container from the image `gsa:v0`. Then, navigate to the `amodal` repository.
- Run `./install.sh` to finish setup and download model checkpoints.
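The Dockerfile edit in the setup steps can be scripted rather than done by hand. The following is a minimal sketch, not something the repository provides: `retarget_dockerfile` is a hypothetical helper, and it assumes the path appears literally as `/home/appuser` and that your replacement path contains no `|`, `&`, or backslash characters (which `sed` treats specially).

```shell
# Hypothetical helper (not part of the amodal repository): replace every
# occurrence of /home/appuser in a Dockerfile with your own path.
retarget_dockerfile() {
    # $1: path to the Dockerfile to edit
    # $2: your path for the amodal repository
    tmp_file="$(mktemp)" || return 1
    # Write to a temporary file first, then copy the result back, so the
    # command behaves the same with GNU and BSD sed and keeps file permissions.
    if sed "s|/home/appuser|$2|g" "$1" > "$tmp_file"; then
        cat "$tmp_file" > "$1"
    fi
    rm -f "$tmp_file"
}

# Example usage from inside Grounded-Segment-Anything (placeholder path):
#   retarget_dockerfile Dockerfile /path/to/amodal
#   make build-image
```

It is worth checking the result with `grep -n appuser Dockerfile` before building the image; no matches should remain.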

- Run `./download_dataset.sh` to download the COCO dataset.

- In `./main.sh`, modify `input_dir` to your folder path for the images.
- Run `./main.sh`. You may need to use `chmod` to change the file permissions first.
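The permission fix mentioned above can be sketched as follows. `ensure_executable` is a hypothetical helper, not part of the repository; it adds the execute bit only when the script lacks it, so it is safe to run repeatedly.

```shell
# Hypothetical helper: make a script executable if it is not already.
ensure_executable() {
    # $1: path to the script
    [ -x "$1" ] || chmod +x "$1"
}

# Example usage from the root of the amodal repository:
#   ensure_executable ./main.sh
#   ./main.sh
```

The same helper can be applied to `./install.sh` and `./download_dataset.sh` if they fail with a "Permission denied" error.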

If you find our work useful, please cite our paper:

    @inproceedings{xu2024amodal,
      title={Amodal completion via progressive mixed context diffusion},
      author={Xu, Katherine and Zhang, Lingzhi and Shi, Jianbo},
      booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
      pages={9099--9109},
      year={2024}
    }