Speech recognition using Google's TensorFlow deep learning framework and sequence-to-sequence neural networks.

Replaces caffe-speech-recognition; see that project for some background.

The goal is to create a decent standalone speech recognizer for Linux etc. Some people say we have the models but not enough training data. We disagree: there is plenty of training data (100GB and 21GB on openslr.org, synthetic text-to-speech snippets, movies with transcripts, Gutenberg, YouTube with captions, etc.); we just need a simple yet powerful model. It's only a question of time...

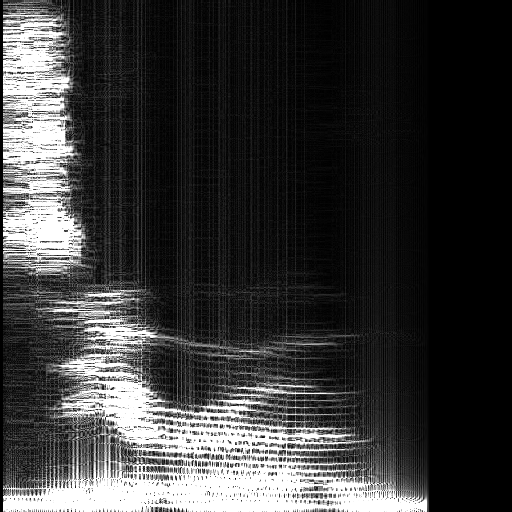

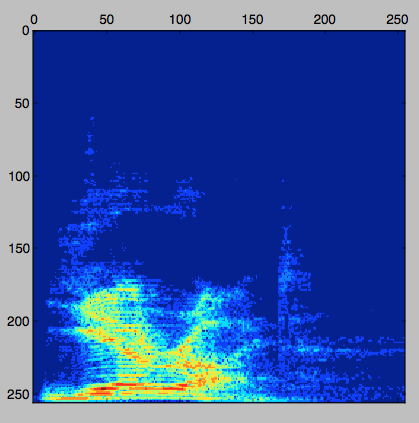

Sample spectrogram: Karen uttering 'zero' at 160 words per minute.
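A spectrogram like the one above is a time-frequency picture of the audio. As a rough illustration of how such an input can be computed, here is a minimal numpy sketch of a log-magnitude short-time Fourier transform. The 16 kHz sample rate, 25 ms window, 10 ms hop and Hann window are assumptions, not necessarily the settings used by this project's scripts, and the `spectrogram` function name is hypothetical.

```python
import numpy as np

def spectrogram(signal, frame_len=400, hop=160):
    """Log-magnitude spectrogram via a short-time Fourier transform.

    Assumed defaults: frame_len=400 and hop=160 correspond to 25 ms
    windows with a 10 ms step at a 16 kHz sample rate.
    """
    signal = np.asarray(signal, dtype=np.float64)
    if len(signal) < frame_len:
        # Zero-pad short clips so that at least one frame exists
        signal = np.pad(signal, (0, frame_len - len(signal)))
    n_frames = 1 + (len(signal) - frame_len) // hop
    window = np.hanning(frame_len)
    # Stack overlapping, windowed frames into a (n_frames, frame_len) matrix
    frames = np.stack([signal[i * hop:i * hop + frame_len] * window
                       for i in range(n_frames)])
    # Real FFT of each frame gives frame_len // 2 + 1 frequency bins
    magnitude = np.abs(np.fft.rfft(frames, axis=1))
    # Log compression; the small epsilon avoids log(0) on silence
    return np.log(magnitude + 1e-10)

if __name__ == "__main__":
    sr = 16000
    t = np.arange(sr) / sr                # one second of audio
    tone = np.sin(2 * np.pi * 440 * t)    # synthetic 440 Hz tone as a stand-in for speech
    spec = spectrogram(tone)
    print(spec.shape)                     # (98, 201): frames x frequency bins
```

Each row is one 10 ms step in time and each column one 40 Hz frequency bin (16000 / 400), so the 440 Hz tone shows up as a bright line in column 11.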

Toy examples:

./number_classifier_tflearn.py

./speaker_classifier_tflearn.py

Some less trivial architectures:

./densenet_layer.py

Later:

./train.sh

./record.py

We are in the process of tackling this project in earnest. If you want to join the party, just drop us an email at info@pannous.com.

Update: Nervana demonstrated that it is possible for 'independents' to build state-of-the-art speech recognizers.

Update: Mozilla is working on DeepSpeech and just achieved a 0% error rate ... on the training set ;) Free Speech is in good hands.

### Fun tasks for newcomers

- Watch the video: https://www.youtube.com/watch?v=u9FPqkuoEJ8

- Understand and correct the corresponding code: lstm-tflearn.py

- Data augmentation: modulate the data on the fly, e.g. increase the speech rate, add background noise, alter the pitch, etc.
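The data-augmentation task above could start from something like the following numpy sketch. It assumes mono floating-point clips, interprets speeding up the speech as resampling by linear interpolation (which, played back at the original rate, also raises the pitch), uses white Gaussian noise as a stand-in for real background recordings, and picks the ranges (0.9 to 1.1 speed, 10 to 30 dB SNR) arbitrarily. All function names are hypothetical and not taken from this project's code; independent pitch shifting would need a more elaborate method and is not shown.

```python
import numpy as np

def add_noise(signal, snr_db, rng):
    """Mix in white Gaussian noise at the requested signal-to-noise ratio (dB)."""
    signal_power = np.mean(signal ** 2)
    noise_power = signal_power / (10 ** (snr_db / 10))
    noise = rng.normal(0.0, np.sqrt(noise_power), size=signal.shape)
    return signal + noise

def change_speed(signal, factor):
    """Resample by linear interpolation; factor > 1 speeds the clip up.

    At the original sample rate the result is shorter and its pitch is
    shifted by the same factor.
    """
    n_out = int(round(len(signal) / factor))
    old_positions = np.arange(len(signal))
    new_positions = np.linspace(0, len(signal) - 1, n_out)
    return np.interp(new_positions, old_positions, signal)

def augment(signal, rng):
    """Apply a random speed change and a random noise level to one clip."""
    speed = rng.uniform(0.9, 1.1)
    snr_db = rng.uniform(10.0, 30.0)
    return add_noise(change_speed(signal, speed), snr_db, rng)

def augmented_batches(clips, batch_size, seed=0):
    """Endlessly yield freshly augmented batches, so no two epochs see identical data."""
    rng = np.random.default_rng(seed)
    while True:
        idx = rng.choice(len(clips), size=batch_size)
        yield [augment(clips[i], rng) for i in idx]
```

Because augmentation happens inside the generator rather than as a preprocessing pass, the stored dataset stays small while the network sees a different variant of each clip every time it is drawn.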

### Extensions

Extensions to current TensorFlow which are probably needed:

- WarpCTC on the GPU (see issue)

- Incremental collaborative snapshots ('P2P learning') !

- Modular graphs/models + persistence
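WarpCTC, mentioned in the list above, is Baidu's fast implementation of the Connectionist Temporal Classification (CTC) loss. To make clear what such a kernel computes, here is a slow but readable numpy reference of the CTC forward algorithm in log space. It is not WarpCTC's code and not something this project ships; the `ctc_loss` name is hypothetical, class 0 is assumed to be the blank, and the nested Python loops make it practical only for checking results on small inputs.

```python
import numpy as np

def ctc_loss(log_probs, labels, blank=0):
    """Negative log-likelihood of `labels` under CTC, via the forward algorithm.

    log_probs: (T, C) array of per-frame log-softmax outputs.
    labels: sequence of target class ids without blanks, e.g. [3, 1, 3].
    """
    T = log_probs.shape[0]
    # Extended label sequence with blanks interleaved: b l1 b l2 ... b
    ext = [blank]
    for label in labels:
        ext += [label, blank]
    S = len(ext)
    alpha = np.full((T, S), -np.inf)
    # A valid path starts with either the leading blank or the first label
    alpha[0, 0] = log_probs[0, blank]
    if S > 1:
        alpha[0, 1] = log_probs[0, ext[1]]
    for t in range(1, T):
        for s in range(S):
            a = alpha[t - 1, s]                              # stay on the same symbol
            if s >= 1:
                a = np.logaddexp(a, alpha[t - 1, s - 1])     # advance by one
            # Skipping a blank is only allowed between two different labels
            if s >= 2 and ext[s] != blank and ext[s] != ext[s - 2]:
                a = np.logaddexp(a, alpha[t - 1, s - 2])
            alpha[t, s] = a + log_probs[t, ext[s]]
    # A valid path ends on the last label or on the trailing blank
    total = alpha[T - 1, S - 1]
    if S > 1:
        total = np.logaddexp(total, alpha[T - 1, S - 2])
    return -total
```

TensorFlow also ships a built-in CTC loss (`tf.nn.ctc_loss`); a reference like this is mainly useful for unit-testing a GPU implementation against cases small enough to verify by enumerating every alignment.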

Even though this project is far from finished we hope it gives you some starting points.

Looking for a TensorFlow collaboration / consultant / deep learning contractor? Reach out to info@pannous.com.