Important

Disclaimer: The code has been tested on:

- Ubuntu 22.04.2 LTS running on a Lenovo Legion 5 Pro with twenty 12th Gen Intel® Core™ i7-12700H and an NVIDIA GeForce RTX 3060.
- MacOS Sonoma 14.3.1 running on a MacBook Pro M1 (2020).

If you are using another operating system or different hardware and you can't load the models, please take a look at the official Llama Cpp Python GitHub issues or at the official CTransformers GitHub issues.

Warning

Note: the large language model sometimes generates hallucinations or false information.

- Introduction

- Prerequisites

- Bootstrap Environment

- Using the Open-Source Models Locally

- Supported Response Synthesis strategies

- Example Data

- Build the memory index

- Run the Chatbot

- Run the RAG Chatbot

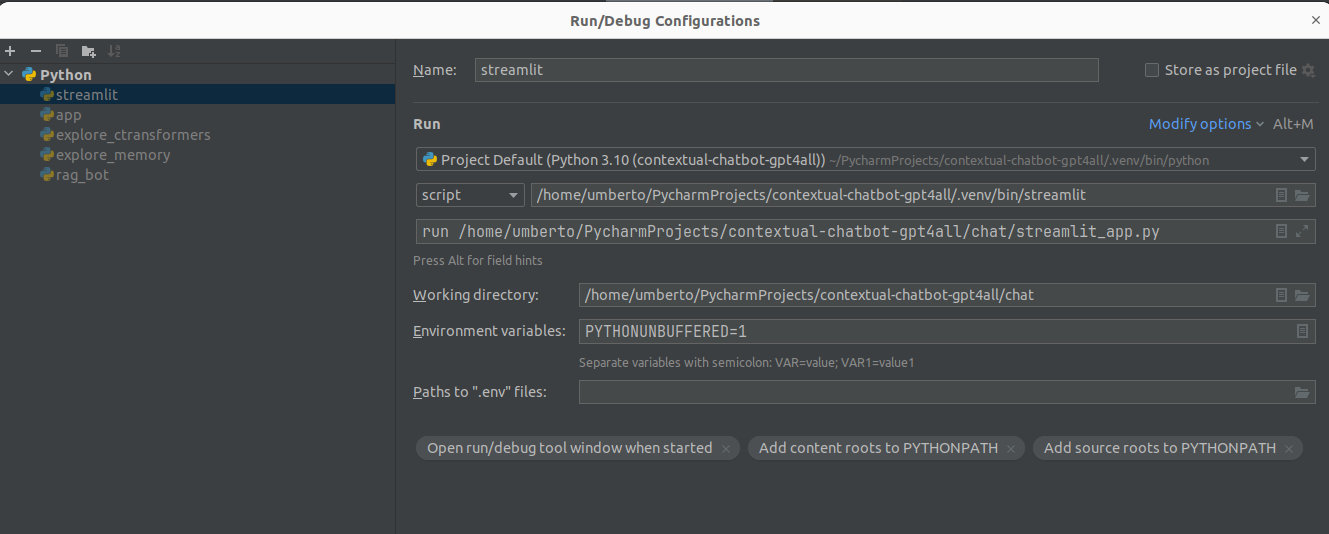

- How to debug the Streamlit app on Pycharm

- References

This project combines the power of llama.cpp, CTransformers, LangChain (only used for document chunking and querying the Vector Database, and we plan to eliminate it entirely), Chroma and Streamlit to build:

- a Conversation-aware Chatbot (ChatGPT like experience).

- a RAG (Retrieval-augmented generation) ChatBot.

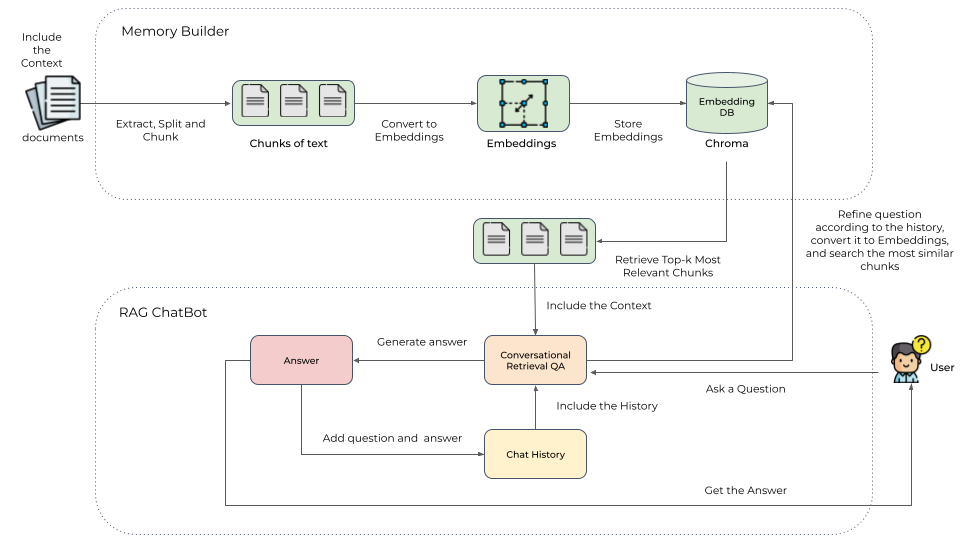

The RAG Chatbot works by taking a collection of Markdown files as input and, when asked a question, provides the corresponding answer based on the context provided by those files.

The Memory Builder component of the project loads Markdown pages from the docs folder.

It then divides these pages into smaller sections, calculates the embeddings (a numerical representation) of these sections with the all-MiniLM-L6-v2 sentence-transformer, and saves them in an embedding database called Chroma for later use.
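The splitting step can be pictured with a minimal sketch. It assumes a plain character-based splitter with a fixed overlap; the function name `split_into_chunks` and the `chunk_overlap` parameter are hypothetical, and the project itself delegates chunking to LangChain, which may treat Markdown structure differently.

```python
def split_into_chunks(text: str, chunk_size: int = 1000, chunk_overlap: int = 100) -> list[str]:
    """Split text into chunks of at most chunk_size characters.

    Consecutive chunks share chunk_overlap characters, so a sentence cut at a
    boundary still appears whole in one of the two neighbouring chunks.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")

    step = chunk_size - chunk_overlap  # how far the window moves each time
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last window already reached the end of the text
    return chunks


if __name__ == "__main__":
    page = "# Title\n\n" + "Some handbook text. " * 200
    chunks = split_into_chunks(page, chunk_size=1000, chunk_overlap=100)
    print(len(chunks), [len(c) for c in chunks])
```

Each chunk would then be embedded and stored together with its text, so that retrieval can return the original section.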

When a user asks a question, the RAG ChatBot retrieves the most relevant sections from the embedding database. Because the original question is not always the best query for retrieval, we first prompt an LLM to rewrite the question and then conduct retrieval-augmented reading. The most relevant sections are then used as context to generate the final answer using a local large language model (LLM). Additionally, the chatbot is designed to remember previous interactions. It saves the chat history and considers the relevant context from previous conversations to provide more accurate answers.
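The flow described above (rewrite, retrieve, read, then remember) can be sketched as follows. This is an illustration under assumptions, not the project's code: `rewrite_retrieve_read`, the `LLM` and `Retriever` callables and the prompt wording are all hypothetical, and any model or vector store with these shapes could be plugged in.

```python
from typing import Callable

# Hypothetical interfaces: any callables with these shapes can be plugged in.
LLM = Callable[[str], str]                   # prompt -> completion
Retriever = Callable[[str, int], list[str]]  # (query, k) -> top-k sections


def rewrite_retrieve_read(
    question: str,
    chat_history: list[tuple[str, str]],
    llm: LLM,
    retriever: Retriever,
    k: int = 2,
) -> str:
    history = "\n".join(f"User: {q}\nAssistant: {a}" for q, a in chat_history)

    # 1) Rewrite: turn the follow-up into a standalone, retrieval-friendly query.
    standalone = llm(
        f"Chat history:\n{history}\n\n"
        f"Rewrite this follow-up as a standalone question: {question}"
    ).strip()

    # 2) Retrieve: fetch the k most relevant sections for the rewritten query.
    sections = retriever(standalone, k)

    # 3) Read: answer using only the retrieved sections as context.
    context = "\n---\n".join(sections)
    answer = llm(f"Context:\n{context}\n\nQuestion: {standalone}\nAnswer:")

    # Remember the exchange so later questions can refer back to it.
    chat_history.append((question, answer))
    return answer


if __name__ == "__main__":
    def echo_llm(prompt: str) -> str:  # stand-in for a local model
        return prompt.splitlines()[-1]

    def toy_retriever(query: str, k: int) -> list[str]:  # stand-in for Chroma
        return ["Section about holidays.", "Section about expenses."][:k]

    history: list[tuple[str, str]] = []
    print(rewrite_retrieve_read("How many holidays?", history, echo_llm, toy_retriever))
```

Keeping the model and the retriever behind plain callables makes the flow easy to test with stand-ins before wiring in a real LLM.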

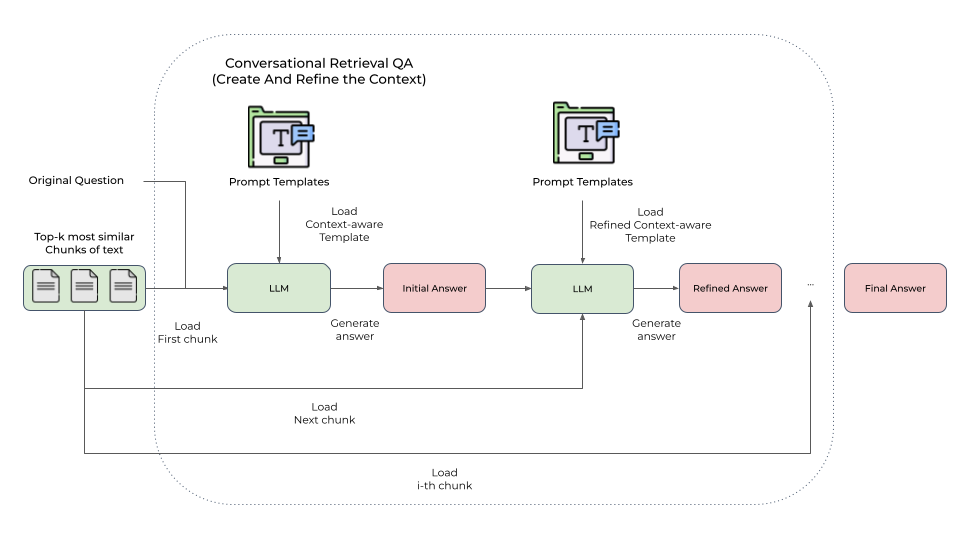

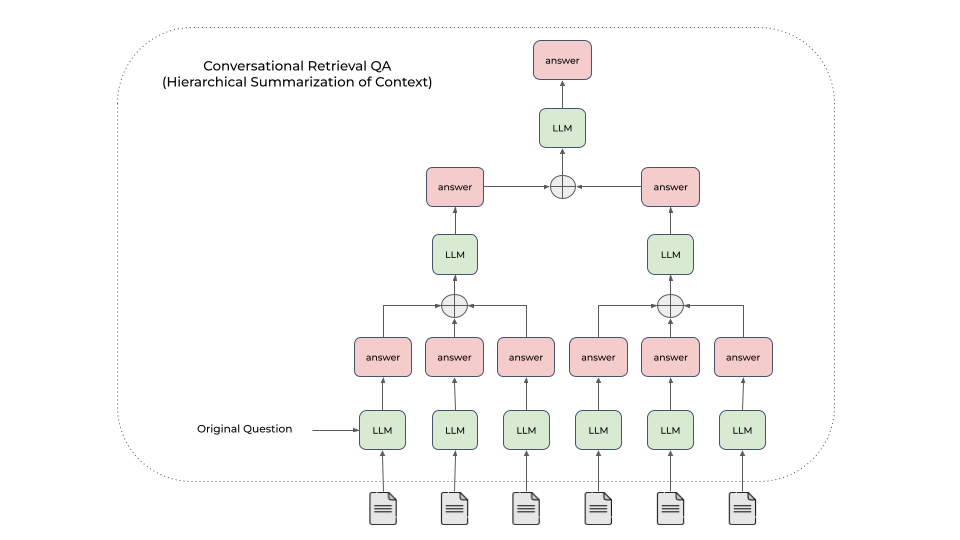

To deal with context overflows, we implemented three approaches:

- Create And Refine the Context: synthesize a response sequentially through all retrieved contents.
- Hierarchical Summarization of Context: generate an answer for each relevant section independently, and then hierarchically combine the answers.
- Async Hierarchical Summarization of Context: a parallelized version of the Hierarchical Summarization of Context, which leads to big speedups in response synthesis.
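The three approaches can be compared side by side in a minimal sketch. The function names, the prompt wording and the `fan_in` parameter are assumptions made for illustration; the `llm` argument is any callable that maps a prompt to a completion, and the project's own implementation may differ.

```python
import asyncio
from typing import Awaitable, Callable

LLM = Callable[[str], str]
AsyncLLM = Callable[[str], Awaitable[str]]


def _leaf_prompt(question: str, section: str) -> str:
    return f"Context:\n{section}\n\nQuestion: {question}\nAnswer:"


def _combine_prompt(question: str, answers: list[str]) -> str:
    joined = "\n---\n".join(answers)
    return f"Partial answers:\n{joined}\n\nCombine them into one answer to: {question}"


def _groups(items: list[str], fan_in: int) -> list[list[str]]:
    if fan_in < 2:
        raise ValueError("fan_in must be at least 2, otherwise the tree never shrinks")
    return [items[i:i + fan_in] for i in range(0, len(items), fan_in)]


def create_and_refine(question: str, sections: list[str], llm: LLM) -> str:
    """Answer from the first section, then refine the answer with each next one."""
    answer = ""
    for i, section in enumerate(sections):
        if i == 0:
            prompt = _leaf_prompt(question, section)
        else:
            prompt = (f"Existing answer:\n{answer}\n\nNew context:\n{section}\n\n"
                      f"Refine the existing answer to: {question}")
        answer = llm(prompt)
    return answer


def tree_summarization(question: str, sections: list[str], llm: LLM, fan_in: int = 2) -> str:
    """Answer each section independently, then merge answers level by level."""
    answers = [llm(_leaf_prompt(question, s)) for s in sections]
    while len(answers) > 1:
        answers = [llm(_combine_prompt(question, g)) for g in _groups(answers, fan_in)]
    return answers[0] if answers else ""


async def async_tree_summarization(
    question: str, sections: list[str], allm: AsyncLLM, fan_in: int = 2
) -> str:
    """Same tree, but all calls within one level run concurrently."""
    answers = list(await asyncio.gather(*(allm(_leaf_prompt(question, s)) for s in sections)))
    while len(answers) > 1:
        answers = list(await asyncio.gather(
            *(allm(_combine_prompt(question, g)) for g in _groups(answers, fan_in))
        ))
    return answers[0] if answers else ""
```

Create and Refine needs one call per section, each waiting on the previous one, whereas the tree variants issue calls that are independent within a level, which is what makes the async version parallelizable.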

- Python 3.10+

- GPU supporting CUDA 12 and up

- Poetry 1.7.0

Install Poetry with the official installer by following this link.

You must use the currently adopted version of Poetry defined here.

If you already have Poetry installed and it is not the right version, you can downgrade (or upgrade) it with:

`poetry self update <version>`

To install the dependencies easily, we created a Makefile.

Important

Run Setup as your init command (or after Clean).

- Check: `make check`
  - Use it to check that `which pip3` and `which python3` point to the right path.
- Setup:
  - Setup with NVIDIA CUDA acceleration: `make setup_cuda`
    - Creates an environment and installs all dependencies with NVIDIA CUDA acceleration.
  - Setup with Metal GPU acceleration: `make setup_metal`
    - Creates an environment and installs all dependencies with Metal GPU acceleration, for macOS systems only.
- Update: `make update`
  - Updates the environment and installs all updated dependencies.
- Tidy up the code: `make tidy`
  - Runs Ruff check and format.
- Clean: `make clean`
  - Removes the environment and all cached files.
- Test: `make test`
  - Runs all tests.
  - Using pytest

We utilize two open-source libraries, llama.cpp and CTransformers, which allow us to work efficiently with transformer-based models. Running an LLM on a local PC would normally be impractical because of the large number of parameters (~7 billion). These libraries make it possible to run the models on either a CPU or a GPU. Additionally, we use quantization with 4-bit precision to reduce the number of bits required to represent the model weights. The quantized models are stored in GGML/GGUF format.
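To make the effect of 4-bit precision concrete, here is a small arithmetic sketch plus a toy quantizer. It assumes a simplified "absmax" scheme with one shared scale per block; this is not the exact block layout used by the GGML/GGUF formats, and the function names are hypothetical.

```python
def model_size_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate size of the weights alone, in gigabytes (10**9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


def quantize_4bit(block: list[float]) -> tuple[float, list[int]]:
    """Map floats to integer codes in [-7, 7] plus one shared scale."""
    absmax = max((abs(x) for x in block), default=0.0)
    scale = absmax / 7 if absmax > 0 else 1.0
    return scale, [round(x / scale) for x in block]


def dequantize_4bit(scale: float, codes: list[int]) -> list[float]:
    """Recover approximate floats; the error per value is at most about scale / 2."""
    return [scale * c for c in codes]


if __name__ == "__main__":
    # A 7-billion-parameter model: 16-bit weights vs 4-bit weights.
    print(model_size_gb(7e9, 16), "GB at 16-bit")  # 14.0
    print(model_size_gb(7e9, 4), "GB at 4-bit")    # 3.5

    scale, codes = quantize_4bit([7.0, -3.0, 0.0, 1.2])
    print(scale, codes, dequantize_4bit(scale, codes))
```

The size estimate ignores the per-block scales and any non-quantized tensors, so real quantized files are somewhat larger than the raw figure.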

| 🤖 Model | Supported | Model Size | Notes and link to the model |
|---|---|---|---|
| `llama-3` Meta Llama 3 Instruct | ✅ | 8B | Less accurate than OpenChat - link |
| `openchat-3.6` Recommended - OpenChat 3.6 | ✅ | 8B | link |
| `openchat-3.5` - OpenChat 3.5 | ✅ | 7B | link |
| `starling` Starling Beta | ✅ | 7B | Is trained from Openchat-3.5-0106. It's recommended if you prefer more verbosity over OpenChat - link |
| `neural-beagle` NeuralBeagle14 | ✅ | 7B | link |
| `dolphin` Dolphin 2.6 Mistral DPO Laser | ✅ | 7B | link |
| `zephyr` Zephyr Beta | ✅ | 7B | link |
| `mistral` Mistral OpenOrca | ✅ | 7B | link |
| `phi-3` Phi-3 Mini 4K Instruct | ✅ | 3.8B | link |
| `stablelm-zephyr` StableLM Zephyr OpenOrca | ✅ | 3B | link |

| ✨ Response Synthesis strategy | Supported | Notes |
|---|---|---|
| `create_and_refine` Create and Refine | ✅ | |
| `tree_summarization` Tree Summarization | ✅ | |
| `async_tree_summarization` - Recommended - Async Tree Summarization | ✅ | |

You could download some Markdown pages from the Blendle Employee Handbook and put them under `docs`.
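As a quick sanity check that the pages landed in the right place, a small script can list what a loader would see. This is only a sketch: `load_markdown_pages` is a hypothetical helper, not part of the project, and it assumes the files use the `.md` extension and UTF-8 encoding.

```python
from pathlib import Path


def load_markdown_pages(docs_dir: str = "docs") -> dict[str, str]:
    """Return {relative path: file content} for every .md file under docs_dir."""
    root = Path(docs_dir)
    pages = {}
    for path in sorted(root.rglob("*.md")):  # also walks sub-folders
        pages[path.relative_to(root).as_posix()] = path.read_text(encoding="utf-8")
    return pages


if __name__ == "__main__":
    for name, text in load_markdown_pages("docs").items():
        print(f"{name}: {len(text)} characters")
```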

To build the memory index, run:

`python chatbot/memory_builder.py --chunk-size 1000`

To interact with the Chatbot through a GUI, type:

`streamlit run chatbot/chatbot_app.py -- --model openchat-3.6 --max-new-tokens 1024`

To interact with the RAG Chatbot through a GUI, type:

`streamlit run chatbot/rag_chatbot_app.py -- --model openchat-3.6 --k 2 --synthesis-strategy async-tree-summarization`

- LLMs:

- LLM integration and Modules:
- Embeddings:
  - all-MiniLM-L6-v2
    - This is a `sentence-transformers` model: It maps sentences & paragraphs to a 384 dimensional dense vector space and can be used for tasks like clustering or semantic search.
- Vector Databases:
  - Chroma
  - Food Discovery with Qdrant
- Indexing algorithms:
  - There are many algorithms for building indexes to optimize vector search. Most vector databases implement `Hierarchical Navigable Small World (HNSW)` and/or `Inverted File Index (IVF)`. Here are some great articles explaining them, and the trade-off between `speed`, `memory` and `quality`:
    - Nearest Neighbor Indexes for Similarity Search
    - Hierarchical Navigable Small World (HNSW)
    - From NVIDIA - Accelerating Vector Search: Using GPU-Powered Indexes with RAPIDS RAFT
    - From NVIDIA - Accelerating Vector Search: Fine-Tuning GPU Index Algorithms
    - PS: Flat indexes (i.e. no optimisation) can be used to maintain 100% recall and precision, at the expense of speed.
- Retrieval Augmented Generation (RAG):
  - Rewrite-Retrieve-Read
    - Because the original query can not be always optimal to retrieve for the LLM, especially in the real world, we first prompt an LLM to rewrite the queries, then conduct retrieval-augmented reading.
  - Rerank
  - Conversational awareness
  - Summarization: Improving RAG quality in LLM apps while minimizing vector storage costs
  - RAG is Dead, Again?
- Chatbot Development:
- Text Processing and Cleaning:
- Open Source Repositories:
  - llama.cpp
  - llama-cpp-python
  - CTransformers
  - GPT4All
  - pyllamacpp
  - chroma
- Inspirational repos:
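To illustrate the note on flat indexes in the Indexing algorithms entry above: a flat index simply compares the query with every stored vector, which is exact but linear in the number of vectors. The sketch below assumes cosine similarity as the metric and uses tiny 2-dimensional vectors in place of 384-dimensional embeddings; `flat_search` is a hypothetical name, not a Chroma API.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # convention for zero vectors, which have no direction
    return dot / (norm_a * norm_b)


def flat_search(query: list[float], vectors: list[list[float]], k: int = 2) -> list[tuple[int, float]]:
    """Exact top-k search: score every vector, then keep the k best.

    Returns (index, similarity) pairs, best first. Cost grows linearly with
    the number of stored vectors, which is the price of perfect recall.
    """
    scored = [(i, cosine_similarity(query, v)) for i, v in enumerate(vectors)]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:max(k, 0)]


if __name__ == "__main__":
    stored = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    print(flat_search([1.0, 0.1], stored, k=2))
```

Approximate indexes such as HNSW or IVF avoid scoring every vector, trading a little recall for much lower latency on large collections.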