Meta-Query-Net: Resolving Purity-Informativeness Dilemma in Open-set Active Learning (NeurIPS 2022, PDF)

by Dongmin Park1, Yooju Shin1, Jihwan Bang2,3, Youngjun Lee, Hwanjun Song2, Jae-Gil Lee1

1 KAIST, 2 NAVER AI Lab, 3 NAVER CLOVA

- Oct 19, 2022: Our work is publicly available on arXiv.

- Dec 28, 2022: Our work is published in NeurIPS 2022.

- CIFAR10

python3 main_split.py --epochs 200 --epochs-csi 1000 --epochs-mqnet 100 --datset 'CIFAR10' --n-class 10 --n-query 500 \
--method 'MQNet' --mqnet-mode 'LL' --ssl-save True --ood-rate 0.6

- CIFAR100

python3 main_split.py --epochs 200 --epochs-csi 1000 --epochs-mqnet 100 --datset 'CIFAR100' --n-class 100 --n-query 500 \
--method 'MQNet' --mqnet-mode 'LL' --ssl-save True --ood-rate 0.6

- ImageNet50

python3 main_split.py --epochs 200 --epochs-csi 1000 --epochs-mqnet 100 --datset 'ImageNet50' --n-class 50 --n-query 1000 \
--method 'MQNet' --mqnet-mode 'LL' --ssl-save True --ood-rate 0.6

- To speed up experiments, we provide CSI pre-trained models for the split experiment below

| Noise Ratio | Architecture | CIFAR10 | CIFAR100 |
|---|---|---|---|
| 60% | ResNet18 | weights | weights |

- CIFAR10, CIFAR100, ImageNet50

python3 main_split.py --epochs 200 --datset $dataset --n-query $num_query --method $al_algorithm --ood-rate $ood_rate

torch: +1.3.0

torchvision: 1.7.0

torchlars: 0.1.2

prefetch_generator: 1.0.1

submodlib: 1.1.5

diffdist: 0.1

scikit-learn: 0.24.2

scipy: 1.5.4

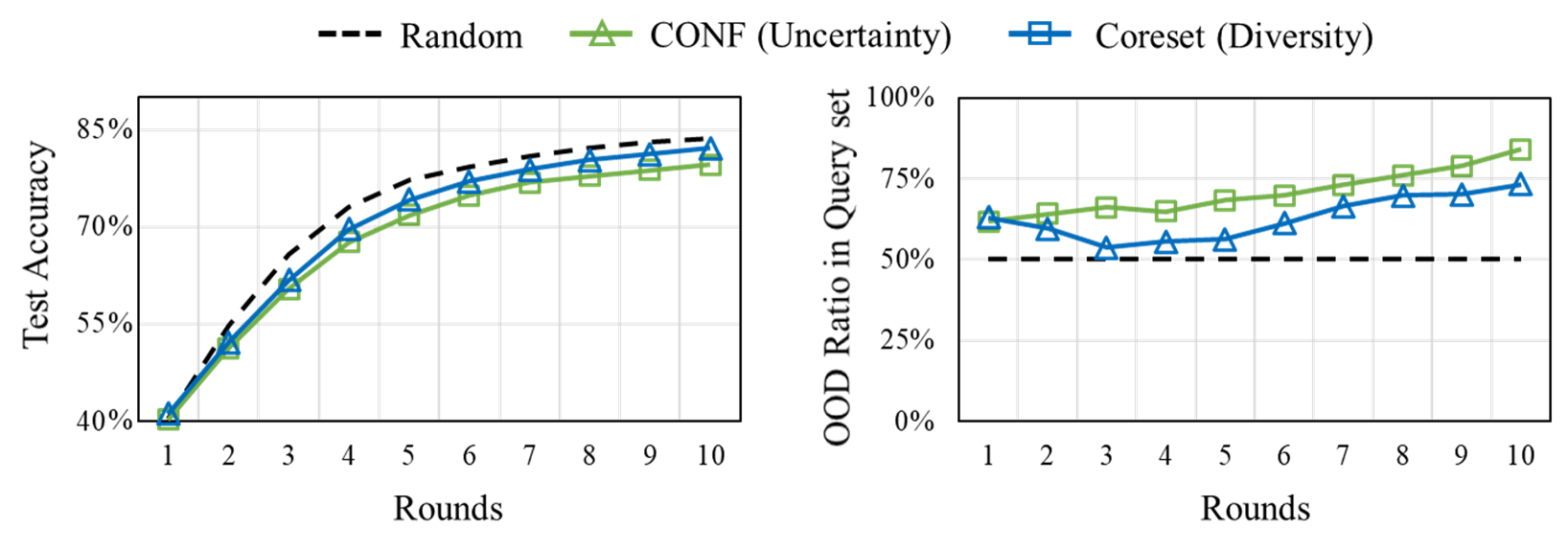

- OOD examples are uncertain in prediction & diverse in representation space

- They are therefore likely to be queried by standard AL algorithms, e.g., uncertainty- and diversity-based ones

- Since OOD examples are useless for the target task, querying them wastes the labeling budget and significantly degrades AL performance
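The effect described above can be illustrated with a minimal sketch of entropy-based uncertainty sampling. The softmax outputs, example names, and the helper functions `entropy` and `query_most_uncertain` are all hypothetical and are not part of this repository; they only show why near-uniform predictions on OOD inputs tend to rank at the top of an uncertainty-based query.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of a predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def query_most_uncertain(pool, k):
    """Return the names of the k pool examples with the highest predictive entropy."""
    ranked = sorted(pool, key=lambda ex: entropy(ex["probs"]), reverse=True)
    return [ex["name"] for ex in ranked[:k]]


# Hypothetical softmax outputs of a 3-class classifier (assumed values).
# OOD inputs belong to no target class, so their predictions are close to uniform.
pool = [
    {"name": "in_easy", "probs": [0.98, 0.01, 0.01], "is_ood": False},
    {"name": "in_hard", "probs": [0.55, 0.40, 0.05], "is_ood": False},
    {"name": "ood_a", "probs": [0.34, 0.33, 0.33], "is_ood": True},
    {"name": "ood_b", "probs": [0.40, 0.30, 0.30], "is_ood": True},
]

selected = query_most_uncertain(pool, k=2)
print(selected)
```

With these assumed values, both queried examples are OOD, so the whole labeling budget of this toy round is spent on examples that cannot improve the target classifier.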

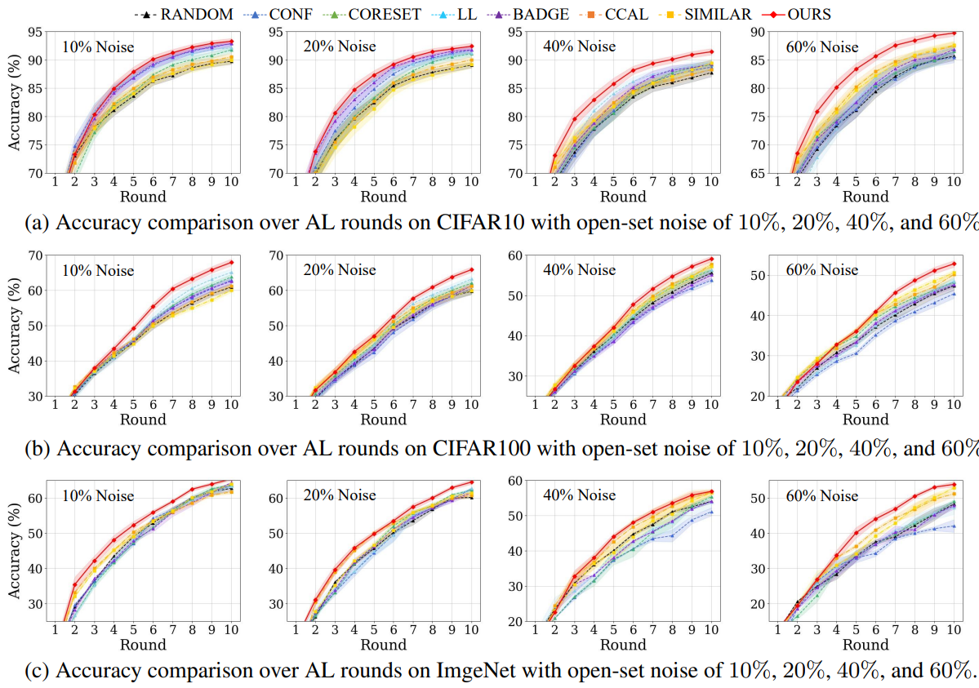

- The figures above show AL performance on CIFAR10 mixed with SVHN at an open-set noise ratio of 50% (1:1 mixing)

- With such a high noise ratio, uncertainty- and diversity-based algorithms query many OOD examples and thus perform even worse than random selection

- Recently, two open-set AL algorithms, SIMILAR and CCAL, have been proposed to increase the in-distribution purity of the query set

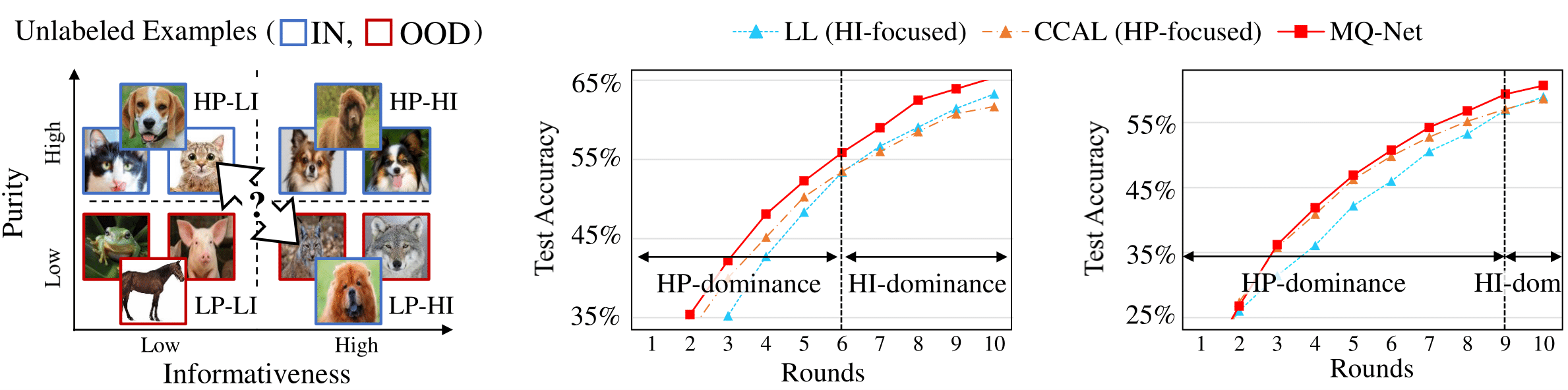

- However, "Should we focus on the purity throughout the entire AL period?" remains a question

- Increasing purity ↔ Losing Informativeness --> Trade-off!

- Which is more helpful? Fewer but more informative examples vs More but less informative examples

- Optimal trade-off may change according to the AL rounds & noise ratios!
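The trade-off in the list above can be made concrete with a small sketch. All numbers, the `info` field, and the helpers `purity` and `useful_informativeness` are assumptions made for illustration; the sketch only shows that a purer query set is not automatically the more helpful one.

```python
def purity(query_set):
    """Fraction of queried examples that are in-distribution (IN)."""
    return sum(1 for ex in query_set if not ex["is_ood"]) / len(query_set)


def useful_informativeness(query_set):
    """Sum of informativeness over IN examples only.

    OOD examples are skipped because their labels do not help the target task.
    """
    return sum(ex["info"] for ex in query_set if not ex["is_ood"])


# Hypothetical query sets of size 4 with assumed informativeness values.
# Purity-focused: every example is IN, but each one is easy (low informativeness).
purity_focused = [{"is_ood": False, "info": 0.2} for _ in range(4)]

# Informativeness-focused: half the budget is lost to OOD examples,
# but the two IN examples are highly informative.
info_focused = [
    {"is_ood": False, "info": 0.9},
    {"is_ood": False, "info": 0.9},
    {"is_ood": True, "info": 0.9},
    {"is_ood": True, "info": 0.9},
]

for name, qs in [("purity-focused", purity_focused), ("info-focused", info_focused)]:
    print(name, purity(qs), round(useful_informativeness(qs), 2))
```

In this toy setting the less pure query set carries more useful information. With different assumed values (for example, a higher noise ratio or less informative IN examples) the comparison flips, which is why a fixed preference for either side is unlikely to be optimal across rounds.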

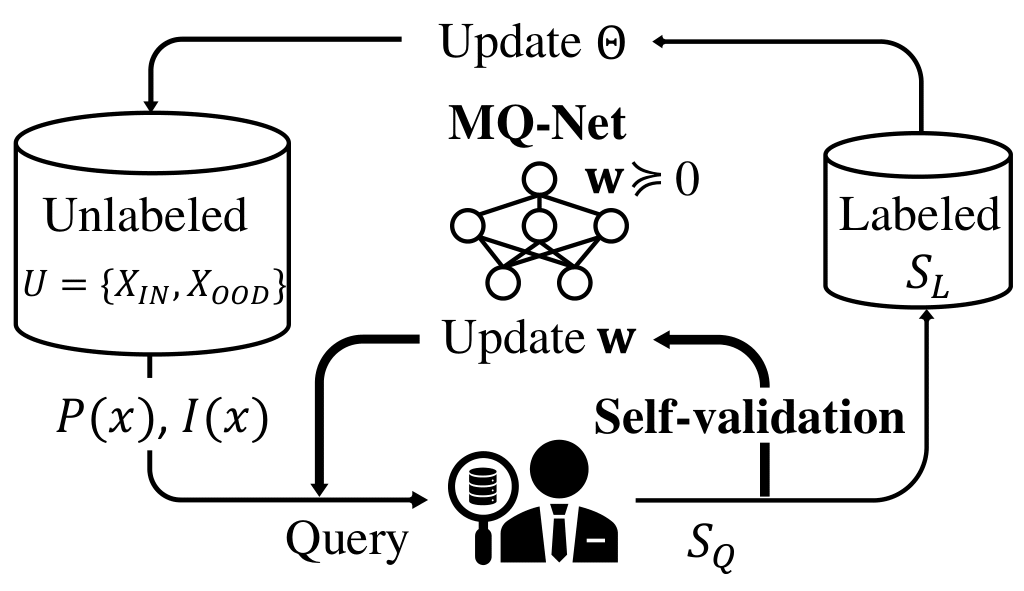

- Goal: To keep finding the best balance between purity and informativeness

- How: Learn a meta query score function
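As a rough illustration of what a meta query score function could look like, the sketch below combines a purity score and an informativeness score through a tiny two-input network. This is not the code in this repository: the network shape, the fixed weights, the non-negativity constraint, and the names `meta_score` and `select_queries` are assumptions chosen for illustration, and in practice the weights would be learned rather than set by hand.

```python
def relu(x):
    """Rectified linear unit."""
    return max(0.0, x)


def meta_score(purity, info, w_hidden, w_out):
    """Score an example from its (purity, informativeness) pair.

    Assumption: all weights are non-negative, so the score never decreases
    when either input increases.
    """
    hidden = [relu(wp * purity + wi * info) for wp, wi in w_hidden]
    return sum(w * h for w, h in zip(w_out, hidden))


def select_queries(candidates, k, w_hidden, w_out):
    """Return the names of the k candidates with the highest meta score."""
    ranked = sorted(
        candidates,
        key=lambda c: meta_score(c["purity"], c["info"], w_hidden, w_out),
        reverse=True,
    )
    return [c["name"] for c in ranked[:k]]


# Hypothetical, hand-set weights standing in for learned ones.
w_hidden = [(1.0, 0.5), (0.2, 1.5)]
w_out = [1.0, 1.0]

# Hypothetical per-example scores in [0, 1].
candidates = [
    {"name": "a", "purity": 0.9, "info": 0.2},
    {"name": "b", "purity": 0.3, "info": 0.9},
    {"name": "c", "purity": 0.8, "info": 0.8},
    {"name": "d", "purity": 0.1, "info": 0.1},
]

top = select_queries(candidates, k=2, w_hidden=w_hidden, w_out=w_out)
print(top)
```

Changing the weights shifts how strongly the ranking favors purity over informativeness, which is the balance the goal above asks to keep re-adjusting.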

@article{park2022meta,

title={Meta-Query-Net: Resolving Purity-Informativeness Dilemma in Open-set Active Learning},

author={Park, Dongmin and Shin, Yooju and Bang, Jihwan and Lee, Youngjun and Song, Hwanjun and Lee, Jae-Gil},

journal={NeurIPS 2022},

year={2022}

}

- Coreset [code] : Active Learning for Convolutional Neural Networks: A Core-Set Approach, Sener et al. 2018 ICLR

- LL [code] : Learning Loss for Active Learning, Yoo et al. 2019 CVPR

- BADGE [code] : Deep Batch Active Learning by Diverse, Uncertain Gradient Lower Bounds, Ash et al. 2020 ICLR

- CCAL [code] : Contrastive Coding for Active Learning under Class Distribution Mismatch, Du et al. 2021 ICCV

- SIMILAR [code] : SIMILAR: Submodular Information Measures based Active Learning in Realistic Scenarios, Kothawade et al. 2021 NeurIPS