This repo is the official implementation of "Divide to Adapt: Mitigating Confirmation Bias for Domain Adaptation of Black-Box Predictors". Our method is called BETA.

- Install

Install `pytorch` and `torchvision` (we use `pytorch==1.9.1` and `torchvision==0.10.1`), then install the remaining dependencies:

    pip install -r requirements.txt

Please download and organize the datasets in this structure:

    BETA
    ├── data
    │   ├── office_home
    │   │   ├── Art
    │   │   ├── Clipart
    │   │   ├── Product
    │   │   ├── Real World
    │   ├── office31
    │   │   ├── amazon
    │   │   ├── dslr
    │   │   ├── webcam
    │   ├── visda17
    │   │   ├── train
    │   │   ├── validation
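
Before generating the info files, it can help to confirm that the folders are where the scripts expect them. The snippet below is a minimal sketch and not part of this repo: the helper name `find_missing_dirs` and the `EXPECTED_LAYOUT` table are hypothetical, and it assumes the layout is exactly the tree shown above with `data` located in the repository root.

```python
from pathlib import Path

# Expected sub-folders per dataset, copied from the directory tree above.
EXPECTED_LAYOUT = {
    "office_home": ["Art", "Clipart", "Product", "Real World"],
    "office31": ["amazon", "dslr", "webcam"],
    "visda17": ["train", "validation"],
}


def find_missing_dirs(data_root):
    """Return the expected dataset folders that do not exist under data_root."""
    root = Path(data_root)
    missing = []
    for dataset, domains in EXPECTED_LAYOUT.items():
        for domain in domains:
            folder = root / dataset / domain
            if not folder.is_dir():
                missing.append(folder)
    return missing


if __name__ == "__main__":
    # Assumes the script is run from the repository root (the BETA folder).
    for folder in find_missing_dirs("data"):
        print(f"missing: {folder}")
```

If nothing is printed, every folder from the tree was found. If you only use one dataset, the entries reported for the others can be ignored.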

Then generate info files with the following commands:

    python dev/generate_infos.py --ds office_home
    python dev/generate_infos.py --ds office31
    python dev/generate_infos.py --ds visda17

Training on Office-Home (example: source domain A, target domain C):

    # train black-box source model on domain A
    python train_src_v1.py configs/office_home/src_A/train_src_A.py

    # adapt with BETA, from A to C
    python train_BETA.py configs/office_home/src_A/BETA_C.py

    # finetune on C
    python finetune.py configs/office_home/src_A/finetune_C.py

Training on Office-31 (example: source domain a, target domain d):

    # train black-box source model on domain a
    python train_src_v1.py configs/office31/src_a/train_src_a.py

    # adapt with BETA, from a to d
    python train_BETA.py configs/office31/src_a/BETA_d.py

    # finetune on d
    python finetune.py configs/office31/src_a/finetune_d.py
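
The Office-Home and Office-31 commands above follow the same three-step pattern, with config paths that differ only in the dataset folder and the domain letters. The helper below is a rough sketch of that pattern, not a script shipped with this repo: `pipeline_commands` is a hypothetical name, and it assumes that configs for other source/target pairs follow the same naming as the A-to-C and a-to-d examples. Check the `configs` folder before relying on it.

```python
def pipeline_commands(dataset, source, target):
    """Build the three commands: source training, BETA adaptation, fine-tuning.

    The path pattern is inferred from the README examples; it is an
    assumption that other domain pairs use the same file names.
    """
    cfg_dir = f"configs/{dataset}/src_{source}"
    return [
        ["python", "train_src_v1.py", f"{cfg_dir}/train_src_{source}.py"],
        ["python", "train_BETA.py", f"{cfg_dir}/BETA_{target}.py"],
        ["python", "finetune.py", f"{cfg_dir}/finetune_{target}.py"],
    ]


if __name__ == "__main__":
    # Print the commands for the Office-Home A -> C example from this README.
    for cmd in pipeline_commands("office_home", "A", "C"):
        print(" ".join(cmd))
```

Each command is returned as an argument list, so it can be printed for inspection or passed to `subprocess.run` one step at a time.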

Training on VisDA-2017:

    # train black-box source model
    python train_src_v2.py configs/visda17/train_src.py

    # adapt with BETA
    python train_BETA.py configs/visda17/BETA.py

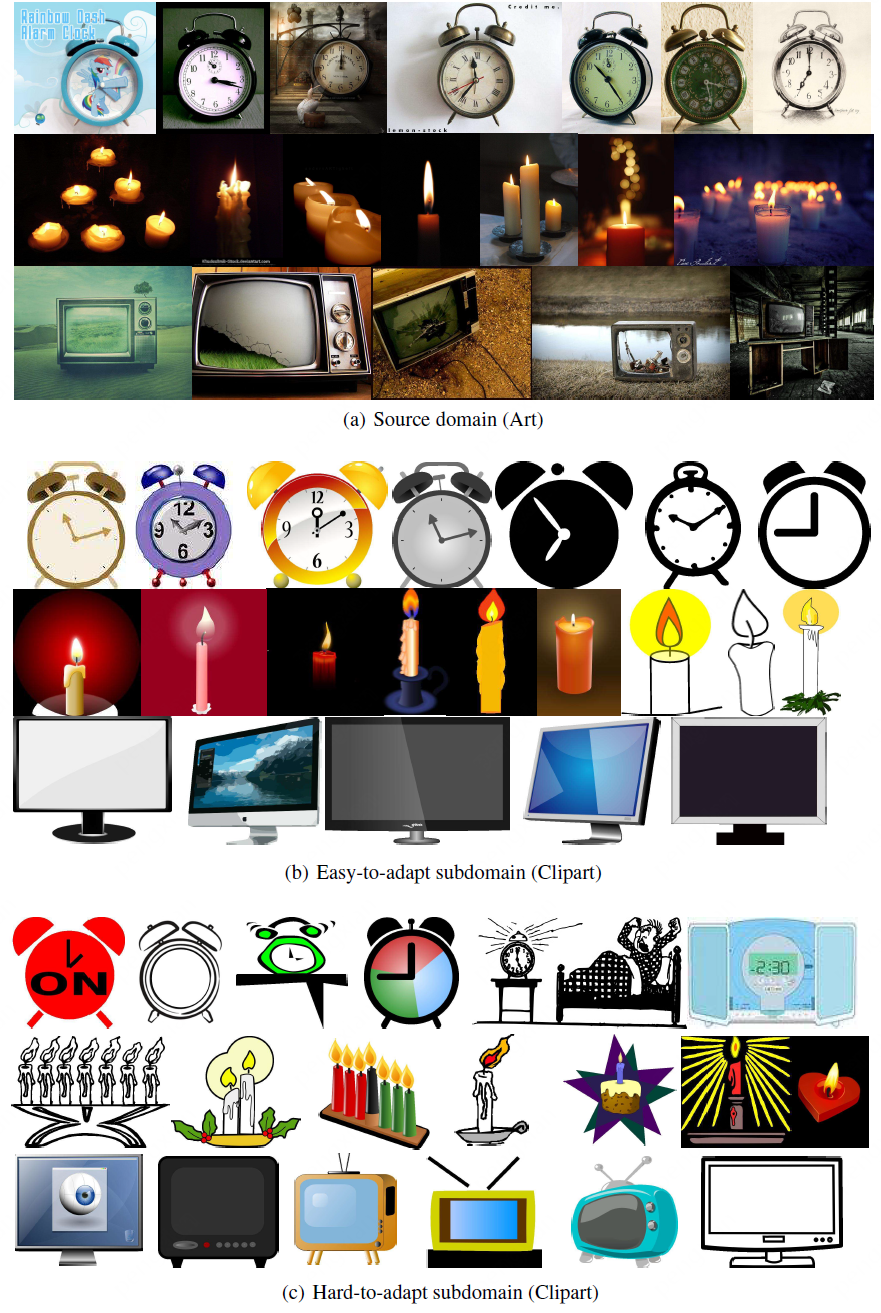

Here we show an example of the easy-hard target domain division (Office-Home: Art -> Clipart).
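
To give a feel for what dividing a target domain into easy and hard subsets means, here is a toy sketch. It is an illustrative stand-in and not the division criterion implemented in `train_BETA.py`: it simply thresholds the top class probability returned by a black-box predictor. The function name `split_easy_hard` and the `threshold` value are hypothetical; see the paper for the actual procedure.

```python
def split_easy_hard(probabilities, threshold=0.9):
    """Split sample indices by the top predicted probability.

    `probabilities` is a list of per-sample class-probability lists, such as
    a black-box predictor might return. `threshold` is a hypothetical
    cut-off chosen only for this illustration.
    """
    easy, hard = [], []
    for index, probs in enumerate(probabilities):
        if max(probs) >= threshold:
            easy.append(index)  # confident prediction: treat as "easy"
        else:
            hard.append(index)  # uncertain prediction: treat as "hard"
    return easy, hard


if __name__ == "__main__":
    # Three toy target samples with three classes each.
    toy = [[0.97, 0.02, 0.01], [0.40, 0.35, 0.25], [0.05, 0.93, 0.02]]
    easy, hard = split_easy_hard(toy)
    print("easy:", easy)
    print("hard:", hard)
```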