| Branch | Status |
|---|---|
| master | |
| release-0.2 | |
| release-0.1 | |

Seldon Core is an open source platform for deploying machine learning models on Kubernetes.

- Goals

- Quick Start

- Example Components

- Integrations

- Install

- Deployment guide

- Reference

- Article/Blogs/Videos

- Community

- Developer

- Latest Seldon Images

- Usage Reporting

Machine learning deployment has many challenges, and Seldon Core intends to help with them. Its high-level goals are:

- Allow data scientists to create models using any machine learning toolkit or programming language. We plan to initially cover the tools/languages below:

  - Python based models including

    - Tensorflow models

    - Sklearn models

  - Spark models

  - H2O models

  - R models

- Expose machine learning models via REST and gRPC automatically when deployed for easy integration into business apps that need predictions.

- Allow complex runtime inference graphs to be deployed as microservices. These graphs can be composed of:

  - Models - runtime inference executable for machine learning models

  - Routers - route API requests to sub-graphs. Examples: AB Tests, Multi-Armed Bandits.

  - Combiners - combine the responses from sub-graphs. Example: ensembles of models.

  - Transformers - transform requests or responses. Example: transform feature requests.

- Handle full lifecycle management of the deployed model:

  - Updating the runtime graph with no downtime

  - Scaling

  - Monitoring

  - Security
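The four graph component types listed above can be illustrated with a toy, in-process sketch. This is not Seldon Core code: in a real deployment each component runs as its own microservice, and every function name here (`scale_transformer`, `model_a`, `model_b`, `average_combiner`, `ab_router`) is hypothetical and chosen only for illustration.

```python
from statistics import mean
from typing import Callable, List, Sequence

Vector = List[float]


def scale_transformer(x: Vector) -> Vector:
    """Transformer: rescale the request features before they reach a model."""
    return [v / 10.0 for v in x]


def model_a(x: Vector) -> float:
    """Model: stand-in for a trained predictor (sums the features)."""
    return sum(x)


def model_b(x: Vector) -> float:
    """Model: a second stand-in predictor (takes the largest feature)."""
    return max(x)


def average_combiner(outputs: Sequence[float]) -> float:
    """Combiner: merge the responses of several sub-graphs, here by averaging."""
    return mean(outputs)


def ab_router(request_id: int, branches: Sequence[Callable[[Vector], float]]):
    """Router: pick one sub-graph per request, here by alternating on the request id."""
    return branches[request_id % len(branches)]


def ensemble_graph(x: Vector) -> float:
    """Graph: transformer -> combiner over two models (an ensemble)."""
    features = scale_transformer(x)
    return average_combiner([model_a(features), model_b(features)])


def ab_test_graph(request_id: int, x: Vector) -> float:
    """Graph: transformer -> router -> one of two models (an AB test)."""
    features = scale_transformer(x)
    chosen = ab_router(request_id, [model_a, model_b])
    return chosen(features)


if __name__ == "__main__":
    print(ensemble_graph([10.0, 20.0]))
    print(ab_test_graph(0, [10.0, 20.0]))
    print(ab_test_graph(1, [10.0, 20.0]))
```

The two graphs share the same transformer and models and differ only in whether a combiner or a router sits above the models, which is the kind of composition the goals above describe.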

Seldon Core requires a Kubernetes cluster. Kubernetes can be deployed into many environments, both in the cloud and on-premise.

Read the overview of using seldon-core.

- Jupyter notebooks showing examples:

Seldon-core allows various types of components to be built and plugged into the runtime prediction graph. These include models, routers, transformers and combiners. Some example components that are available as part of the project are:

- Models: examples that illustrate simple machine learning models to help you build your own integrations

- Routers

- Transformers

  - Mahalanobis distance outlier detection. Example usage can be found in the Advanced graphs notebook

- Kubeflow

  - Seldon-core can be installed as part of the Kubeflow project. A detailed end-to-end example provides a complete workflow for training various models and deploying them using seldon-core.

- IBM's Fabric for Deep Learning

- Istio and Seldon

- NVIDIA TensorRT and DL Inference Server

- Tensorflow Serving

- Intel OpenVINO

  - A Helm chart for easy integration and an example notebook using OpenVINO to serve an ImageNet model within Seldon Core.

Follow the install guide for details on ways to install seldon onto your Kubernetes cluster.

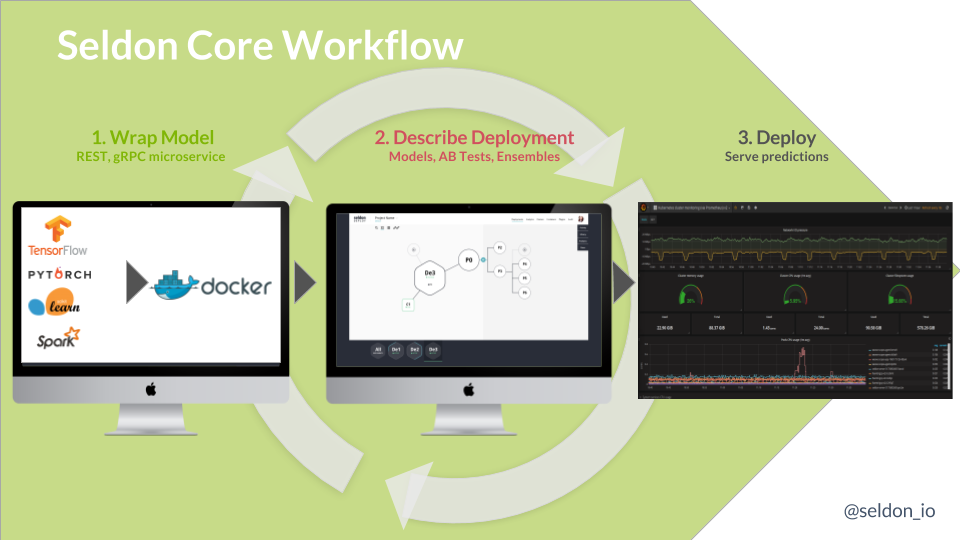

Three steps:

- Wrap your runtime prediction model.

  - We provide easy-to-use wrappers for Python, R, Java and NodeJS.

  - We have tools to test your wrapped components.

- Define your runtime inference graph in a Seldon deployment custom resource.

- Deploy the graph.
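As a rough illustration of the first two steps, the sketch below shows a model class of the kind the Python wrapper is understood to expect (a class exposing `predict(X, features_names)`), followed by a minimal custom resource built as a Python dictionary. The class name `MeanClassifier`, the image name `example/mean-classifier:0.1`, and the exact resource field names are assumptions based on the `v1alpha2` resource layout; check them against the wrapper documentation and the Seldon Deployment Custom Resource reference before relying on them.

```python
import json


class MeanClassifier:
    """Hypothetical model: predicts the mean of each row of features.

    Assumption: the Python wrapper calls predict(X, features_names) with a
    2-D array-like X and expects one prediction row per input row.
    """

    def predict(self, X, features_names):
        return [[sum(row) / len(row)] for row in X]


# Step 2: a minimal inference graph with a single MODEL node.
# Field names are assumed from the v1alpha2 resource layout.
deployment = {
    "apiVersion": "machinelearning.seldon.io/v1alpha2",
    "kind": "SeldonDeployment",
    "metadata": {"name": "mean-classifier"},
    "spec": {
        "name": "mean-classifier",
        "predictors": [
            {
                "name": "default",
                "replicas": 1,
                "componentSpecs": [
                    {
                        "spec": {
                            "containers": [
                                {
                                    # Hypothetical image holding the wrapped model.
                                    "name": "classifier",
                                    "image": "example/mean-classifier:0.1",
                                }
                            ]
                        }
                    }
                ],
                # The graph node name refers to the container of the same name.
                "graph": {
                    "name": "classifier",
                    "type": "MODEL",
                    "endpoint": {"type": "REST"},
                    "children": [],
                },
            }
        ],
    },
}

if __name__ == "__main__":
    print(MeanClassifier().predict([[1.0, 3.0], [2.0, 2.0]], ["a", "b"]))
    print(json.dumps(deployment, indent=2))
```

For the third step, the printed JSON could be saved to a file and applied to the cluster with `kubectl apply -f`, assuming Seldon Core is already installed there.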

- Advanced graphs showing the various types of runtime prediction graphs that can be built.

- Handling large gRPC messages. Showing how you can add annotations to increase the gRPC max message size.

- Handling REST timeouts. Showing how you can add annotations to set the REST (and gRPC) timeouts.

- Prediction API

- Seldon Deployment Custom Resource

- Analytics

- Kubecon Europe 2018 - Serving Machine Learning Models at Scale with Kubeflow and Seldon

- Polyaxon, Argo and Seldon for model training, package and deployment in Kubernetes

- Manage ML Deployments Like A Boss: Deploy Your First AB Test With Sklearn, Kubernetes and Seldon-core using Only Your Web Browser & Google Cloud

- Using PyTorch 1.0 and ONNX with Fabric for Deep Learning

- AI on Kubernetes - O'Reilly Tutorial

- Scalable Data Science - The State of DevOps/MLOps in 2018

- Istio Weekly Community Meeting - Seldon-core with Istio

- Openshift Commons ML SIG - Openshift S2I Helping ML Deployment with Seldon-Core

- Overview of Openshift source-to-image use in Seldon-Core

- IBM Framework for Deep Learning and Seldon-Core

- CartPole game by Reinforcement Learning, a journey from training to inference

- Annotation based configuration.

- Notes for running in production.

- Helm configuration

- ksonnet configuration
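The annotation-based configuration mentioned above, including the gRPC message size and timeout settings, can be sketched as a small helper that merges annotations into a deployment resource. The annotation keys, their placement under `spec.annotations`, and the values used here are assumptions for illustration; the helper `with_annotations` is hypothetical and not part of Seldon Core. Consult the annotation documentation for the supported keys and units.

```python
import copy
import json

# Assumed annotation keys; verify them against the annotation documentation.
GRPC_MAX_MESSAGE_SIZE = "seldon.io/grpc-max-message-size"
REST_READ_TIMEOUT = "seldon.io/rest-read-timeout"
GRPC_READ_TIMEOUT = "seldon.io/grpc-read-timeout"


def with_annotations(deployment, annotations):
    """Return a copy of the deployment with annotations merged into spec.annotations.

    Values are converted to strings, since Kubernetes annotation values are strings.
    The input dictionary is left unmodified.
    """
    updated = copy.deepcopy(deployment)
    spec = updated.setdefault("spec", {})
    merged = dict(spec.get("annotations", {}))
    merged.update({key: str(value) for key, value in annotations.items()})
    spec["annotations"] = merged
    return updated


# A stripped-down resource, just enough to demonstrate the merge.
base = {
    "kind": "SeldonDeployment",
    "metadata": {"name": "example"},
    "spec": {"name": "example"},
}

tuned = with_annotations(
    base,
    {
        GRPC_MAX_MESSAGE_SIZE: 10_000_000,  # illustrative value
        REST_READ_TIMEOUT: 100_000,         # illustrative value
        GRPC_READ_TIMEOUT: 100_000,         # illustrative value
    },
)

if __name__ == "__main__":
    print(json.dumps(tuned["spec"]["annotations"], indent=2))
```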

| Description | Image URL | Stable Version | Development |
|---|---|---|---|
| Seldon Operator | seldonio/cluster-manager | 0.2.3 | 0.2.4-SNAPSHOT |
| Seldon Service Orchestrator | seldonio/engine | 0.2.3 | 0.2.4-SNAPSHOT |
| Seldon API Gateway | seldonio/apife | 0.2.3 | 0.2.4-SNAPSHOT |
| Seldon Python 3 Wrapper for S2I | seldonio/seldon-core-s2i-python3 | 0.2 | 0.3-SNAPSHOT |
| Seldon Python 2 Wrapper for S2I | seldonio/seldon-core-s2i-python2 | 0.2 | 0.3-SNAPSHOT |
| Seldon Python ONNX Wrapper for S2I | seldonio/seldon-core-s2i-python3-ngraph-onnx | 0.1 | |
| Seldon Core Python Wrapper | seldonio/core-python-wrapper | 0.7 | |
| Seldon Java Build Wrapper for S2I | seldonio/seldon-core-s2i-java-build | 0.1 | |
| Seldon Java Runtime Wrapper for S2I | seldonio/seldon-core-s2i-java-runtime | 0.1 | |
| Seldon R Wrapper for S2I | seldonio/seldon-core-s2i-r | 0.1 | |
| Seldon NodeJS Wrapper for S2I | seldonio/seldon-core-s2i-nodejs | 0.1 | 0.2-SNAPSHOT |
| Seldon Tensorflow Serving proxy | seldonio/tfserving-proxy | 0.1 | |
| Seldon NVIDIA inference server proxy | seldonio/nvidia-inference-server-proxy | 0.1 | |

| Description | Package | Version |
|---|---|---|
| Seldon Core Wrapper | seldon-core-wrapper | 0.1.2 |
| Seldon Core JPMML | seldon-core-jpmml | 0.0.1 |

Tools that collect anonymous usage data to help guide the development of Seldon Core.