LangCorn

LangCorn is an API server that lets you serve LangChain models and pipelines with minimal setup. It is built on FastAPI, which handles request processing and generates the interactive API docs.

Features

- Easy deployment of LangChain models and pipelines

- Ready-to-use static token authentication

- High-performance FastAPI framework for serving requests

- Scalable and robust solution for language processing applications

- Supports custom pipelines and processing

- Well-documented RESTful API endpoints

- Asynchronous processing for faster response times

📦 Installation

To get started with LangCorn, simply install the package using pip:

```shell
pip install langcorn
```

⛓️ Quick Start

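Example LLM chain, `examples/ex1.py`:

```python
import os

from langchain import LLMMathChain, OpenAI

# Use OPENAI_API_KEY from the environment; the fallback is a placeholder to replace
os.environ["OPENAI_API_KEY"] = os.environ.get("OPENAI_API_KEY", "sk-********")

# temperature=0 keeps the model's output as deterministic as possible
llm = OpenAI(temperature=0)

# LangCorn loads this module-level name via the spec "examples.ex1:chain"
chain = LLMMathChain(llm=llm, verbose=True)
```

Run your LangCorn FastAPI server: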

```shell
langcorn server examples.ex1:chain
```

```
[INFO] 2023-04-18 14:34:56.32 | api:create_service:75 | Creating service
[INFO] 2023-04-18 14:34:57.51 | api:create_service:85 | lang_app='examples.ex1:chain':LLMChain(['product'])
[INFO] 2023-04-18 14:34:57.51 | api:create_service:104 | Serving
[INFO] 2023-04-18 14:34:57.51 | api:create_service:106 | Endpoint: /docs
[INFO] 2023-04-18 14:34:57.51 | api:create_service:106 | Endpoint: /examples.ex1/run
INFO: Started server process [27843]
INFO: Waiting for application startup.
INFO: Application startup complete.
INFO: Uvicorn running on http://127.0.0.1:8718 (Press CTRL+C to quit)
```

or, as an alternative:

```shell
python -m langcorn server examples.ex1:chain
```
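Each argument has the form `module.path:attribute`, the same import-string convention uvicorn uses for `main:app`, and the startup log shows each chain exposed at `/<module.path>/run`. The sketch below illustrates how such a spec can be resolved and mapped to a path. It is an assumption built on `importlib`, not LangCorn's actual loader, and the helpers `split_spec`, `load_object` and `endpoint_for` are hypothetical.

```python
import importlib
from typing import Any


def split_spec(spec: str) -> tuple[str, str]:
    """Split 'module.path:attribute' into (module path, attribute name)."""
    module_path, sep, attr = spec.partition(":")
    if not sep or not module_path or not attr:
        raise ValueError(f"expected 'module.path:attribute', got {spec!r}")
    return module_path, attr


def load_object(spec: str) -> Any:
    """Import the module and return the named attribute (e.g. a chain)."""
    module_path, attr = split_spec(spec)
    module = importlib.import_module(module_path)
    return getattr(module, attr)


def endpoint_for(spec: str) -> str:
    """Derive a path in the style of the startup log: /<module.path>/run."""
    module_path, _ = split_spec(spec)
    return f"/{module_path}/run"


if __name__ == "__main__":
    for spec in ["examples.ex1:chain", "examples.ex2:chain"]:
        print(spec, "->", endpoint_for(spec))
```

Running it prints one line per spec, for example `examples.ex1:chain -> /examples.ex1/run`, without importing the example modules.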

Run multiple chains

```shell
python -m langcorn server examples.ex1:chain examples.ex2:chain
```

```
[INFO] 2023-04-18 14:35:21.11 | api:create_service:75 | Creating service
[INFO] 2023-04-18 14:35:21.82 | api:create_service:85 | lang_app='examples.ex1:chain':LLMChain(['product'])
[INFO] 2023-04-18 14:35:21.82 | api:create_service:85 | lang_app='examples.ex2:chain':SimpleSequentialChain(['input'])
[INFO] 2023-04-18 14:35:21.82 | api:create_service:104 | Serving
[INFO] 2023-04-18 14:35:21.82 | api:create_service:106 | Endpoint: /docs
[INFO] 2023-04-18 14:35:21.82 | api:create_service:106 | Endpoint: /examples.ex1/run
[INFO] 2023-04-18 14:35:21.82 | api:create_service:106 | Endpoint: /examples.ex2/run
INFO: Started server process [27863]
INFO: Waiting for application startup.
INFO: Application startup complete.
INFO: Uvicorn running on http://127.0.0.1:8718 (Press CTRL+C to quit)
```

Import the necessary packages and create your FastAPI app, for example in `main.py`:

```python
from fastapi import FastAPI
from langcorn import create_service

app: FastAPI = create_service("examples.ex1:chain")
```

Multiple chains

```python
from fastapi import FastAPI
from langcorn import create_service

app: FastAPI = create_service("examples.ex2:chain", "examples.ex1:chain")
```

Run your LangCorn FastAPI server:

```shell
uvicorn main:app --host 0.0.0.0 --port 8000
```

Now your LangChain models and pipelines are accessible via the LangCorn API server.
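To call a chain once the server is up, send an HTTP POST to its `/run` endpoint. The standard-library sketch below rests on assumptions worth checking against the generated `/docs` page: that the request body is a JSON object keyed by the chain's input names (the names in brackets in the startup log, such as `product`), that the response is JSON, and, when the server was started with `auth_token` (see Auth), that the token is sent as a bearer token in the `Authorization` header. `build_run_request` and `call_chain` are hypothetical helper names.

```python
import json
import urllib.request
from typing import Any, Optional


def build_run_request(
    base_url: str,
    endpoint: str,
    inputs: dict[str, Any],
    auth_token: Optional[str] = None,
) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request for a chain endpoint."""
    headers = {"Content-Type": "application/json"}
    if auth_token:
        # Assumption: the static token is checked as an HTTP bearer token.
        headers["Authorization"] = f"Bearer {auth_token}"
    return urllib.request.Request(
        url=base_url.rstrip("/") + endpoint,
        data=json.dumps(inputs).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def call_chain(request: urllib.request.Request, timeout: float = 60.0) -> Any:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    request = build_run_request(
        "http://127.0.0.1:8000",
        "/examples.ex1/run",
        {"product": "colorful socks"},  # hypothetical input value
    )
    print(request.get_method(), request.full_url)
    # With the server running, send it with: print(call_chain(request))
```

Building the request and sending it are kept separate so the payload and headers can be inspected before any network call is made.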

Docs

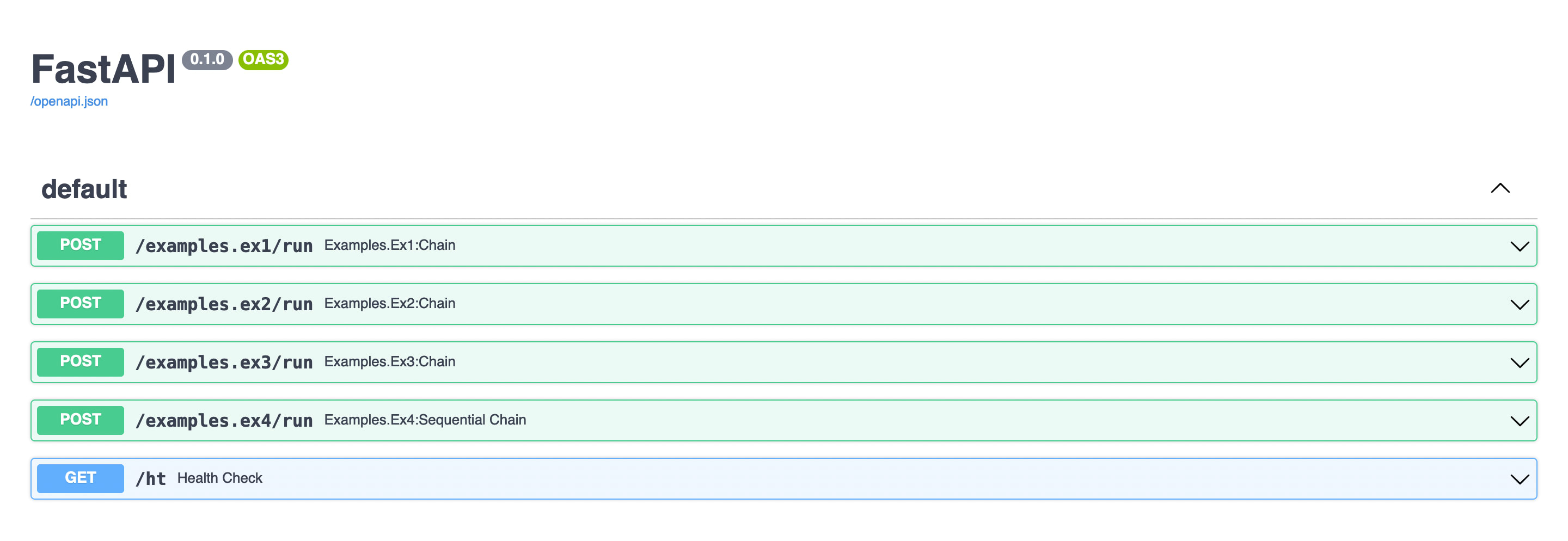

Automatically generated FastAPI docs, served at `/docs`
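Besides the interactive `/docs` page, FastAPI by default serves the underlying OpenAPI schema as JSON at `/openapi.json`, so the chain endpoints can also be discovered programmatically. A minimal sketch, assuming the default schema URL has not been changed and that chain routes end in `/run` as in the startup log; `fetch_schema` and `list_run_endpoints` are hypothetical helpers.

```python
import json
import urllib.request
from typing import Any


def fetch_schema(base_url: str, timeout: float = 10.0) -> dict[str, Any]:
    """Download the OpenAPI schema that FastAPI serves at /openapi.json."""
    url = base_url.rstrip("/") + "/openapi.json"
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


def list_run_endpoints(schema: dict[str, Any]) -> list[str]:
    """Return the sorted schema paths that end in /run."""
    return sorted(path for path in schema.get("paths", {}) if path.endswith("/run"))
```

With a server running on the default CLI port, `list_run_endpoints(fetch_schema("http://127.0.0.1:8718"))` would list the chain paths.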

Auth

It is possible to add static API token authentication by specifying `auth_token`:

```shell
python -m langcorn server examples.ex1:chain examples.ex2:chain --auth_token=api-secret-value
```

or

```python
from fastapi import FastAPI
from langcorn import create_service

app: FastAPI = create_service("examples.ex1:chain", auth_token="api-secret-value")
```

Documentation

For more detailed information on how to use LangCorn, including advanced features and customization options, please refer to the official documentation.

👋 Contributing

Contributions to LangCorn are welcome! If you'd like to contribute, please follow these steps:

- Fork the repository on GitHub

- Create a new branch for your changes

- Commit your changes to the new branch

- Push your changes to the forked repository

- Open a pull request to the main LangCorn repository

Before contributing, please read the contributing guidelines.

License

LangCorn is released under the MIT License.