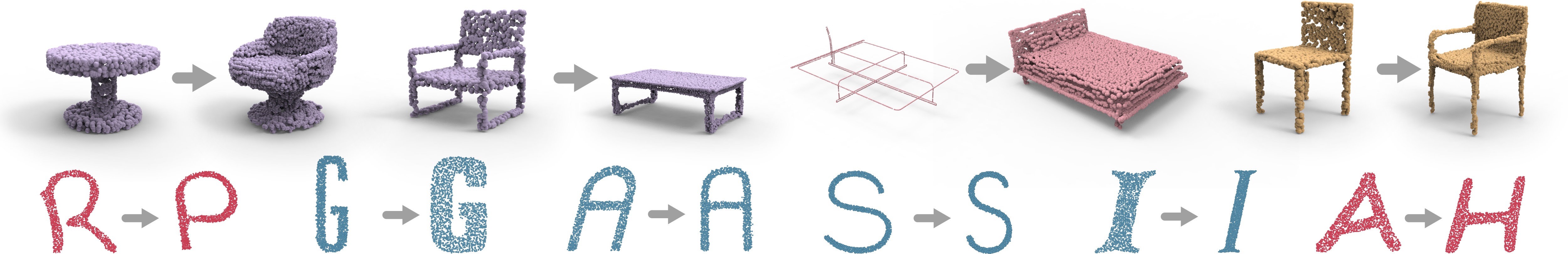

Kangxue Yin, Zhiqin Chen, Hui Huang, Daniel Cohen-Or, Hao Zhang.

[Paper] [Supplementary material]

- Linux (tested under Ubuntu 16.04)

- Python (tested under 3.5.4)

- TensorFlow (tested under 1.12.0-GPU)

- numpy, scipy, etc.

The code is built on top of latent_3d_points and pointnet2. Before running the code, please compile the customized TensorFlow operators in the folders "latent_3d_points/structural_losses" and "pointnet_plusplus/tf_ops".

An example of training and testing the autoencoder:

python -u run_ae.py --mode=train --class_name_A=chair --class_name_B=table --gpu=0

python -u run_ae.py --mode=test --class_name_A=chair --class_name_B=table --gpu=0 --load_pre_trained_ae=1

Training and testing the translator:

python -u run_translator.py --mode=train --class_name_A=chair --class_name_B=table --gpu=0

python -u run_translator.py --mode=test --class_name_A=chair --class_name_B=table --gpu=0 --load_pre_trained_gan=1
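The four commands above can also be chained from a small driver script. The following is only a sketch: the helper names `build_command`, `pipeline`, and `run_pipeline` are hypothetical and not part of this repository, and the only flags used are the ones shown in the commands above. It defaults to a dry run that prints each command without executing it.

```python
import shlex
import subprocess
import sys


def build_command(script, mode, class_a="chair", class_b="table", gpu=0, **extra_flags):
    """Return the argument list for one run of run_ae.py or run_translator.py."""
    cmd = [
        sys.executable, "-u", script,
        "--mode={}".format(mode),
        "--class_name_A={}".format(class_a),
        "--class_name_B={}".format(class_b),
        "--gpu={}".format(gpu),
    ]
    # Extra flags such as load_pre_trained_ae=1 are appended in sorted order.
    for name, value in sorted(extra_flags.items()):
        cmd.append("--{}={}".format(name, value))
    return cmd


def pipeline(class_a="chair", class_b="table", gpu=0):
    """The four commands from this README, in the order they are listed."""
    return [
        build_command("run_ae.py", "train", class_a, class_b, gpu),
        build_command("run_ae.py", "test", class_a, class_b, gpu,
                      load_pre_trained_ae=1),
        build_command("run_translator.py", "train", class_a, class_b, gpu),
        build_command("run_translator.py", "test", class_a, class_b, gpu,
                      load_pre_trained_gan=1),
    ]


def run_pipeline(dry_run=True, **kwargs):
    """Print each command; execute it as well when dry_run is False."""
    for cmd in pipeline(**kwargs):
        print(" ".join(shlex.quote(part) for part in cmd))
        if not dry_run:
            # check=True stops the pipeline at the first failing step.
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run_pipeline(dry_run=True)
```

Calling `run_pipeline(dry_run=False)` from the repository root would execute the steps one after another. Other class pairs can be passed through `class_a` and `class_b`, assuming the corresponding data is available.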

Upsampling:

The upsampling code is not yet part of the release version. I will add it as soon as I get time.

Please note that all quantitative evaluation results reported in the paper were computed on point clouds of 2048 points, i.e., before upsampling.

If you find our work useful in your research, please consider citing:

@article{yin2019logan,
  author = {Kangxue Yin and Zhiqin Chen and Hui Huang and Daniel Cohen-Or and Hao Zhang},
  title = {LOGAN: Unpaired Shape Transform in Latent Overcomplete Space},
  journal = {ACM Transactions on Graphics (Special Issue of SIGGRAPH Asia)},
  volume = {38},
  number = {6},
  pages = {198:1--198:13},
  year = {2019}
}

The code is built on top of latent_3d_points and pointnet2. We thank their authors for these earlier contributions.