This project is an example of how to detect anomalies in financial technical indicators by modeling their expected distribution, and thus indicate when the Relative Strength Index (RSI) is unreliable. RSI is a popular indicator for traders of financial assets, and it can be helpful to understand when it is reliable and when it is not. This example shows how to implement an RSI model using realistic foreign exchange market data, Google Cloud Platform and the Dataflow time-series sample library.

The Dataflow samples library is a fast, flexible library for processing time-series data, and is particularly suited to financial market data because of its large volume. Its ability to generate useful metrics in real time significantly reduces the time and effort needed to build machine learning models and solve problems in the finance domain. This library is used in the metrics generator component of this example, and detailed information on its usage can be found in the docs.
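The pipeline in app/java uses this library from Java, and its API is not reproduced here. As a rough, hypothetical illustration of the kind of windowed aggregation a time-series metrics library performs before computing indicators, the Python sketch below groups raw (timestamp, price) ticks into fixed-width open/high/low/close bars and carries the last close forward into windows that received no ticks. The names `Bar`, `to_bars` and `fill_gaps` are assumptions made for this sketch, not part of the Dataflow samples library.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class Bar:
    open: float
    high: float
    low: float
    close: float


def to_bars(ticks: List[Tuple[float, float]], width: float) -> Dict[int, Bar]:
    """Group (timestamp_seconds, price) ticks into fixed-width OHLC bars keyed by window index."""
    bars: Dict[int, Bar] = {}
    # Stable sort by timestamp, so ticks with equal timestamps keep their arrival order.
    for ts, price in sorted(ticks, key=lambda t: t[0]):
        idx = int(ts // width)
        bar = bars.get(idx)
        if bar is None:
            bars[idx] = Bar(price, price, price, price)
        else:
            bar.high = max(bar.high, price)
            bar.low = min(bar.low, price)
            bar.close = price
    return bars


def fill_gaps(bars: Dict[int, Bar]) -> List[Bar]:
    """Return contiguous bars, carrying the previous close into empty windows (hypothetical gap filling)."""
    out: List[Bar] = []
    if not bars:
        return out
    prev: Optional[Bar] = None
    for idx in range(min(bars), max(bars) + 1):
        if idx in bars:
            prev = bars[idx]
            out.append(prev)
        else:
            # The first index always has a bar, so prev is set before any gap.
            c = prev.close
            out.append(Bar(c, c, c, c))
    return out
```

Carrying the last close forward keeps downstream indicators on a regular time grid even when a currency pair is quiet for a window.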

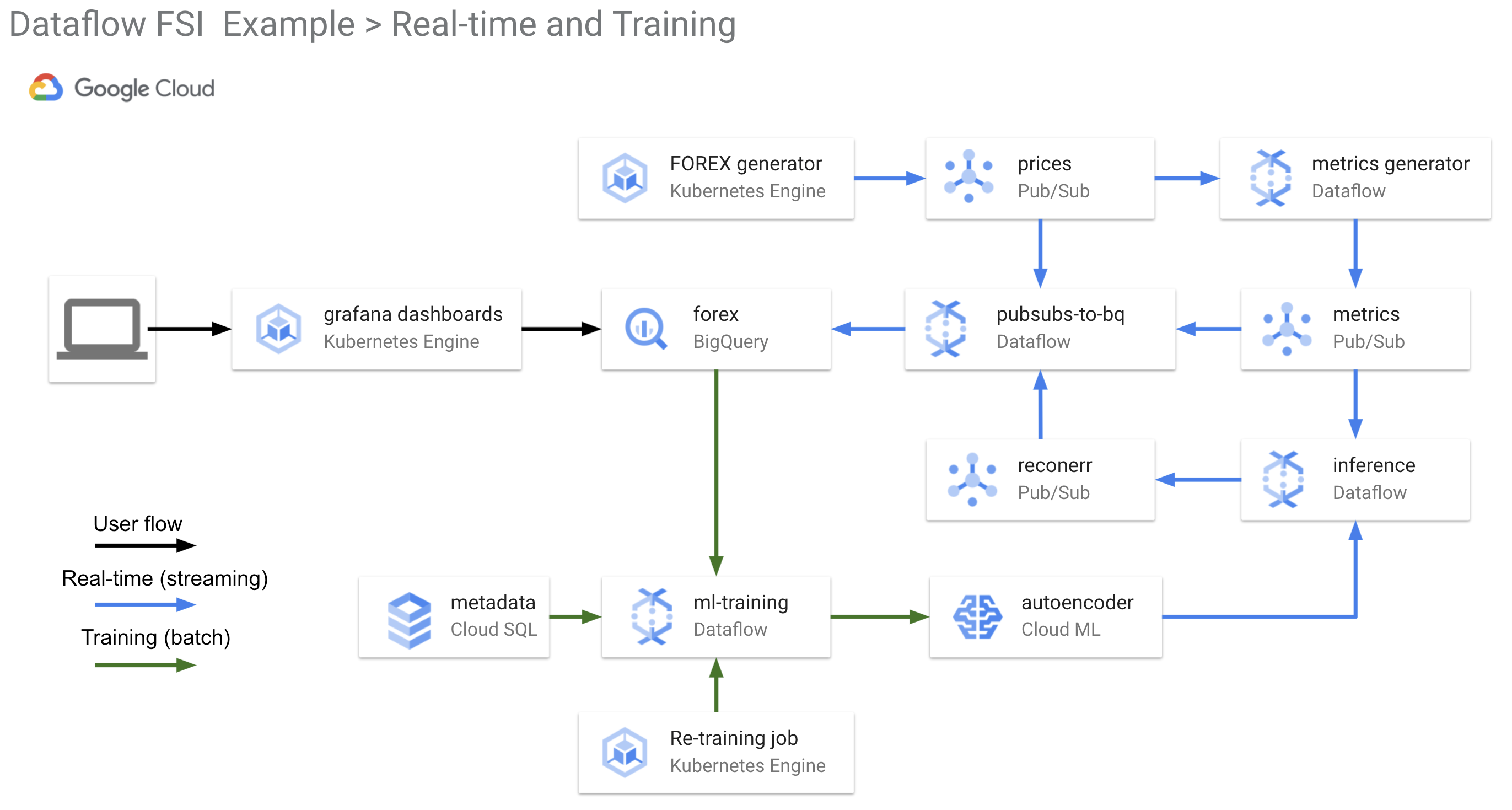

The GCP infrastructure used in this example includes Dataflow, Pub/Sub, BigQuery, Kubernetes Engine, and AI Platform. Further information on components, flows and diagrams can be found in the docs directory.

A great place to start is to run this example in GCP and read the accompanying blog post for a detailed walk-through of the solution.

To install:

- Create a new project in GCP

- Install gcloud and set PROJECT_ID

- Execute this script to create the base infrastructure. This takes about 5-10 minutes:

  ./deploy-infra.sh

- After this has completed, deploy the pipelines and model by executing the run-app script. This takes about 5 minutes:

  ./run-app.sh

- View the Grafana dashboard. The username and password are both your PROJECT_ID, and the dashboard location can be found in the Cloud Console and in the build log output.

You can also run this example using Cloud Shell. To begin, log in to the GCP console and select the “Activate Cloud Shell” icon in the top right of your project dashboard. Then run the following:

- Clone the repo:

  git clone https://github.com/kasna-cloud/dataflow-fsi-example.git && cd dataflow-fsi-example

- Execute this script to create the base infrastructure. This takes about 5-10 minutes:

  ./deploy-infra.sh

- After this has completed, deploy the pipelines and model by executing the run-app script. This takes about 5 minutes:

  ./run-app.sh

- View the Grafana dashboard. The username and password are both your PROJECT_ID, and the dashboard location can be found in the Cloud Console and in the build log output.

The Relative Strength Index, or RSI, is a popular financial technical indicator that measures the magnitude of recent price changes to evaluate whether an asset is currently overbought or oversold.
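For reference, the standard RSI formula defined by J. Welles Wilder is RSI = 100 - 100 / (1 + RS), where RS is the ratio of average gain to average loss over a lookback period, commonly 14 periods with Wilder's smoothing. The sketch below computes it from a list of closing prices and classifies values with the common 70/30 thresholds. It is an illustration only: the metrics pipeline in this repo may compute RSI with different windowing or smoothing, and `wilder_rsi` and `rsi_zone` are hypothetical names.

```python
from typing import List, Optional


def _rsi_from_averages(avg_gain: float, avg_loss: float) -> float:
    # Assumption: with no losses, RSI is 100 (or a neutral 50 if prices were flat).
    if avg_loss == 0.0:
        return 100.0 if avg_gain > 0.0 else 50.0
    rs = avg_gain / avg_loss
    return 100.0 - 100.0 / (1.0 + rs)


def wilder_rsi(closes: List[float], period: int = 14) -> List[Optional[float]]:
    """Return one RSI value per close; None until `period` price changes exist."""
    n = len(closes)
    out: List[Optional[float]] = [None] * n
    if n <= period:
        return out
    # gains[j] / losses[j] are the positive / negative parts of closes[j + 1] - closes[j].
    gains = [max(closes[i] - closes[i - 1], 0.0) for i in range(1, n)]
    losses = [max(closes[i - 1] - closes[i], 0.0) for i in range(1, n)]
    # Seed the averages with a simple mean of the first `period` changes.
    avg_gain = sum(gains[:period]) / period
    avg_loss = sum(losses[:period]) / period
    out[period] = _rsi_from_averages(avg_gain, avg_loss)
    # Wilder's smoothing for each subsequent change.
    for i in range(period + 1, n):
        avg_gain = (avg_gain * (period - 1) + gains[i - 1]) / period
        avg_loss = (avg_loss * (period - 1) + losses[i - 1]) / period
        out[i] = _rsi_from_averages(avg_gain, avg_loss)
    return out


def rsi_zone(value: float) -> str:
    """Classify an RSI value using the common 70/30 thresholds."""
    if value > 70.0:
        return "overbought"
    if value < 30.0:
        return "oversold"
    return "neutral"
```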

To detect when RSI is reliable for a given asset, the modelling approach is as follows. We train an anomaly detection model to learn the expected behaviour of metrics describing the asset when RSI is greater than 70 or less than 30. When an anomaly is detected, the model is indicating that these input metrics are behaving differently from how they usually behave when RSI is greater than 70 or less than 30. In these instances RSI is not reliable, and a trade is not advised. If no anomaly is detected, the metrics are behaving as expected, so you can trust RSI and make a trade.

NOTE: This blog contains general advice only. It was prepared without taking into account your objectives, financial situation, or needs. You should speak to a financial planner before making a financial decision, and you should speak to a licensed ML practitioner before making an ML decision.
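The decision rule in the modelling approach can be sketched in Python as follows. The reconerr topic and table in the component list suggest the model publishes a reconstruction error, but the exact error metric and anomaly threshold are not stated in this README. This sketch assumes mean squared error and a caller-supplied, hypothetical `threshold`; `reconstruction_error` and `rsi_signal` are illustrative names, not functions from this repo.

```python
from typing import Sequence


def reconstruction_error(inputs: Sequence[float], reconstruction: Sequence[float]) -> float:
    """Mean squared error between a metrics vector and the model's reconstruction (assumed metric)."""
    if not inputs or len(inputs) != len(reconstruction):
        raise ValueError("inputs and reconstruction must be non-empty and the same length")
    return sum((a - b) ** 2 for a, b in zip(inputs, reconstruction)) / len(inputs)


def rsi_signal(rsi_value: float, recon_error: float, threshold: float) -> str:
    """Only trust RSI extremes when the metrics are not anomalous."""
    if 30.0 <= rsi_value <= 70.0:
        return "no_signal"   # RSI is not overbought or oversold
    if recon_error > threshold:
        return "unreliable"  # metrics behave unusually: do not trade on RSI
    return "overbought" if rsi_value > 70.0 else "oversold"
```

In practice the threshold would be chosen from the distribution of errors seen on training data, for example a high percentile; that choice is an assumption here.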

A deep dive on the problem domain, data science and model creation is available in the Jupyter notebooks in the notebooks folder, which you can run yourself or view here on GitHub.

Be sure to view the blog for a detailed walk-through of the solution.

This repo is organised into folders, each containing a logical function of the example. A brief description of each is below:

- app

- app/bootstrap_models This is the LSTM TFX model pre-populated with the RSI example so that dashboards can immediately render RSI values. During the run-app.sh deployment of components, this model is uploaded into GCS and a new Cloud Machine Learning model version is created for the inference pipeline to use. This model is then updated by the re-training data pipeline.

- app/grafana Contains the visualization configuration used in the Grafana dashboards.

- app/java This directory holds the Dataflow pipeline code using the Dataflow samples library. The pipeline creates metrics from the prices stream.

- app/kubernetes Directory of deployment manifests for starting the Dataflow pipelines, prices generator and retraining job.

- app/python This directory contains a containerized Python program for:

  - inference and retraining pipelines

  - Pub/Sub to BigQuery pipeline

  - forex generator to create realistic prices


- docs This folder contains further example information and diagrams

- infra Contains the Cloud Build and Terraform code to deploy this example's GCP infrastructure.

- notebooks This folder has detailed AI Notebooks which step through the RSI use case from a Data Science perspective.
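The forex generator in app/python creates realistic prices, but its model is not described in this README. Purely as an illustration of one common way to synthesise price paths, the sketch below uses geometric Brownian motion; this is a swapped-in technique that may differ from the repo's generator, and `simulate_prices` and its default parameters are hypothetical.

```python
import math
import random
from typing import List


def simulate_prices(start: float, n: int, mu: float = 0.0, sigma: float = 1e-4,
                    dt: float = 1.0, seed: int = 0) -> List[float]:
    """Generate n prices following geometric Brownian motion (hypothetical generator)."""
    if n <= 0:
        return []
    rng = random.Random(seed)  # seeded so runs are reproducible
    # Exact GBM step: S(t+dt) = S(t) * exp((mu - sigma^2 / 2) * dt + sigma * sqrt(dt) * Z)
    drift = (mu - 0.5 * sigma ** 2) * dt
    vol = sigma * math.sqrt(dt)
    prices = [start]
    for _ in range(n - 1):
        prices.append(prices[-1] * math.exp(drift + vol * rng.gauss(0.0, 1.0)))
    return prices
```

Using the exact exponential step rather than an additive random walk keeps every generated price positive.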

Further information is available in the directory READMEs and the docs directory.

This example can be thought of as two distinct logical functions: one for real-time ingestion of prices and determination of RSI signals, and another for re-training the model to improve its predictions.

The logical diagram for the real-time and training components in GCP can be found in the docs directory.

A detailed list of the components and data flows can be found in the FLOWS doc.

- Three PubSub Topics:

- prices

- metrics

- reconerr

- One BigQuery Dataset with 3 Tables, schema defined in table_schemas:

- prices

- metrics

- reconerr

- One AI Platform Model

- One Cloud SQL Database for ML Metadata

- Autopilot GKE Cluster:

- price generator deployment

- grafana deployment

- TFX retraining pipeline cron job, and a singleton job to start the Dataflow streaming pipelines

- Dataflow streaming pipelines:

- Dataflow batch pipeline:

- Re-training pipeline created dynamically by TFX when the GKE cronjob is run (every hour)

This repo uses Java, Python, Cloud Build, Terraform and other technologies that require configuration. For this example, all configuration values are stored in the config.sh file. You can change any value in this file to modify the behaviour or deployment of the example.

This example is designed to be run in a fresh GCP project and requires Owner privileges on the project. All further IAM permissions are set by Cloud Build or Terraform.

Deployment of this example is done in two steps:

- infrastructure deployment into GCP using Cloud Build and Terraform

- application and pipeline deployment using Cloud Build

Both of these Cloud Build steps can be triggered using the deploy-infra.sh and run-app.sh scripts, and they require only the Google Cloud SDK (gcloud) to be installed locally.

To install this example repo into your Google Cloud project, follow the instructions in the Quickstart section. If needed, this example can be run using GCP Cloud Shell.

Further information is available in the app and infra directories.

This code is licensed under the terms of the MIT license and is available for free.

This repo has been built with the support of Google, Kasna and Eliiza. Links to the relevant documentation, libraries and resources are below:

The excellent contributors to this repo are listed in the AUTHORS file and in the git history. If you would like to contribute, please see the CODE-OF-CONDUCT and CONTRIBUTING info.