This project shows how serverless, event-driven architectures can execute code in response to messages and process streams of data records.

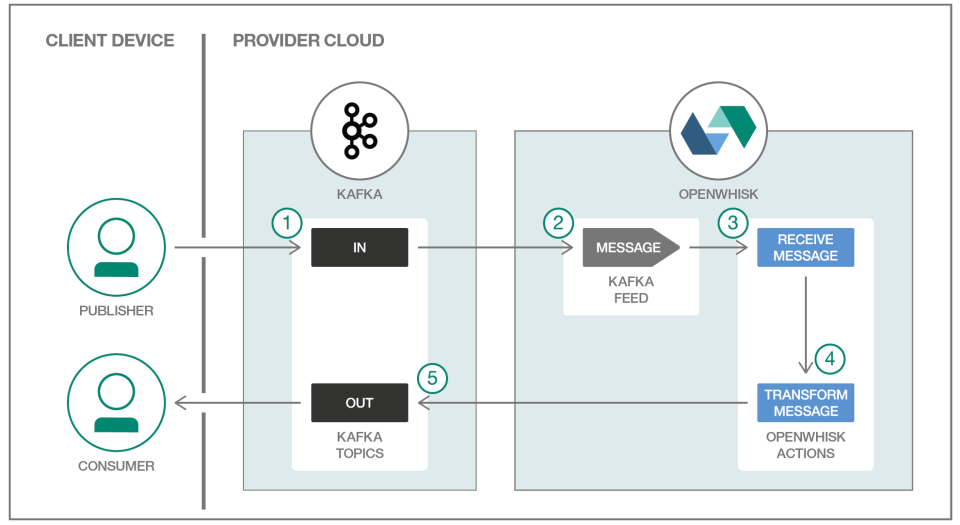

The application uses two IBM Cloud Functions actions (based on Apache OpenWhisk) that read and write messages with IBM Message Hub (based on Apache Kafka). It shows how actions work with data services and execute logic in response to message events.

One function, or action, is triggered by messages that contain one or more data records. These records are piped to a second action through a sequence (a way to link actions declaratively in a chain). The second action aggregates the messages and posts a transformed summary message to another topic.
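Conceptually, a sequence passes the JSON result of each action to the next action as its input parameters. The following is a minimal local sketch of that chaining in plain JavaScript, assuming each action is a `main(params)`-style function that returns an object or a Promise of one. It is not how OpenWhisk implements sequences internally, and the two stub actions and their field names are hypothetical stand-ins for the real actions.

```javascript
// Hypothetical stand-ins for the two actions in this sample. Each follows the
// OpenWhisk Node.js convention of taking params and returning a JSON object.
function receiveConsume(params) {
  // Collect the value of every message delivered by the trigger.
  const values = (params.messages || []).map((m) => m.value);
  return { values };
}

async function transformProduce(params) {
  // Summarize whatever the previous action returned.
  return { summary: { count: params.values.length } };
}

// Run actions in order, feeding each result into the next action as input.
async function runSequence(actions, params) {
  let result = params;
  for (const action of actions) {
    result = await action(result);
  }
  return result;
}

// Simulate a trigger payload carrying two messages.
const triggerPayload = {
  messages: [
    { topic: "in-topic", value: { id: 1 } },
    { topic: "in-topic", value: { id: 2 } },
  ],
};

runSequence([receiveConsume, transformProduce], triggerPayload)
  .then((out) => console.log(JSON.stringify(out))); // prints {"summary":{"count":2}}
```

Because each step only sees the previous step's output, the actions stay independent and can be reordered or reused in other sequences.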

This sample uses the following components:

- IBM Cloud Functions (powered by Apache OpenWhisk)

- IBM Message Hub (powered by Apache Kafka)

You should have a basic understanding of the Cloud Functions/OpenWhisk programming model. If not, try the action, trigger, and rule demo first.

Also, you'll need an IBM Cloud account and the latest OpenWhisk command line tool (wsk) installed and on your PATH.

As an alternative to this end-to-end example, you might also consider the more basic "building block" version of this sample.

This guide walks through the following steps:

- Configure IBM Message Hub

- Create OpenWhisk actions, triggers, and rules

- Test new message events

- Delete actions, triggers, and rules

- Recreate deployment manually

Log in to the IBM Cloud, provision a Message Hub instance, and name it kafka-broker. On the "Manage" tab of your Message Hub console, create two topics: in-topic and out-topic. On the "Service credentials" tab, add a new credential named Credentials-1.

Copy template.local.env to a new file named local.env and update the KAFKA_INSTANCE, SRC_TOPIC, and DEST_TOPIC values for your instance if they differ.

deploy.sh is a convenience script that reads the environment variables from local.env and creates the OpenWhisk actions, triggers, and rules on your behalf. Later, you will run the commands in the script yourself.

```bash
./deploy.sh --install
```

Note: If you see any error messages, refer to the Troubleshooting section below. You can also explore Alternative deployment methods.

Open one terminal window to poll the logs:

```bash
wsk activation poll
```

In a second terminal window, send a message with a set of events to process.
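The produce command that follows base64-encodes events.json and passes `base64DecodeValue true`, which asks messageHubProduce to decode the value before publishing it, so the source topic receives the original JSON. Encoding the file presumably avoids shell-quoting problems when passing multi-line JSON as a single parameter. This Node.js sketch reproduces the round trip locally; the sample events are hypothetical and are not the contents of events.json.

```javascript
// Hypothetical sample events; the real events.json may look different.
const events = { events: [{ type: "click" }, { type: "view" }] };
const json = JSON.stringify(events, null, 2);

// Roughly what `base64 events.json | tr -d '\r\n'` produces
// (Node's base64 output has no line wrapping to strip).
const encoded = Buffer.from(json, "utf8").toString("base64");

// What base64DecodeValue=true asks messageHubProduce to do before publishing.
const decoded = Buffer.from(encoded, "base64").toString("utf8");

console.log(decoded === json); // prints true
console.log(JSON.parse(decoded).events.length); // prints 2
```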

```bash
# Load KAFKA_INSTANCE and SRC_TOPIC (assumes local.env uses shell KEY=value lines)
source local.env

# Produce a message; this fires the trigger and runs the sequence of actions
DATA=$( base64 events.json | tr -d '\r\n' )

wsk action invoke Bluemix_${KAFKA_INSTANCE}_Credentials-1/messageHubProduce \
  --param topic "$SRC_TOPIC" \
  --param value "$DATA" \
  --param base64DecodeValue true
```

Use deploy.sh again to tear down the OpenWhisk actions, triggers, and rules. You will recreate them step by step in the next section.

```bash
./deploy.sh --uninstall
```

This section provides a deeper look into what the deploy.sh script executes so that you understand how to work with OpenWhisk triggers, actions, rules, and packages in more detail.

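First, refresh your packages so that the Message Hub service credentials and connection information are available to OpenWhisk. Then create the message-trigger trigger using the Message Hub packaged feed, which listens for new messages.

```bash
# Load KAFKA_INSTANCE, SRC_TOPIC, and DEST_TOPIC (assumes shell KEY=value lines)
source local.env

# Expose the Message Hub credentials to OpenWhisk as a package binding
wsk package refresh

# Create a trigger that fires on new messages in the source topic
wsk trigger create message-trigger \
  --feed Bluemix_${KAFKA_INSTANCE}_Credentials-1/messageHubFeed \
  --param isJSONData true \
  --param topic ${SRC_TOPIC}
```

Upload the receive-consume action as a JavaScript action. This action consumes the messages that the trigger delivers when they arrive.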
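A minimal sketch of what a consumer action like this could look like, assuming the messageHubFeed trigger delivers a `messages` array whose entries carry a `value` field (parsed into an object because the trigger was created with `isJSONData true`), and assuming each value holds a hypothetical `events` array. It is illustrative only and is not the contents of actions/receive-consume.js.

```javascript
// OpenWhisk Node.js actions define a main(params) function.
// Assumed trigger payload: { messages: [ { topic, partition, offset, key, value }, ... ] }
// Assumed (hypothetical) value shape: { events: [ ... ] }
function main(params) {
  if (!Array.isArray(params.messages) || params.messages.length === 0) {
    // OpenWhisk treats a result containing an "error" field as a failed activation.
    return { error: "Expected a non-empty 'messages' array" };
  }

  const events = [];
  for (const message of params.messages) {
    // With isJSONData=true the value should already be an object;
    // fall back to parsing in case it arrives as a string.
    const value =
      typeof message.value === "string" ? JSON.parse(message.value) : message.value;
    if (value && Array.isArray(value.events)) {
      events.push(...value.events);
    }
  }

  // The returned object becomes the input of the next action in the sequence.
  return { events };
}
```

Called locally, `main({ messages: [{ value: { events: [1, 2] } }] })` returns `{ events: [1, 2] }`.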

```bash
wsk action create receive-consume actions/receive-consume.js
```

Upload the transform-produce action. It aggregates the output of receive-consume and sends a summary JSON string to another Message Hub topic.
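A sketch of the aggregation half, assuming the previous action returns `{ events: [...] }` and that each event has a hypothetical `type` field. It counts events per type and builds the parameters for the Message Hub produce action (`topic` and `value`, as in the produce commands in this guide). Deriving the package name from the `kafka` parameter is an assumption based on the `Bluemix_${KAFKA_INSTANCE}_Credentials-1` naming used elsewhere here; this is not the contents of actions/transform-produce.js.

```javascript
// Hypothetical aggregation step in the spirit of transform-produce.
// Assumed input: { events: [...], topic, kafka }, where topic and kafka are the
// default parameters bound with --param when the action is created.

// Count events per (hypothetical) "type" field.
function summarize(events) {
  const countsByType = {};
  for (const event of events) {
    const type = event.type || "unknown";
    countsByType[type] = (countsByType[type] || 0) + 1;
  }
  return { total: events.length, countsByType };
}

// Build the request for the Message Hub produce action without sending it.
function buildProduceRequest(params) {
  const summary = summarize(params.events || []);
  return {
    // Assumption: the refreshed package binding is named after the Message Hub
    // instance and its Credentials-1 key, as in the CLI commands in this guide.
    action: `Bluemix_${params.kafka}_Credentials-1/messageHubProduce`,
    params: {
      topic: params.topic,
      value: JSON.stringify(summary), // the summary travels as a JSON string
    },
  };
}

const request = buildProduceRequest({
  events: [{ type: "click" }, { type: "view" }, { type: "click" }],
  topic: "out-topic",
  kafka: "kafka-broker",
});
console.log(request.action); // prints Bluemix_kafka-broker_Credentials-1/messageHubProduce
console.log(request.params.value); // prints {"total":3,"countsByType":{"click":2,"view":1}}
```

In a deployed action, this request would still have to be sent, for example with the openwhisk npm client's `actions.invoke`; that call is omitted so the sketch runs without credentials.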

```bash
wsk action create transform-produce actions/transform-produce.js \
  --param topic ${DEST_TOPIC} \
  --param kafka ${KAFKA_INSTANCE}
```

Link the receive-consume and transform-produce actions in a sequence named message-processing-sequence.

```bash
wsk action create message-processing-sequence --sequence receive-consume,transform-produce
```

Declare a rule named message-rule that links the trigger message-trigger to the sequence named message-processing-sequence.
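A rule binds a trigger to an action: each time message-trigger fires, message-rule invokes message-processing-sequence with the trigger's payload as its input. The toy in-memory model below illustrates only that routing. The ToyWhisk class and its methods are hypothetical and do not correspond to any OpenWhisk API, and unlike OpenWhisk, which runs each resulting activation asynchronously, this model simply awaits each bound action in turn.

```javascript
// Toy model of trigger -> rule -> action routing; names are hypothetical,
// not an OpenWhisk API.
class ToyWhisk {
  constructor() {
    this.actions = new Map(); // action name -> function(params)
    this.rules = new Map(); // rule name -> { trigger, action }
  }

  createAction(name, fn) {
    this.actions.set(name, fn);
  }

  createRule(name, trigger, action) {
    this.rules.set(name, { trigger, action });
  }

  // Firing a trigger invokes every action bound to it by a rule,
  // passing the trigger payload as the action's input parameters.
  async fire(trigger, payload) {
    const results = [];
    for (const rule of this.rules.values()) {
      if (rule.trigger === trigger) {
        results.push(await this.actions.get(rule.action)(payload));
      }
    }
    return results;
  }
}

const whisk = new ToyWhisk();
whisk.createAction("message-processing-sequence", (params) => ({
  received: params.messages.length,
}));
whisk.createRule("message-rule", "message-trigger", "message-processing-sequence");

whisk
  .fire("message-trigger", { messages: [{ value: {} }, { value: {} }] })
  .then((results) => console.log(JSON.stringify(results))); // prints [{"received":2}]
```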

```bash
wsk rule create message-rule message-trigger message-processing-sequence
```

Test the deployment by producing a message:

```bash
# Produce a message; this fires the trigger and runs the sequence
DATA=$( base64 events.json | tr -d '\r\n' )

wsk action invoke Bluemix_${KAFKA_INSTANCE}_Credentials-1/messageHubProduce \
  --param topic "$SRC_TOPIC" \
  --param value "$DATA" \
  --param base64DecodeValue true
```

If something goes wrong, check the OpenWhisk activation log first. Tail the log on the command line with wsk activation poll, or drill into details visually with the monitoring console on the IBM Cloud.

If the error is not immediately obvious, make sure you have the latest version of the wsk CLI installed. Check the installed version with the following command, and if it is older than a few weeks, download an update:

```bash
wsk property get --cliversion
```

The deploy.sh script will be replaced with wskdeploy in the future. wskdeploy uses a manifest to deploy declared triggers, actions, and rules to OpenWhisk.

You can also use the following button to clone a copy of this repository and deploy to the IBM Cloud as part of a DevOps toolchain. Supply your OpenWhisk and Message Hub credentials under the Delivery Pipeline icon, click Create, then run the Deploy stage for the Delivery Pipeline.

This project was inspired by, and reuses a significant amount of code from, this article.