Goal is to slowly implement https://github.com/ssloy/tinyrenderer in zig for learning purposes.

https://github.com/ssloy/tinyrenderer/wiki/Lesson-0:-getting-started https://github.com/ssloy/tinyrenderer/tree/909fe20934ba5334144d2c748805690a1fa4c89f

First goal is to port the TGA code, just enough to generate the example image with the red dot. I could vendor this in and directly call the C++ code from zig, but porting it is likely a better way to learn zig.

I first started off by copying a flake.nix I had from an earlier project which provides the latest zig. I then just ran zig init-exe and started hacking.

One interesting thing is that const means that all bytes related to that variable are immutable. This is unlike other languages and actually makes a lot of sense. So I had to use var on the array I created for the TGA file. I used Cody to work this out, I am starting to see the value of AI tools.
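A minimal sketch of the const vs var point, assuming Zig 0.11 or later. TGAColor here is a stand-in with made-up default fields, and withRedDot is a hypothetical helper, not code from the project.

```zig
const std = @import("std");

// Stand-in pixel type; the field layout is an assumption for illustration.
const TGAColor = struct { b: u8 = 0, g: u8 = 0, r: u8 = 0 };

/// Builds a tiny 4-pixel buffer and colours the first pixel red.
fn withRedDot() [4]TGAColor {
    // `var` is required because the array's bytes are written below.
    var pixels = [_]TGAColor{.{}} ** 4;
    pixels[0].r = 255;
    return pixels;
}

pub fn main() void {
    // A `const` array is immutable all the way down to its elements.
    const frozen = [_]TGAColor{.{}} ** 4;
    // frozen[0].r = 255; // compile error if uncommented

    const pixels = withRedDot();
    std.debug.print("frozen r={d}, pixels r={d}\n", .{ frozen[0].r, pixels[0].r });
}
```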

I spent a long time before this jumping back and forth between the zig learn site, zig std library code and my editor. I think I am gonna experiment with AI tools to help me write correct code. Additionally I need to go back over the code and try to use things like memmove/memcpy in places.

After an hour I had something which wrote out stuff to disk, but it was not in the TGA file format. Next time I will try to make the file correct.

Only had 30min tonight, but have a rendering TGA file which looks buggy as hell. Tomorrow I will have to compare hexdump output of this TGA writer vs the reference one.

It was quite amazing though, I pasted in the TGAHeader struct from C++ into Cody to translate and it did a great job, including packed struct to match the pragma used in the C++ code! I think I could have pasted in all the code and it would have done a decent job, but then I refrained since I wouldn’t learn as much that way.

Ran the upstream code (switching off RLE, run length encoding) to compare TGA output in a hex dump. This was fruitful:

First I wasn’t initializing the pixels to 0. This was easy to do:

    - var data: [100 * 100]TGAColor = undefined;
    + var data = [_]TGAColor{.{}} ** (100 * 100);

Then I noticed I was writing out RGBA but should just write out RGB. Finally I was writing down the imagedescriptor as the value 20 instead of 0x20.
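To see why 20 vs 0x20 matters, here is a small sketch that decodes the image descriptor byte. The bit layout follows the TGA format (bits 0-3 alpha depth, bit 4 right-to-left, bit 5 top-to-bottom). The Descriptor struct and decode function are hypothetical helpers for illustration.

```zig
const std = @import("std");

/// Decoded view of the TGA image descriptor byte (hypothetical helper type).
const Descriptor = struct {
    alpha_bits: u4,
    right_to_left: bool,
    top_to_bottom: bool,
};

fn decode(byte: u8) Descriptor {
    return .{
        .alpha_bits = @as(u4, @truncate(byte)), // bits 0-3
        .right_to_left = (byte & 0x10) != 0, // bit 4
        .top_to_bottom = (byte & 0x20) != 0, // bit 5
    };
}

pub fn main() void {
    // 0x20 = 0b0010_0000: only the top-to-bottom bit is set.
    std.debug.print("0x20 -> {any}\n", .{decode(0x20)});
    // 20 = 0x14 = 0b0001_0100: right-to-left plus 4 alpha bits.
    std.debug.print("20   -> {any}\n", .{decode(20)});
}
```

So decimal 20 describes a mirrored image with 4 bits of alpha, which is nothing like the top-left origin that 0x20 asks for.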

Had a very short night (just 15min), so this was as far as I got. There are still some discrepancies to dive into, hopefully my next session is longer.

https://github.com/ssloy/tinyrenderer/wiki/Lesson-1:-Bresenham%E2%80%99s-Line-Drawing-Algorithm

Only had a few minutes to hack, but did the first attempt. Zig seems a lot more explicit at casting between ints and floats, so the code was a bit verbose. Tried a bit to use cody to help with how to cast, but it generated slightly incorrect names.
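For reference, a sketch of the explicit int/float casts, assuming Zig 0.11 or later. The cast builtins were renamed in 0.11 (for example the older @intToFloat became @floatFromInt), which may be why a tool suggests slightly wrong names. lerp is a made-up helper, not the project's line code.

```zig
const std = @import("std");

/// Linear interpolation between two pixel coordinates, rounded to the
/// nearest pixel. Hypothetical helper to show the casts.
fn lerp(a: i32, b: i32, t: f32) i32 {
    // int -> float: the destination type comes from the annotation.
    const af: f32 = @floatFromInt(a);
    const bf: f32 = @floatFromInt(b);
    // float -> int: the destination type comes from the return type.
    return @intFromFloat(@round(af + (bf - af) * t));
}

pub fn main() void {
    std.debug.print("{d}\n", .{lerp(10, 20, 0.5)});
}
```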

I tried to profile the zig code as mentioned in the part about perf optimization in the line drawing. Felt like this was a good opportunity to expose myself to the tools.

First I tried to use gprof but that fails since I think you have to be compiling with gcc to pass the -pg flag. Inspecting the output of zig build gave no clues.

I then tried using perf. I got a bit further, but I don’t think perf understands the symbols in zig (likely need to pass some flag to build?). This is what I did:

    nix-shell -p linuxKernel.packages.linux_6_1.perf
    zig build
    perf record -a -F 999 -g ./zig-out/bin/zig-tinyrenderer
    perf script -i perf.data > ~/Downloads/profile.linux-perf.txt

I then opened that final file in https://www.speedscope.app/ which is really neat. I always find using the FlameGraph repo super clunky, so was nice to find a convenient interface.

While I was doing all of this I also downloaded Xcode on my macbook to try out Instruments. I created a time profile then made it target the zig binary. Ran it and it gave me great output straight away. This was a pleasant experience. In this case it told me that we spend 98.2% of the time in line, 40% of which is in calling set. I am guessing that by removing the floating point stuff we should be able to get set much closer to being where most of the time is spent.

I then remembered valgrind so tried that out on linux again. Given it isn’t a sampling profiler it is very slow.

    nix-shell -p valgrind kcachegrind
    valgrind --tool=callgrind ./zig-out/bin/zig-tinyrenderer
    kcachegrind callgrind.out.17062

This also was missing function names. I am now convinced that the error is PEBKAC so let me see if I can get debug info in my binary. I failed after toggling many a flag. Reading the build.zig and then reading TigerBeetle’s build.zig makes me like zig more though. Still don’t know why I can’t get it sorted out.

Googling didn’t give me much luck, likely time to dust off my discord accounts. Additionally there was some mention of tracy, but that seems to be about instrumenting your code with spans which likely isn’t as useful here vs a sampling profiler.

Next hacking session I’ll likely spend my time measuring relative perf improvement with something like hyperfine. Additionally the valgrind output is actually alright but I just need to guess the names of the symbols.

I probably need to experiment with better compilation options, but the floats implementation was faster. Here is the dog ugly port for not using floats:

    fn line(x0_: u32, y0_: u32, x1_: u32, y1_: u32, image: *TGAImage, color: TGAColor) void {
        var x0: i32 = @as(i32, @intCast(x0_));
        var x1: i32 = @as(i32, @intCast(x1_));
        var y0: i32 = @as(i32, @intCast(y0_));
        var y1: i32 = @as(i32, @intCast(y1_));

        // algorithm loops along the x axis, so transpose if the line is
        // longer in the y axis for better fidelity.
        const transposed = dist(i32, x0, x1) < dist(i32, y0, y1);
        if (transposed) {
            std.mem.swap(i32, &x0, &y0);
            std.mem.swap(i32, &x1, &y1);
        }

        // always draw left to right
        if (x0 > x1) {
            std.mem.swap(i32, &x0, &x1);
            std.mem.swap(i32, &y0, &y1);
        }

        const dx = x1 - x0;
        const dy: i32 = y1 - y0;

        // error terms are scaled by 2 * dx so everything stays in integers
        var derror2 = dy * 2;
        if (derror2 < 0) {
            derror2 *= -1;
        }
        var error2: i32 = 0;

        var y = y0;
        var x: i32 = x0;
        while (x <= x1) : (x += 1) {
            if (transposed) {
                image.set(@as(u32, @intCast(y)), @as(u32, @intCast(x)), color);
            } else {
                image.set(@as(u32, @intCast(x)), @as(u32, @intCast(y)), color);
            }

            error2 += derror2;
            if (error2 > dx) {
                y += if (y1 > y0) 1 else -1;
                error2 -= dx * 2;
            }
        }
    }

And the hyperfine output:

    Benchmark 1: ./zig-out/bin/zig-tinyrenderer
      Time (mean ± σ):      1.501 s ±  0.002 s    [User: 1.486 s, System: 0.014 s]
      Range (min … max):    1.497 s …  1.503 s    10 runs

    Benchmark 2: ./zig-out/bin/zig-tinyrenderer-floats
      Time (mean ± σ):      1.496 s ±  0.001 s    [User: 1.483 s, System: 0.013 s]
      Range (min … max):    1.495 s …  1.499 s    10 runs

    Summary
      './zig-out/bin/zig-tinyrenderer-floats' ran
        1.00 ± 0.00 times faster than './zig-out/bin/zig-tinyrenderer'

Enabling optimizations made a big difference. But it made the float code even faster. Maybe this is something to do with the M2?

    zig build -Doptimize=ReleaseFast

    Benchmark 1: ./zig-out/bin/zig-tinyrenderer
      Time (mean ± σ):     261.9 ms ± 140.1 ms    [User: 218.1 ms, System: 2.8 ms]
      Range (min … max):   217.0 ms … 660.7 ms    10 runs

      Warning: The first benchmarking run for this command was significantly slower than the rest (660.7 ms). This could be caused by (filesystem) caches that were not filled until after the first run. You should consider using the '--warmup' option to fill those caches before the actual benchmark. Alternatively, use the '--prepare' option to clear the caches before each timing run.

    Benchmark 2: ./zig-out/bin/zig-tinyrenderer-floats
      Time (mean ± σ):     202.2 ms ± 143.9 ms    [User: 157.7 ms, System: 2.3 ms]
      Range (min … max):   156.3 ms … 611.7 ms    10 runs

      Warning: The first benchmarking run for this command was significantly slower than the rest (611.7 ms). This could be caused by (filesystem) caches that were not filled until after the first run. You should consider using the '--warmup' option to fill those caches before the actual benchmark. Alternatively, use the '--prepare' option to clear the caches before each timing run.

    Summary
      './zig-out/bin/zig-tinyrenderer-floats' ran
        1.30 ± 1.15 times faster than './zig-out/bin/zig-tinyrenderer'

Trying to work out where I was. I think I am still on the first lesson here https://github.com/ssloy/tinyrenderer/wiki/Lesson-1:-Bresenham%E2%80%99s-Line-Drawing-Algorithm#wireframe-rendering

I have less than 30min, so will have the goal of just parsing the very first line from the object file.

I remember reading some zig code with nice ways to just read files. I have vague memories of liking something from the zig repo tools dir. Will try to find that and use it.

I am looking at the patterns in tools/docgen.zig. The first change I make is to use ArgIterator instead of directly using os.argv. Main benefit is the code is nicer, cross platform and I get zig style strings instead of C’s null terminated strings. This makes it easier to then open a file.
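A rough sketch of the ArgIterator pattern, assuming the std API from around Zig 0.11 to 0.13 (std.process.argsWithAllocator, std.fs.cwd().openFile). pathFromArgs is a hypothetical helper, and using page_allocator is just the simplest choice for a sketch.

```zig
const std = @import("std");

/// Skips the program name and returns the first real argument, if any.
/// Takes `anytype` so it works with *std.process.ArgIterator or a test fake.
fn pathFromArgs(args: anytype) ?[]const u8 {
    _ = args.skip(); // program name
    const path = args.next() orelse return null;
    return path; // a Zig slice, no manual handling of the C terminator
}

pub fn main() !void {
    var args = try std.process.argsWithAllocator(std.heap.page_allocator);
    defer args.deinit();

    const path = pathFromArgs(&args) orelse {
        std.debug.print("usage: main <file.obj>\n", .{});
        return;
    };

    const file = try std.fs.cwd().openFile(path, .{});
    defer file.close();
    const stat = try file.stat();
    std.debug.print("{s}: {d} bytes\n", .{ path, stat.size });
}
```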

Alright I spent more than 30min, did just under an hour. It was fun though. I started a parser which only parses the “v” lines and then panics. So easy to pick up for next time.

Right now I am running:

    zig run main.zig -- ../obj/african_head.obj

I did this via lots of grepping of the zig source code, using imenu in emacs and some googling. Googling helped me find the pretty neat trick of creating an enum, and then using std.meta.stringToEnum for a neat way to treat each object line type as an enum.
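The enum trick looks roughly like this. It is a sketch assuming Zig 0.11 or later (for std.mem.tokenizeAny). LineKind and classify are names I made up for the example.

```zig
const std = @import("std");

/// Line types in a Wavefront .obj file that this sketch knows about.
/// The enum field names double as the keywords in the file.
const LineKind = enum { v, vt, vn, f };

/// Returns null for anything that is not a known keyword (comments, etc).
fn classify(keyword: []const u8) ?LineKind {
    return std.meta.stringToEnum(LineKind, keyword);
}

pub fn main() void {
    const line = "vn 0.001 0.482 -0.876";
    var tokens = std.mem.tokenizeAny(u8, line, " \t");
    const keyword = tokens.next() orelse return;
    if (classify(keyword)) |kind| {
        std.debug.print("line kind: {s}\n", .{@tagName(kind)});
    } else {
        std.debug.print("unknown keyword: {s}\n", .{keyword});
    }
}
```

The nice part is that a switch over the resulting enum is checked for exhaustiveness by the compiler.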

Something I also learnt is you can’t just use imenu on say fmt.zig to see everything under the fmt import. For example it missed parseFloat because the line in fmt.zig is declared as follows:

    pub const parseFloat = @import("fmt/parse_float.zig").parseFloat;

Goal is to parse everything in the file within 25m without a panic. Right now I am splitting on space or newline. To handle comment lines I likely need to split on lines and do further parsing. I was hoping for some sort of scanf, to see how that works, but I don’t see anything exported in zig with the word scan in it, nor any other useful functions.

Managed to parse all lines except the face lines (f). The parser is super naive and would likely break on anything that isn’t african_head.obj.

Very short amount of coding due to a morning beach visit. Will just aim to parse the face (f) lines.

Took a bit of time to work out what each value is, then looked at the reference implementation and we actually only care about the first value. So implemented that after some working out of how to initialize arrays/etc in zig.
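A sketch of pulling only the first value out of each group on a face line, assuming Zig 0.11 or later (std.mem.tokenizeScalar, std.mem.splitScalar). parseFace and its error names are hypothetical. OBJ indices are 1-based, so the sketch converts them to 0-based.

```zig
const std = @import("std");

/// Parses "f a/b/c d/e/f g/h/i" and returns only the first value of each
/// group (the vertex index), converted from 1-based to 0-based.
fn parseFace(line: []const u8) ![3]u32 {
    var tokens = std.mem.tokenizeScalar(u8, line, ' ');
    _ = tokens.next(); // skip the "f" keyword

    var face: [3]u32 = undefined; // every slot is written before returning
    for (&face) |*slot| {
        const group = tokens.next() orelse return error.TooFewVertices;
        var parts = std.mem.splitScalar(u8, group, '/');
        const index = try std.fmt.parseInt(u32, parts.first(), 10);
        if (index == 0) return error.InvalidIndex;
        slot.* = index - 1;
    }
    return face;
}

pub fn main() !void {
    const face = try parseFace("f 24/1/24 25/2/25 26/3/26");
    std.debug.print("{any}\n", .{face});
}
```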

When looking at the reference implementation it only handles vertices and faces. I wanna simplify this implementation to only support those two. However, I also wanna see what it is like to implement an iterator in zig.

I am looking at how tokenize works in the stdlib. There is some magic here which is pretty damn cool and I didn’t realise zig did this. So tokenizeAny returns the type TokenIterator(T, .any). TokenIterator is a function, but it’s a function which returns a type. So at comptime zig calls the function! So cool.

I wasn’t sure what .any was. I thought it was something special. Turns out the 2nd arg is an enum, where any is one of the values. So cool.

I don’t think I need comptime stuff here, so will see how to construct this. Actually if I want to take in an iterator for u8, it needs to be generic. This makes me think I should try out comptime stuff. But it is likely too fancy, and all I really need is to implement a function which will return an iterator over the fully read in []u8. Then the iterator type will be mem.TokenIterator(u8, .any).
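To convince myself how this works, here is a small sketch, assuming Zig 0.11 or later. Matcher and Mode are invented names that mimic the shape of TokenIterator (a function taking a type and an enum value, returning a struct type). countTokens shows spelling out the std iterator type by hand.

```zig
const std = @import("std");

const Mode = enum { any, all };

/// A function that returns a type; the call is evaluated at compile time.
fn Matcher(comptime T: type, comptime mode: Mode) type {
    return struct {
        items: []const T,
        needle: T,

        /// .any: does at least one item match? .all: does every item match?
        fn check(self: @This()) bool {
            for (self.items) |item| {
                const hit = item == self.needle;
                switch (mode) {
                    .any => if (hit) return true,
                    .all => if (!hit) return false,
                }
            }
            return mode == .all;
        }
    };
}

/// The std iterator type written out explicitly, as described above.
fn countTokens(text: []const u8) usize {
    var it: std.mem.TokenIterator(u8, .any) = std.mem.tokenizeAny(u8, text, " \n");
    var n: usize = 0;
    while (it.next()) |_| n += 1;
    return n;
}

pub fn main() void {
    const m = Matcher(u8, .any){ .items = "abc", .needle = 'b' };
    std.debug.print("{} {d}\n", .{ m.check(), countTokens("v 1 2 3\nf 1 2 3") });
}
```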

I have a short amount of time today, so just going to focus on how I would represent the type returned by the iterator. My focus will just be on face and vertex, and the face can only have 3 vertices so that I can represent it on the stack.

https://zig.news/edyu/zig-unionenum-wtf-is-switchunionenum-2e02 seems like a good article on what I want to do. What I want is called a tagged union and looks like union(enum).

It took me a while to work out how to get the enum type from the tagged union. meta.stringToEnum would complain I was passing in a union. Initially I just declared the enum and repeated myself. But then found meta.Tag which extracts the tag type. The way I found this was grepping for @as.*enum and then looking for results relating to unions, which then had a test asserting that the type for a tagged union’s enum was the same as calling meta.Tag… this isn’t at all obvious lol.
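Roughly what this ends up looking like, as a sketch. Entity, EntityKind and describe are placeholder names, and the payload types are my guess at something stack-friendly rather than the project's real types.

```zig
const std = @import("std");

/// A tagged union: the compiler generates the tag enum from the field names.
const Entity = union(enum) {
    v: [3]f32, // vertex position
    f: [3]u32, // face as three vertex indices
};

/// std.meta.Tag recovers the generated enum, so it does not need repeating.
const EntityKind = std.meta.Tag(Entity);

fn describe(e: Entity) []const u8 {
    return switch (e) {
        .v => "vertex",
        .f => "face",
    };
}

pub fn main() void {
    // stringToEnum wants an enum type, so pass the tag type, not the union.
    const kind = std.meta.stringToEnum(EntityKind, "f") orelse return;
    const e = switch (kind) {
        .v => Entity{ .v = .{ 0.0, 0.0, 0.0 } },
        .f => Entity{ .f = .{ 0, 1, 2 } },
    };
    std.debug.print("{s}\n", .{describe(e)});
}
```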

But then it was pretty smooth after that and now I just have it printing out the entities. Next time I can turn this into an iterator.

Only goal for today is to make the iterator, then I will finally be more productive and do things like rendering.

Actually as I was implementing it I realized it would be nicer to just wrap the iterator. But I will stick with the more verbose implementation since I feel like it is more standard. Maybe tomorrow follow up with comptime stuff.

Code feels nice like this. I tried to define the error set for the iterator, but had trouble working out how to union error sets. So instead I am just relying on the inferred error set. I could also explicitly list out the error set enum. It seems pretty cool in zig, the error set is basically a global enum, so if you reuse a name you get the same enum value.
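For later reference, error sets can be merged with the || operator. Below is a sketch of an iterator with an explicit error set, assuming Zig 0.11 or later. NumberLines, ParseError and the toy "n <int>" line format are invented for the example, it is not the obj parser.

```zig
const std = @import("std");

const ParseError = error{ UnknownKeyword, MissingField };

/// `||` merges two error sets into one.
const NextError = ParseError || std.fmt.ParseIntError;

/// Iterates over lines of the form "n <integer>".
const NumberLines = struct {
    lines: std.mem.TokenIterator(u8, .scalar),

    fn init(text: []const u8) NumberLines {
        return .{ .lines = std.mem.tokenizeScalar(u8, text, '\n') };
    }

    /// Returns null at end of input, or an error on a malformed line.
    fn next(self: *NumberLines) NextError!?u32 {
        const line = self.lines.next() orelse return null;
        var fields = std.mem.tokenizeScalar(u8, line, ' ');
        const keyword = fields.next() orelse return error.MissingField;
        if (!std.mem.eql(u8, keyword, "n")) return error.UnknownKeyword;
        const value = fields.next() orelse return error.MissingField;
        return try std.fmt.parseInt(u32, value, 10);
    }
};

pub fn main() !void {
    var it = NumberLines.init("n 1\nn 22");
    while (try it.next()) |n| {
        std.debug.print("{d}\n", .{n});
    }
}
```

The `while (try it.next()) |n|` loop is the usual way to consume an iterator whose next can fail.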

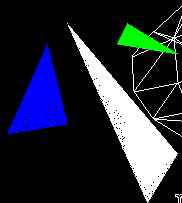

Rendered the model to disk now. I had some issues with out of bounds vertices, mainly around hitting the width or height. I think that is likely due to rounding up behaviour when scaling the image to fit.
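One way the out of bounds case can show up, assuming model coordinates lie in [-1, 1]: scaling with (x + 1) * width / 2 maps x = 1 to exactly width, which is one past the last pixel. A sketch of a mapping that stays in range, assuming Zig 0.11 or later (toPixel is a hypothetical helper and size must be at least 1):

```zig
const std = @import("std");

/// Maps a coordinate in [-1, 1] to a pixel index in [0, size - 1].
/// Scales by (size - 1) rather than size so that 1.0 lands on the last pixel.
fn toPixel(coord: f32, size: u32) u32 {
    const max: f32 = @floatFromInt(size - 1);
    const scaled = (coord + 1.0) * 0.5 * max;
    // Clamp in case the input strays slightly outside [-1, 1].
    const clamped = std.math.clamp(scaled, 0.0, max);
    return @intFromFloat(@round(clamped));
}

pub fn main() void {
    std.debug.print("{d} {d}\n", .{ toPixel(-1.0, 100), toPixel(1.0, 100) });
}
```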

https://github.com/ssloy/tinyrenderer/wiki/Lesson-2:-Triangle-rasterization-and-back-face-culling

Today I am just gonna see how to implement the Vec2i class he uses, but without templates. I managed to do that fairly quickly, but gonna call it at that. Also refactored code to use that instead + drew the 3 triangles. Next time I will attempt his bullet points at improving the triangle function.

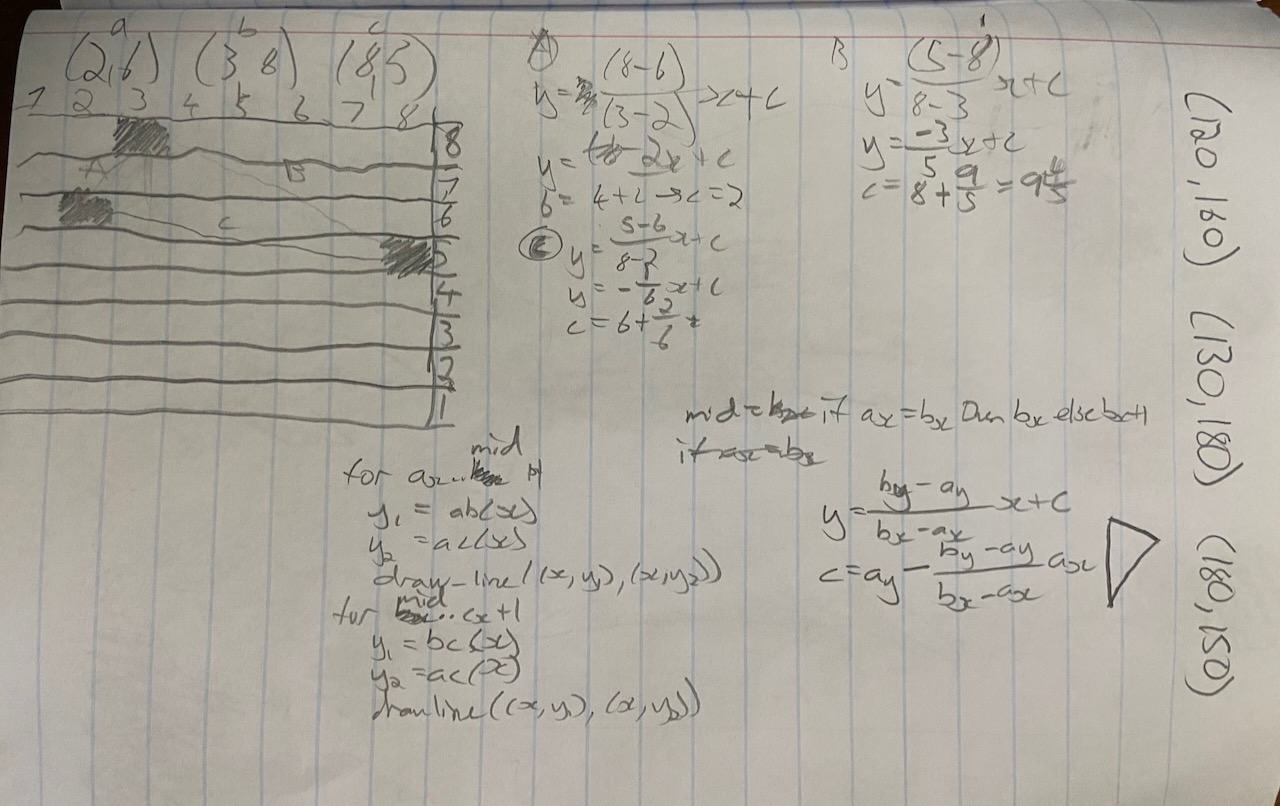

Yesterday got out a pencil and paper and derived some high school math around how to draw a filled triangle. Was a lot of fun. Gonna likely spend some time playing around with this until I am happy, then will look up what is the fast way.

Right now what I have is: sort the points by x value, then label them A B C. You then walk x along the lines AB and AC, asking what y is on each line for that x. Once AB ends you switch to BC. For each pair of points you fill in all y between the two.
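The steps above can be sketched like this, assuming Zig 0.11 or later. It fills a small character grid instead of a TGA image, uses truncating integer division for y, and assumes all points are inside the grid. Point, yAt and fillTriangle are names for this sketch only, not my actual code.

```zig
const std = @import("std");

const Point = struct { x: i32, y: i32 };
const W = 8;
const H = 8;

/// y on the line through p and q at the given x; assumes p.x != q.x.
fn yAt(p: Point, q: Point, x: i32) i32 {
    return p.y + @divTrunc((q.y - p.y) * (x - p.x), q.x - p.x);
}

fn fillTriangle(grid: *[H][W]u8, p0: Point, p1: Point, p2: Point) void {
    var pts = [_]Point{ p0, p1, p2 };
    // Sort by x so the points are A, B, C from left to right.
    if (pts[0].x > pts[1].x) std.mem.swap(Point, &pts[0], &pts[1]);
    if (pts[1].x > pts[2].x) std.mem.swap(Point, &pts[1], &pts[2]);
    if (pts[0].x > pts[1].x) std.mem.swap(Point, &pts[0], &pts[1]);
    const a = pts[0];
    const b = pts[1];
    const c = pts[2];
    if (a.x == c.x) return; // zero width, nothing to scan

    var x = a.x;
    while (x <= c.x) : (x += 1) {
        const on_long = yAt(a, c, x);
        // Follow AB until it ends, then BC. The middle branch guards the
        // vertical edge BC, where yAt would divide by zero.
        const on_short = if (x < b.x)
            yAt(a, b, x)
        else if (b.x == c.x)
            b.y
        else
            yAt(b, c, x);

        // Fill every y between the two edges for this column.
        var y = @min(on_long, on_short);
        const y_end = @max(on_long, on_short);
        while (y <= y_end) : (y += 1) {
            grid[@intCast(y)][@intCast(x)] = '#';
        }
    }
}

pub fn main() void {
    var grid = [_][W]u8{[_]u8{'.'} ** W} ** H;
    fillTriangle(&grid, .{ .x = 0, .y = 0 }, .{ .x = 4, .y = 0 }, .{ .x = 0, .y = 4 });
    for (grid) |row| std.debug.print("{s}\n", .{row});
}
```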

I realise I can likely convert the bresenham algorithm into an iterator for reuse here. I think I will first implement the shitty floating point one just to see something, then will work out how to reuse the line drawing logic.

Another minor perf change I realised I need is likely sorting by y since in the underlying datastructure (x, y) and (x+1, y) live next to each other in memory.

[2024-01-22 Mon 09:02] Implemented it, but there are a bunch of artifacts in this simple implementation which are surprising. Makes me think my draw_line algorithm is a bit messed up.

Today just wanna do a little work to try and fix the artifacts, plus rework it to scan along the y axis instead of the x axis.

Refactor was mostly copy pasta, artifacts slightly changed. Then implemented a more direct filling of lines (not via draw_line) and the artifacts are gone.

Took a look at his code now, he uses the same approach. His calculation of x is a little different, I think he simplified it a bit more.

He then goes on to another way to draw triangles, which instead finds the bounding box and then checks for each pixel whether it is inside the triangle before drawing it. I will spend the rest of the time reading, but don’t think I will implement his version unless it seems useful to learn a primitive from it.

Alright, so the implementation does more floating point operations per pixel. However, I have forgotten all of the math, so I think my next session will be pencil and paper to revise this stuff.

I never felt like I understood linear algebra in uni, it just felt like I learnt the rules. So gonna take the time to brush up enough to feel confident with the explanation of all the vector and matrix math being used.

I watched (at a higher speed) the first 3 videos of 3Blue1Brown Essence of linear algebra and the first video on vector spaces in Khan Academy Linear Algebra. As I watched I paused and wrote things down, including re-remembering how matrix vector multiplication worked.

The 3Blue1Brown video on linear transformations is where it finally clicked how 2d matrices are used to transform stuff in R2. I have just blindly copy pasted this in opengl coding in the past. What made it click was proving to myself why any linear transformation can be represented with a matrix (using things I remember about how linear transformations are defined in general on vector spaces). Then, when he showed an example in the video, I derived the matrix myself first. Additionally the visuals in the video helped cement it.

Linear algebra was my least favourite math course in my math major. I feel like I may now try to relearn it since I was just missing that intuition which I just gained.

The code he presents is really nice, plus I ran into some bugs with the triangle filling code I wrote when trying to render all the faces. I fixed some of the bugs like division by zero, but I believe there is something I am missing in my algorithm. Given the many months that have passed since I last worked on this project I am gonna take this linear algebra code as gospel and implement it. I’ll have to come back another time to see if I can gain a deeper understanding of the math.

It still has a bunch of artifacts. Diving into the code he actually uses, he doesn’t use the barycentric code, so I am guessing there are issues with it that I am not aware of. In particular it seems that this algorithm is sensitive to the ordering of the points. I tried implementing counter clockwise sorting, and it looks mostly correct but I still worry about artifacts.
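One possible source of ordering sensitivity (an assumption on my part, not something I have confirmed in my code): the signed area used by the inside test flips sign with the winding order, so a test that only accepts one sign rejects every pixel of a triangle wound the other way. A sketch of an integer edge-function test that accepts either winding, with made-up names Vec2, edge and inside:

```zig
const std = @import("std");

const Vec2 = struct { x: i32, y: i32 };

/// Twice the signed area of triangle (a, b, c).
/// The sign flips when the winding order of the points is reversed.
fn edge(a: Vec2, b: Vec2, c: Vec2) i32 {
    return (b.x - a.x) * (c.y - a.y) - (b.y - a.y) * (c.x - a.x);
}

/// True if p is inside or on the boundary of triangle (a, b, c),
/// whichever way the triangle is wound.
fn inside(a: Vec2, b: Vec2, c: Vec2, p: Vec2) bool {
    const w0 = edge(b, c, p);
    const w1 = edge(c, a, p);
    const w2 = edge(a, b, p);
    const all_nonneg = w0 >= 0 and w1 >= 0 and w2 >= 0;
    const all_nonpos = w0 <= 0 and w1 <= 0 and w2 <= 0;
    return all_nonneg or all_nonpos;
}

pub fn main() void {
    const a = Vec2{ .x = 0, .y = 0 };
    const b = Vec2{ .x = 4, .y = 0 };
    const c = Vec2{ .x = 0, .y = 4 };
    const p = Vec2{ .x = 1, .y = 1 };
    std.debug.print("area signs: {d} {d}\n", .{ edge(a, b, c), edge(a, c, b) });
    std.debug.print("inside: {} {}\n", .{ inside(a, b, c, p), inside(a, c, b, p) });
}
```

Dividing w0, w1 and w2 by edge(a, b, c) gives the barycentric weights, which is where the same sign issue would come from.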