Study meetup RSVP stream data

Meetup RSVP stream API:

http://stream.meetup.com/2/rsvps

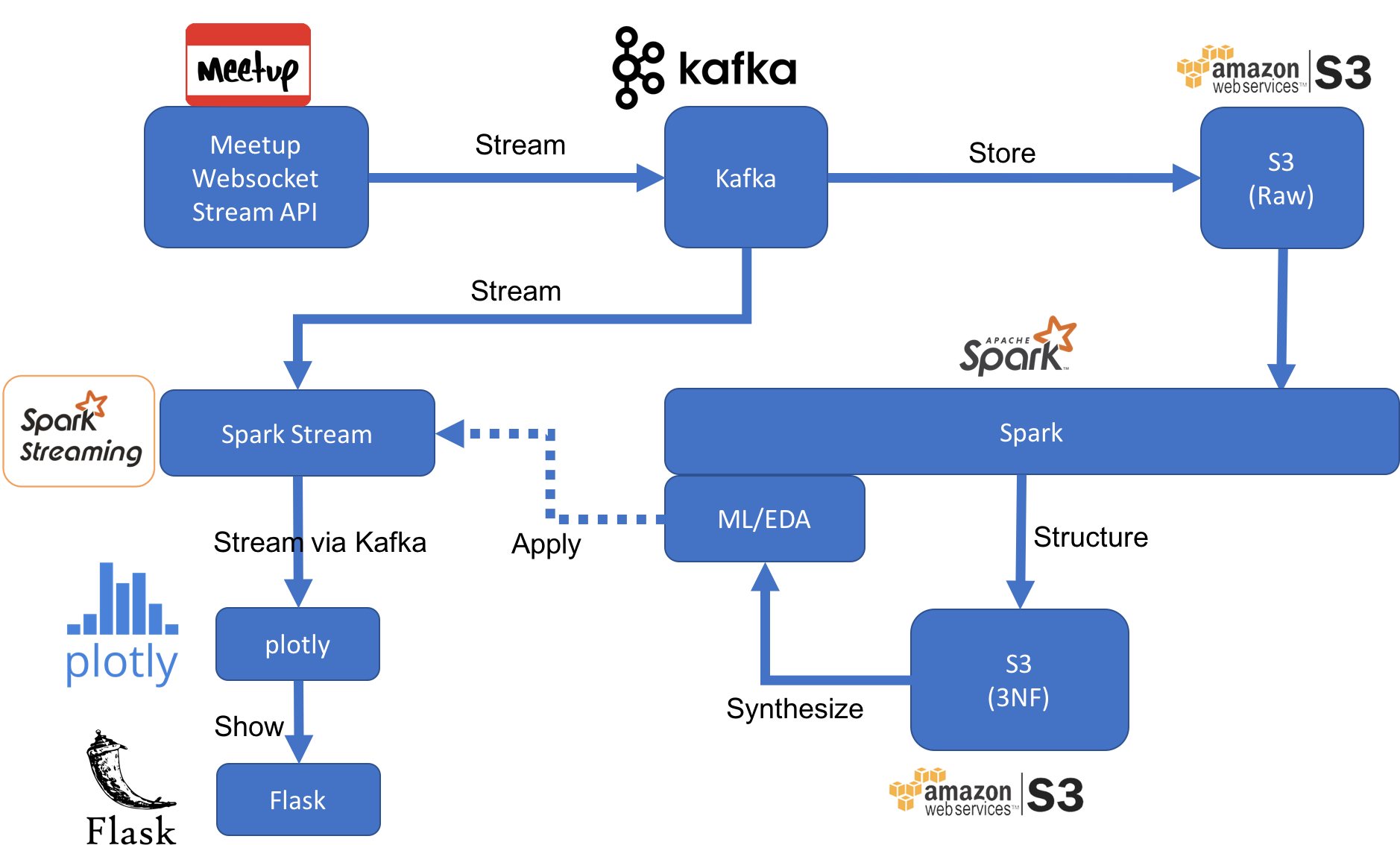

The initial idea of the target architecture is shown below.

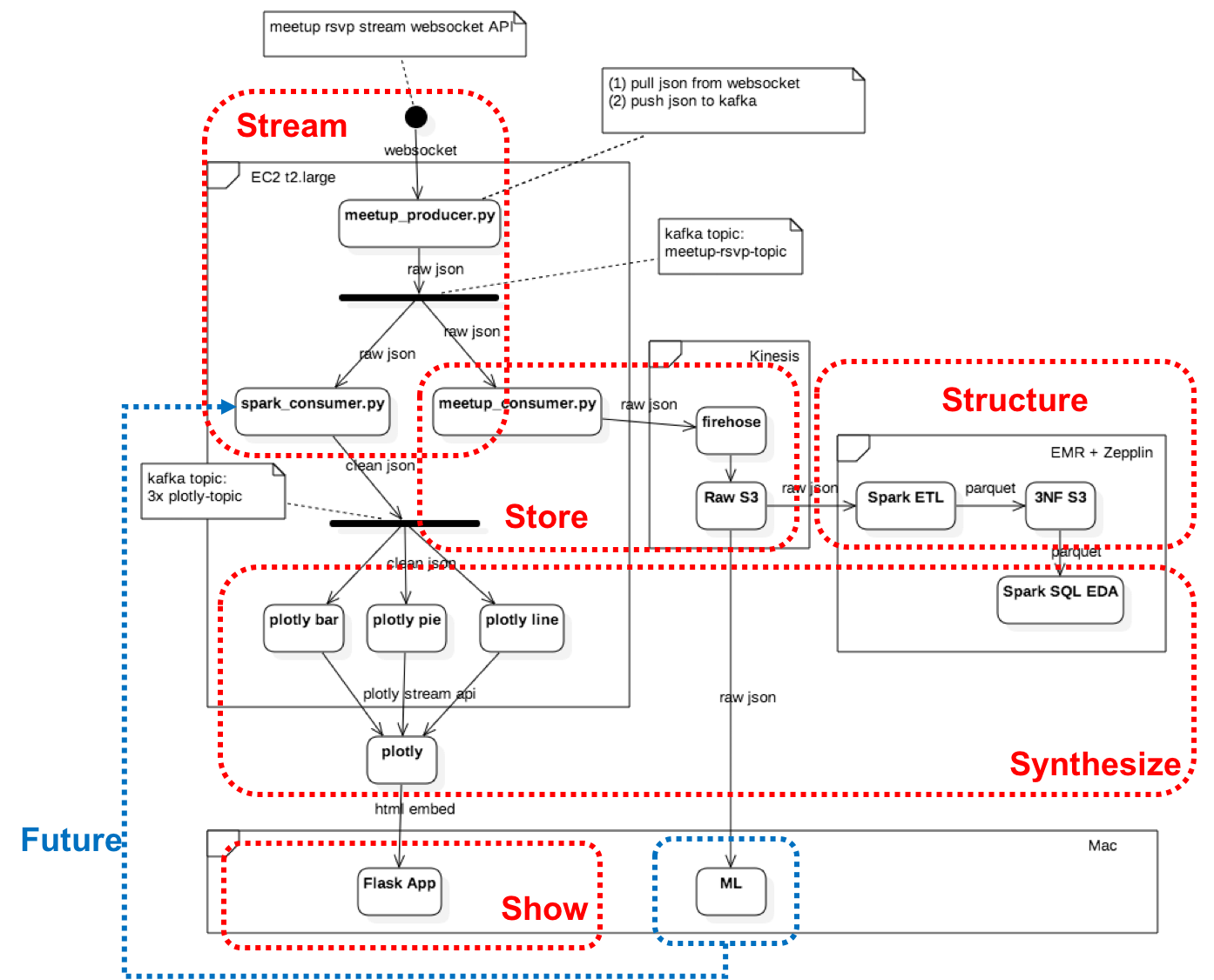

The actual implementation consists of the following components.

- Kafka Websocket Producer: receive from Meetup Websocket Stream API, pipe to 1st Kafka topic

- Kafka Instance: running in EC2

- Kafka Spark Streaming Consumer: receive from 1st Kafka topic, pipe to Spark Streaming for real-time analysis, then forward transformed data to 2nd Kafka topic

- Kafka Firehose Consumer: receive from 1st Kafka topic, forward raw data to Kinesis Firehose

- Raw S3: receive from Firehose, store raw data in S3 bucket for batch analysis at a later stage

- Spark ETL: read raw data from S3, perform ETL to transform raw data into structured data, persisted as Spark DataFrame parquet in a separate S3 location

- 3NF S3: parquet-ed Spark DataFrame version of structured ETL data. The ETL-transformed data shall conform to 3rd Normal Form.

- Spark SQL EDA: load the parquet-ed DataFrame, perform simple EDA on meetup RSVP data, e.g. who is the most active user, which is the most popular group, etc.

- Plotly Updater: Kafka consumers that receive from 2nd Kafka topic, transform data and update Plotly charts via the Plotly streaming API.

- Machine Learning:

  - download parquet data from S3 to local (e.g. aws s3 sync)

  - load DataFrame in sklearn

  - perform hierarchical clustering using urlkeys

  - perform regression with ensemble method to predict cluster label

- Flask: Python web server to serve templated web pages

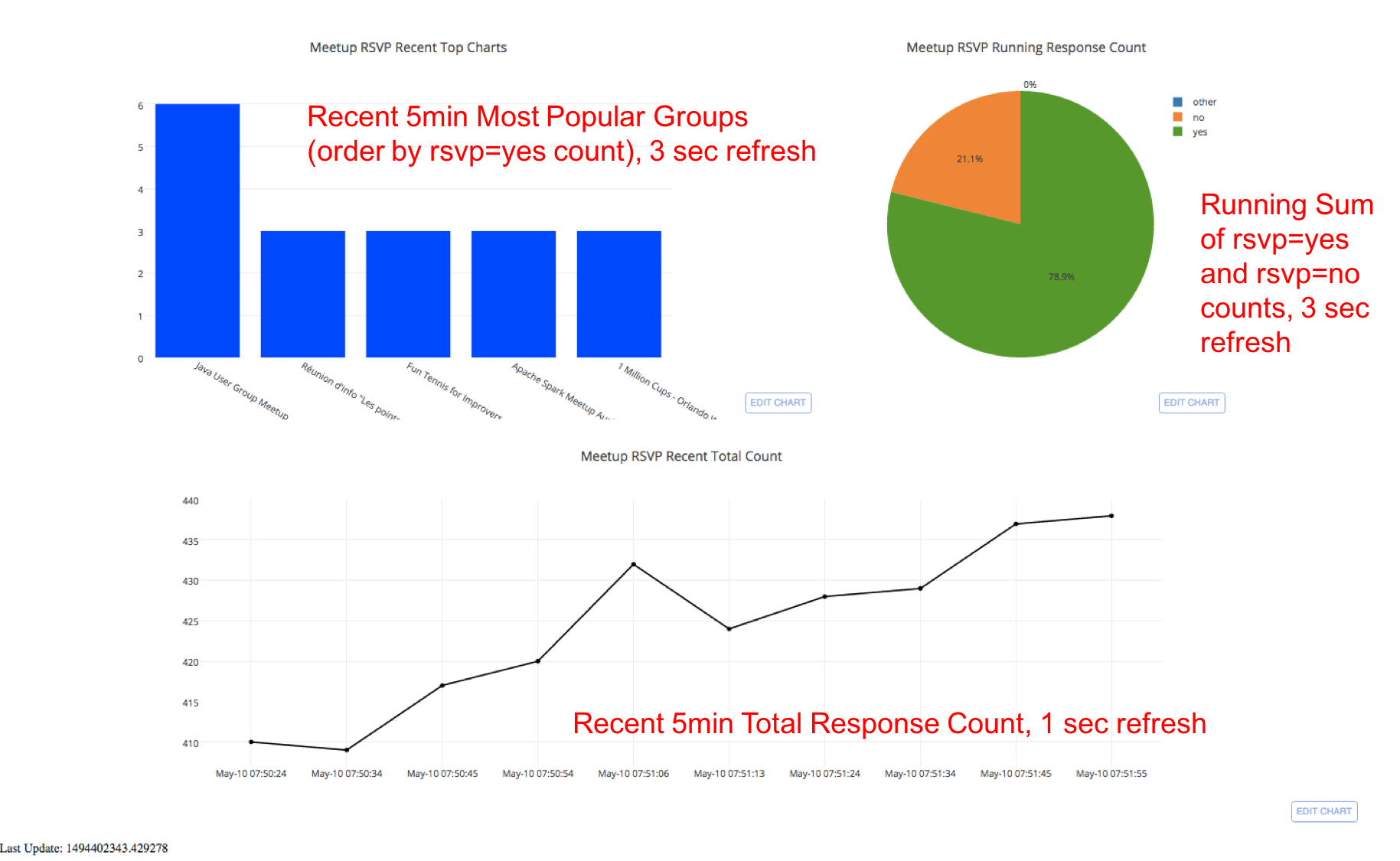

- Plotly: real-time dynamic charts to show dashboard about streaming data
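The Spark ETL component above turns raw RSVP JSON into structured records that conform to 3NF. The following is a minimal plain-Python sketch of that idea, not the project's actual Spark job. The function name `normalize_rsvp` and the field names (`rsvp_id`, `member`, `group`, `group_topics`, `urlkey`, and so on) are assumptions about the RSVP payload and may not match the real stream exactly.

```python
import json


def normalize_rsvp(raw_line):
    """Split one raw RSVP JSON line into rows for separate tables.

    Each entity (member, group, event) gets its own row keyed by its id,
    and the RSVP row only keeps foreign keys, which is the core of 3NF.
    Field names are assumed, not taken from the project.
    """
    rsvp = json.loads(raw_line)
    member, group, event = rsvp["member"], rsvp["group"], rsvp["event"]
    return {
        "member": (member["member_id"], member.get("member_name")),
        "group": (group["group_id"], group.get("group_name")),
        "event": (event["event_id"], event.get("event_name"), group["group_id"]),
        # One row per (group, topic urlkey) pair instead of a nested list.
        "group_topic": [
            (group["group_id"], topic["urlkey"])
            for topic in group.get("group_topics", [])
        ],
        "rsvp": (
            rsvp["rsvp_id"],
            member["member_id"],
            event["event_id"],
            rsvp["response"],
            rsvp.get("guests", 0),
        ),
    }


# Hypothetical sample record, shaped like the assumed payload.
sample = json.dumps({
    "rsvp_id": 1001,
    "response": "yes",
    "guests": 0,
    "member": {"member_id": 1, "member_name": "Alice"},
    "event": {"event_id": "e1", "event_name": "Intro to Spark"},
    "group": {
        "group_id": 10,
        "group_name": "Data Meetup",
        "group_topics": [
            {"urlkey": "big-data", "topic_name": "Big Data"},
            {"urlkey": "python", "topic_name": "Python"},
        ],
    },
})
rows = normalize_rsvp(sample)
```

In a Spark job the same split would typically be expressed as DataFrame selections written to separate parquet locations; the per-record function here only illustrates which columns end up in which table.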

- Kafka: current setup is a single instance, i.e. a single point of failure (SPoF). Future: scale out

- Spark: current setup uses an EMR cluster, already fault tolerant; RDD + DAG deliver a resilient abstraction

- S3: rely on Amazon to make it fault tolerant (hopefully...)

- Flask: current setup is a single Flask instance, SPoF. Future: use an Nginx/Apache HTTP server + scale out

- Spark RDDs are immutable, which delivers the "read only" and "write only" aspects

- Spark Streaming works on "mini batches", which delivers (relatively) low latency

- Kafka: future scale out

- Spark: already scalable with EMR

- S3: already scalable

- Flask: future scale out (cluster + load balancer + stateless)

- The architecture can be generalized to any near real-time streaming text processing workload by replacing the business logic part

- For true real-time streaming, an event-based approach needs to be explored

- Kafka vanilla producer/consumer is flexible enough to handle infrequent data model changes (e.g. by changing both sides)

- Future: adopt Avro producer/consumer for dynamic typing

- Offline batch aspect is supported by Spark SQL

- Real-time aspect is not supported for now. Future: implement a lambda architecture to support ad-hoc queries by taking the union of the query results from both the stream and batch sides

- Use Vagrant and a start-up script for deployment automation and easy maintenance

- Use EC2 features (e.g. snapshot, monitor) for fast recovery

- EC2 trace logs: they are there, though not obvious to find
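The "mini batches" point above can be illustrated without Spark. The sketch below groups timestamped events into fixed-width windows, which is the general micro-batch idea; it is plain Python, not the Spark Streaming API, and the function name `micro_batches`, the event shape, and the 2-second interval are all assumptions made for illustration.

```python
from collections import defaultdict


def micro_batches(events, interval_ms):
    """Group (timestamp_ms, payload) events into fixed-width time windows.

    Returns a dict mapping each window start time to the payloads that
    fall inside [start, start + interval_ms).
    """
    batches = defaultdict(list)
    for timestamp_ms, payload in events:
        window_start = (timestamp_ms // interval_ms) * interval_ms
        batches[window_start].append(payload)
    return dict(sorted(batches.items()))


# Hypothetical RSVP responses with arrival times in milliseconds.
events = [(1000, "yes"), (1500, "no"), (2100, "yes"), (3999, "yes"), (4000, "no")]
batches = micro_batches(events, interval_ms=2000)

# Per-batch aggregate, e.g. the number of "yes" responses in each window.
yes_per_batch = {start: items.count("yes") for start, items in batches.items()}
```

Because a result for an event only becomes available once its window closes, latency is bounded below by the batch interval, which is why the approach is near real-time rather than truly real-time.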

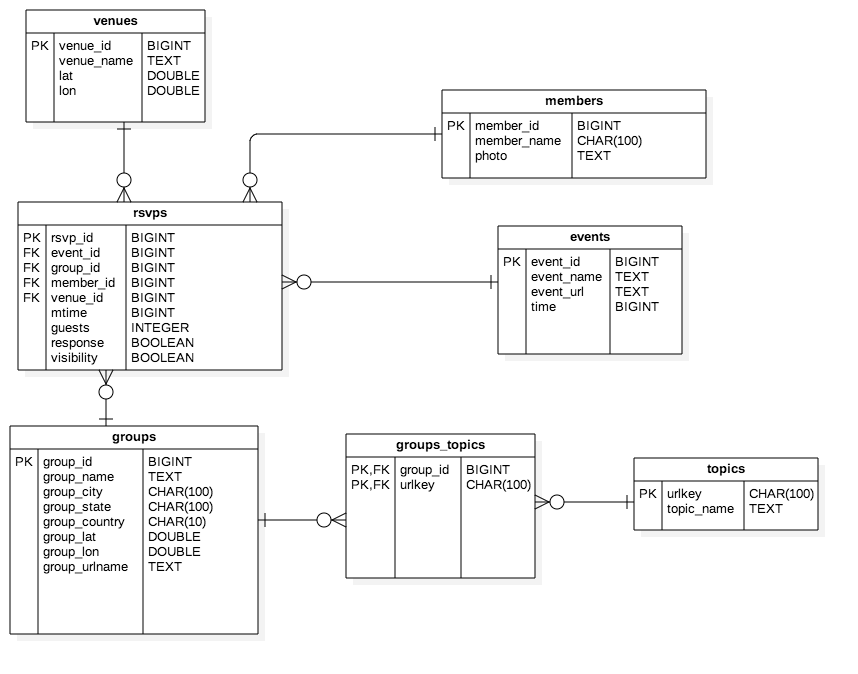

Both the parquet-ed S3 DataFrame and the data persisted in the PostgreSQL RDBMS shall conform to 3rd Normal Form (3NF).

The ER diagram is shown below.
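To make the 3NF requirement and the EDA questions (most active user, most popular group) concrete, here is a small sketch with hypothetical tables. The table and column names are assumptions and are not taken from the ER diagram. It uses Python's built-in `sqlite3` so that it runs without a server; the same SQL should carry over to PostgreSQL with at most minor changes.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Hypothetical 3NF schema: every non-key column depends only on its table's key.
# The group table is named "grp" because GROUP is a reserved SQL keyword.
conn.executescript("""
CREATE TABLE member (member_id INTEGER PRIMARY KEY, member_name TEXT);
CREATE TABLE grp    (group_id  INTEGER PRIMARY KEY, group_name  TEXT);
CREATE TABLE event  (event_id  TEXT PRIMARY KEY, event_name TEXT,
                     group_id  INTEGER REFERENCES grp(group_id));
CREATE TABLE rsvp   (rsvp_id   INTEGER PRIMARY KEY,
                     member_id INTEGER REFERENCES member(member_id),
                     event_id  TEXT REFERENCES event(event_id),
                     response  TEXT);
""")

# Made-up sample rows, for illustration only.
conn.executemany("INSERT INTO member VALUES (?, ?)", [(1, "Alice"), (2, "Bob")])
conn.executemany("INSERT INTO grp VALUES (?, ?)", [(10, "Python"), (20, "Scala")])
conn.executemany(
    "INSERT INTO event VALUES (?, ?, ?)",
    [("e1", "Intro", 10), ("e2", "Advanced", 10), ("e3", "Basics", 20)],
)
conn.executemany(
    "INSERT INTO rsvp VALUES (?, ?, ?, ?)",
    [(100, 1, "e1", "yes"), (101, 1, "e2", "yes"),
     (102, 1, "e3", "no"), (103, 2, "e1", "yes")],
)

# Most active user: the member with the most RSVPs.
most_active = conn.execute("""
    SELECT m.member_name, COUNT(*) AS n
    FROM rsvp r JOIN member m ON m.member_id = r.member_id
    GROUP BY m.member_id, m.member_name
    ORDER BY n DESC LIMIT 1
""").fetchone()

# Most popular group: the group whose events received the most RSVPs.
most_popular = conn.execute("""
    SELECT g.group_name, COUNT(*) AS n
    FROM rsvp r
    JOIN event e ON e.event_id = r.event_id
    JOIN grp g   ON g.group_id = e.group_id
    GROUP BY g.group_id, g.group_name
    ORDER BY n DESC LIMIT 1
""").fetchone()
```

Keeping names in `member` and `grp` rather than repeating them on every RSVP row is what lets these queries join on ids instead of deduplicating strings.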

- Build a full-fledged Lambda architecture to "merge" the stream and batch query results and show them in the Web HMI

- Distributed Kafka setup

- Shift to MongoDB to support a flexible/free schema

- More machine learning tasks

- Query Interface

- Scale out Flask to an Nginx/Apache HTTP server cluster + load balancer
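The Lambda architecture item above amounts to merging a batch view with a stream view at query time. A minimal sketch of that merge follows, assuming both sides produce per-key counts and cover disjoint time ranges (batch up to the last batch run, stream for everything after it). The function name `merge_views` and the sample counts are hypothetical.

```python
from collections import Counter


def merge_views(batch_view, speed_view):
    """Combine per-key counts from the batch layer and the speed layer.

    Assumes the two views cover non-overlapping time ranges, so adding
    the counts does not double count any RSVP.
    """
    merged = Counter(batch_view)
    merged.update(speed_view)  # Counter.update adds counts key by key
    return dict(merged)


# Hypothetical RSVP counts per topic urlkey.
batch_view = {"python": 120, "scala": 45}   # e.g. computed by Spark SQL over S3
speed_view = {"python": 3, "rust": 1}       # e.g. computed by Spark Streaming
merged = merge_views(batch_view, speed_view)
```

If the two time ranges overlapped, the merge would need a cut-off timestamp on one side to avoid counting the same RSVP twice.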