VectorizedMultiAgentSimulator (VMAS)

Welcome to VMAS!

This repository contains the code for the Vectorized Multi-Agent Simulator (VMAS).

VMAS is a vectorized framework designed for efficient MARL benchmarking. It consists of a vectorized 2D physics engine written in PyTorch and a set of challenging multi-robot scenarios. Scenario creation is made simple and modular to incentivize contributions. VMAS simulates agents and landmarks of different shapes and supports rotations, elastic collisions, and custom gravity. Holonomic motion models are used for the agents to simplify simulation. Custom sensors such as LIDARs are available, and the simulator supports inter-agent communication. Vectorization in PyTorch allows VMAS to perform simulations in a batch, seamlessly scaling to tens of thousands of parallel environments on accelerated hardware. VMAS has an interface compatible with OpenAI Gym and with the RLlib library, enabling out-of-the-box integration with a wide range of RL algorithms. The implementation is inspired by OpenAI's MPE. Alongside VMAS's scenarios, we port and vectorize all the scenarios in MPE.

Paper

The paper is available on arXiv (arXiv:2207.03530).

If you use VMAS in your research, cite it using:

```bibtex
@article{bettini2022vmas,
  title = {VMAS: A Vectorized Multi-Agent Simulator for Collective Robot Learning},
  author = {Bettini, Matteo and Kortvelesy, Ryan and Blumenkamp, Jan and Prorok, Amanda},
  year = {2022},
  journal = {arXiv preprint arXiv:2207.03530},
  url = {https://arxiv.org/abs/2207.03530}
}
```

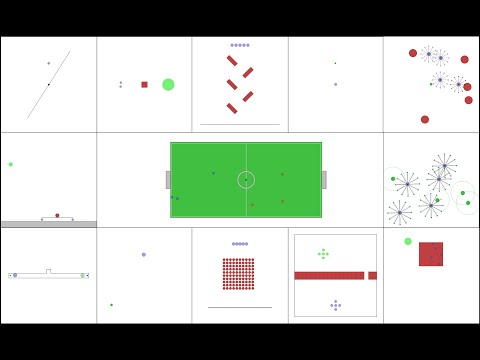

Video

Watch the presentation video of VMAS, showing its structure, scenarios, and experiments.

How to use

Notebooks

- Using a VMAS environment. Here is a simple notebook that you can run to create, step and render any scenario in VMAS. It reproduces the use_vmas_env.py script in the examples folder.

- Using VMAS in RLlib. In this notebook, we show how to use any VMAS scenario in RLlib. It reproduces the rllib.py script in the examples folder.

Install

To install the simulator, simply install the requirements using:

pip install -r requirements.txt

RLlib's dependencies are outdated, so next run:

pip install gym==0.22

and then install the package with:

pip install -e .
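After pinning gym, you may want to confirm which version actually ended up in your environment. The small helper below is not part of VMAS; it is a sketch that uses only the standard library's importlib.metadata and treats any 0.22.x release as satisfying the gym==0.22 pin (an assumption about how strict the check should be).

```python
from importlib import metadata


def installed_version(package):
    """Return the installed version string of `package`, or None if it is not installed."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def gym_pin_ok(version):
    """True if `version` belongs to the gym 0.22 release line (e.g. "0.22" or "0.22.0")."""
    if version is None:
        return False
    return version.split(".")[:2] == ["0", "22"]


if __name__ == "__main__":
    v = installed_version("gym")
    print(f"gym version: {v!r}, matches the 0.22 pin: {gym_pin_ok(v)}")
```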

Run

To use the simulator, simply create an environment by passing the name of the scenario you want (from the scenarios folder) to the make_env function. The function arguments are explained in the documentation. The function returns an environment object with the OpenAI Gym interface.

Here is an example:

```python
import vmas

# Additional arguments you want to pass to the scenario initialization
scenario_kwargs = {}

env = vmas.make_env(
    scenario_name="waterfall",
    num_envs=32,
    device="cpu",  # Or "cuda" for GPU
    continuous_actions=True,
    wrapper=None,  # One of: None, vmas.Wrapper.RLLIB, and vmas.Wrapper.GYM
    **scenario_kwargs,
)
```

A further example that you can run is contained in use_vmas_env.py in the examples directory.
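Stepping a vectorized environment means supplying an action for every agent in every parallel environment at once. The sketch below only illustrates that layout in plain Python, assuming a list with one entry per agent, each a batch of num_envs continuous 2D actions in [-1, 1]. With VMAS itself you would pass PyTorch tensors of shape (num_envs, action_dim), and the valid action range depends on the scenario; see use_vmas_env.py for the exact stepping code. random_batched_actions is a hypothetical helper, not part of VMAS.

```python
import random


def random_batched_actions(n_agents, num_envs, action_dim=2, low=-1.0, high=1.0, seed=None):
    """Hypothetical helper: one action batch per agent.

    Returns a list of `n_agents` items; each item holds `num_envs` action vectors of
    length `action_dim`, sampled uniformly in [low, high]. Nested lists stand in for
    the (num_envs, action_dim) tensors used by a vectorized simulator.
    """
    rng = random.Random(seed)
    return [
        [[rng.uniform(low, high) for _ in range(action_dim)] for _ in range(num_envs)]
        for _ in range(n_agents)
    ]


actions = random_batched_actions(n_agents=3, num_envs=32, seed=0)
print(len(actions), len(actions[0]), len(actions[0][0]))  # 3 32 2
```

Keeping the environment index as the leading dimension of each per-agent batch is what lets a vectorized engine apply a single tensor operation to all environments instead of looping over them.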

RLlib

To see how to use VMAS in RLlib, check out the script in examples/rllib.py.

Simulator features

- Vectorized: VMAS vectorization can step any number of environments in parallel. This significantly reduces the time needed to collect rollouts for training in MARL.

- Simple: Complex vectorized physics engines exist (e.g., Brax), but they do not scale efficiently when dealing with multiple agents. This defeats the computational speed goal set by vectorization. VMAS uses a simple custom 2D dynamics engine written in PyTorch to provide fast simulation.

- General: The core of VMAS is structured so that it can be used to implement general high-level multi-robot problems in 2D. It can support adversarial as well as cooperative scenarios. Holonomic point-robot simulation has been chosen to focus on general high-level problems, without learning low-level custom robot controls through MARL.

- Extensible: VMAS is not just a simulator with a set of environments. It is a framework that can be used to create new multi-agent scenarios in a format that is usable by the whole MARL community. For this purpose, we have modularized the process of creating a task and introduced interactive rendering to debug it. You can define your own scenario in minutes. Have a look at the dedicated section in this document.

- Compatible: VMAS has wrappers for RLlib and OpenAI Gym. RLlib has a large number of already implemented RL algorithms. Keep in mind that this interface is less efficient than the unwrapped version. For an example of wrapping, see the main of make_env.

- Entity shapes: Our entities (agents and landmarks) can have different customizable shapes (spheres, boxes, lines). All these shapes are supported for elastic collisions.

- Faster than physics engines: Our simulator is extremely lightweight, using only tensor operations. It is perfect for running MARL training at scale with multi-agent collisions and interactions.

- Customizable: When creating a new scenario of your own, the world, agents and landmarks are highly customizable. Examples are: drag, friction, gravity, simulation timestep, non-differentiable communication, agent sensors (e.g. LIDAR), and masses.

- Non-differentiable communication: Scenarios can require agents to perform discrete or continuous communication actions.

- Gravity: VMAS supports customizable gravity.

- Sensors: Our simulator implements ray casting, which can be used to simulate a wide range of distance-based sensors that can be added to agents. We currently support LIDARs. To see the available sensors, have a look at the sensors script.

- Joints: Our simulator supports joints. Joints are constraints that keep entities at a specified distance. The user can specify the anchor points on the two objects, the distance (including 0), the thickness of the joint, whether the joint is allowed to rotate at either anchor point, and whether the joint is collidable. Have a look at the waterfall scenario to see how you can use joints.
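The list above mentions elastic collisions, and the TODO list at the end notes that the simulation core is currently force-based. A common force-based contact model, used in OpenAI's MPE (which VMAS is inspired by), pushes overlapping discs apart with a smooth penetration penalty. The sketch below shows that idea for two discs in plain Python; it is an illustration under that assumption, not VMAS's implementation, and the defaults contact_force=100 and contact_margin=1e-3 are MPE's values.

```python
import math


def soft_contact_force(pos_a, pos_b, radius_a, radius_b, contact_force=100.0, contact_margin=1e-3):
    """MPE-style soft contact force on disc A from disc B (disc B receives the opposite force).

    penetration = k * softplus(-(dist - dist_min) / k) is a smooth version of
    max(0, dist_min - dist): near zero when the discs are apart and growing roughly
    linearly with the overlap once they touch.
    """
    dx, dy = pos_a[0] - pos_b[0], pos_a[1] - pos_b[1]
    dist = math.hypot(dx, dy)
    if dist == 0.0:
        return (0.0, 0.0)  # Direction is undefined for coincident centres; skip.
    dist_min = radius_a + radius_b
    k = contact_margin
    x = -(dist - dist_min) / k
    # Numerically stable softplus: log(1 + e^x) without overflowing for large x.
    softplus = x + math.log1p(math.exp(-x)) if x > 0 else math.log1p(math.exp(x))
    penetration = k * softplus
    # Force along the unit vector from B to A, scaled by the penetration depth.
    scale = contact_force * penetration / dist
    return (scale * dx, scale * dy)


print(soft_contact_force((0.0, 0.0), (0.1, 0.0), 0.1, 0.1))  # A is pushed towards -x
```

The smooth penalty keeps the force differentiable with respect to positions, at the cost of letting bodies overlap slightly; an impulse-based core (as the TODO list proposes) would instead resolve contacts by directly changing velocities.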
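The Sensors item says LIDARs are built on ray casting. As a rough illustration of that idea (not VMAS's sensors code, which works on batched tensors and also handles boxes and lines), the sketch below casts evenly spaced rays from a point against circular entities and reports, per ray, the distance to the nearest hit, capped at a maximum range. The function names and the convention of returning max_range when a ray hits nothing are assumptions.

```python
import math


def ray_circle_distance(origin, angle, center, radius):
    """Distance along the ray from `origin` at `angle` to the first hit on a circle, or None."""
    dx, dy = math.cos(angle), math.sin(angle)  # unit ray direction
    mx, my = origin[0] - center[0], origin[1] - center[1]
    # Solve |m + t*d|^2 = r^2 for t, i.e. t^2 + 2*b*t + c = 0 with |d| = 1.
    b = mx * dx + my * dy
    c = mx * mx + my * my - radius * radius
    disc = b * b - c
    if disc < 0.0:
        return None  # the ray's line misses the circle
    sqrt_disc = math.sqrt(disc)
    t = -b - sqrt_disc  # nearer intersection
    if t < 0.0:
        t = -b + sqrt_disc  # origin inside the circle: use the exit point
    return t if t >= 0.0 else None  # both intersections behind the origin


def lidar_scan(origin, circles, n_rays=8, max_range=1.0):
    """Cast `n_rays` evenly spaced rays; each reading is the closest hit, capped at max_range."""
    readings = []
    for i in range(n_rays):
        angle = 2.0 * math.pi * i / n_rays
        hits = [ray_circle_distance(origin, angle, c, r) for c, r in circles]
        hits = [h for h in hits if h is not None and h <= max_range]
        readings.append(min(hits) if hits else max_range)
    return readings


print(lidar_scan((0.0, 0.0), [((0.5, 0.0), 0.1)], n_rays=4))
```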

Creating a new scenario

To create a new scenario, just extend the BaseScenario class in scenario.

You will need to implement at least make_world, reset_world_at, observation, and reward. Optionally, you can also implement done and info.

To know how, just read the documentation of BaseScenario and look at the implemented scenarios.
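To make the required and optional methods concrete, here is a minimal, self-contained sketch. So that it runs without VMAS installed, it defines a hypothetical stand-in BaseScenario with Python's abc module; in a real scenario you would subclass the actual BaseScenario from scenario instead. The method names follow this README, while the argument lists (batch_dim, device, env_index), the placeholder world, and the default return values of done and info are assumptions; check BaseScenario's documentation for the real signatures.

```python
from abc import ABC, abstractmethod


class BaseScenario(ABC):
    """Hypothetical stand-in for VMAS's BaseScenario so this sketch runs on its own.

    Method names follow the README; argument lists are assumptions.
    """

    @abstractmethod
    def make_world(self, batch_dim, device, **kwargs):
        """Create and return the world with its agents and landmarks."""

    @abstractmethod
    def reset_world_at(self, env_index=None):
        """Reset one batched environment, or all of them when env_index is None."""

    @abstractmethod
    def observation(self, agent):
        """Return the observation of `agent` for every batched environment."""

    @abstractmethod
    def reward(self, agent):
        """Return the reward of `agent` for every batched environment."""

    # Optional hooks with placeholder defaults; override them only if needed.
    def done(self):
        return None

    def info(self, agent):
        return {}


class MyScenario(BaseScenario):
    """Minimal concrete scenario: every required method is implemented."""

    def make_world(self, batch_dim, device, **kwargs):
        self.batch_dim = batch_dim
        return {"agents": ["agent_0"], "landmarks": []}  # placeholder world

    def reset_world_at(self, env_index=None):
        pass  # nothing to reset in this placeholder world

    def observation(self, agent):
        return [[0.0, 0.0] for _ in range(self.batch_dim)]

    def reward(self, agent):
        return [0.0] * self.batch_dim
```

Because the four required methods are abstract, forgetting one of them makes instantiating the subclass fail immediately with a TypeError, while done and info can be left out.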

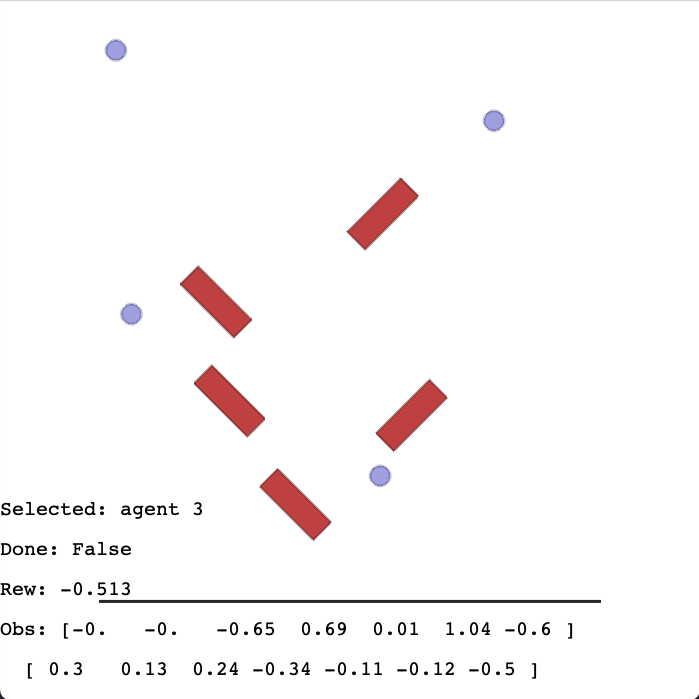

Play a scenario

You can play with a scenario interactively!

Just use the render_interactively script. Relevant values will be plotted to screen.

Move the agent with the arrow keys and switch agents with TAB. You can reset the environment by pressing R.

If you have more than 1 agent, you can control another one with W,A,S,D and switch the second agent using LSHIFT.

On the screen you will see some data from the agent controlled with arrow keys. This data includes: name, current obs, current reward, total reward so far and environment done flag.
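How the interactive script turns key presses into actions is not described here. As a hypothetical illustration only, the sketch below assumes continuous 2D actions in [-1, 1], where each held arrow key (or W, A, S, D for the second agent) contributes a unit direction and opposite keys cancel out. The key names and the keys_to_action helper are made up for this example and are not part of render_interactively.

```python
# Hypothetical key names mapped to unit directions (x, y).
ARROW_KEYS = {"UP": (0.0, 1.0), "DOWN": (0.0, -1.0), "LEFT": (-1.0, 0.0), "RIGHT": (1.0, 0.0)}
WASD_KEYS = {"W": (0.0, 1.0), "S": (0.0, -1.0), "A": (-1.0, 0.0), "D": (1.0, 0.0)}


def keys_to_action(pressed, keymap):
    """Sum the directions of the held keys in `keymap` and clip each component to [-1, 1]."""
    x = sum(keymap[k][0] for k in pressed if k in keymap)
    y = sum(keymap[k][1] for k in pressed if k in keymap)
    return (max(-1.0, min(1.0, x)), max(-1.0, min(1.0, y)))


# The first agent uses the arrow keys, the second agent uses W, A, S, D.
print(keys_to_action({"UP", "RIGHT"}, ARROW_KEYS))  # (1.0, 1.0)
print(keys_to_action({"A"}, WASD_KEYS))  # (-1.0, 0.0)
```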

Here is an overview of what it looks like:

Rendering

To render the environment, just call the render or the try_render_at functions (depending on environment wrapping).

Example:

```python
env.render(
    mode="rgb_array",  # "rgb_array" returns an image, "human" renders in a display
    agent_index_focus=4,  # If None, keep all agents in camera; else focus the camera on a specific agent
    index=0,  # Index of the batched environment to render
    visualize_when_rgb=False,  # Also run human visualization when mode == "rgb_array"
)
```

| agent_index_focus | Camera behavior |
|---|---|
| None | The camera keeps focus on all agents |
| 0 | The camera follows agent 0 |
| 4 | The camera follows agent 4 |

Rendering on server machines

To render on machines without a display, use mode="rgb_array". Make sure you have OpenGL and Pyglet installed.

To enable rendering on headless machines you should install EGL.

If you do not have EGL, you need to create a fake screen. You can do this by running these commands before the script:

export DISPLAY=':99.0'

Xvfb :99 -screen 0 1400x900x24 > /dev/null 2>&1 &

or in this way:

xvfb-run -s "-screen 0 1400x900x24" python <your_script.py>

To create a fake screen you need to have Xvfb installed.
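If the same script runs both on a workstation and on a server, a small standard-library helper can choose the render mode and build the xvfb-run command from this section. This is a sketch, not part of VMAS: pick_render_mode simply assumes that a set DISPLAY variable means a usable display (note that a fake Xvfb screen also sets DISPLAY), and xvfb_run_command returns an argument list suitable for subprocess.run.

```python
import os
import shlex


def pick_render_mode(env=None):
    """Return "human" when an X display is configured, else "rgb_array" (headless)."""
    env = os.environ if env is None else env
    return "human" if env.get("DISPLAY") else "rgb_array"


def xvfb_run_command(script, screen="0 1400x900x24"):
    """Build the xvfb-run invocation as an argument list (no shell quoting needed)."""
    return ["xvfb-run", "-s", f"-screen {screen}", "python", script]


cmd = xvfb_run_command("my_script.py")
print(shlex.join(cmd))  # xvfb-run -s '-screen 0 1400x900x24' python my_script.py
```

Passing a list instead of a shell string sidesteps the quoting problem entirely: the whole "-screen 0 1400x900x24" value reaches xvfb-run as a single argument.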

List of environments

VMAS

- dropout
- football
- transport
- wheel
- balance
- reverse
- give_way
- passage
- dispersion
- waterfall
- flocking
- discovery

MPE

| Env name in code (name in paper) | Communication? | Competitive? | Notes |
|---|---|---|---|
| simple.py | N | N | Single agent sees landmark position, rewarded based on how close it gets to the landmark. Not a multi-agent environment; used for debugging policies. |
| simple_adversary.py (Physical deception) | N | Y | 1 adversary (red), N good agents (green), N landmarks (usually N=2). All agents observe the positions of landmarks and other agents. One landmark is the ‘target landmark’ (colored green). Good agents are rewarded based on how close one of them is to the target landmark, but negatively rewarded if the adversary is close to the target landmark. The adversary is rewarded based on how close it is to the target, but it doesn’t know which landmark is the target landmark. So good agents have to learn to ‘split up’ and cover all landmarks to deceive the adversary. |
| simple_crypto.py (Covert communication) | Y | Y | Two good agents (alice and bob), one adversary (eve). Alice must send a private message to bob over a public channel. Alice and bob are rewarded based on how well bob reconstructs the message, but negatively rewarded if eve can reconstruct the message. Alice and bob have a private key (randomly generated at the beginning of each episode), which they must learn to use to encrypt the message. |
| simple_push.py (Keep-away) | N | Y | 1 agent, 1 adversary, 1 landmark. The agent is rewarded based on its distance to the landmark. The adversary is rewarded if it is close to the landmark and if the agent is far from the landmark. So the adversary learns to push the agent away from the landmark. |
| simple_reference.py | Y | N | 2 agents, 3 landmarks of different colors. Each agent wants to get to its target landmark, which is known only by the other agent. Reward is collective. So agents have to learn to communicate the goal of the other agent and navigate to their own landmark. This is the same as the simple_speaker_listener scenario, except that both agents are simultaneous speakers and listeners. |
| simple_speaker_listener.py (Cooperative communication) | Y | N | Same as simple_reference, except one agent is the ‘speaker’ (gray) that does not move (observes the goal of the other agent), and the other agent is the listener (cannot speak, but must navigate to the correct landmark). |
| simple_spread.py (Cooperative navigation) | N | N | N agents, N landmarks. Agents are rewarded based on how far any agent is from each landmark. Agents are penalized if they collide with other agents. So agents have to learn to cover all the landmarks while avoiding collisions. |
| simple_tag.py (Predator-prey) | N | Y | Predator-prey environment. Good agents (green) are faster and want to avoid being hit by adversaries (red). Adversaries are slower and want to hit good agents. Obstacles (large black circles) block the way. |
| simple_world_comm.py | Y | Y | Environment seen in the video accompanying the paper. Same as simple_tag, except (1) there is food (small blue balls) that the good agents are rewarded for being near, (2) there are ‘forests’ that hide agents inside from being seen from outside, and (3) there is a ‘leader adversary’ that can see the agents at all times and can communicate with the other adversaries to help coordinate the chase. |
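To make the simple_spread description concrete, the sketch below computes a reward in that spirit: a shared coverage term (minus the sum, over landmarks, of the distance to the closest agent) plus a penalty for each other agent overlapping this one. It is a plain-Python illustration, not the VMAS or MPE source; the penalty of 1 per collision, the agent radius of 0.15, and the choice not to count an agent as colliding with itself are assumptions of this sketch.

```python
import math


def spread_reward(agent_idx, agent_pos, landmark_pos, agent_radius=0.15, collision_penalty=1.0):
    """simple_spread-style reward for agent `agent_idx` (illustrative sketch).

    Coverage: minus the sum over landmarks of the distance from each landmark to its
    closest agent (the same value for every agent). Collisions: minus
    `collision_penalty` for every other agent whose disc overlaps this agent's disc.
    """
    coverage = -sum(min(math.dist(a, lm) for a in agent_pos) for lm in landmark_pos)
    me = agent_pos[agent_idx]
    collisions = sum(
        1
        for j, other in enumerate(agent_pos)
        if j != agent_idx and math.dist(me, other) < 2 * agent_radius
    )
    return coverage - collision_penalty * collisions


# Two agents close together: one landmark is covered, the other is 0.8 away, and they collide.
print(spread_reward(0, [(0.0, 0.0), (0.2, 0.0)], [(0.0, 0.0), (1.0, 0.0)]))
```

Because the coverage term is shared, agents only improve it by spreading out over different landmarks, while the collision term discourages them from doing so by crowding each other.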

TODOS

- Link video of experiments

- Add LIDAR section

- Custom actions for scenario

- Implement LIDAR

- Implement 1D camera sensor

- Move simulation core from force-based to impulse-based

- Vectorization in the agents (improve collision loop)

- Make football heuristic efficient

- Rewrite all MPE scenarios
  - simple
  - simple_adversary
  - simple_crypto
  - simple_push
  - simple_reference
  - simple_speaker_listener
  - simple_spread
  - simple_tag
  - simple_world_comm