GCP exams focus more on how to develop and implement comprehensive solutions than on knowing the minutiae of the services. The goal of this project is to connect services together into a comprehensive solution. Plus, the end result is kind of cool.

- Create a Cloud Storage bucket.

- Create a Cloud Function with this code. The code takes any image file from the bucket and submits it to Google's Machine Learning APIs.

- Configure the Cloud Function to trigger when a file is uploaded to the bucket. (This is the trigger to look into: google.storage.object.finalize)

- Optional: Create a Cloud Datastore table where the results of the API call can be written. (There is no code for this. It's optional, so it should be a bit less plug and play. This is a chance to get more familiar with GCP's SDKs, which, while not a major component of the exam, are extremely important for practicing DevOps in the environment.)

The solution should include the following GCP services:

- Cloud Storage Buckets

- Cloud Function

- Cloud Vision API

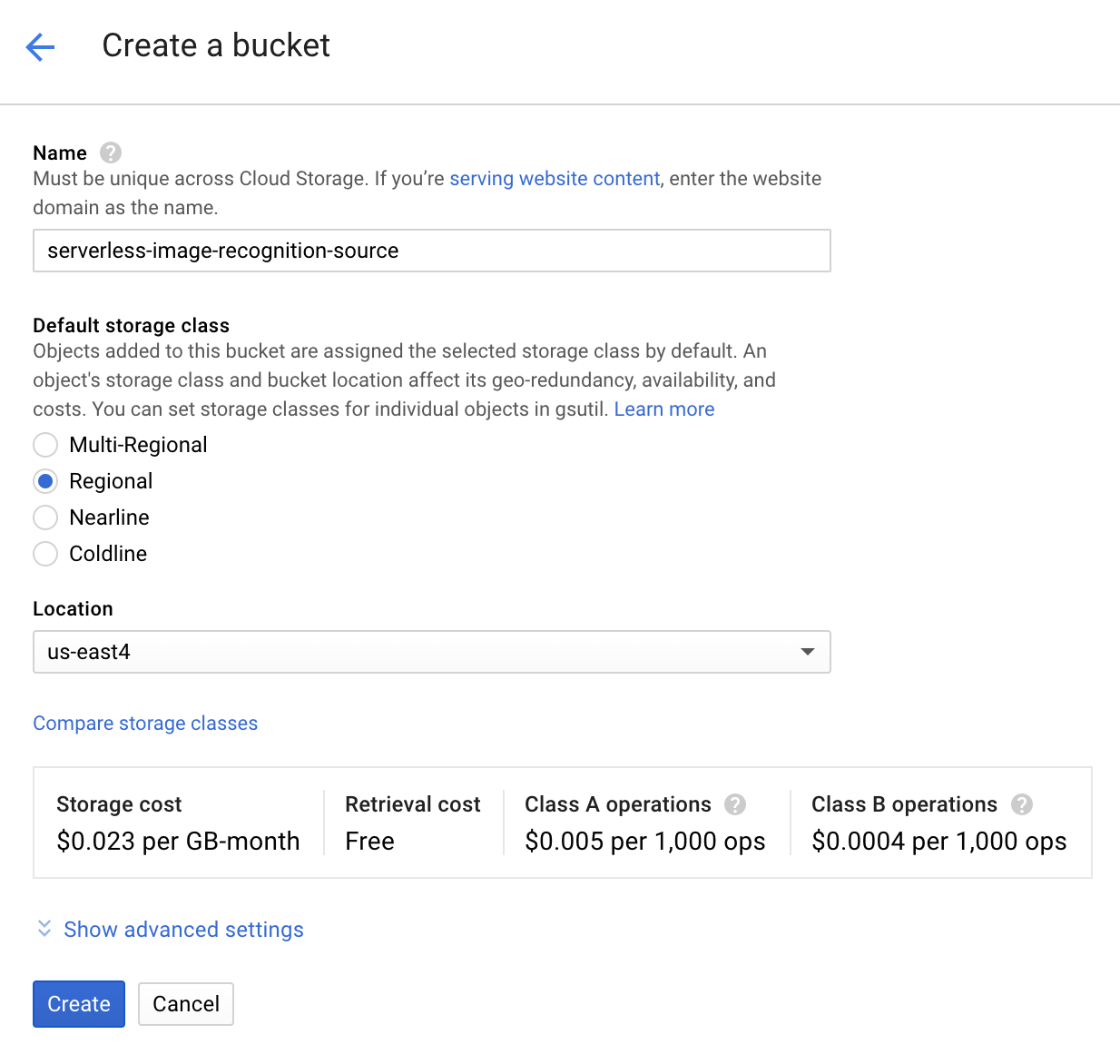

- Create a new bucket. Since this is a learning exercise, which type of bucket should we use?

- Go to Cloud Functions in the console.

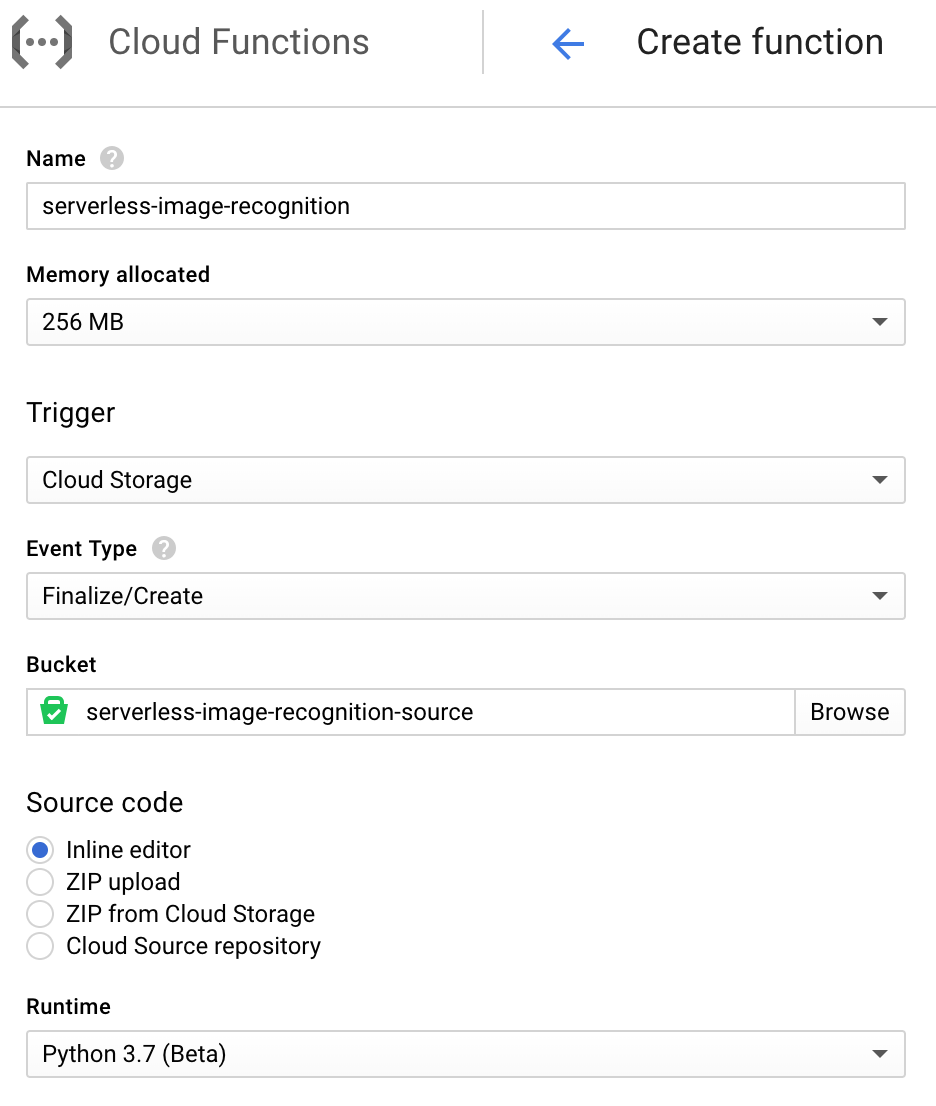

- Click + Create Function.

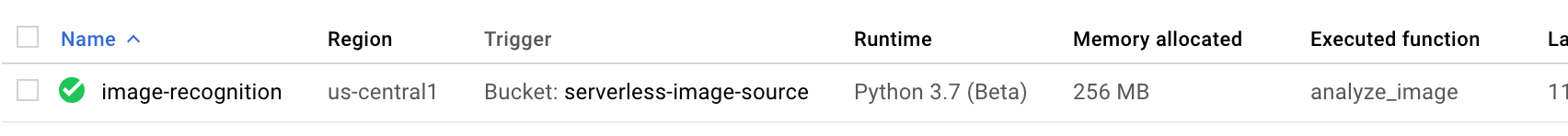

- After naming the function (leave the memory allocation at the default 256 MB), adjust the trigger. By default, it's set to HTTP, but we will change that to Cloud Storage. The Event Type will default to Finalize/Create, which is what we want for this project. Take a look at the other trigger event options. What are some use cases for those functionalities?

Select the bucket created in step 1 as the Bucket, and leave the Source code set to inline editor; we will be adding that shortly. Finally, change the Runtime to Python 3.7. It is currently in beta, which is fine for our purposes.

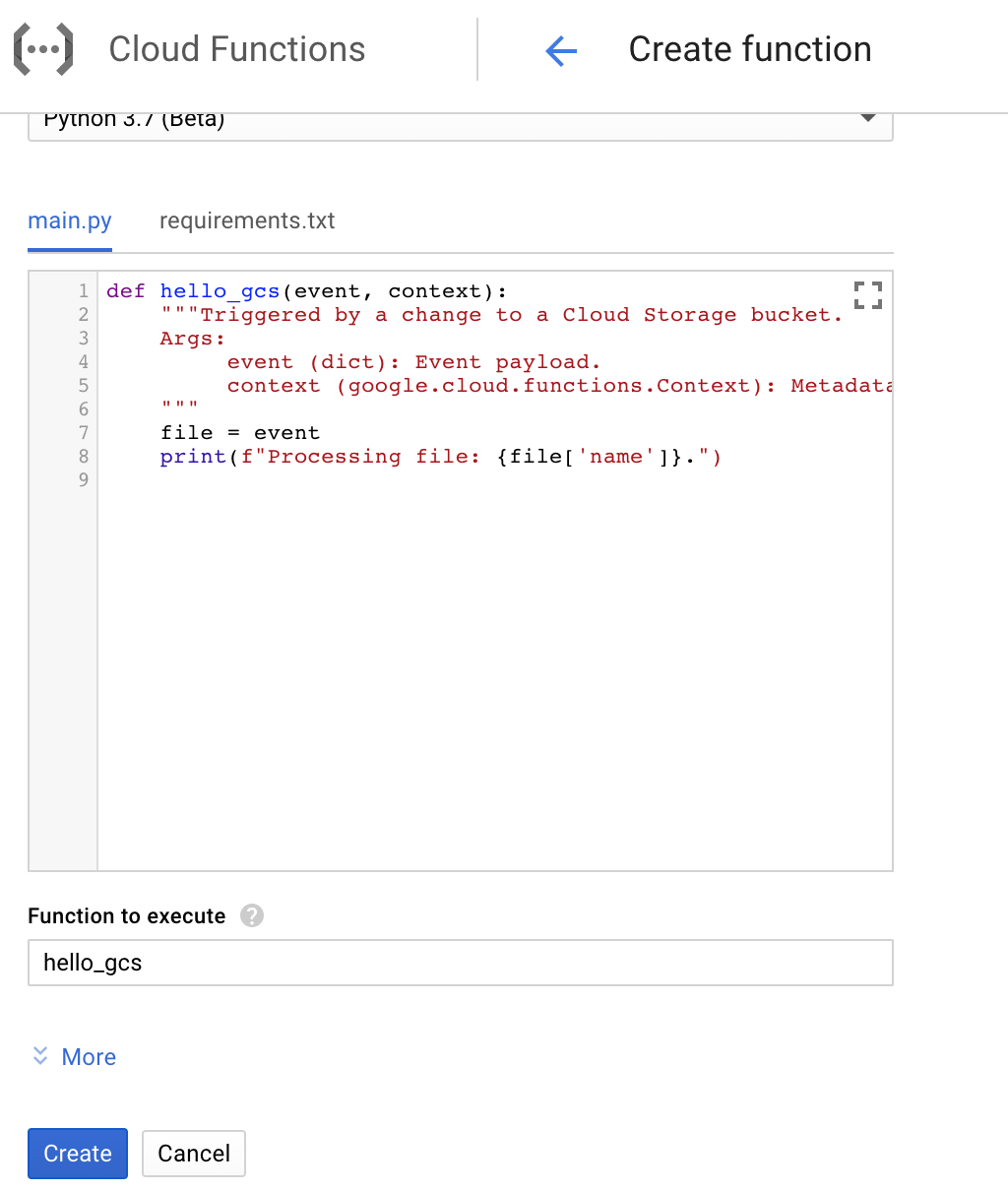
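To make the trigger more concrete, here is a minimal sketch of how a Python function might read the event it receives when an object is finalized. The field names (`bucket`, `name`, `contentType`) follow the Cloud Storage object resource; the helper name `describe_event` and the sample values are made up for illustration.

```python
def describe_event(event):
    """Summarise a Cloud Storage event dict (hypothetical helper).

    A finalize event carries the object's metadata; only a few
    fields are read here, and missing ones fall back to defaults.
    """
    bucket = event.get("bucket", "<unknown bucket>")
    name = event.get("name", "<unknown object>")
    content_type = event.get("contentType", "application/octet-stream")
    return "gs://{}/{} ({})".format(bucket, name, content_type)


# Made-up sample payload, trimmed to the fields used above.
sample_event = {
    "bucket": "my-learning-bucket",
    "name": "iguanas.jpg",
    "contentType": "image/jpeg",
}
print(describe_event(sample_event))
```

Logging a one-line summary like this at the start of a function can make the logs easier to match to a specific upload.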

- Scroll down to the lower half of the page. You will see some prebuilt code. We won't be using it. Before just copying and pasting from this repo, though, let's take a look at the code and talk about the services being used.

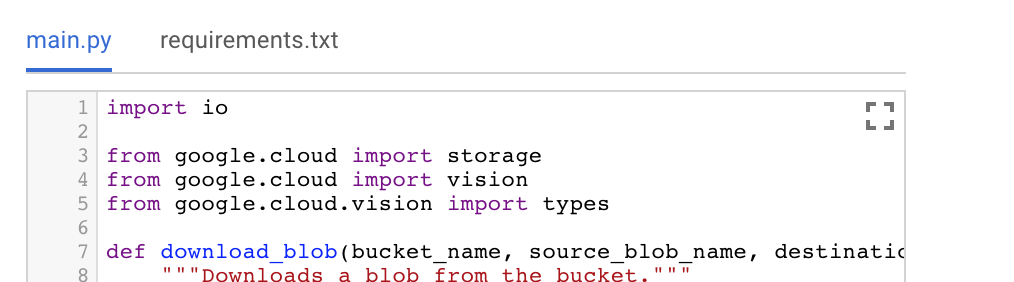

Take a look at the code in function.py in this repo. The GCP exams don't require a deep understanding of code, but they do include some snippets of Python, so some familiarity will be helpful.

Lines 3-5

    from google.cloud import storage
    from google.cloud import vision
    from google.cloud.vision import types

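These lines import the Google Cloud SDKs. (The types import works only with google-cloud-vision releases before 2.0; newer releases expose the same classes directly, such as vision.Image.) Make sure that any modules you would have to pip install (non-native modules) are also listed in requirements.txt, which tells the Cloud Function which packages it needs to install: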

    # Function dependencies
    google-cloud-storage
    google-cloud-vision

The first function pulls the object from the bucket into the cloud function compute space. It's worth noting that you can read and write objects into the /tmp directory of a cloud function, but that will not persist past the run of the function, so make sure anything you want to keep is written out to a bucket. You can see more about the code here.

    def download_blob(bucket_name, source_blob_name, destination_file_name):
        """Downloads a blob from the bucket."""
        storage_client = storage.Client()
        bucket = storage_client.get_bucket(bucket_name)
        blob = bucket.blob(source_blob_name)

        blob.download_to_filename(destination_file_name)

        print('Blob {} downloaded to {}.'.format(
            source_blob_name,
            destination_file_name))

        return destination_file_name
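Object names can contain slashes (for example `uploads/2018/iguanas.jpg`), so using the raw name as a path under /tmp may point at a directory that does not exist. Below is a minimal sketch of one way to build the destination path and clean up afterwards. `tmp_path_for` and `remove_quietly` are hypothetical helpers, not part of function.py.

```python
import os
import tempfile


def tmp_path_for(object_name):
    """Map an object name to a flat file path in the temp directory."""
    # Keep only the last path component so no subdirectories are needed.
    base = os.path.basename(object_name) or "object"
    return os.path.join(tempfile.gettempdir(), base)


def remove_quietly(path):
    """Delete a temp file if it exists; return True if something was removed."""
    try:
        os.remove(path)
        return True
    except FileNotFoundError:
        return False
```

The result of `tmp_path_for` could be passed as `destination_file_name` to `download_blob`. Note that two objects with the same file name in different "folders" would map to the same temp file, which is acceptable for a sketch but worth handling in real code.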

The second function makes the call to the cloud vision API. You'll notice all we are doing with the results is printing them. We'll talk more about this later. You can see more about the code here.

    def detect_labels(destination_file_name):
        # Instantiates a client
        client = vision.ImageAnnotatorClient()

        # The name of the image file to annotate
        file_name = destination_file_name

        # Loads the image into memory
        with io.open(file_name, 'rb') as image_file:
            content = image_file.read()

        image = types.Image(content=content)

        # Performs label detection on the image file
        response = client.label_detection(image=image)
        labels = response.label_annotations

        print('Labels:')
        for label in labels:
            print(label.description)
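The walkthrough shows the two helpers but not the entry point that the trigger calls. The sketch below is one guess at how it might tie them together; function.py in the repo may do this differently. The name `analyze_image` matches the Function to execute setting used in this tutorial. `make_handler` and the content-type check are assumptions added for illustration, and the helpers are passed in as arguments so the sketch can be tried without GCP credentials.

```python
import os
import tempfile


def make_handler(download, detect):
    """Build an entry point from the two helpers (hypothetical wiring).

    `download` is expected to behave like download_blob and `detect`
    like detect_labels from the walkthrough.
    """
    def analyze_image(event, context):
        bucket_name = event["bucket"]
        object_name = event["name"]

        # Skip anything the bucket does not report as an image.
        if not event.get("contentType", "").startswith("image/"):
            print("Skipping non-image object {}".format(object_name))
            return None

        # Download into the temp directory (/tmp in a Cloud Function).
        local_path = os.path.join(
            tempfile.gettempdir(), os.path.basename(object_name))
        download(bucket_name, object_name, local_path)
        return detect(local_path)

    return analyze_image


# In main.py this might be wired up as:
# analyze_image = make_handler(download_blob, detect_labels)
```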

- Copy the code from function.py into the main.py tab, and the contents of requirements.txt into the requirements.txt tab.

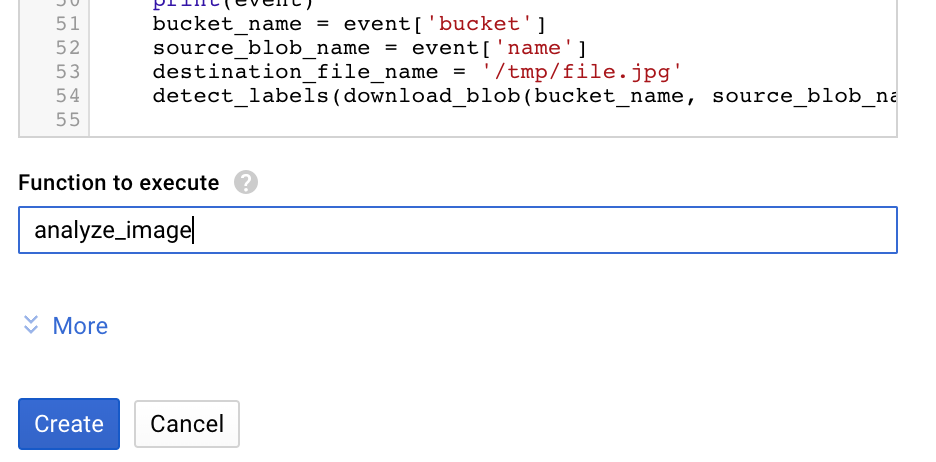

- Change the Function to execute from hello_gcs to analyze_image. This tells the Cloud Function which function it is actually going to run. Create the function; it will take a few minutes to deploy.

- While that function is deploying, we have to enable the Cloud Vision API. Go to the API Library in the APIs and Services section of the menu.

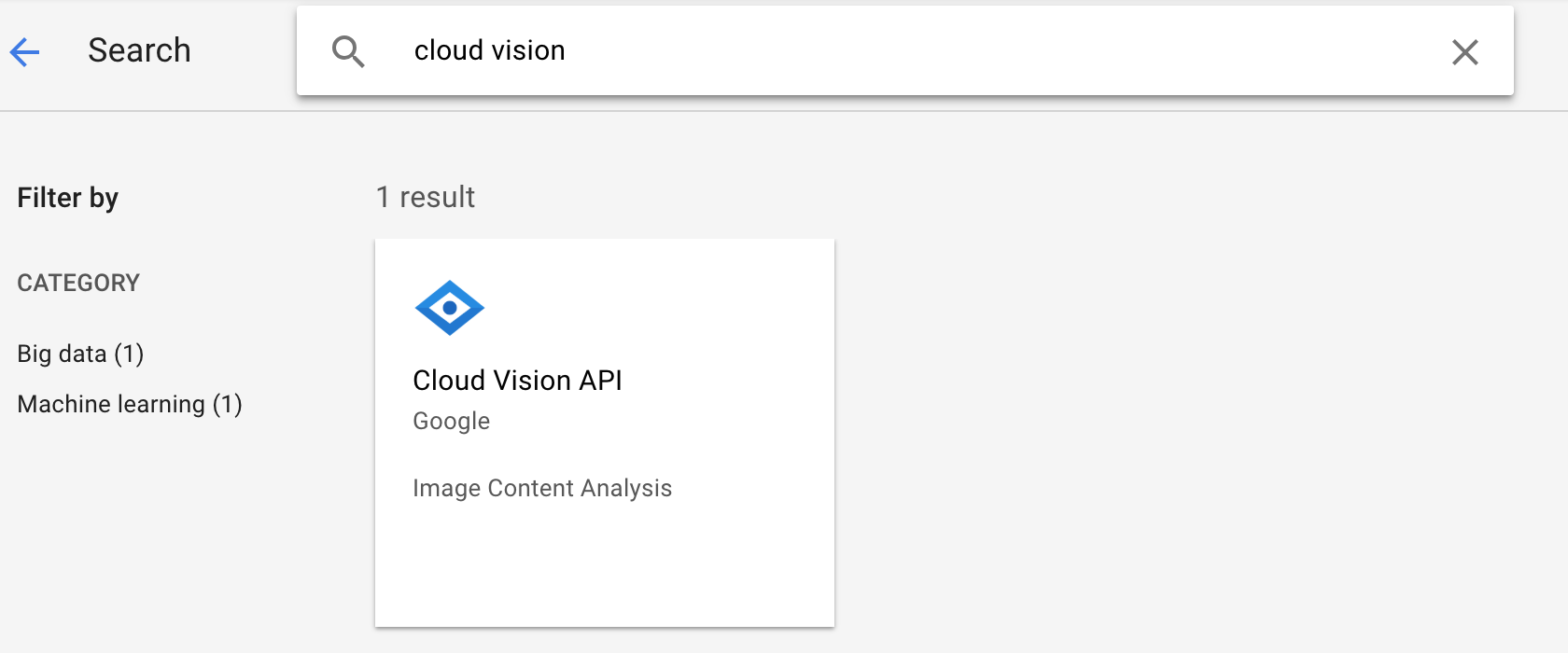

- Search for cloud vision.

- Click to Enable API.

- Now that the function is deployed, let's try it out. I found an image of some cute iguanas:

(source: https://www.theguardian.com/news/gallery/2018/nov/08/baby-iguanas-diwali-lights-thursdays-best-photos)


I will drop this in my bucket through the console.

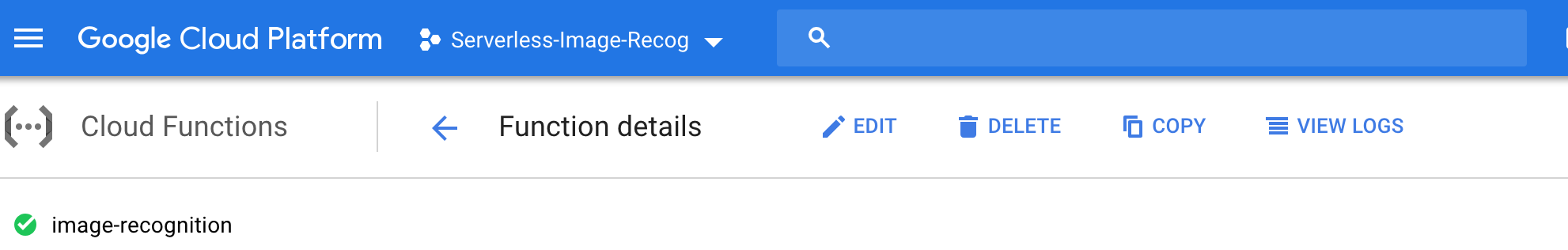
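The same upload can also be done from the SDK, which is a small way to practice with the storage client. This is a minimal sketch: `upload_image` is a hypothetical helper, and the bucket and file names in the usage comment are placeholders.

```python
import os


def upload_image(bucket, local_path, destination_name=None):
    """Upload a local file to a bucket object and return the object name.

    `bucket` is expected to be a google.cloud.storage Bucket, or anything
    with a compatible blob() method. The helper itself is hypothetical.
    """
    name = destination_name or os.path.basename(local_path)
    blob = bucket.blob(name)
    blob.upload_from_filename(local_path)
    return name


# Usage, assuming google-cloud-storage is installed and credentials are set up:
# from google.cloud import storage
# bucket = storage.Client().bucket("my-learning-bucket")  # placeholder name
# upload_image(bucket, "iguanas.jpg")                     # placeholder file
```

Either way, the upload produces a finalize event on the bucket, so the function should run just as it does for a console upload.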

- Since the function prints its results, we can just check the console output. In Cloud Functions, these logs are aggregated in Stackdriver. You can get to the logs by clicking on view logs at the top of the Cloud Function details page:

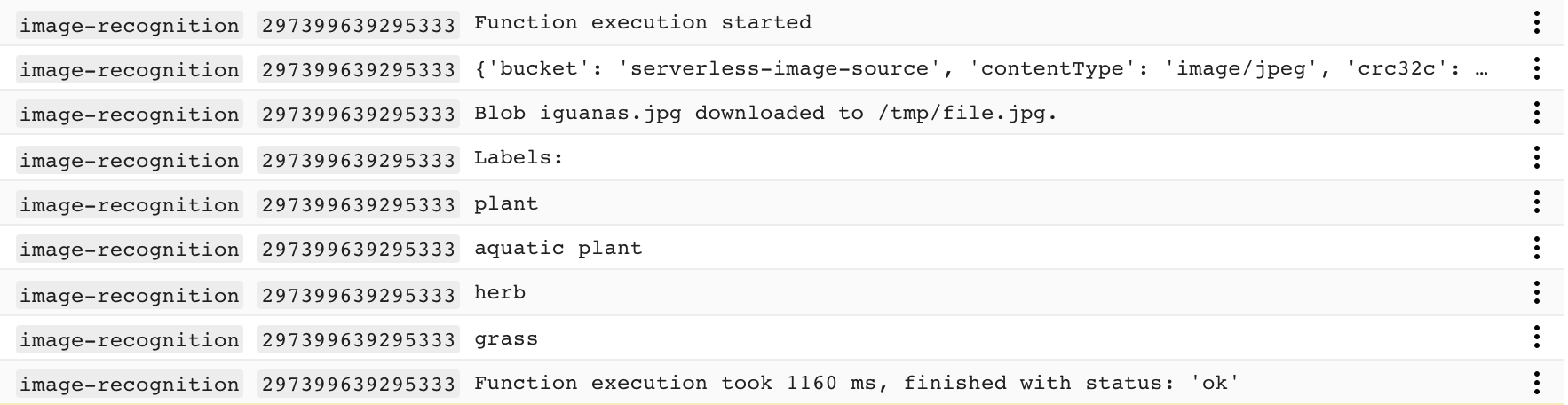

- Your logs should look something like this. Obviously, the results under Labels will vary depending on the image you upload.

Bonus: Right now, we're just looking at the results in Stackdriver, but what else could we do with them? Try updating the Cloud Function code to write the results to a Pub/Sub topic or a Datastore database.
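As a starting point for the Datastore variant, here is a minimal sketch, not a finished solution. It assumes `google-cloud-datastore` is added to requirements.txt and that `detect_labels` is changed to return the label annotations instead of only printing them. The function names, the `ImageLabels` kind, and the record layout are all made up for illustration.

```python
import datetime


def build_label_record(bucket_name, object_name, labels):
    """Turn label annotations into a plain dict that is easy to store.

    `labels` is any iterable of objects with `description` and `score`
    attributes, which is the shape of Vision API label annotations.
    """
    labels = list(labels)  # allow generators; we iterate twice below
    return {
        "bucket": bucket_name,
        "object": object_name,
        "labels": [label.description for label in labels],
        "scores": [round(float(label.score), 3) for label in labels],
        "analyzed_at": datetime.datetime.now(datetime.timezone.utc),
    }


def save_to_datastore(record, kind="ImageLabels"):
    """Write the record as one Datastore entity keyed by object path."""
    # Imported lazily so build_label_record can be tried without the SDK.
    from google.cloud import datastore

    client = datastore.Client()
    key = client.key(kind, "{}/{}".format(record["bucket"], record["object"]))
    entity = datastore.Entity(key=key)
    entity.update(record)
    client.put(entity)
    return key
```

Keeping the record builder separate from the write makes it easy to send the same dict to a Pub/Sub topic instead, for example after serializing it to JSON.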