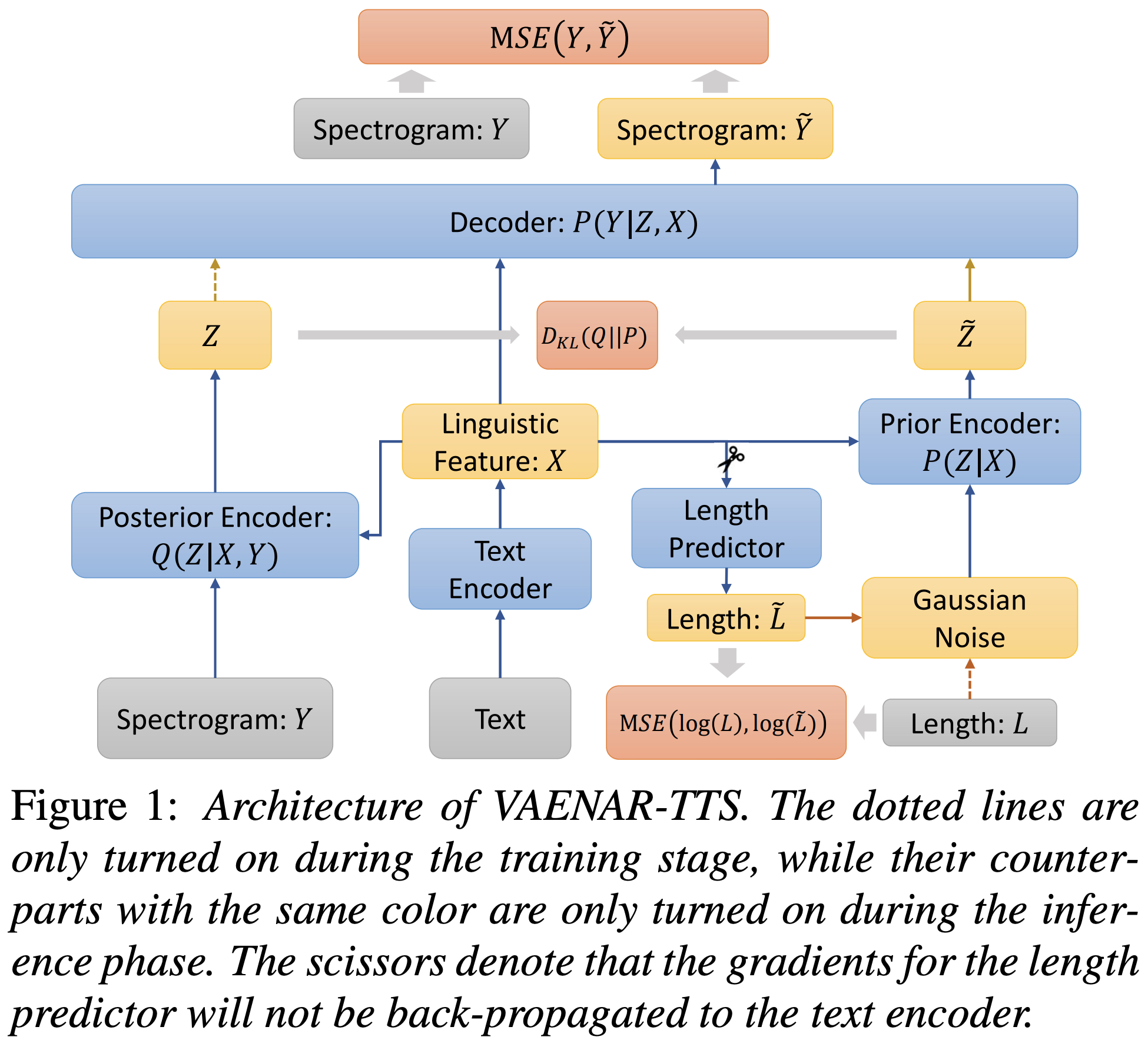

PyTorch Implementation of VAENAR-TTS: Variational Auto-Encoder based Non-AutoRegressive Text-to-Speech Synthesis.

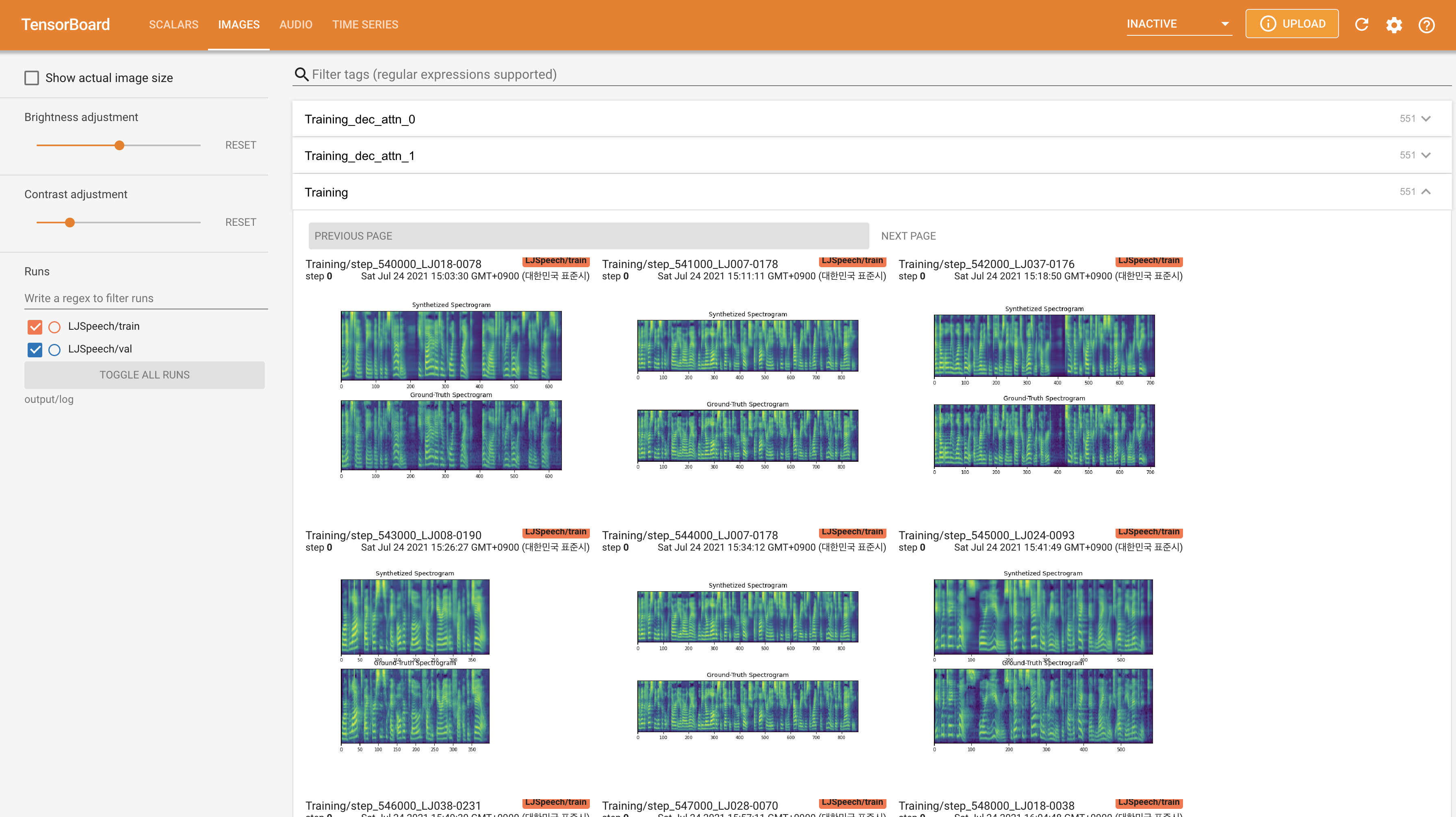

The validation logs of the synthesized mel-spectrogram and alignment, up to 70K steps, are shown below (LJSpeech_val_dec_attn_0_LJ029-0157 and LJSpeech_val_step_LJ029-0157, from top to bottom).

You can install the Python dependencies with

pip3 install -r requirements.txt

You have to download the pretrained models and put them in output/ckpt/LJSpeech/.

For English single-speaker TTS, run

python3 synthesize.py --text "YOUR_DESIRED_TEXT" --restore_step RESTORE_STEP --mode single -p config/LJSpeech/preprocess.yaml -m config/LJSpeech/model.yaml -t config/LJSpeech/train.yaml

The generated utterances will be put in output/result/.

Batch inference is also supported. Try

python3 synthesize.py --source preprocessed_data/LJSpeech/val.txt --restore_step RESTORE_STEP --mode batch -p config/LJSpeech/preprocess.yaml -m config/LJSpeech/model.yaml -t config/LJSpeech/train.yaml

to synthesize all utterances in preprocessed_data/LJSpeech/val.txt.
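The single and batch commands differ only in the --text/--source argument and the --mode value, while the three config paths follow the same config/DATASET/ pattern. A minimal sketch of a helper that assembles either command is given below. The function name build_synthesize_cmd and the step value 70000 are illustrative assumptions and are not part of this repository; only the flags shown in the commands above are used.

```python
import shlex


def build_synthesize_cmd(dataset, restore_step, text=None, source=None):
    """Return the argument list for synthesize.py (hypothetical helper).

    Pass `text` for single mode or `source` for batch mode, not both.
    """
    if (text is None) == (source is None):
        raise ValueError("pass exactly one of text (single) or source (batch)")

    cmd = ["python3", "synthesize.py"]
    if text is not None:
        cmd += ["--text", text]
        mode = "single"
    else:
        cmd += ["--source", source]
        mode = "batch"
    cmd += ["--restore_step", str(restore_step), "--mode", mode]

    # The three config files live under config/<dataset>/ in the commands above.
    config_dir = f"config/{dataset}"
    cmd += [
        "-p", f"{config_dir}/preprocess.yaml",
        "-m", f"{config_dir}/model.yaml",
        "-t", f"{config_dir}/train.yaml",
    ]
    return cmd


if __name__ == "__main__":
    # 70000 is a placeholder; use the step of the checkpoint you downloaded.
    print(shlex.join(build_synthesize_cmd("LJSpeech", 70000, text="Hello world")))
```

The returned list can be passed to subprocess.run(cmd, check=True) from the repository root.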

The supported datasets are

- LJSpeech: a single-speaker English dataset consisting of 13,100 short audio clips of a female speaker reading passages from 7 non-fiction books, approximately 24 hours in total.

First, run

python3 prepare_align.py config/LJSpeech/preprocess.yaml

for some preparations. Then run the preprocessing script:

python3 preprocess.py config/LJSpeech/preprocess.yaml

Train your model with

python3 train.py -p config/LJSpeech/preprocess.yaml -m config/LJSpeech/model.yaml -t config/LJSpeech/train.yaml
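The preparation, preprocessing, and training commands must run in that order, so it can be convenient to chain them. The sketch below is one way to do that, assuming it is run from the repository root. The names pipeline_commands and run_pipeline are hypothetical, and the script defaults to a dry run that only prints the commands.

```python
import subprocess


def pipeline_commands(dataset="LJSpeech"):
    """Return the three commands from this README, in execution order."""
    preprocess_cfg = f"config/{dataset}/preprocess.yaml"
    model_cfg = f"config/{dataset}/model.yaml"
    train_cfg = f"config/{dataset}/train.yaml"
    return [
        ["python3", "prepare_align.py", preprocess_cfg],
        ["python3", "preprocess.py", preprocess_cfg],
        ["python3", "train.py", "-p", preprocess_cfg, "-m", model_cfg, "-t", train_cfg],
    ]


def run_pipeline(dataset="LJSpeech", dry_run=True):
    """Print each command and, unless dry_run is set, execute it.

    check=True stops the pipeline at the first failing step, so training
    never starts on partially preprocessed data.
    """
    for cmd in pipeline_commands(dataset):
        print(" ".join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run_pipeline(dry_run=True)
```

Set dry_run=False to actually execute the steps.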

Use

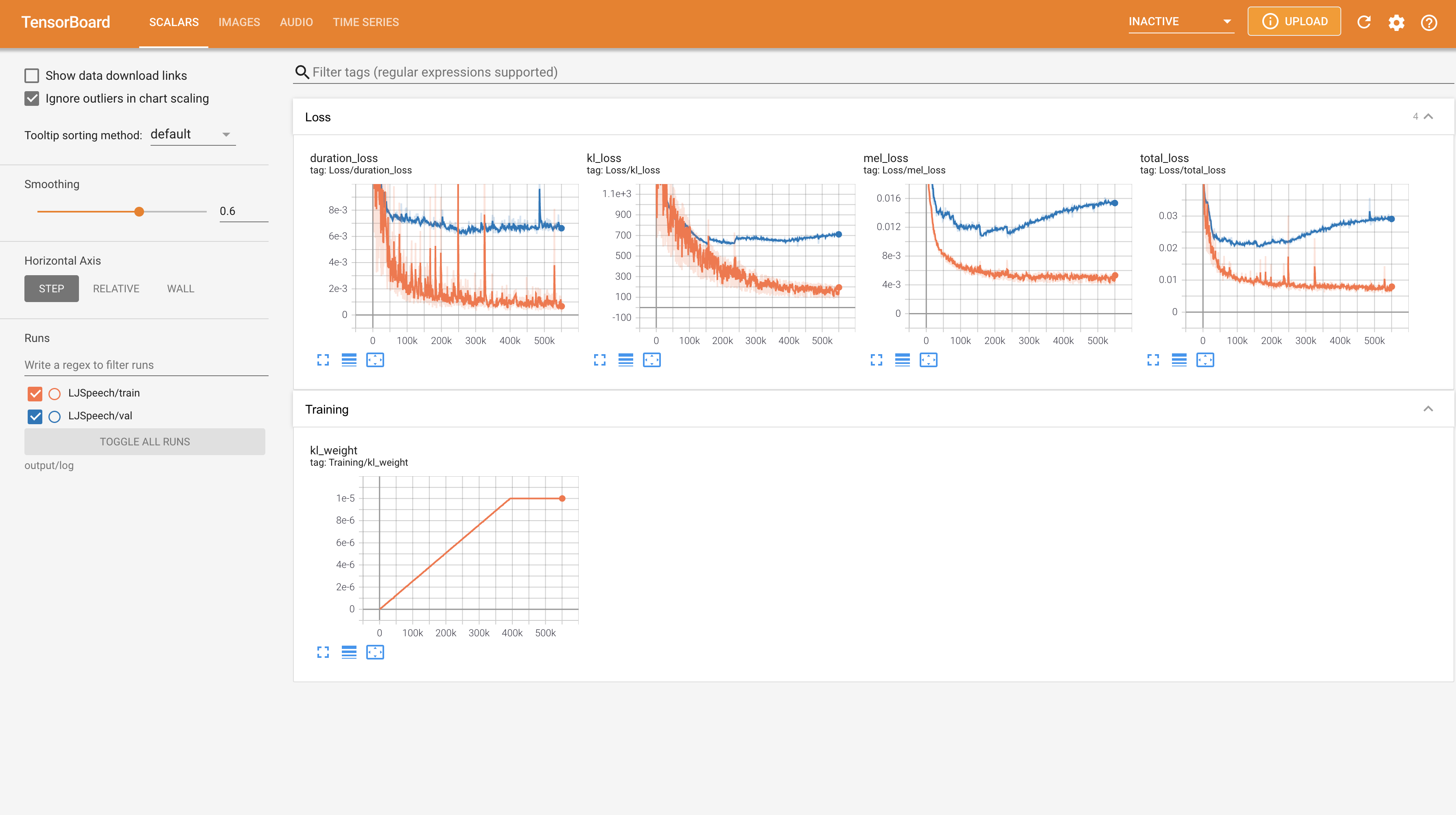

tensorboard --logdir output/log/LJSpeech

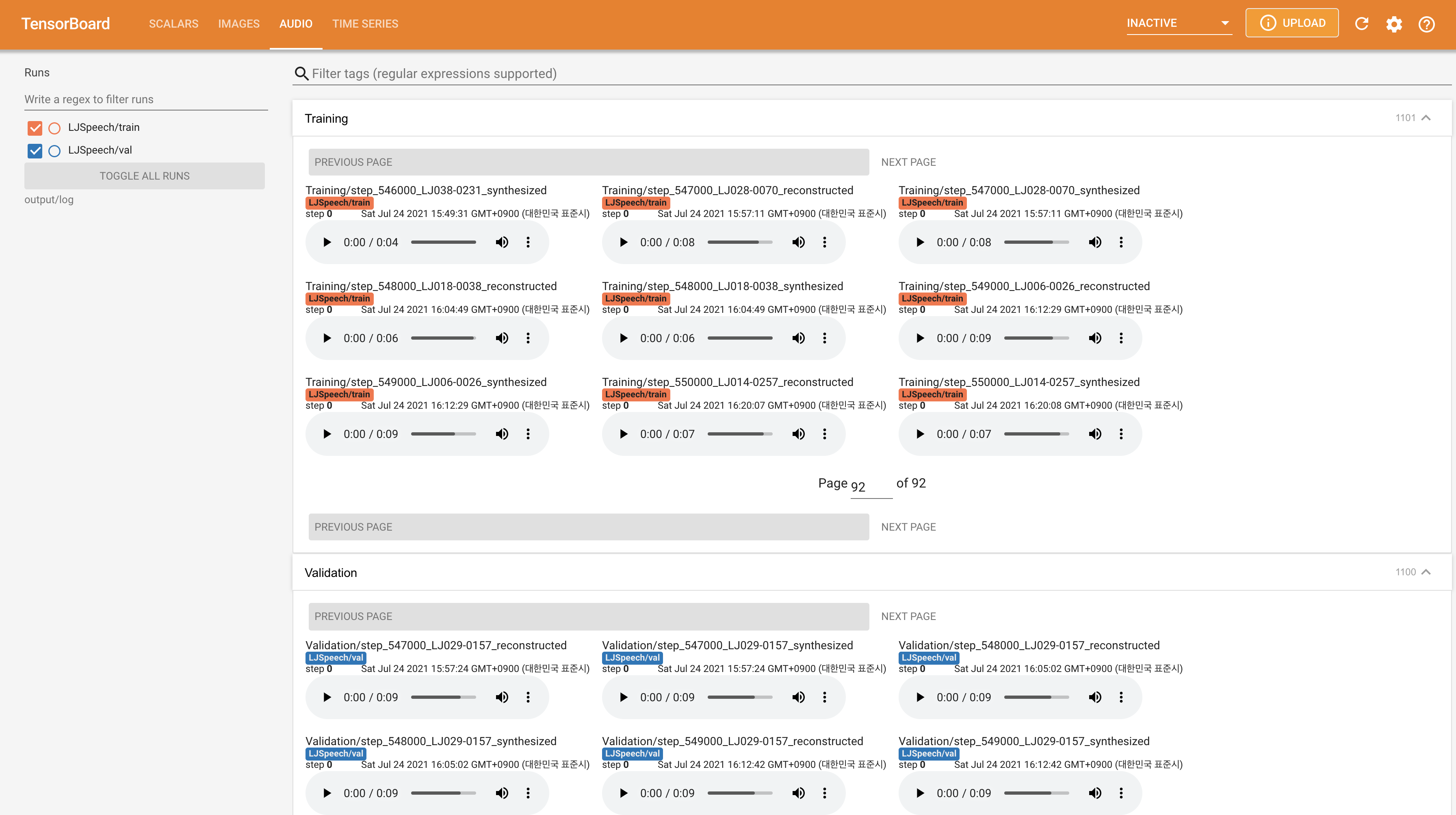

to serve TensorBoard on your localhost. The loss curves, synthesized mel-spectrograms, and audios are shown.

- Arguments and methods removed while converting from TensorFlow to PyTorch: name, kwargs, training, get_config()

- in_features is specified in LinearNorm, which corresponds to tf.keras.layers.Dense. Also, in_channels is explicitly specified in Conv1D.

- The get_mask_from_lengths() function returns the logical NOT of the one in FastSpeech2.

- In this implementation, the griffin_lim algorithm is used to convert a mel-spectrogram to a waveform. You can use HiFi-GAN as a vocoder by setting config, but you need to train it from scratch (you cannot use the provided pre-trained HiFi-GAN model).
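To illustrate the note on get_mask_from_lengths(), here is a minimal sketch of the two mask conventions. It assumes the FastSpeech2 version marks padded positions with True, so the inverted mask marks valid positions with True. The function names padding_mask and valid_mask are illustrative and are not the repository's own code.

```python
import torch


def padding_mask(lengths, max_len=None):
    """True at padded positions (the convention assumed for FastSpeech2)."""
    if max_len is None:
        max_len = int(lengths.max().item())
    # Position indices with shape (1, max_len), broadcast against (batch, 1).
    ids = torch.arange(max_len, device=lengths.device).unsqueeze(0)
    return ids >= lengths.unsqueeze(1)


def valid_mask(lengths, max_len=None):
    """Logical NOT of padding_mask: True at valid (non-padded) positions."""
    return ~padding_mask(lengths, max_len)


if __name__ == "__main__":
    lengths = torch.tensor([3, 1])
    print(padding_mask(lengths))
    print(valid_mask(lengths))
```

Code ported from FastSpeech2 that consumes such a mask (for example with masked_fill) needs the mask inverted accordingly.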

@misc{lee2021vaenar-tts,
  author = {Lee, Keon},
  title = {VAENAR-TTS},
  year = {2021},
  publisher = {GitHub},
  journal = {GitHub repository},
  howpublished = {\url{https://github.com/keonlee9420/VAENAR-TTS}}
}