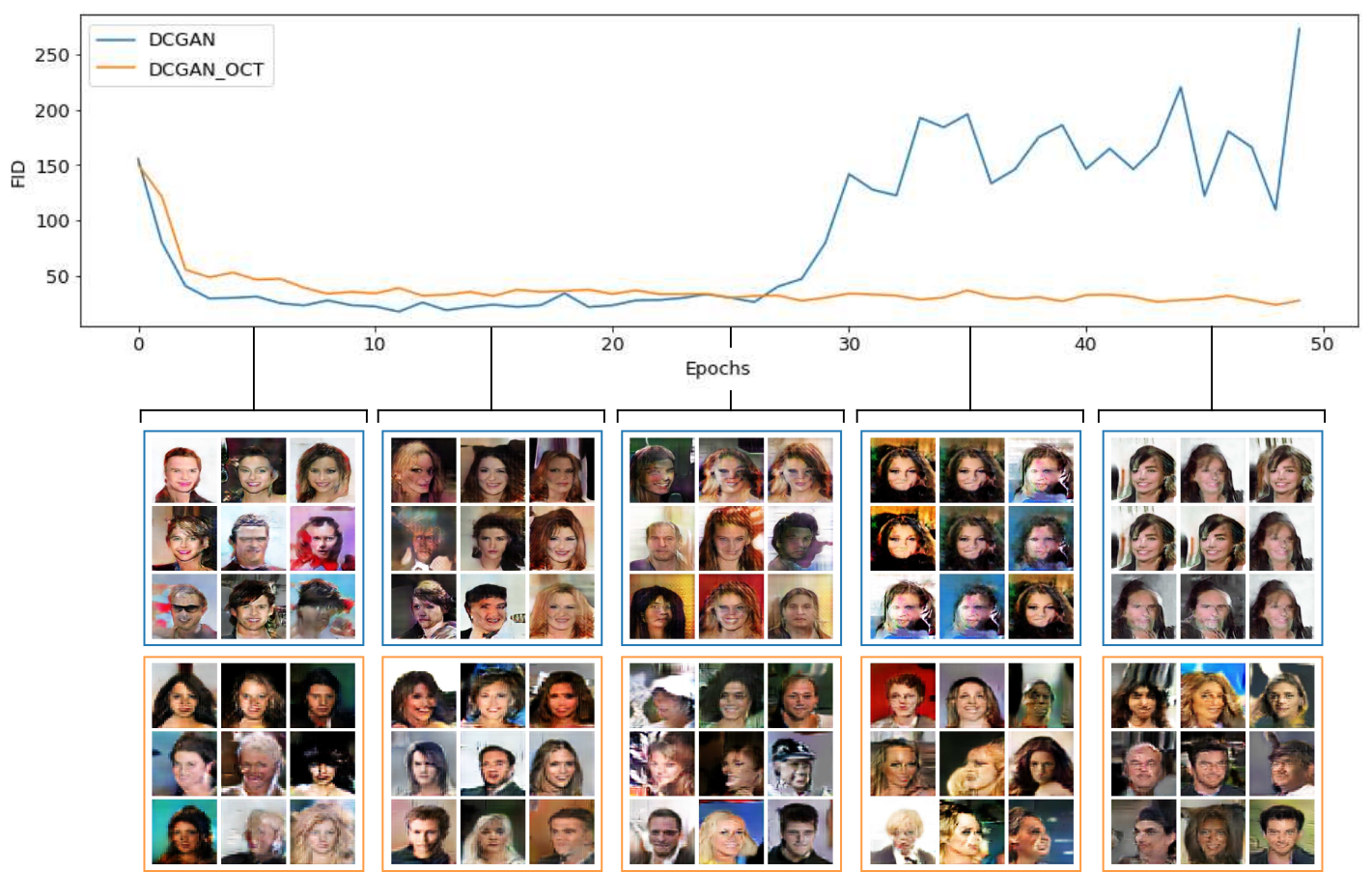

This repository provides the official PyTorch implementation of Stabilizing GANs with Octave Convolutions.

Tested on Python 3.6.x.

The full CelebA dataset is available here. To resize the RGB images to 128×128 pixels, set the dataset path in `resize_celeba.py` and run the script.
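For readers who want to see what such a preprocessing step involves, here is a minimal sketch of resizing a folder of images to 128×128 with Pillow. It is not the repository's `resize_celeba.py`: the function name `resize_folder`, the `*.jpg` pattern, and the bicubic filter are assumptions made for illustration.

```python
from pathlib import Path

from PIL import Image


def resize_folder(src_dir, dst_dir, size=(128, 128)):
    """Resize every .jpg in src_dir to `size` and save it under dst_dir.

    Hypothetical helper for illustration only; the repository's own
    script may crop, filter, or name files differently.
    """
    src, dst = Path(src_dir), Path(dst_dir)
    dst.mkdir(parents=True, exist_ok=True)
    count = 0
    for path in sorted(src.glob("*.jpg")):
        with Image.open(path) as img:
            # Force three channels, then resize directly to the target size.
            resized = img.convert("RGB").resize(size, Image.BICUBIC)
            resized.save(dst / path.name)
        count += 1
    return count
```

A direct resize like this does not preserve the aspect ratio of the source images. If that matters, a center crop to a square before resizing is a common alternative.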

To train a model, run the shell script for the selected model (e.g. `sh gan.sh`, `sh wgan.sh` or `sh lsgan.sh`) with the appropriate hyperparameters.

    python train.py --type wgan \
        --nb-epochs 50 \
        --learning-rate 0.00005 \
        --optimizer rmsprop \
        --critic 5 \
        --cuda
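As a rough guide to how the flags in the command above could be consumed, the following sketch parses them with `argparse`. Only the flag names come from this README. The types, defaults, allowed choices, and the reading of `--critic` as the number of critic updates per generator update are assumptions, and the actual `train.py` may differ.

```python
import argparse


def build_parser():
    """Hypothetical parser mirroring the flags used in the command above."""
    parser = argparse.ArgumentParser(description="Sketch of a GAN training CLI")
    # Choices are assumed from the script names gan.sh, wgan.sh and lsgan.sh.
    parser.add_argument("--type", choices=["gan", "wgan", "lsgan"], default="gan")
    parser.add_argument("--nb-epochs", type=int, default=50)
    # Placeholder default; not taken from the repository.
    parser.add_argument("--learning-rate", type=float, default=0.0002)
    # "adam" is an assumed alternative; only "rmsprop" appears in the README.
    parser.add_argument("--optimizer", choices=["adam", "rmsprop"], default="adam")
    # Assumed meaning: critic updates per generator update.
    parser.add_argument("--critic", type=int, default=1)
    parser.add_argument("--cuda", action="store_true")
    return parser


# Parse the same arguments as the WGAN command shown above.
args = build_parser().parse_args([
    "--type", "wgan",
    "--nb-epochs", "50",
    "--learning-rate", "0.00005",
    "--optimizer", "rmsprop",
    "--critic", "5",
    "--cuda",
])
print(vars(args))
```

Note that `argparse` converts dashes in flag names to underscores, so `--nb-epochs` is read as `args.nb_epochs`.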

This repository combines PyTorch implementations of the following papers:

- Goodfellow et al. Generative Adversarial Nets.
- Radford et al. Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks.
- Mao et al. Multi-class Generative Adversarial Networks with the L2 Loss Function.
- Arjovsky et al. Wasserstein Generative Adversarial Networks.
- Chen et al. Drop an Octave: Reducing Spatial Redundancy in Convolutional Neural Networks with Octave Convolution.

The code borrows heavily from an existing PyTorch implementation.

If this work is useful for your research, please cite our paper:

    @article{durall2019dropgan,
      title={Stabilizing GANs with Octave Convolutions},
      author={Durall, Ricard and Pfreundt, Franz-Josef and Keuper, Janis},
      journal={arXiv preprint arXiv:1905.12534},
      year={2019}
    }