A model-agnostic tool for explaining text-based risk classification predictions for patients in Intensive Care Units.

University of Aberdeen CS5917: MSc Project in Artificial Intelligence (Industrial Placement with the partnership of RedStar)

A LIME-based explainer for interpreting risk factors regarding possible clinical outcomes from patient discharge letters. Includes modules for sentence ranking and highlighting, alongside other statistics regarding the accumulated results. Allows the user to upload a discharge letter (as a .txt file) and generate an explanation for it, as well as select the model configuration.
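The idea of ranking sentences by their influence on a prediction can be illustrated with a minimal sketch. It is not the app's implementation and not the modified LIME package: instead of LIME's weighted linear surrogate, it uses a simpler stand-in that randomly drops sentences and compares the mean prediction when a sentence is present against when it is absent. The names `split_sentences`, `toy_risk_model`, and `sentence_importance` are hypothetical, and the keyword-based classifier only stands in for a trained model.

```python
import random
import re


def split_sentences(text):
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def toy_risk_model(text):
    """Hypothetical stand-in classifier returning a pseudo-probability."""
    risky_terms = ("sepsis", "ventilator", "cardiac arrest")
    hits = sum(term in text.lower() for term in risky_terms)
    return min(1.0, 0.1 + 0.3 * hits)


def sentence_importance(text, predict, n_samples=200, seed=0):
    """Rank sentences by the change in mean prediction when they are kept.

    Simplified stand-in for a LIME-style explanation: sentences are the
    perturbation unit, and each weight is mean(score | sentence present)
    minus mean(score | sentence absent) over random perturbations.
    """
    rng = random.Random(seed)
    sentences = split_sentences(text)
    present = [[] for _ in sentences]
    absent = [[] for _ in sentences]

    for _ in range(n_samples):
        # Keep each sentence with probability 0.5 to build a perturbed letter.
        mask = [rng.random() < 0.5 for _ in sentences]
        perturbed = " ".join(s for s, keep in zip(sentences, mask) if keep)
        score = predict(perturbed)
        for i, keep in enumerate(mask):
            (present if keep else absent)[i].append(score)

    def mean(values):
        return sum(values) / len(values) if values else 0.0

    weights = [mean(present[i]) - mean(absent[i]) for i in range(len(sentences))]
    # Most influential sentences first, regardless of sign.
    return sorted(zip(sentences, weights), key=lambda pair: abs(pair[1]), reverse=True)


letter = (
    "Patient admitted with sepsis. "
    "Family visited daily. "
    "Required ventilator support."
)
ranked = sentence_importance(letter, toy_risk_model)
for sentence, weight in ranked:
    print(f"{weight:+.3f}  {sentence}")
```

Positive weights mark sentences that push the toy model towards the positive class; a real explainer would pass the trained model's prediction function in place of `toy_risk_model`.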

This app is meant to serve as an assistant tool for clinicians who review patient electronic health records (EHRs). It aims to minimize the time needed to read an EHR and reach a conclusion about the patient's condition and the required intervention. This may also reduce the error rate of clinicians, who otherwise perform these readings manually on large volumes of text. It can therefore benefit health surveillance systems that filter and diagnose high-risk patients within a large number of records.

The app currently supports two working models, implemented in Keras (TF2):

- Fasttext (AUC: 0.85)

- 1D-Conv-LSTM (AUC: 0.87)

The tasks supported by the explainer are:

- Risk of death within 1 year

- Risk of hospital readmission within 30 days

You can also customize the model's decision boundary between the positive and negative classes, which affects the risk score (on a scale of 1 to 10).
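How the decision boundary feeds into the risk score is not spelled out here, so the following is only a sketch under an assumed mapping: the predicted probability is rescaled piecewise-linearly so that the chosen threshold lands at the midpoint, and the result is bucketed into ten bands. The function name `risk_score` and the mapping itself are hypothetical.

```python
def risk_score(probability, threshold=0.5):
    """Map a positive-class probability to a 1-10 score (assumed mapping).

    Probabilities below the threshold are squeezed into scores 1-5 and
    probabilities at or above it into scores 6-10.
    """
    if not 0.0 < threshold < 1.0:
        raise ValueError("threshold must be strictly between 0 and 1")
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must be between 0 and 1")

    if probability < threshold:
        rescaled = 0.5 * probability / threshold
    else:
        rescaled = 0.5 + 0.5 * (probability - threshold) / (1.0 - threshold)

    # Ten equal-width bands; a probability of exactly 1.0 stays in band 10.
    return min(10, int(rescaled * 10) + 1)


# The same probability yields a higher score under a lower threshold.
default_score = risk_score(0.45, threshold=0.5)
strict_score = risk_score(0.45, threshold=0.3)
```

Under this assumption, lowering the threshold makes the tool more sensitive: a letter scoring 0.45 moves from the lower half of the scale to the upper half.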

The text classification models were trained on the specified tasks using the MIMIC III database (use the specified link to find out how to get access).

The model and explainer frameworks (in the notebooks folder) were developed using Google Colab. The web app is built with Flask (blueprint modules) and Python 3.7. The deployment branch includes an AWS Elastic Beanstalk configuration for building the production system. The app also includes a modified version of the original LIME package, extended with sentence perturbations, available here. Running the explanations requires an NVIDIA GPU (TF2); this was tested with a GTX 960M. The front-end visualization libraries include:

- Mark.js (Sentence highlighting with weighting for each class)

- Chart.js (Sentence importance plot)

- Bootstrap 4 template
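As an illustration of how explanation scores might reach the Chart.js importance plot, the sketch below builds a bar-chart configuration on the Python side and serializes it to JSON. The helper name `chartjs_bar_config`, the sentence labels, and the colour values are assumptions; reusing the green/red convention from the sentence highlighting for the bars is also an assumption, not a description of the app's templates.

```python
import json


def chartjs_bar_config(sentence_scores):
    """Build a Chart.js bar-chart config from (sentence, score) pairs."""
    labels = [f"S{i + 1}" for i in range(len(sentence_scores))]
    scores = [round(score, 4) for _, score in sentence_scores]
    # Assumed colours: red for positive scores, green otherwise.
    colors = [
        "rgba(220, 53, 69, 0.7)" if score > 0 else "rgba(40, 167, 69, 0.7)"
        for score in scores
    ]
    return {
        "type": "bar",
        "data": {
            "labels": labels,
            "datasets": [
                {
                    "label": "Explanation score",
                    "data": scores,
                    "backgroundColor": colors,
                }
            ],
        },
    }


example_scores = [
    ("Patient admitted with sepsis.", 0.31),
    ("Vitals stable at discharge.", -0.12),
]
config = chartjs_bar_config(example_scores)
payload = json.dumps(config)  # string that a template could hand to Chart.js
```

A Flask view could pass `payload` to the page, where it would be parsed and given to the `Chart` constructor.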

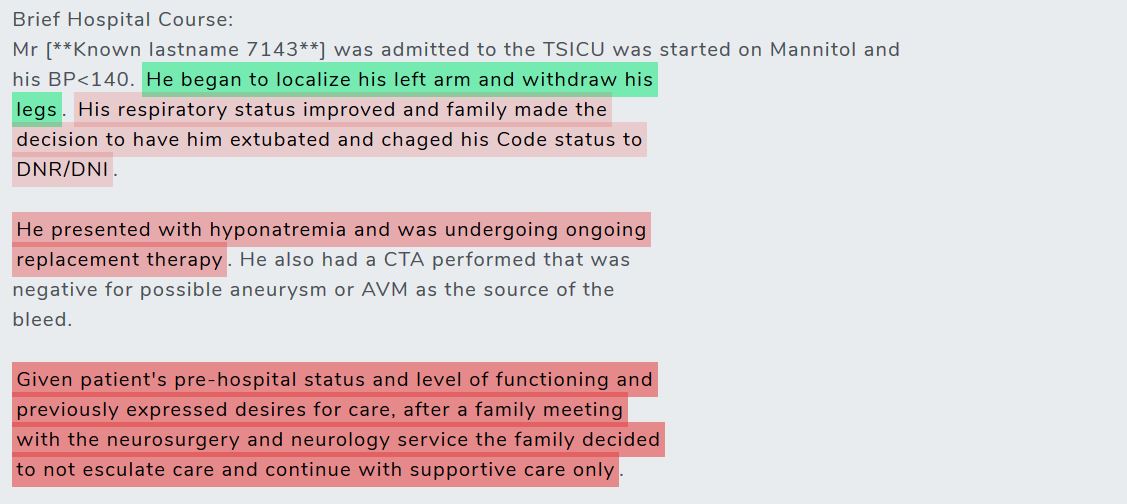

Sentence highlighting (green - negative, red - positive LIME explanation scores)

Sentence importance plot for visualizing the explanation scores.

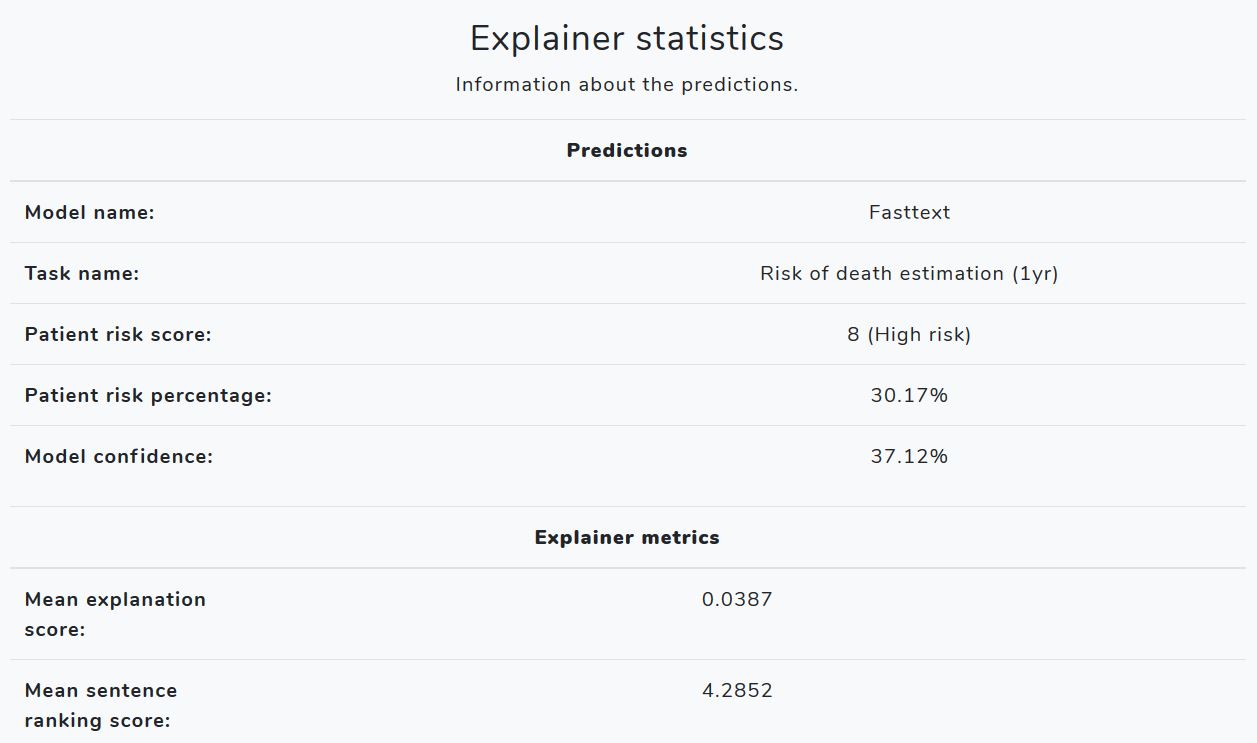

Explainer statistics (related to the model predictions and explanation scores)

1. Clone the project from this repository to your local directory using:

   `git clone https://github.com/JadeBlue96/Patient-Risk-Explainer.git`

2. Create a new conda environment to install the packages in:

   `conda create -n prme python=3.7`

   `conda activate prme`

3. Install the required libraries using pip:

   `pip install -r requirements.txt`

4. Pull the model files from S3 using:

   `aws s3 sync s3://prme-main/ . --no-sign-request`

5. Set the Flask app environment variables in your environment and run the app locally (the `set` syntax shown is for the Windows command prompt; on Linux or macOS use `export` instead):

   `set FLASK_APP=app.py`

   `set FLASK_ENV=development`

   `flask run`

6. Check that every package is installed correctly and that the models were downloaded successfully by running the unit tests:

   `python -m pytest --cov-report term-missing --cov=src tests/`