Chinese Chess reinforcement learning by AlphaZero methods.

This project is based on these main resources:

- DeepMind's Oct 19th publication: Mastering the Game of Go without Human Knowledge.

- The great Reversi/Chess/Chinese chess development of the DeepMind ideas that @mokemokechicken/@Akababa/@TDteach did in their repo: https://github.com/mokemokechicken/reversi-alpha-zero, https://github.com/Akababa/Chess-Zero, https://github.com/TDteach/AlphaZero_ChineseChess

- A Chinese chess engine with gui: https://github.com/mm12432/MyChess

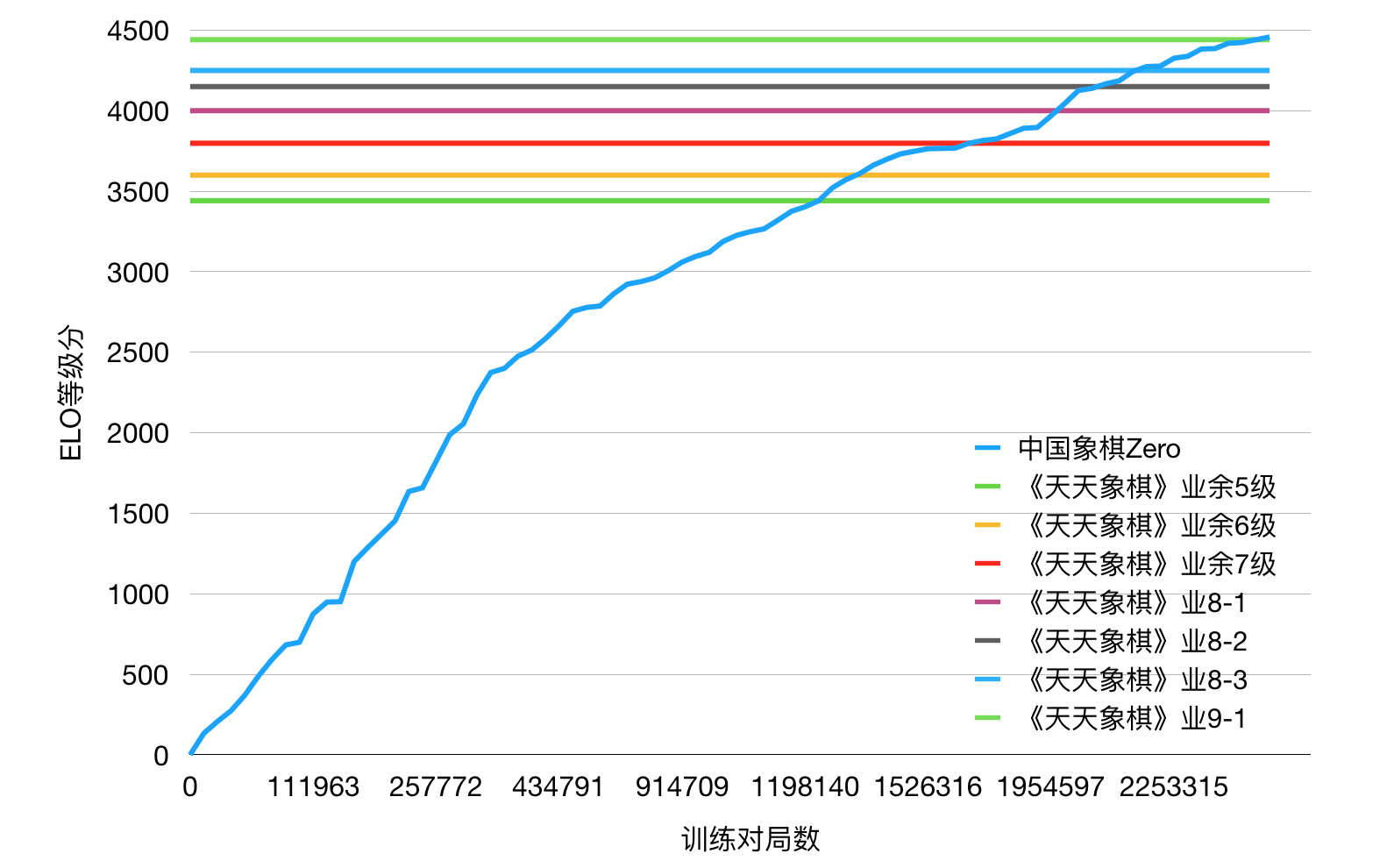

In order to build a strong chinese chess AI following the same type of techniques as AlphaZero, we need to do this with a distributed project, as it requires a huge amount of computations.

If you want to join us to build the best chinese chess AI in the world:

- For instructions, see wiki

- For live status, see https://cczero.org

- Python 3.6.3

- tensorflow-gpu: 1.3.0

- Keras: 2.0.8

This AlphaZero implementation consists of two workers: self and opt.

selfis Self-Play to generate training data by self-play using BestModel.optis Trainer to train model, and generate new models.

For the sake of faster training, another two workers are involved:

slis Supervised learning to train data crawled from the Internet.evalis Evaluator to evaluate the NextGenerationModel with the current BestModel.

Requirement: pygame

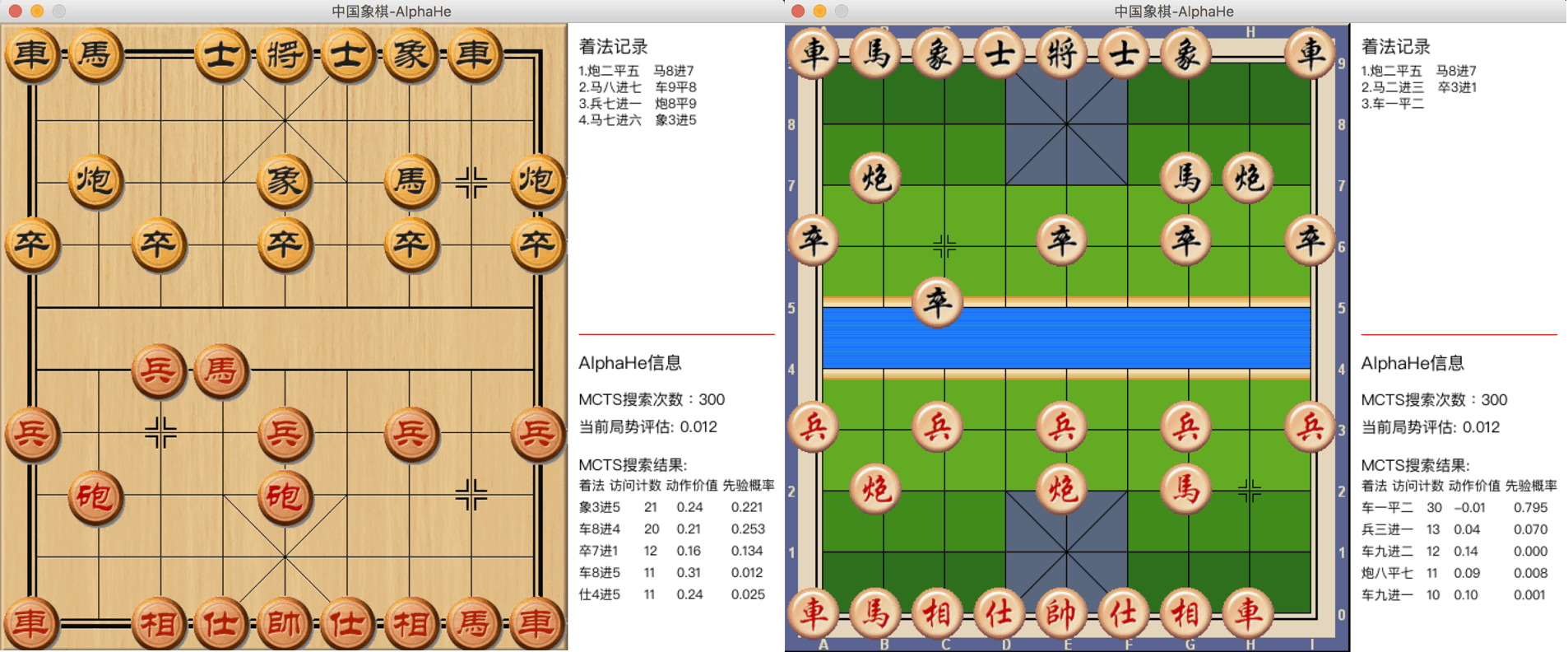

python cchess_alphazero/run.py playScreenshots

You can choose different board/piece styles and sides, see play with human.

pip install -r requirements.txtIf you want to use CPU only, replace tensorflow-gpu with tensorflow in requirements.txt.

Make sure Keras is using Tensorflow and you have Python 3.6.3+.

PlayDataConfig

nb_game_in_file, max_file_num: The max game number of training data isnb_game_in_file * max_file_num.

PlayConfig, PlayWithHumanConfig

simulation_num_per_move: MCTS number per move.c_puct: balance parameter of value network and policy network in MCTS.search_threads: balance parameter of speed and accuracy in MCTS.dirichlet_alpha: random parameter in self-play.

python cchess_alphazero/run.py self

When executed, Self-Play will start using BestModel. If the BestModel does not exist, new random model will be created and become BestModel.

options

--new: create new BestModel--type mini: use mini config, (seecchess_alphazero/configs/mini.py)--gpu '1': specify which gpu to use--ucci: whether to play with ucci engine (rather than self play, seecchess_alphazero/worker/play_with_ucci_engine.py)--distributed: run self play in distributed mode which means it will upload the play data to the remote server and download latest model from it

python cchess_alphazero/run.py opt

When executed, Training will start. The current BestModel will be loaded. Trained model will be saved every epoch as new BestModel.

options

--type mini: use mini config, (seecchess_alphazero/configs/mini.py)--total-step TOTAL_STEP: specify total step(mini-batch) numbers. The total step affects learning rate of training.--gpu '1': specify which gpu to use

View training log in Tensorboard

tensorboard --logdir logs/

And access http://<The Machine IP>:6006/.

python cchess_alphazero/run.py play

When executed, the BestModel will be loaded to play against human.

options

--ai-move-first: if set this option, AI will move first, otherwise human move first.--type mini: use mini config, (seecchess_alphazero/configs/mini.py)--gpu '1': specify which gpu to use--piece-style WOOD: choose a piece style, default isWOOD--bg-style CANVAS: choose a board style, default isCANVAS--cli: if set this flag, play with AI in a cli environment rather than gui

Note: Before you start, you need to download/find a font file (.ttc) and rename it as PingFang.ttc, then put it into cchess_alphazero/play_games. I have removed the font file from this repo because it's too big, but you can download it from here.

python cchess_alphazero/run.py eval

When executed, evaluate the NextGenerationModel with the current BestModel. If the NextGenerationModel does not exist, worker will wait until it exists and check every 5 minutes.

options

--type mini: use mini config, (seecchess_alphazero/configs/mini.py)--gpu '1': specify which gpu to use

python cchess_alphazero/run.py sl

When executed, Training will start. The current SLBestModel will be loaded. Tranined model will be saved every epoch as new SLBestModel.

About the data

I have two data sources, one is downloaded from https://wx.jcloud.com/market/packet/10479 ; the other is crawled from http://game.onegreen.net/chess/Index.html (with option --onegreen).

options

--type mini: use mini config, (seecchess_alphazero/configs/mini.py)--gpu '1': specify which gpu to use--onegreen: if set the flag,sl_onegreenworker will start to train data crawled fromgame.onegreen.net--skip SKIP: if set this flag, games whoses index is less thanSKIPwould not be used to train (only valid whenonegreenflag is set)