Codebase for "Composing graphical models with generative adversarial networks for EEG signal modeling"

Paper link: https://ieeexplore.ieee.org/document/9747783

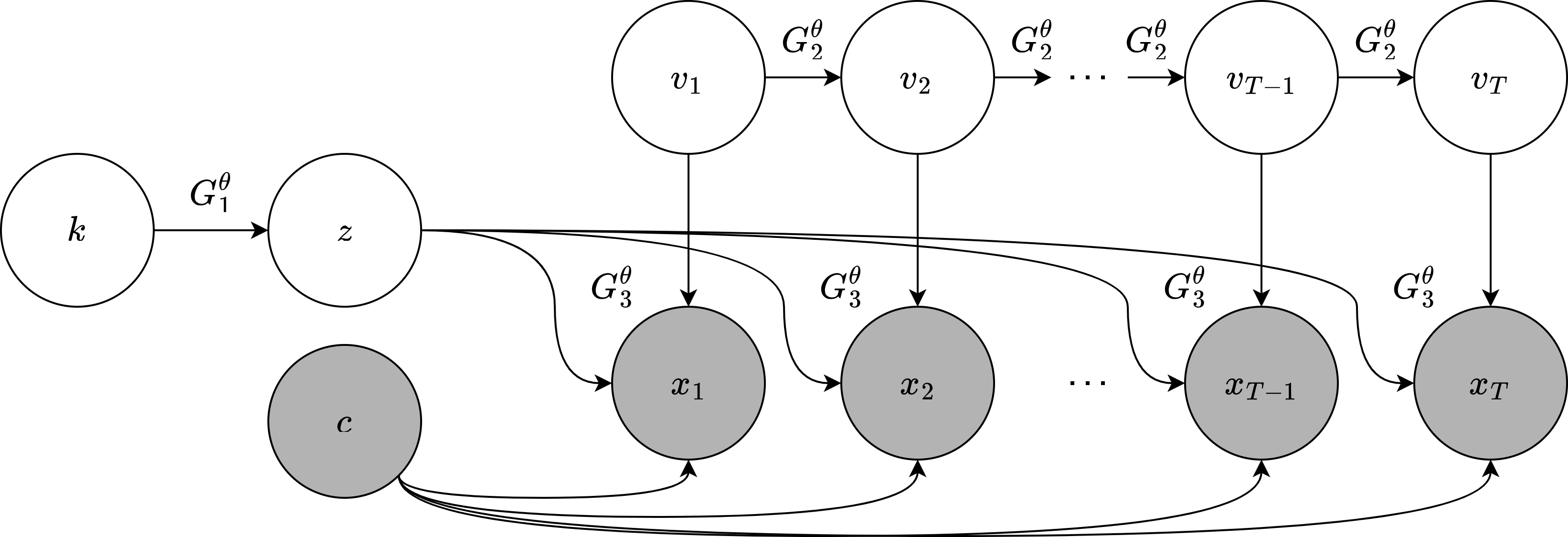

Generative process:

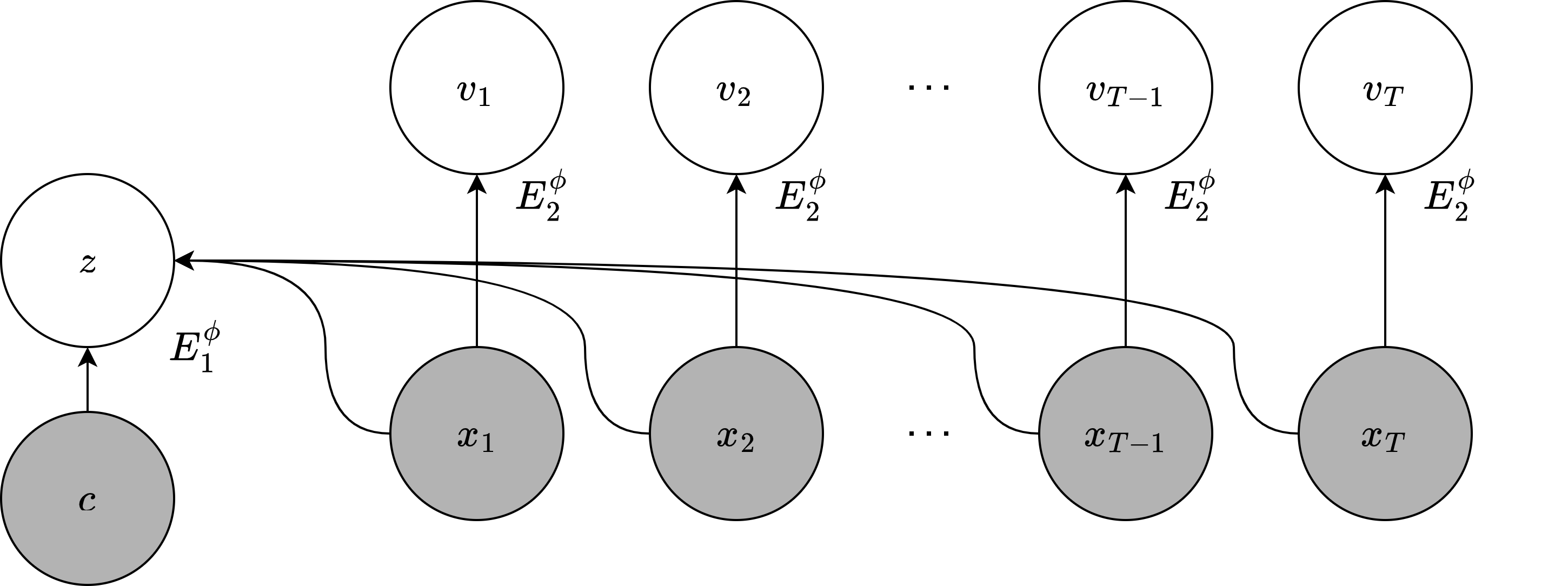

Inference process:

Each time step corresponds to a

Variables

To install the dependencies, you can run in your terminal:

```bash
pip install -r requirements.txt
```

The experimental dataset can be downloaded at: https://zenodo.org/record/8408937
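The dataset is hosted on Zenodo, so it can also be fetched from a script. The sketch below is not part of this repository. It assumes Zenodo's public records API (`https://zenodo.org/api/records/<id>`) returns JSON with a `files` list whose entries carry `key` (the file name) and `links.self` (a download URL). The helper names `list_record_files` and `download_record`, and the `data` output folder, are hypothetical. Check where `data.py` actually expects the files before relying on this layout.

```python
import json
import urllib.request
from pathlib import Path

# Assumed Zenodo records endpoint; public records need no access token.
ZENODO_API = "https://zenodo.org/api/records/{record_id}"


def list_record_files(record: dict) -> list[tuple[str, str]]:
    """Return (file name, download URL) pairs from a Zenodo record JSON.

    Assumes each entry in record["files"] has "key" and "links"["self"].
    """
    return [(f["key"], f["links"]["self"]) for f in record.get("files", [])]


def download_record(record_id: str, out_dir: str = "data") -> list[Path]:
    """Fetch record metadata, then download every attached file into out_dir.

    out_dir="data" is a hypothetical default, not a path the repo defines.
    """
    with urllib.request.urlopen(ZENODO_API.format(record_id=record_id)) as resp:
        record = json.load(resp)
    target = Path(out_dir)
    target.mkdir(parents=True, exist_ok=True)
    saved = []
    for name, url in list_record_files(record):
        dest = target / name
        urllib.request.urlretrieve(url, dest)  # streams the file to disk
        saved.append(dest)
    return saved
```

Calling `download_record("8408937")` would save every file in the record and return their paths. Parsing is kept separate from downloading so the JSON handling can be checked without network access.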

The code is structured as follows:

- `data.py` contains functions to transform and feed the data to the model.
- `models.py` defines the deep neural network architectures.
- `utils.py` has utilities for evaluation and plotting.
- `train.py` is the main entry point for running the training process.
- `eval.ipynb` runs the evaluation.

If you find this code helpful, please cite our paper:

```bibtex
@inproceedings{vo2022composing,
  title={Composing Graphical Models with Generative Adversarial Networks for EEG Signal Modeling},
  author={Vo, Khuong and Vishwanath, Manoj and Srinivasan, Ramesh and Dutt, Nikil and Cao, Hung},
  booktitle={ICASSP 2022-2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP)},
  pages={1231--1235},
  year={2022},
  organization={IEEE}
}
```