Kili AutoML is a lightweight library to create ML models in a data-centric AI way:

- Label on Kili

- Train a model with AutoML and evaluate its performance in one line of code

- Push predictions to Kili to accelerate the labeling in one line of code

- Prioritize labeling on Kili to label the data that will improve your model the most first

Iterate.

Once you are satisfied with the performance, in one line of code, serve the model and monitor the performance keeping a human in the loop with Kili.

Kili AutoML only works on Linux and macOS.

You can try automl on a simple image classification project with this notebook.

Check the Demos section for more examples.

Creating a new conda or virtualenv before cloning is recommended because we install a lot of packages:

conda create --name automl python=3.7

conda activate automl

git clone https://github.com/kili-technology/automl.git

cd automl

git submodule update --init

Then install the requirements:

export SETUPTOOLS_ENABLE_FEATURES="legacy-editable"

pip install torch && pip install -e .

export PYTHONPATH=$PYTHONPATH:$(pwd)

We made Kili AutoML very simple to use. The following sections detail how to call the main methods.

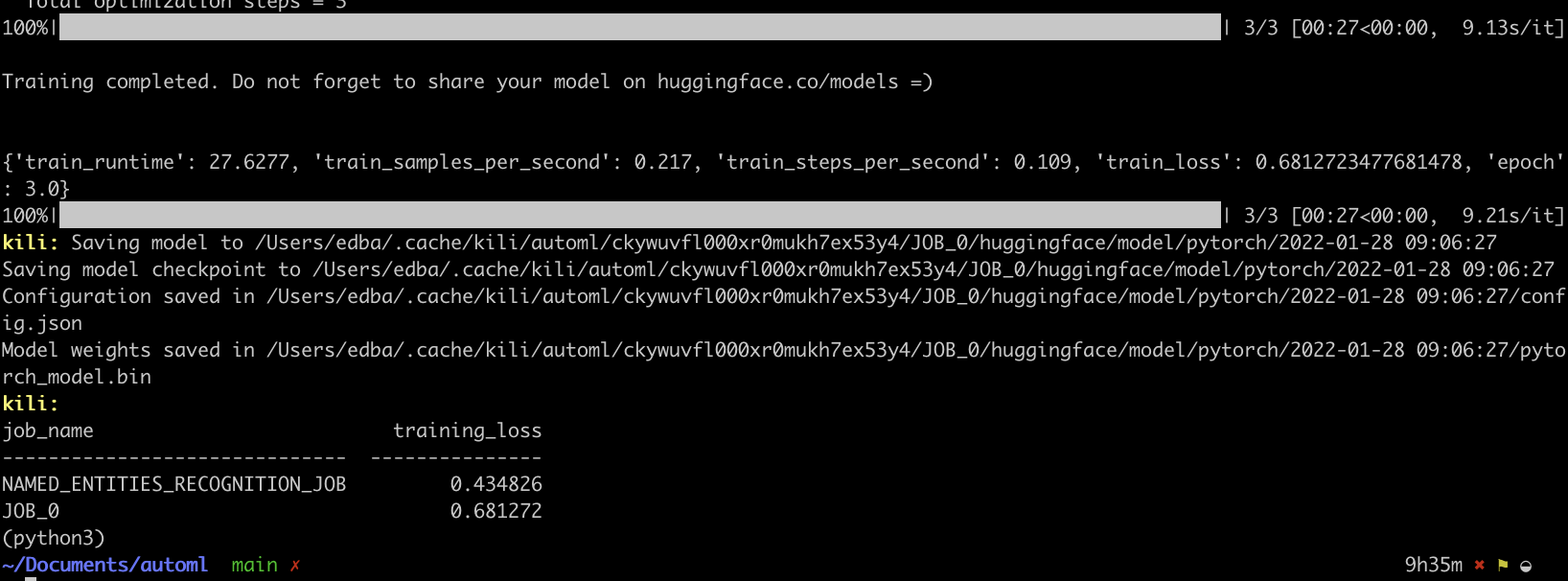

We train the model with the following command line:

kiliautoml train \
    --api-key $KILI_API_KEY \
    --project-id $KILI_PROJECT_ID

By default, the library uses Weights and Biases to track the training and the quality of the predictions.

The model is then stored in the cache of the AutoML library (default location: HOME/.cache/kili/automl; set the environment variable KILIAUTOML_CACHE to choose another location).
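As a rough illustration of the cache lookup described above, the sketch below resolves the directory from the environment. The helper name `resolve_cache_dir` is hypothetical and not part of the library. It assumes that `KILIAUTOML_CACHE` holds the full path of the cache directory and that `HOME` in the default location means the current user's home directory.

```python
import os
from pathlib import Path


def resolve_cache_dir(env=None):
    """Return the cache directory, honoring KILIAUTOML_CACHE when it is set.

    Hypothetical helper for illustration; the library may resolve the
    location differently.
    """
    env = os.environ if env is None else env
    override = env.get("KILIAUTOML_CACHE")
    if override:
        # Assumption: the variable contains the full path of the cache directory.
        return Path(override).expanduser()
    # Default location quoted in the text: HOME/.cache/kili/automl
    return Path.home() / ".cache" / "kili" / "automl"


if __name__ == "__main__":
    print(resolve_cache_dir())
```

Printing the resolved path before training is a quick way to check where the trained models will end up.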

Kili AutoML training does the following:

- Selects the models related to the tasks declared in the project ontology.

- Retrieves Kili's asset data and converts it into the input format for each model.

- Fine-tunes the model on the input data.

- Outputs the model metrics.

You can check the supported ML backends and the tasks they are used for here.

Compute model loss to infer when you can stop labeling.
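One possible way to turn the model loss into a stopping signal is to check whether it has stopped improving across labeling rounds. The sketch below is an assumption about how such a check could look, not the library's own logic: the function name `loss_has_plateaued` and the parameters `patience` and `min_rel_improvement` are hypothetical, and the loss values are made up for illustration.

```python
def loss_has_plateaued(losses, patience=2, min_rel_improvement=0.01):
    """Return True when each of the last `patience` rounds improved the loss
    by less than `min_rel_improvement` (relative to the previous round).

    `losses` holds one evaluation loss per labeling/training round, oldest first.
    """
    if len(losses) < patience + 1:
        return False  # not enough history to decide
    recent = losses[-(patience + 1):]
    for previous, current in zip(recent, recent[1:]):
        improvement = (previous - current) / previous if previous > 0 else 0.0
        if improvement >= min_rel_improvement:
            return False  # the model is still improving noticeably
    return True


if __name__ == "__main__":
    # Illustrative values only: the loss flattens out in the last two rounds.
    history = [1.0, 0.6, 0.4, 0.398, 0.397]
    print(loss_has_plateaued(history))
```

A plateau only suggests that more labels of the same kind bring little benefit; whether the reached performance is good enough remains a judgment call.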

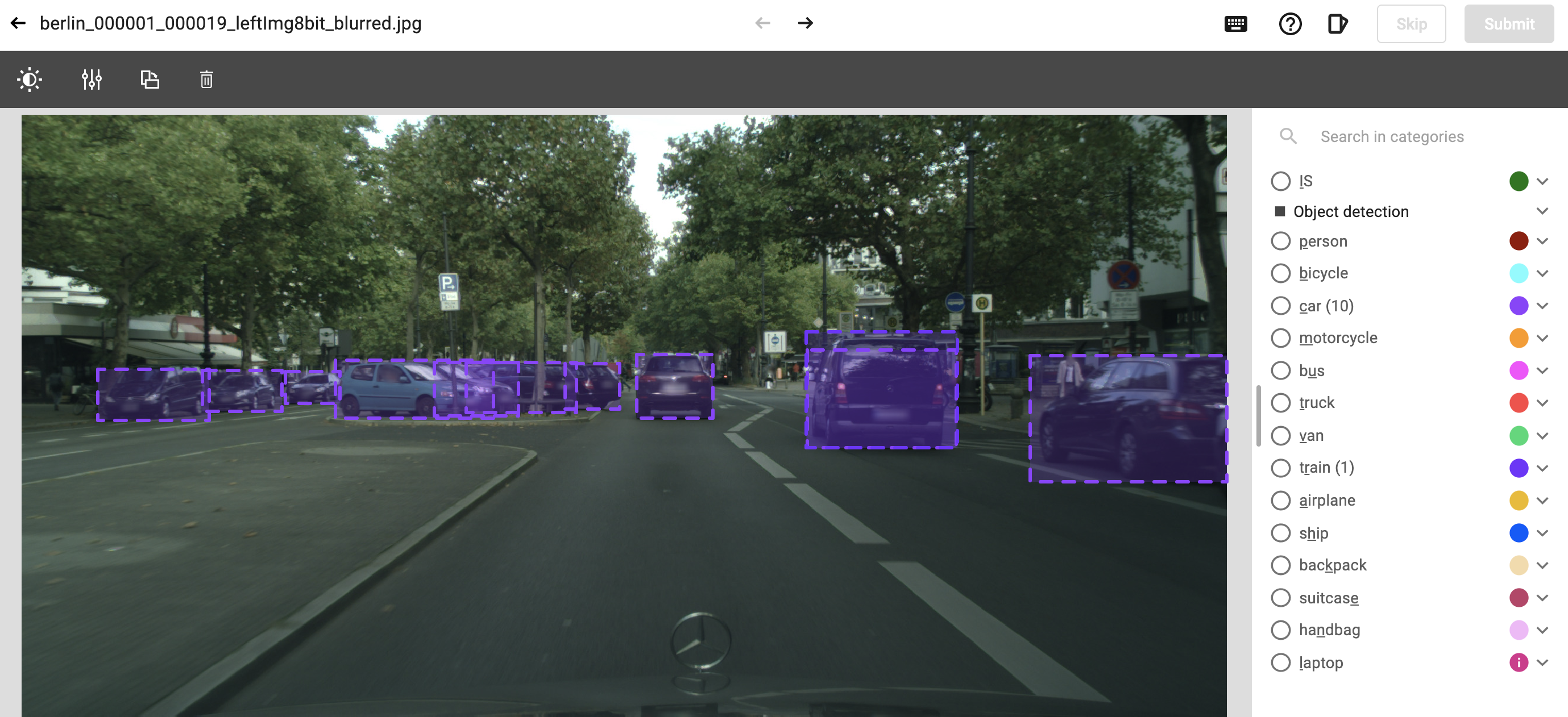

Once trained, the models are used to predict labels and add pre-annotations to the assets that have not yet been labeled by the annotators. The annotators can then validate or correct the pre-annotations in the Kili user interface.

kiliautoml predict \
    --api-key $KILI_API_KEY \
    --project-id $KILI_PROJECT_ID

Using trained models to push pre-annotations onto unlabeled assets typically speeds up labeling by 10%.

You can also use a model coming from another project, provided both projects have the same ontology:

kiliautoml predict \
    --api-key $KILI_API_KEY \
    --project-id $KILI_PROJECT_ID \
    --from-project $ANOTHER_KILI_PROJECT_ID

Once roughly 10 percent of the assets in a project have been labeled, you can prioritize the remaining assets so that the ones most likely to improve the model's performance are labeled first.

kiliautoml prioritize \
    --api-key $KILI_API_KEY \
    --project-id $KILI_PROJECT_ID

This command changes the priority queue of the assets to be labeled. To do this, AutoML uses a mix of diversity sampling and uncertainty sampling.

Note: for image classification, object detection and image segmentation projects only.
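To make the idea of mixing uncertainty sampling and diversity sampling more concrete, here is a minimal sketch. It is not AutoML's actual implementation, which the text does not detail. It assumes each unlabeled asset comes with predicted class probabilities and an embedding vector, measures uncertainty with normalized entropy, measures diversity as the distance to the assets already picked, and combines both with a hypothetical weight `alpha`. All names and values are illustrative.

```python
import math


def normalized_entropy(probs):
    """Shannon entropy of a class distribution, scaled to [0, 1]."""
    if len(probs) < 2:
        return 0.0
    h = -sum(p * math.log(p) for p in probs if p > 0)
    return h / math.log(len(probs))


def euclidean(u, v):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def prioritize(assets, alpha=0.5):
    """Greedily order assets by alpha * uncertainty + (1 - alpha) * diversity.

    `assets` maps an asset id to a (class_probabilities, embedding) pair.
    Diversity is the distance to the closest already-selected asset,
    rescaled by the largest such distance in the current round.
    """
    remaining = dict(assets)
    selected_embeddings = []
    order = []
    while remaining:
        if selected_embeddings:
            min_dist = {
                asset_id: min(euclidean(emb, s) for s in selected_embeddings)
                for asset_id, (_, emb) in remaining.items()
            }
        else:
            # Nothing selected yet: the first pick is driven by uncertainty only.
            min_dist = {asset_id: 0.0 for asset_id in remaining}
        scale = max(min_dist.values()) or 1.0  # avoid division by zero
        best_id = max(
            remaining,
            key=lambda asset_id: alpha * normalized_entropy(remaining[asset_id][0])
            + (1 - alpha) * min_dist[asset_id] / scale,
        )
        order.append(best_id)
        selected_embeddings.append(remaining.pop(best_id)[1])
    return order


if __name__ == "__main__":
    # Illustrative data: "a" and "b" are near-duplicates, "c" is far away.
    assets = {
        "a": ([0.5, 0.5], (0.0, 0.0)),
        "b": ([0.55, 0.45], (0.1, 0.0)),
        "c": ([0.9, 0.1], (5.0, 5.0)),
    }
    print(prioritize(assets, alpha=0.5))
```

With `alpha=1.0` the ordering depends on uncertainty alone, so near-duplicate uncertain assets end up next to each other in the queue. Lowering `alpha` pushes assets that differ from the ones already picked further up.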

Labeling mistakes happen. Fortunately, we provide methods to detect potential annotation problems. label_errors.py identifies potential problems and creates a 'potential_label_error' filter on the project's asset exploration view:

kiliautoml label_errors \
    --api-key $KILI_API_KEY \
    --project-id $KILI_PROJECT_ID

AutoML currently supports the following tasks:

- Natural Language Processing (NLP)

  - Named Entity Recognition

  - Text Classification

- Image

  - Object Detection

  - Image Classification

  - Semantic Segmentation

You can test the features of AutoML with these notebooks:

- Natural Language Processing (NLP)

- Image

AutoML is a utility library that trains and serves models. It is your responsibility to determine whether the model performance is high enough or not.

Don't hesitate to contribute!