Train a Godot game agent with deep learning in C++. Training runs faster than with the GDScript version.

The agent scores points by collecting coins during a 20-second episode. The action space consists of four moves: left, right, up, and down.
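To make the four-move action space concrete, here is a minimal sketch of mapping a discrete action index to a 2D movement direction. The index order (0=left, 1=right, 2=up, 3=down) and the names `ACTIONS` and `action_to_direction` are hypothetical and not taken from this repository. The only fact relied on is that Godot's 2D y-axis points down.

```python
# Hypothetical sketch: the index order and helper names are assumptions,
# not the repository's actual action encoding.
from typing import Tuple

ACTIONS = ("left", "right", "up", "down")

# Godot's 2D y-axis points down, so "up" is a negative y step.
DIRECTIONS = {
    "left": (-1, 0),
    "right": (1, 0),
    "up": (0, -1),
    "down": (0, 1),
}


def action_to_direction(action_index: int) -> Tuple[int, int]:
    """Map a discrete action index to a 2D movement direction (x, y)."""
    if not 0 <= action_index < len(ACTIONS):
        raise ValueError(
            f"action index must be in [0, {len(ACTIONS)}), got {action_index}"
        )
    return DIRECTIONS[ACTIONS[action_index]]
```

For example, `action_to_direction(2)` returns `(0, -1)`, a step toward the top of the screen under the assumed ordering.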

- Built for Godot 3.

- The C++ code in this repository is based on the official Godot tutorial.

- This project uses GodotAIGym to connect Godot with Python.

- The GDScript version of the code is available in DogdeCreepTut.

NOTE:

- You need to copy the GodotModule before building this project.

- The libtorch, godot-cpp, and game executable files are excluded from this repository because of upload size limits.

- Build the project.

$ scons platform=linux

- Move to the project folder.

$ cd project

- Start the learner.

$ python learner.py --env_num 1

$ python3.7 learner.py --env_num 1

- Start the actor.

$ python actor.py --env_id your_env_id --learner_ip your_learner_ip

$ python3.7 actor.py --env_id 0 --learner_ip 192.168.1.3

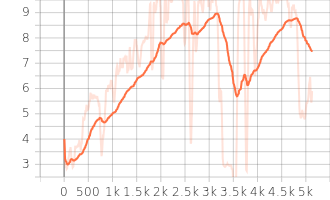
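If you run several environments, starting one actor per environment by hand gets tedious. Below is a minimal launcher sketch. It assumes, without confirmation from this repository, that a learner started with `--env_num N` expects N actors with `env_id` values 0 to N-1. It uses only the `--env_id` and `--learner_ip` flags shown in the commands above. The helper names `actor_commands` and `launch_actors` are hypothetical.

```python
# Hypothetical launcher: assumes the learner's --env_num equals the number of
# actors and that env_id runs from 0 to env_num - 1. Run from the project folder.
import subprocess
import sys
from typing import List


def actor_commands(
    env_num: int, learner_ip: str, python: str = sys.executable
) -> List[List[str]]:
    """Build one actor.py command line per environment id."""
    return [
        [python, "actor.py", "--env_id", str(env_id), "--learner_ip", learner_ip]
        for env_id in range(env_num)
    ]


def launch_actors(env_num: int, learner_ip: str) -> List[subprocess.Popen]:
    """Start every actor as a background process and return the handles."""
    return [subprocess.Popen(cmd) for cmd in actor_commands(env_num, learner_ip)]
```

For example, `procs = launch_actors(1, "192.168.1.3")` followed by `for p in procs: p.wait()` reproduces the single-actor command above. Passing the command as a list avoids shell quoting issues.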

- You can monitor training progress with TensorBoard.

$ tensorboard --logdir=./tensorboard

- Evaluate the trained model. Use the TensorBoard charts to pick the best checkpoint.

$ python run_evaluation.py --workspace_path /your/path --model_name reinforcement_model_xxxxx

$ python3.7 run_evaluation.py --workspace_path /home/kimbring2/dodge_the_creeps/project/ --model_name reinforcement_model_13000
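Picking the best checkpoint from the TensorBoard chart amounts to taking the saved step with the highest logged score. The sketch below assumes checkpoints are named `reinforcement_model_<step>`, following the example command above, and that you already have (step, score) pairs, for example exported from a TensorBoard scalar chart. The helper name `best_model_name` is hypothetical. Which scalar tag to use depends on what the learner logs.

```python
# Hypothetical helper: assumes checkpoint names follow reinforcement_model_<step>
# and that (step, score) pairs were exported from a TensorBoard scalar chart.
from typing import Dict, Iterable, Tuple


def best_model_name(
    scores: Iterable[Tuple[int, float]], saved_steps: Iterable[int]
) -> str:
    """Return the checkpoint name whose step has the highest logged score."""
    saved = set(saved_steps)
    # Only consider steps for which a checkpoint file actually exists.
    candidates: Dict[int, float] = {
        step: score for step, score in scores if step in saved
    }
    if not candidates:
        raise ValueError("no logged score matches a saved checkpoint step")
    best_step = max(candidates, key=candidates.get)
    return f"reinforcement_model_{best_step}"
```

The returned string can be passed directly as `--model_name` to `run_evaluation.py`. Restricting the search to saved steps matters because TensorBoard usually logs scores far more often than checkpoints are written.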

NOTE:

- My pretrained model is available on my Google Drive.

You can change the game speed with the turbo_mode flag of dodgeCreepEnv. When turbo_mode is True, the game runs at double speed.

env = dodgeCreepEnv(exec_path=GODOT_BIN_PATH, env_path=env_abs_path, turbo_mode=False)

The total number of frames per episode stays the same even when the game speed is increased. This means a model trained in turbo_mode can be used in normal mode.
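The arithmetic behind this claim is that speeding up the game shortens the real time an episode takes, but not the number of simulated frames. The sketch below assumes a fixed rate of 60 physics frames per second of game time (Godot 3's default physics FPS) and that turbo_mode simply doubles the speed multiplier. The repository may configure either differently, and the constant and function names are hypothetical.

```python
# Hypothetical arithmetic: assumes 60 physics frames per second of game time
# (Godot 3's default) and that turbo_mode doubles the speed multiplier.
EPISODE_GAME_SECONDS = 20
PHYSICS_FPS = 60


def episode_frames(game_seconds: float = EPISODE_GAME_SECONDS,
                   fps: int = PHYSICS_FPS) -> int:
    """Frames simulated per episode; independent of how fast the game runs."""
    return int(game_seconds * fps)


def episode_wall_seconds(game_seconds: float = EPISODE_GAME_SECONDS,
                         turbo_mode: bool = False) -> float:
    """Real time one episode takes under the assumed speed multiplier."""
    speed = 2.0 if turbo_mode else 1.0
    return game_seconds / speed
```

Under these assumptions, an episode is 1200 frames in both modes. It takes 20 seconds of real time normally and 10 seconds with turbo_mode, so the agent sees the same sequence length either way.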