- You will need to have installed Java 17 or later and Docker.

- Setup and configuration are all in the `./scripts/startup.sh` script; execute it from the root directory to get everything running.
  - Select `(1) cluster-1` or `(2) cluster` for your first exploration of this project.

- Go to localhost:3000 to explore the dashboards.
  - You shouldn't need to log in, but if you do, the username is `admin` and the password is `grafana`.

- Go to localhost:8888 to explore the contents of the aggregates.

- `./scripts/teardown.sh` will shut everything down (regardless of which cluster was selected) and will also remove all volumes.

This project showcases Kafka Streams metrics by deploying two types of applications, along with the dashboards available to monitor them.

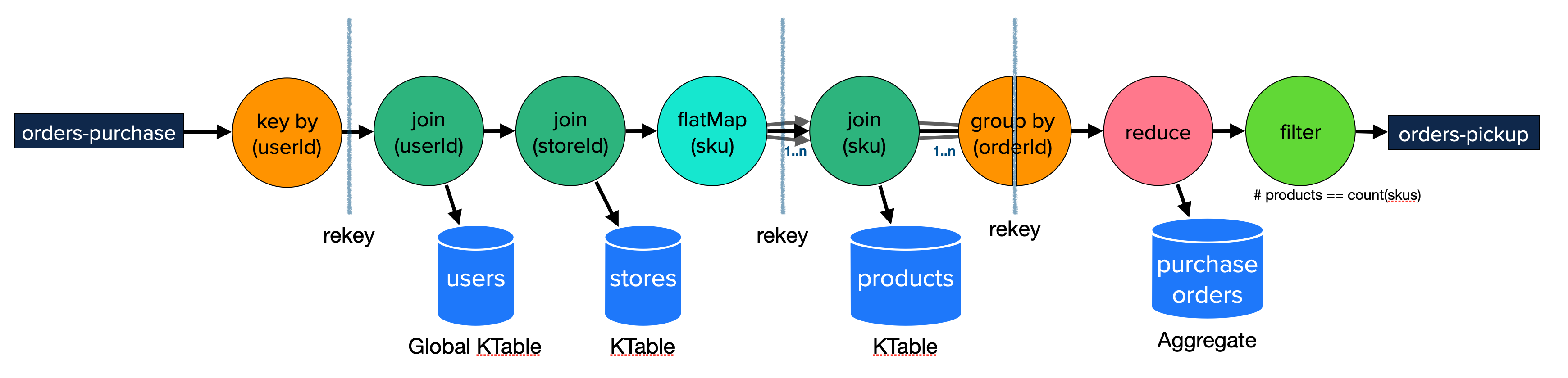

The first application is a purchase order system that takes orders, attaches stores and users, and prices them, and emits the result as a pickup order. This showcases KTables, Global KTables, Joins, aggregations, and more.

The primary stream of data flowing for purchase orders is shown in this diagram. The topology that hydrates and uses the tables is not shown. This is a logical representation of the topology based on the DSL components; the actual topology that is built includes additional source and sink nodes to handle the re-key process.

The group by (orderId) is shown split by a re-key because that is what actually happens when the StreamsBuilder builds the logical DSL topology. This is important to understand here, especially since the resulting re-keyed topic is then leveraged for analytics.
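To make the re-key step more concrete, here is a minimal plain-Java sketch of why changing the key to orderId implies a repartition: once the key changes, records must be routed again so that every item of an order lands on the same partition before it can be grouped. The class `RekeySketch`, its methods, and the `{orderId, sku}` item shape are hypothetical and used only for illustration. `hashCode()` is a stand-in for the partitioner (Kafka's default partitioner hashes the serialized key with murmur2), so the actual partition numbers will differ.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Illustrative only: a hypothetical helper, not code from this project or from Kafka Streams.
final class RekeySketch {

    // Stand-in partitioner (assumption): hashCode() modulo the partition count.
    static int partitionFor(String key, int numPartitions) {
        return Math.floorMod(key.hashCode(), numPartitions);
    }

    // Re-keys priced items, given as {orderId, sku}, by orderId and buckets them
    // by the partition the re-keyed record would be written to.
    static Map<Integer, List<String>> repartitionByOrderId(String[][] items, int numPartitions) {
        Map<Integer, List<String>> byPartition = new TreeMap<>();
        for (String[] item : items) {
            String orderId = item[0];
            String sku = item[1];
            byPartition
                .computeIfAbsent(partitionFor(orderId, numPartitions), p -> new ArrayList<>())
                .add(orderId + ":" + sku);
        }
        return byPartition;
    }
}
```

With four partitions, all items of the same order end up in the same bucket regardless of which SKU they carried as a key before the re-key.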

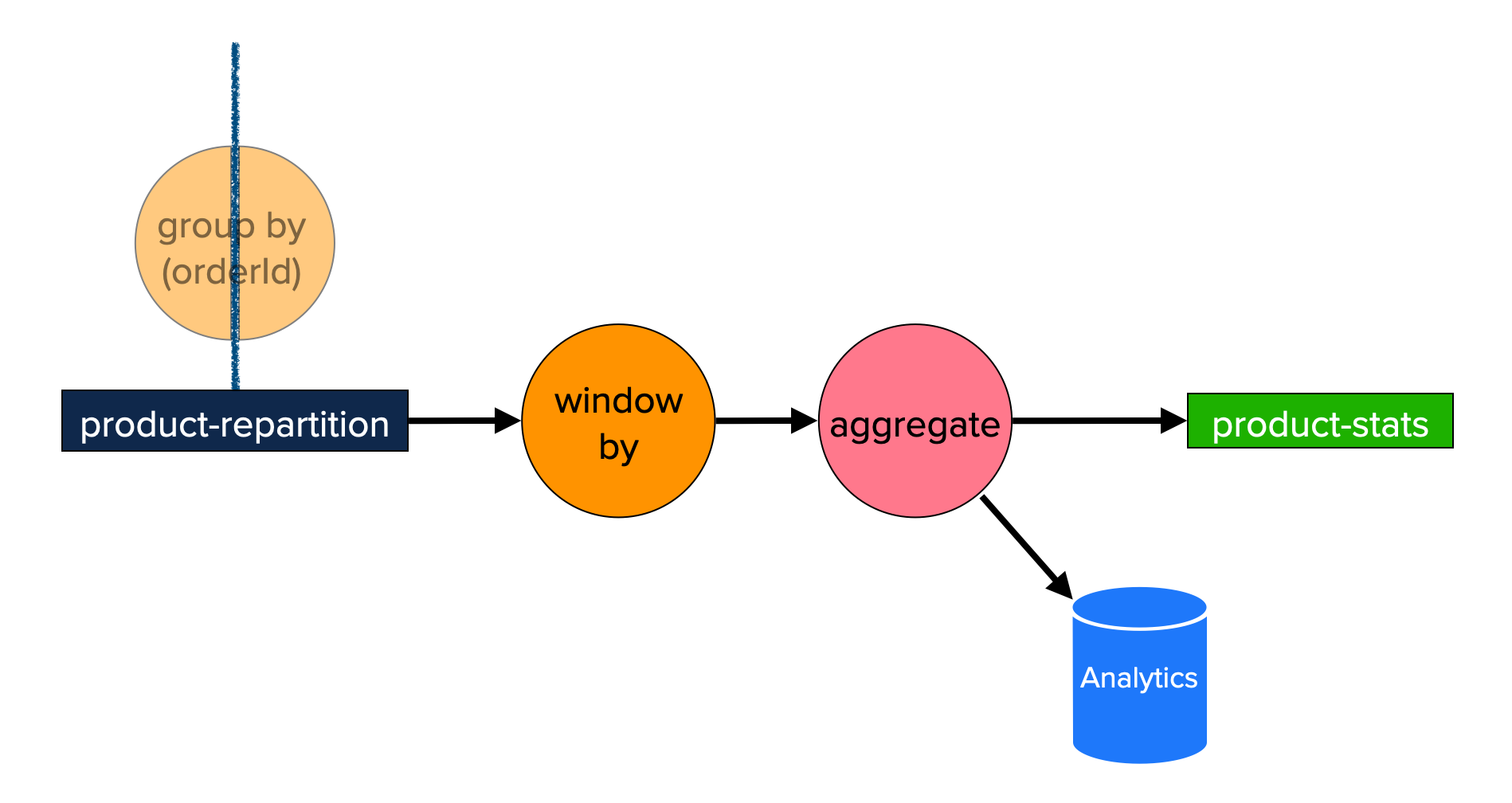

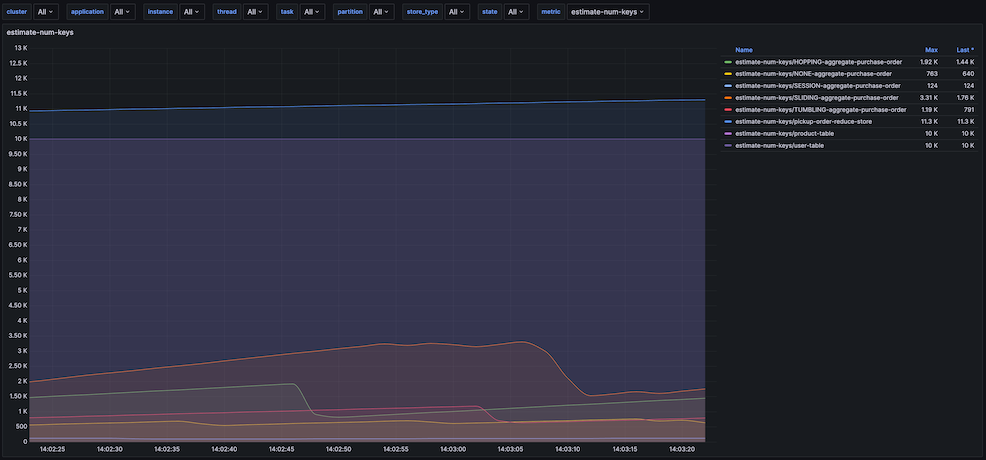

The second application performs analytics on the SKUs purchased in those orders. When the order/item messages (with SKUs priced) are joined back to the order, this application listens on that topic and extracts information to keep track of the SKUs purchased over a given period of time (window).

It tracks the re-keyed order by SKU and builds up windowed analytics. This includes all window types: tumbling, hopping, sliding, session, and even none as a non-windowed deployment. These aggregations track the quantity purchased of a given SKU for the given type of window. In a real-world scenario, I wouldn't use session windows for such an aggregation, but having the same application with all windowing options makes it a lot easier to see and compare the metrics between them.
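As a rough illustration of how tumbling and hopping windows bucket a purchase, the following plain-Java sketch computes window start times and sums quantities per tumbling window. It is not the Kafka Streams implementation and not code from this project; the class `WindowSketch`, its method names, and the `{timestampMs, quantity}` input shape are assumptions made for the example. Windows are treated as `[start, start + size)` and aligned to the epoch.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Illustrative only: plain-Java window arithmetic under the assumptions stated above.
final class WindowSketch {

    // Start of the single tumbling window (size == advance) that contains the timestamp.
    static long tumblingStart(long timestampMs, long sizeMs) {
        return timestampMs - (timestampMs % sizeMs);
    }

    // Starts of every hopping window [start, start + size) that contains the timestamp.
    static List<Long> hoppingStarts(long timestampMs, long sizeMs, long advanceMs) {
        List<Long> starts = new ArrayList<>();
        // Earliest advance-aligned start whose window can still contain the timestamp.
        long start = (Math.max(0L, timestampMs - sizeMs + advanceMs) / advanceMs) * advanceMs;
        for (; start <= timestampMs; start += advanceMs) {
            starts.add(start);
        }
        return starts;
    }

    // Sums the quantity purchased per tumbling window for one SKU.
    // Each purchase is given as {timestampMs, quantity}.
    static Map<Long, Long> quantityPerTumblingWindow(long[][] purchases, long sizeMs) {
        Map<Long, Long> totals = new TreeMap<>();
        for (long[] purchase : purchases) {
            totals.merge(tumblingStart(purchase[0], sizeMs), purchase[1], Long::sum);
        }
        return totals;
    }
}
```

A single purchase updates exactly one tumbling window but several hopping windows, which is one reason the same workload can produce noticeably different state store metrics for each window type.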

This project:

- Extensively leverages Docker and Docker Compose.

- Applications are built with Java 17 and run on a Java 17 JVM.

- Kafka leverages Confluent Community Edition containers, which run with a Java 17 JVM.

- Has Grafana dashboards for Kafka Cluster, Kafka Streams, Consumer, Producer, and JVM.

- Supports a variety of cluster configurations to better showcase the Kafka Cluster metrics and to validate that the dashboards are built to handle the various options.

- Setup and configuration are all in the `./scripts/startup.sh` script; execute it from the root directory to get everything running. You will be prompted to select an Apache Kafka cluster to start. Typically, I suggest `(2) cluster`, as having more brokers is a more realistic experience, but if you have limited memory/CPU on your machine, use `(1) cluster-1`.

1. cluster-1 -- 1 node (broker and controller)

2. cluster -- 4 brokers, 1 raft controller

3. cluster-3ctrls -- 4 brokers, 3 raft controllers

4. cluster-hybrid -- 4 brokers, 1 dedicated raft controller, 2 brokers are also kraft controllers

5. cluster-zk -- 4 brokers, 1 zookeeper controller

6. cluster-sasl -- 3 brokers, 1 raft controller, with SASL authentication & otel collector client-metrics reporter

7. cluster-lb -- 4 brokers, 1 raft controller, an nginx lb (9092)

8. cluster-native -- 4 brokers, 1 raft controller, apache/kafka-native images

9. cluster-cm -- 3 brokers, 1 raft controller, otel collector client-metrics reporter

The other options are for more advanced scenarios. `(3) cluster-3ctrls` is a typical deployment (3+ brokers and 3 controllers). The hybrid `(4) cluster-hybrid` ensures that the Kafka Cluster dashboards correctly handle metrics "math" by having a node that is both a broker and a controller, alongside nodes that are only brokers or only controllers. `(5) cluster-zk` makes sure the dashboards still support ZooKeeper. `(6) cluster-sasl` makes it possible to check the authentication dashboard provided in the Kafka Cluster dashboards, and it also shows how security works when setting up a Kafka cluster; be sure to generate the certificates first (see the certificates readme). `(7) cluster-lb` has an nginx proxy for each broker that allows you to navigate into it and use Linux's traffic control tool, `tc`, to add network latency. The best way to learn whether your dashboards are useful is to observe them when things are not going well; this cluster provides that scenario.

The grafana dashboards are handled with a local Grafana and Prometheus instance running from the monitoring module.

- https://localhost:3000

- Credentials (the configuration currently allows edit access, even without logging in)
  - username: `admin`
  - password: `grafana`

Both the purchase-order and aggregate applications have a simple UI allowing for inspection of the state stores.

- https://localhost:8888

- There are two pages to allow for inspection of the purchase-order state store and aggregate state store.

- This allows for learning about state stores and windowing.

Shutdown

- To shut it all down, use the `./scripts/teardown.sh` script. This will also remove all volumes.

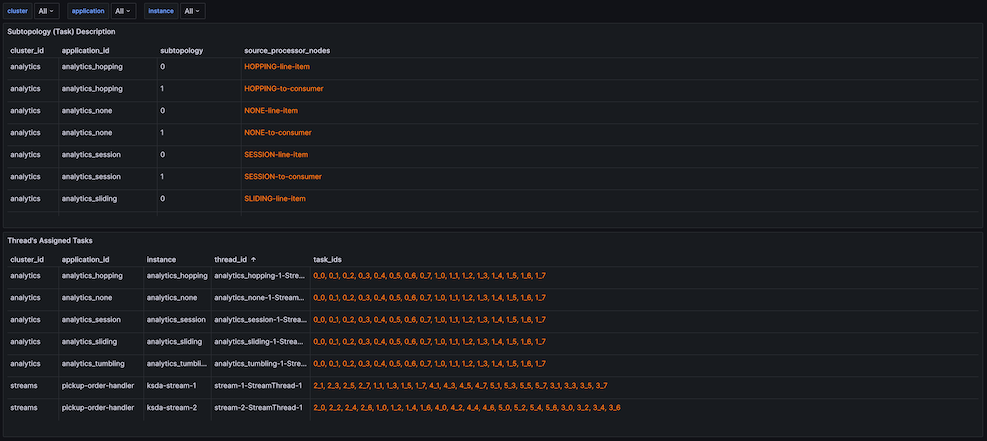

There are currently 12 Kafka Streams dashboards in this project. In addition, there are dashboards for Kafka Cluster, Producer, Consumer, and JVM. This documentation focuses on the Kafka Streams dashboards, but feel free to explore the other dashboards, especially for learning or for gaining ideas and examples for building dashboards for your own organization.

- This dashboard will give you insights into the Kafka Streams Topology along with the instance/thread a task is assigned.

- Aids greatly in understanding the task_id (subtopology_partition) used by other dashboards.
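As a small aid for reading the task_id label, this sketch splits a value such as `1_3` into its sub-topology and partition parts. The class `TaskIdSketch` is a hypothetical helper written for this example and is not part of the Kafka Streams API; it assumes the plain `subtopology_partition` form described above.

```java
// Illustrative only: a hypothetical helper, not part of the Kafka Streams API.
final class TaskIdSketch {
    final int subtopology;
    final int partition;

    TaskIdSketch(int subtopology, int partition) {
        this.subtopology = subtopology;
        this.partition = partition;
    }

    // Parses a task_id label such as "1_3" into sub-topology 1 and partition 3.
    static TaskIdSketch parse(String taskId) {
        int separator = taskId.indexOf('_');
        if (separator <= 0 || separator == taskId.length() - 1) {
            throw new IllegalArgumentException("expected <subtopology>_<partition>, got: " + taskId);
        }
        int subtopology = Integer.parseInt(taskId.substring(0, separator));
        int partition = Integer.parseInt(taskId.substring(separator + 1));
        return new TaskIdSketch(subtopology, partition);
    }
}
```

For example, `TaskIdSketch.parse("1_3")` describes the task that processes partition 3 of sub-topology 1.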

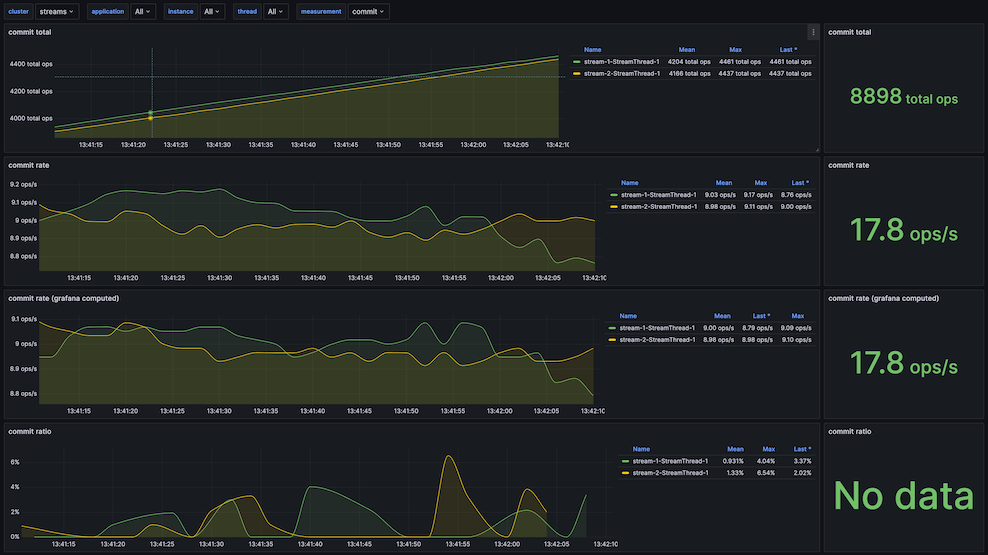

- Process, Commit, Poll statistics on each thread.

- The graphs keep threads/instances separated, while the number shown is the total (of what is selected).

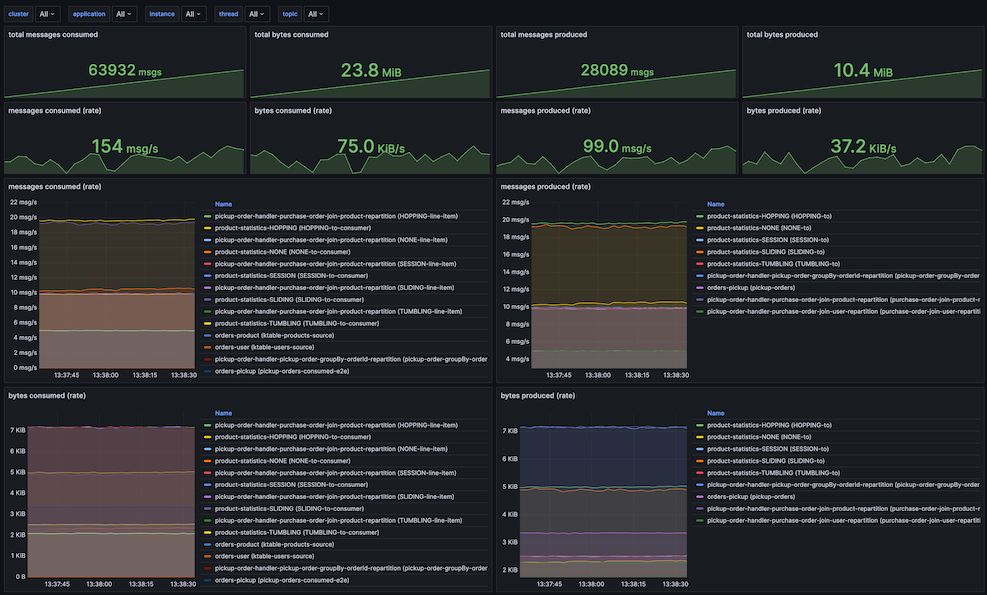

- Since 3.3, Kafka Streams has topic-level metrics for capturing the bytes and messages produced and consumed for a given topic.

- This dashboard provides a top-level summary of the variable selection as well as a graphical break-down on each partition.

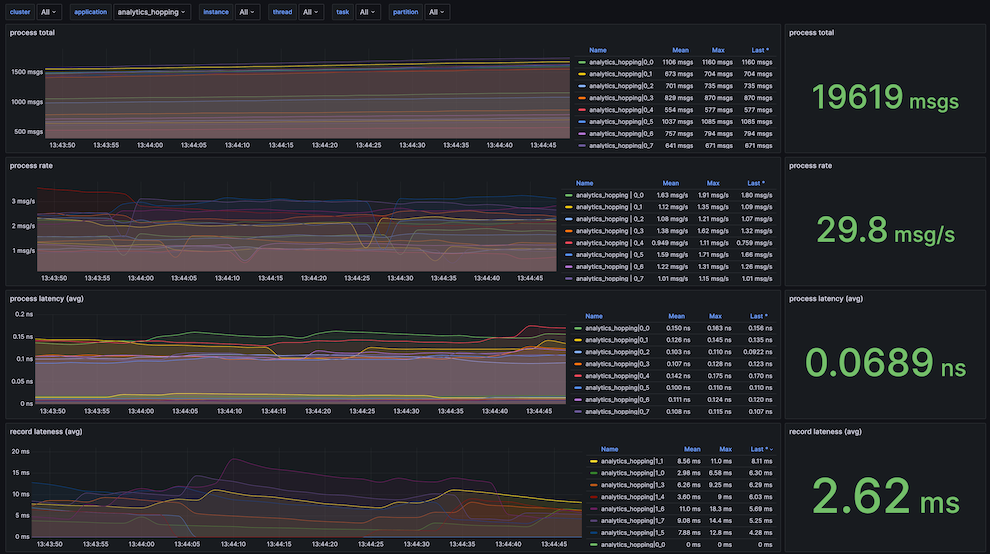

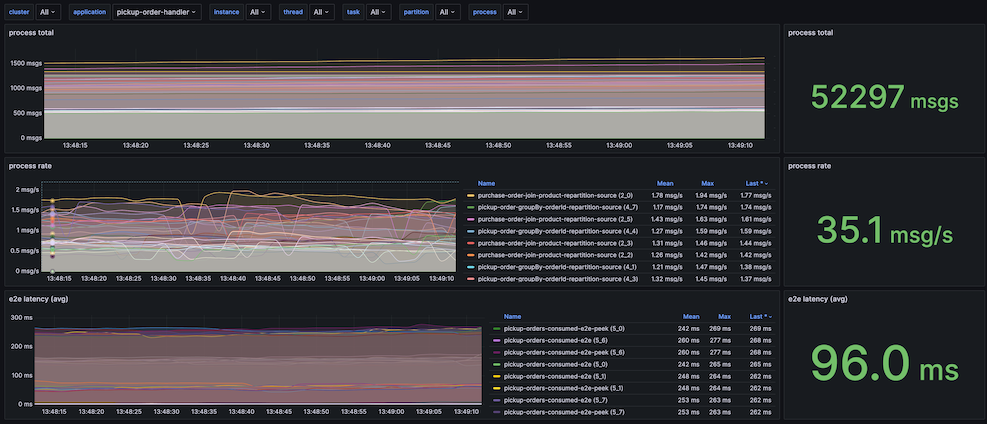

- It provides processing information and record information.

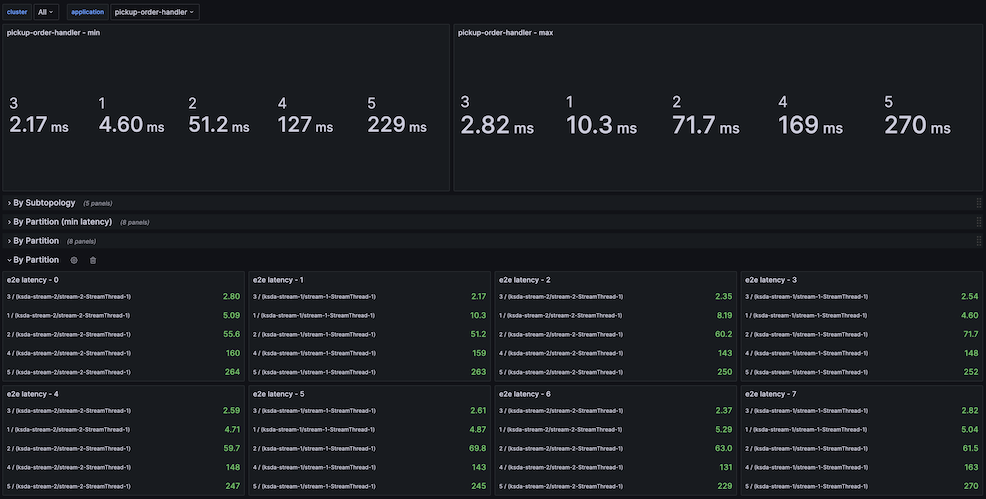

- Showcases the task end-to-end (e2e) metrics (introduced in Kafka 2.4).

- It allows for a breakdown by both sub-topology and by partition. An `application` must be selected and is defaulted.

- These are `debug` level metrics.

- The process is selected by name, but the graphs show it by task (subtopology_partition).

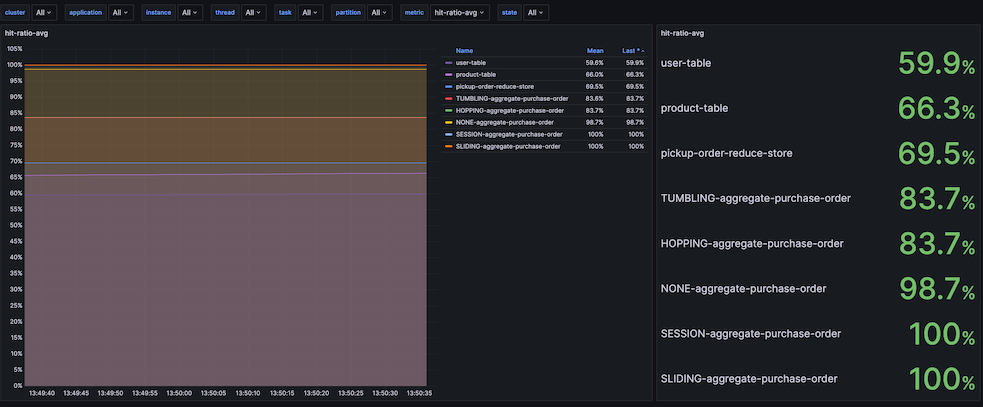

- The record cache is for materialized stores that have caching enabled.

- The metric is hit-ratio (max, avg, min).

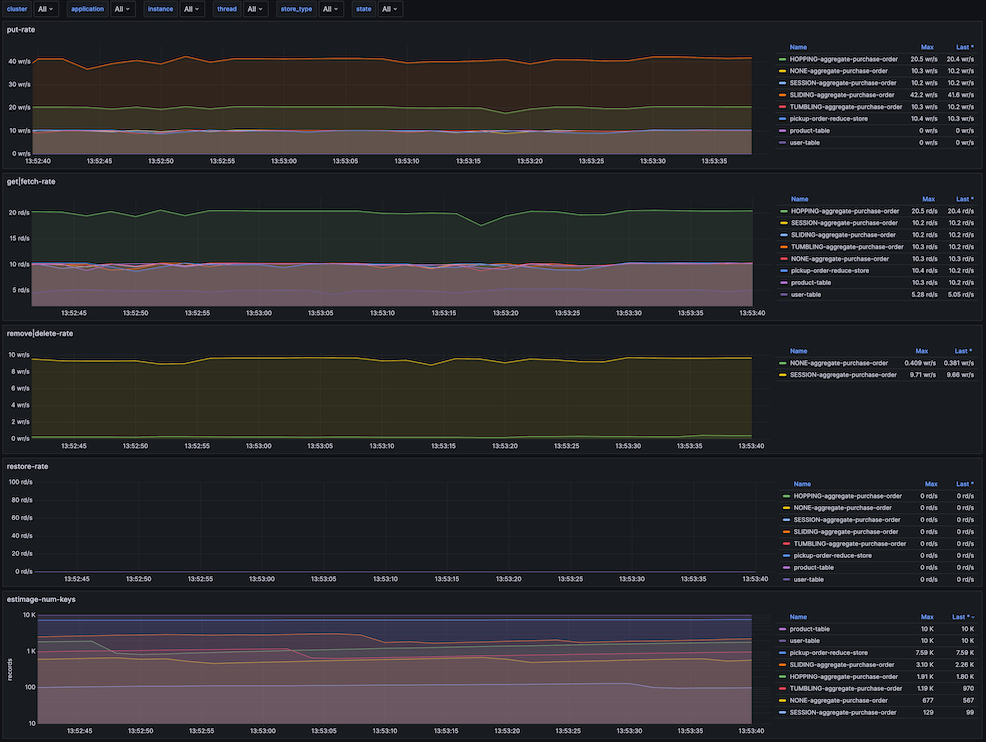

- Shows the put, get/fetch, delete, and count statistics in a single dashboard.

- `get` and `fetch` are placed on the same panel: which one is used depends on the store implementation, and only one of the two is ever used.

- `remove` and `delete` are also on the same panel, for the same reason.

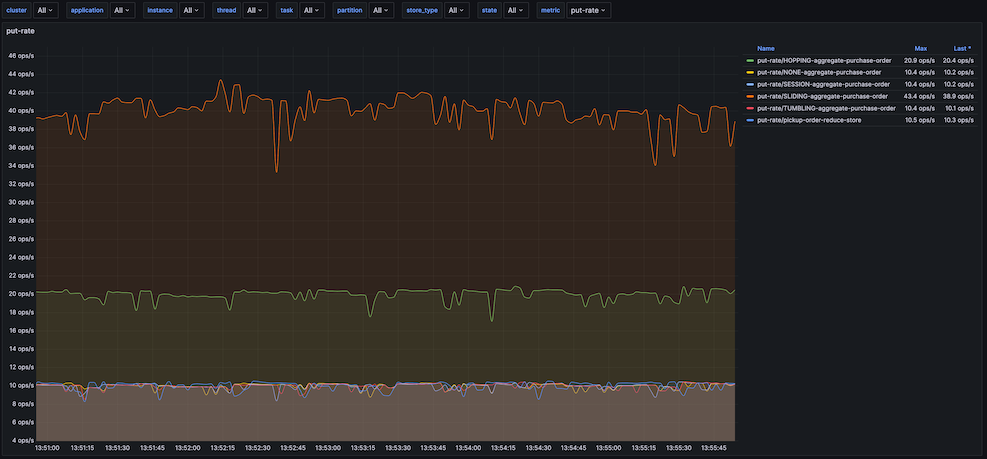

- The ability to view the rate metrics of the state stores.
  - `put`, `get`, `delete`, and more.

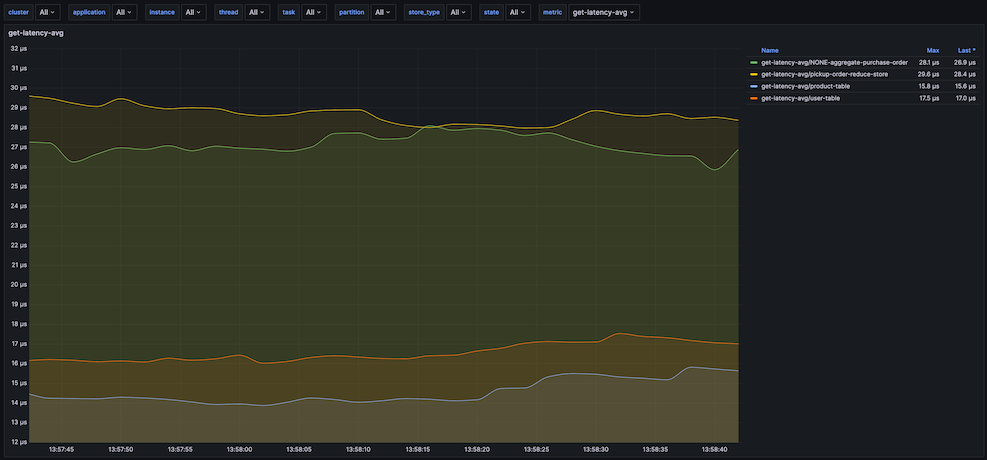

- The ability to view the latency metrics of the state stores.
  - `max` and `avg` latency metrics.
  - The same operations as for rate, such as `put`, `get`, and `delete`.

- All of the RocksDB metrics that are exposed through Kafka-Streams API.

- The time a given task is running within the stream thread.

- Each stream thread is displayed in its own panel.

- For this application, this information hasn't been the most useful; not sure if more sampling is needed to get a better display of data, or if it is just the nature of this metric.

- This project leverages Docker and Docker Compose for ease of demonstration.

- To minimize having to start up all components, there is a separate `docker-compose.yml` for each logical unit and a common bridge network, `ksd`.

- Docker Compose `.env` files are used to keep container names short and consistent while, hopefully, not clashing with any existing Docker containers you are using.

- One Docker image is built to run all Java applications.
  - The `.tar` file built with the Gradle `assembly` plugin is mounted, such as: `- ./{application}-1.0.tar:/app.tar`
  - To improve application start time, the .jar files of `kafka-clients` and `kafka-streams` are added to the Docker image and removed from the `.tar` file.
  - The `entrypoint.sh` will extract the tar file and then move the pre-loaded .jar files into the Gradle assembly structure.
  - The size of the `rocksdb` jar has the biggest impact, so preloading it improves startup time dramatically.

Apache Kafka Clients communicate directly with each broker, and it is up to the broker to tell the client its host & port for communication. This means that each broker needs a unique address for communication. Within docker, this means that each broker needs a unique localhost:port so any application running on your local machine can talk to each broker independently.

| broker | internal (container) bootstrap-servers | external (host-machine) bootstrap-servers |
|---|---|---|
| broker-1 | broker-1:9092 | localhost:19092 |
| broker-2 | broker-2:9092 | localhost:29092 |
| broker-3 | broker-3:9092 | localhost:39092 |
| broker-4 | broker-4:9092 | localhost:49092 |
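The naming pattern in the table can be expressed as a small helper, which is handy when building a `bootstrap.servers` value for a client running either on the host or inside the Docker network. This is a minimal sketch; the class `BrokerAddressSketch` and its methods are hypothetical and simply encode the table above.

```java
import java.util.stream.Collectors;
import java.util.stream.IntStream;

// Illustrative only: a hypothetical helper that encodes the address table above.
final class BrokerAddressSketch {

    // Address used from inside the Docker network, e.g. broker-2:9092.
    static String internal(int broker) {
        return "broker-" + broker + ":9092";
    }

    // Address used from the host machine, e.g. localhost:29092.
    static String external(int broker) {
        return "localhost:" + broker + "9092";
    }

    // Comma-separated bootstrap.servers value covering brokers 1..brokerCount.
    static String bootstrapServers(int brokerCount, boolean insideDocker) {
        return IntStream.rangeClosed(1, brokerCount)
            .mapToObj(n -> insideDocker ? internal(n) : external(n))
            .collect(Collectors.joining(","));
    }
}
```

A client only needs one reachable address to bootstrap, but listing several makes the initial connection more tolerant of a single broker being down.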

Broker discovery itself does not have this restriction and can be generalized. However, because Kafka communicates each broker's access information within the broker metadata, every broker must still be individually addressable as shown above.

Currently, broker-1 in each cluster adds a port mapping of 9092 to 19092, allowing for either `bootstrap.servers=localhost:19092` or `bootstrap.servers=localhost:9092`. So if you see this extra mapping in the broker-1 entry of a cluster, it is there only to aid the initial connection the client makes to the cluster. One of the first messages returned will be a metadata response that includes the information needed to communicate with the brokers hosting the topic-partitions the client needs to send data to or read data from.

    - '19092:19092'
    - '9092:19092'

- The Kafka applications can run on the host machine using the external names, or in containers using the internal hostnames.

- To see the Kafka Streams applications in the dashboard, they must be running within the same network; the `applications` project does this.

- Each application can have multiple instances up and running. There are 4 partitions for all topics, so four instances are possible.

- A single Docker image is built to run any application. This image includes the JMX Prometheus Exporter rules, and it also has a health check for Kafka Streams that leverages Jolokia and the `kafka-metrics-count` metric.

- To improve startup time of the applications, the Docker image preloads the jars for `kafka-clients` and `kafka-streams` and excludes them from the distribution tar. With RocksDB being a rather large jar file, this has been shown to greatly improve startup time, as the image needs to untar the distribution on startup.

- To reduce build times, the Docker image is only built if it doesn't exist or if `-Pforce-docker=true` is part of the build process.

- The monitoring-related containers are in the `monitoring` docker-compose, which leverages the Prometheus agent for obtaining metrics.
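The note above about four partitions allowing four instances can be sketched as follows. This is a simplified round-robin model written for illustration, not the assignor Kafka Streams actually uses, and the class `InstanceAssignmentSketch` is hypothetical. It only shows that once there are more instances than partitions, the extra instances have nothing to process.

```java
import java.util.ArrayList;
import java.util.List;

// Illustrative only: a simplified round-robin model, not the Kafka Streams task assignor.
final class InstanceAssignmentSketch {

    // Returns, for each instance, the list of partitions it would own.
    static List<List<Integer>> assign(int partitions, int instances) {
        List<List<Integer>> owned = new ArrayList<>();
        for (int i = 0; i < instances; i++) {
            owned.add(new ArrayList<>());
        }
        for (int p = 0; p < partitions; p++) {
            owned.get(p % instances).add(p);
        }
        return owned;
    }
}
```

With 4 partitions, `assign(4, 2)` gives each of two instances two partitions, while `assign(4, 5)` leaves the fifth instance with an empty list.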

These applications are built with the following open-source libraries.

- kafka-streams

- kafka-clients

- jackson

- lombok

- slf4j-api

- logback

- quartz

- undertow

- apache-commons-csv

- apache-commons-lang3

- jcommander

While I do not use lombok for enterprise applications, it does come in handy for demonstration projects to minimize the boilerplate code that is shown.

- Kafka Streams is a framework; there is no need to use an additional framework.

- It is always good to learn how to use the Kafka libraries without additional frameworks.

- See Tools README for the benefits this can provide.