[Paper] [PyTorch Implementation] [Paddle Implementation] [Huggingface Gradio Demo] [Colab]

Please note that the code and model of this repo will be deleted at 23:59 (+08), 11 Aug, 2021! For reasons concerning the high-level policy of our organization, we have had to make this difficult decision. Thank you for your understanding.

This repository contains the official PyTorch implementation of the paper:

Paint Transformer: Feed Forward Neural Painting with Stroke Prediction,

Songhua Liu*, Tianwei Lin*, Dongliang He, Fu Li, Ruifeng Deng, Xin Li, Errui Ding, Hao Wang (* indicates equal contribution)

ICCV 2021 (Oral)

- Linux or macOS

- Python 3

- PyTorch 1.7+ and other dependencies (torchvision, visdom, dominate, and other common Python libraries)


Clone this repository:

```bash
git clone https://github.com/Huage001/PaintTransformer
cd PaintTransformer
```

Download the pretrained model from Google Drive and move it to the inference directory:

```bash
mv [Download Directory]/model.pth inference/
cd inference
```

Inference:

```bash
python inference.py
```

- The input image path, output path, etc. can be set in the main function.
- Notably, the main function takes a flag `serial`:
  - If `serial` is True, strokes are rendered serially. This uses less video memory but takes more time.
  - If `serial` is False, strokes are rendered in parallel. This uses more video memory but is faster.
  - If animated results are required, `serial` must be True.
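The serial/parallel trade-off can be illustrated with a simplified compositing model. This sketch is not the repository's renderer: it assumes each stroke has already been rasterized into a per-pixel alpha mask with one flat RGB color, and it blends strokes with standard "over" compositing. The helper names `composite_serial` and `composite_parallel` are hypothetical. Serial mode keeps a single canvas in memory and naturally yields one frame per stroke (which is what an animation needs); parallel mode materializes all K stroke layers at once, so memory grows with K × H × W. Both give the same final image.

```python
import numpy as np


def composite_serial(canvas, colors, alphas):
    """Blend strokes one at a time (hypothetical helper, not the repo's API).

    canvas: (H, W, 3) float array in [0, 1]
    colors: (K, 3) RGB color for each stroke
    alphas: (K, H, W) per-stroke coverage masks in [0, 1]
    Returns the final canvas and one intermediate frame per stroke.
    """
    out = canvas.copy()
    frames = []
    for color, alpha in zip(colors, alphas):
        a = alpha[..., None]                  # (H, W, 1), broadcasts over RGB
        out = out * (1.0 - a) + color * a     # "over" blend of a single stroke
        frames.append(out.copy())             # frames usable for an animation
    return out, frames


def composite_parallel(canvas, colors, alphas):
    """Blend all K strokes at once; peak memory scales with K * H * W."""
    a = alphas[..., None]                     # (K, H, W, 1)
    one_minus = 1.0 - a
    # rev[k] = prod_{j >= k} (1 - a_j): reverse cumulative product over strokes
    rev = np.cumprod(one_minus[::-1], axis=0)[::-1]
    # after[k] = prod_{j > k} (1 - a_j): how much later strokes cover stroke k
    after = np.concatenate([rev[1:], np.ones_like(rev[:1])], axis=0)
    layers = colors[:, None, None, :] * a * after   # (K, H, W, 3)
    return canvas * rev[0] + layers.sum(axis=0)


# Usage: random strokes on a white 8x8 canvas
rng = np.random.default_rng(0)
canvas = np.ones((8, 8, 3))
colors = rng.random((5, 3))
alphas = rng.random((5, 8, 8))

final_serial, frames = composite_serial(canvas, colors, alphas)
final_parallel = composite_parallel(canvas, colors, alphas)
print(np.allclose(final_serial, final_parallel), len(frames))
```

The parallel version trades memory for speed by holding every stroke layer simultaneously, while the serial loop never holds more than one canvas plus the saved frames.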


Train:

Before training, start the visdom server:

```bash
python -m visdom.server
```

Then, simply run:

```bash
cd train
bash train.sh
```

You can monitor the training status at http://localhost:8097/, and models will be saved in the checkpoints/painter folder.
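Before launching `train.sh`, it can help to confirm that the visdom server is actually listening. This is a minimal standard-library sketch, not part of the repository: the helper name `visdom_is_up` is hypothetical, it assumes the default port 8097 from the monitoring URL, and it only checks that the TCP port accepts connections, not that the service behind it is visdom.

```python
import socket


def visdom_is_up(host: str = "localhost", port: int = 8097, timeout: float = 1.0) -> bool:
    """Return True if something accepts TCP connections at host:port (hypothetical helper)."""
    try:
        # create_connection raises OSError (e.g. ConnectionRefusedError, timeout) on failure
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

For example, `visdom_is_up()` returning False suggests `python -m visdom.server` has not been started yet.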


Feel free to try the other training options in train.sh.

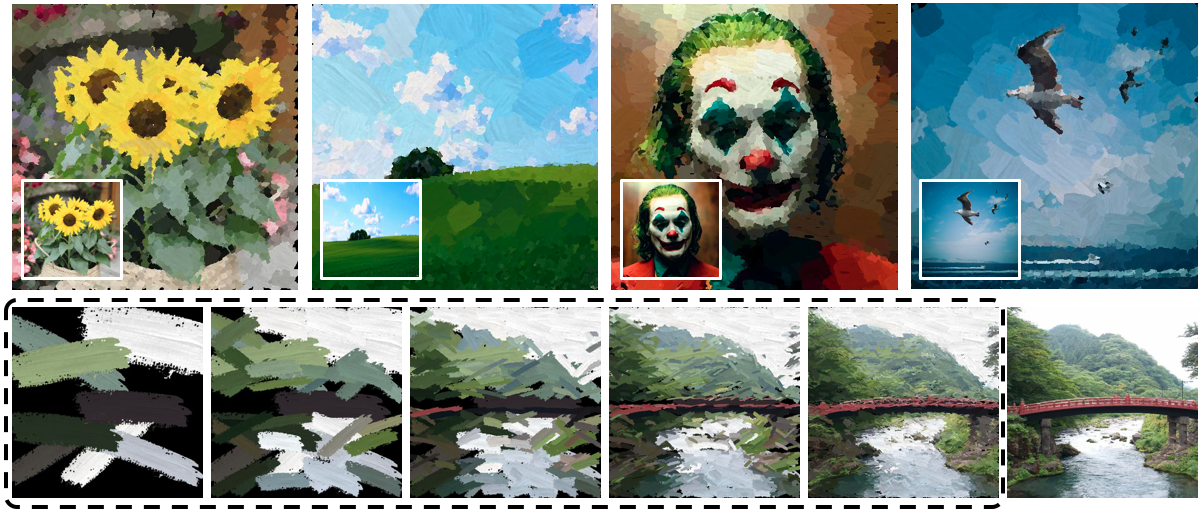

| Input | Animated Output |
|---|---|


If you find the ideas or code useful for your research, please cite:

```bibtex
@inproceedings{liu2021paint,
  title={Paint Transformer: Feed Forward Neural Painting with Stroke Prediction},
  author={Liu, Songhua and Lin, Tianwei and He, Dongliang and Li, Fu and Deng, Ruifeng and Li, Xin and Ding, Errui and Wang, Hao},
  booktitle={Proceedings of the IEEE International Conference on Computer Vision},
  year={2021}
}
```

- This implementation is built on the code framework of pytorch-CycleGAN-and-pix2pix by Junyan Zhu et al.