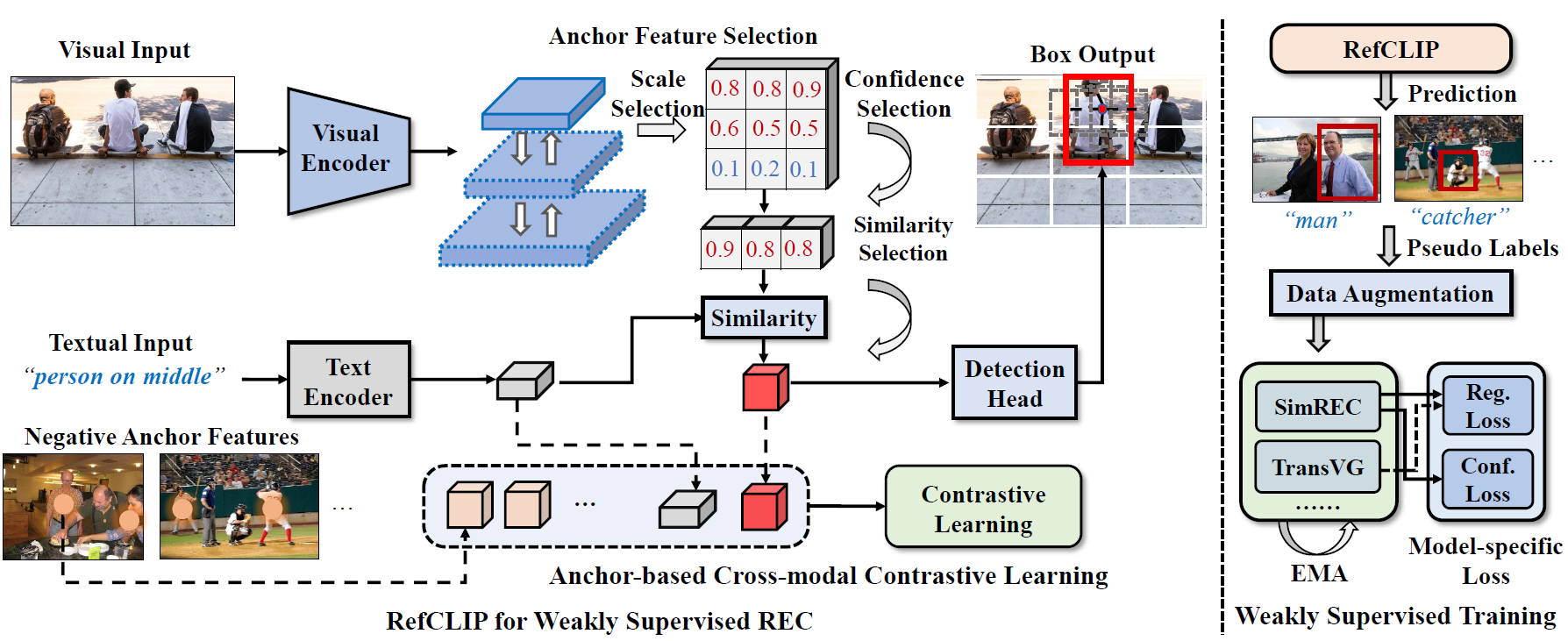

This is the official implementation of "RefCLIP: A Universal Teacher for Weakly Supervised Referring Expression Comprehension". In this paper, we propose a novel one-stage contrastive model called RefCLIP, which achieves weakly supervised referring expression comprehension (REC) via anchor-based cross-modal contrastive learning. Based on RefCLIP, we further propose a weakly supervised training scheme for common REC models: any REC model can be trained on pseudo-labels generated by RefCLIP.
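
A minimal, simplified sketch of anchor-based cross-modal contrastive learning, written in plain Python for illustration only (it is not the repository's implementation). It assumes each image already provides a list of anchor feature vectors and each expression a single text feature vector. For each expression, the best-matching anchor in its own image is selected as the positive; as one simple choice, the anchors selected for the other images in the batch serve as negatives in an InfoNCE-style loss. The function names and the temperature of 0.07 are hypothetical.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm > 0 else 0.0


def best_anchor(anchors: List[Vector], text: Vector) -> int:
    """Index of the anchor most similar to the text feature (anchor-text matching)."""
    scores = [cosine(a, text) for a in anchors]
    return max(range(len(scores)), key=scores.__getitem__)


def anchor_contrastive_loss(
    batch_anchors: List[List[Vector]],  # per image: list of anchor features
    batch_texts: List[Vector],          # per image: one expression feature
    temperature: float = 0.07,          # hypothetical value
) -> float:
    """Mean InfoNCE loss: the matched anchor of image i is the positive for
    text i; the matched anchors of the other images are the negatives."""
    # Select one anchor per image by anchor-text matching.
    selected = [
        anchors[best_anchor(anchors, text)]
        for anchors, text in zip(batch_anchors, batch_texts)
    ]
    total = 0.0
    for i, text in enumerate(batch_texts):
        logits = [cosine(a, text) / temperature for a in selected]
        # Numerically stable -log softmax of the positive logit.
        m = max(logits)
        log_denom = m + math.log(sum(math.exp(z - m) for z in logits))
        total += log_denom - logits[i]
    return total / len(batch_texts)
```

A real training loop would compute this on tensors with autograd; the list-based version only shows how the positive and the negatives are chosen.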

- Clone this repo

```shell
git clone https://github.com/AnonymousPaperID5299/RefCLIP.git
cd RefCLIP
```

- Create a conda virtual environment and activate it

```shell
conda create -n refclip python=3.7 -y
conda activate refclip
```

- Install PyTorch following the official installation instructions

- Install apex following the official installation guide

- Compile the DCN layer:

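```shell
cd utils/DCN
./make.sh
```

- Return to the repository root and install the remaining dependencies, including the spaCy `en_vectors_web_lg` word vectors

```shell
cd ../..
pip install -r requirements.txt
wget https://github.com/explosion/spacy-models/releases/download/en_vectors_web_lg-2.1.0/en_vectors_web_lg-2.1.0.tar.gz -O en_vectors_web_lg-2.1.0.tar.gz
pip install en_vectors_web_lg-2.1.0.tar.gz
```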
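
After installation, a quick sanity check can confirm that the main Python dependencies are importable. This sketch uses only `importlib.util.find_spec`; the module names (`torch`, `apex`, `spacy`, `en_vectors_web_lg`) are our assumption of what the installation steps above provide, and the compiled DCN extension is not checked because its module name is not given here.

```python
import importlib.util
from typing import Dict, Iterable

# Assumed import names for the dependencies installed above.
REQUIRED_MODULES = ("torch", "apex", "spacy", "en_vectors_web_lg")


def check_modules(names: Iterable[str] = REQUIRED_MODULES) -> Dict[str, bool]:
    """Return {module_name: importable?} without actually importing the modules."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


if __name__ == "__main__":
    for name, ok in check_modules().items():
        print(f"{name:20s} {'ok' if ok else 'MISSING'}")
```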

- Download the images and generate the annotations according to SimREC.

- Download the pretrained weights of YoloV3 from OneDrive.

- The project structure should look like the following:

```
|-- RefCLIP
    |-- data
        |-- anns
            |-- refcoco.json
            |-- refcoco+.json
            |-- refcocog.json
            |-- refclef.json
        |-- images
            |-- train2014
                |-- COCO_train2014_000000000072.jpg
                |-- ...
            |-- refclef
                |-- 25.jpg
                |-- ...
    |-- config
    |-- datasets
    |-- models
    |-- utils
```

- NOTE: our YoloV3 is trained on the COCO training images, excluding the images that appear in the validation and test splits of RefCOCO, RefCOCO+, and RefCOCOg.
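
Before training, it can help to verify that the data layout matches the project tree above. The sketch below lists the expected paths relative to the `RefCLIP` root (taken directly from the tree) and reports which ones are missing; the helper name `missing_paths` is hypothetical.

```python
from pathlib import Path
from typing import List

# Expected paths from the project tree, relative to the RefCLIP root.
EXPECTED_PATHS = [
    "data/anns/refcoco.json",
    "data/anns/refcoco+.json",
    "data/anns/refcocog.json",
    "data/anns/refclef.json",
    "data/images/train2014",
    "data/images/refclef",
    "config",
    "datasets",
    "models",
    "utils",
]


def missing_paths(root: str = ".") -> List[str]:
    """Return the expected paths that do not exist under `root`."""
    base = Path(root)
    return [p for p in EXPECTED_PATHS if not (base / p).exists()]


if __name__ == "__main__":
    missing = missing_paths()
    print("layout ok" if not missing else "missing:\n  " + "\n  ".join(missing))
```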

Train RefCLIP on a dataset:

```shell
python train.py --config ./config/[DATASET_NAME].yaml
```

Evaluate a trained checkpoint:

```shell
python test.py --config ./config/[DATASET_NAME].yaml --eval-weights [PATH_TO_CHECKPOINT_FILE]
```

- Use a trained RefCLIP model to generate pseudo-labels.

- Train an REC model on these pseudo-labels, following the training instructions of RealGIN, SimREC, TransVG, or MattNet.
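
As a rough illustration of the pseudo-labelling step, the sketch below assumes RefCLIP has already scored a set of candidate anchor boxes for each expression, keeps the highest-scoring box as the pseudo ground truth, and writes the results to JSON. The `Prediction` record, its field names, and the `[x1, y1, x2, y2]` box format are hypothetical; each downstream model (RealGIN, SimREC, TransVG, MattNet) expects its own annotation format, so the output would need converting.

```python
import json
from dataclasses import dataclass
from typing import Dict, Iterable, List, Sequence


@dataclass
class Prediction:
    image_id: int
    expression: str
    boxes: List[Sequence[float]]  # hypothetical: candidate boxes as [x1, y1, x2, y2]
    scores: List[float]           # hypothetical: RefCLIP matching score per box


def to_pseudo_label(pred: Prediction) -> Dict:
    """Keep the top-scoring candidate box as the pseudo ground-truth box."""
    if not pred.boxes or len(pred.boxes) != len(pred.scores):
        raise ValueError("need one score per candidate box")
    best = max(range(len(pred.scores)), key=pred.scores.__getitem__)
    return {"image_id": pred.image_id,
            "expression": pred.expression,
            "bbox": list(pred.boxes[best])}


def write_pseudo_labels(preds: Iterable[Prediction], path: str) -> None:
    """Write all pseudo-labels to a single JSON file."""
    with open(path, "w") as f:
        json.dump([to_pseudo_label(p) for p in preds], f)
```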

| Method | RefCOCO val | RefCOCO testA | RefCOCO testB | RefCOCO+ val | RefCOCO+ testA | RefCOCO+ testB | RefCOCOg val-g | ReferItGame test |
|---|---|---|---|---|---|---|---|---|
| RefCLIP | 60.36 | 58.58 | 57.13 | 40.39 | 40.45 | 38.86 | 47.87 | 39.58 |

| Method | RefCOCO val | RefCOCO testA | RefCOCO testB | RefCOCO+ val | RefCOCO+ testA | RefCOCO+ testB | RefCOCOg val-g | ReferItGame test |
|---|---|---|---|---|---|---|---|---|
| RefCLIP_RealGIN | 59.43 | 58.49 | 57.36 | 37.08 | 38.70 | 35.82 | 46.10 | 37.56 |
| RefCLIP_SimREC | 62.57 | 62.70 | 61.22 | 39.13 | 40.81 | 36.59 | 45.68 | 42.33 |
| RefCLIP_TransVG | 64.08 | 63.67 | 63.93 | 39.32 | 39.54 | 36.29 | 45.70 | 42.64 |
| RefCLIP_MattNet | 69.31 | 67.23 | 71.27 | 43.01 | 44.80 | 41.09 | 51.31 | - |
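
To compare the two results tables, the per-split gain of each pseudo-label-trained model over RefCLIP itself is a simple subtraction. The numbers below are copied from the tables, with `-` stored as `None`; the helper `gains` is only illustrative.

```python
from typing import Dict, List, Optional

SPLITS = ["RefCOCO val", "RefCOCO testA", "RefCOCO testB",
          "RefCOCO+ val", "RefCOCO+ testA", "RefCOCO+ testB",
          "RefCOCOg val-g", "ReferItGame test"]

# Values copied from the results tables; None marks a "-" entry.
RESULTS: Dict[str, List[Optional[float]]] = {
    "RefCLIP":         [60.36, 58.58, 57.13, 40.39, 40.45, 38.86, 47.87, 39.58],
    "RefCLIP_RealGIN": [59.43, 58.49, 57.36, 37.08, 38.70, 35.82, 46.10, 37.56],
    "RefCLIP_SimREC":  [62.57, 62.70, 61.22, 39.13, 40.81, 36.59, 45.68, 42.33],
    "RefCLIP_TransVG": [64.08, 63.67, 63.93, 39.32, 39.54, 36.29, 45.70, 42.64],
    "RefCLIP_MattNet": [69.31, 67.23, 71.27, 43.01, 44.80, 41.09, 51.31, None],
}


def gains(model: str, baseline: str = "RefCLIP") -> Dict[str, Optional[float]]:
    """Per-split difference (model - baseline), rounded to 2 decimals."""
    out: Dict[str, Optional[float]] = {}
    for split, m, b in zip(SPLITS, RESULTS[model], RESULTS[baseline]):
        out[split] = None if m is None or b is None else round(m - b, 2)
    return out
```

For example, `gains("RefCLIP_MattNet")["RefCOCO val"]` gives 8.95 (69.31 - 60.36), while RealGIN trained on RefCLIP pseudo-labels is 3.31 points below RefCLIP on RefCOCO+ val.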

```bibtex
@InProceedings{Jin_2023_CVPR,
    author    = {Jin, Lei and Luo, Gen and Zhou, Yiyi and Sun, Xiaoshuai and Jiang, Guannan and Shu, Annan and Ji, Rongrong},
    title     = {RefCLIP: A Universal Teacher for Weakly Supervised Referring Expression Comprehension},
    booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
    month     = {June},
    year      = {2023},
    pages     = {2681-2690}
}
```

Thanks a lot for the nicely organized code from the following repos: