This repository contains the supporting notebooks for a Medium article series on training an LLM from scratch with a custom domain corpus and Amazon SageMaker.

The series covers the following topics:

Learn how to acquire and preprocess your custom domain corpus for training the LLM.

Understand how to customize the vocabulary of the LLM to fit your dataset.

Learn how to tokenize your dataset and prepare it for training.

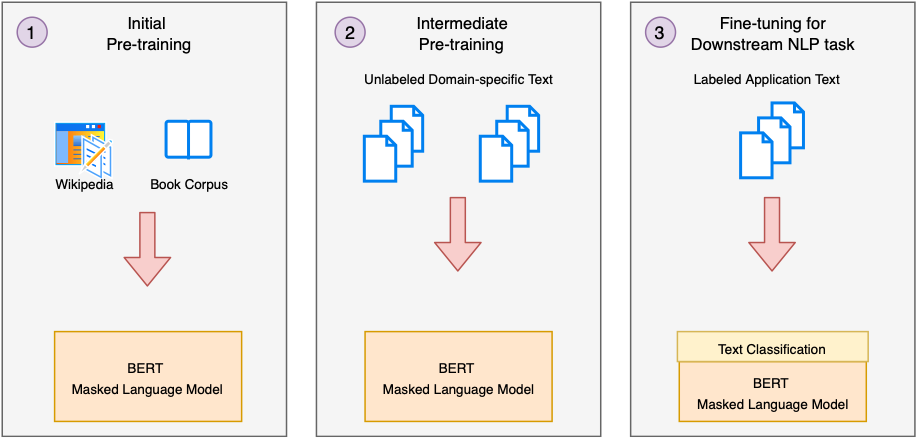

Discover how to perform intermediate training on the LLM using Amazon SageMaker.

Explore how to deploy the trained LLM as an endpoint for inference.
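
As a rough illustration of the vocabulary customization topic, the sketch below finds frequent words in a corpus that a base vocabulary does not contain. It is not taken from the notebooks, which may use a different method. The function name `propose_new_tokens`, its parameters, and the toy corpus are all hypothetical, and the whitespace-level word splitting stands in for a real tokenizer.

```python
from collections import Counter
import re


def propose_new_tokens(corpus, base_vocab, max_new_tokens=3, min_count=2):
    """Return frequent corpus words that the base vocabulary lacks.

    Hypothetical helper for illustration; not part of any library.
    """
    counts = Counter()
    for document in corpus:
        # Lowercase and keep runs of letters/digits; a real tokenizer does more.
        counts.update(re.findall(r"[a-z0-9]+", document.lower()))
    candidates = [
        (word, count)
        for word, count in counts.items()
        if word not in base_vocab and count >= min_count
    ]
    # Most frequent first; ties broken alphabetically so output is deterministic.
    candidates.sort(key=lambda item: (-item[1], item[0]))
    return [word for word, _ in candidates[:max_new_tokens]]


# Toy data, invented for illustration only.
corpus = [
    "The patient presented with tachycardia and dyspnea.",
    "Dyspnea and tachycardia resolved after treatment.",
    "The treatment plan was reviewed with the patient.",
]
base_vocab = {"the", "with", "and", "after", "was", "plan", "patient"}

new_tokens = propose_new_tokens(corpus, base_vocab)
print(new_tokens)
```

With a Hugging Face tokenizer, candidates like these are commonly registered with `tokenizer.add_tokens(...)` followed by `model.resize_token_embeddings(len(tokenizer))`; whether that fits your setup depends on the model and tokenizer you start from.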

To follow along with the notebooks in this repository, you need an AWS account with access to Amazon SageMaker and a custom domain corpus ready for training.

To get started, clone this repository and open the notebooks in the order they appear in the series. Each notebook contains detailed instructions and explanations for its topic.