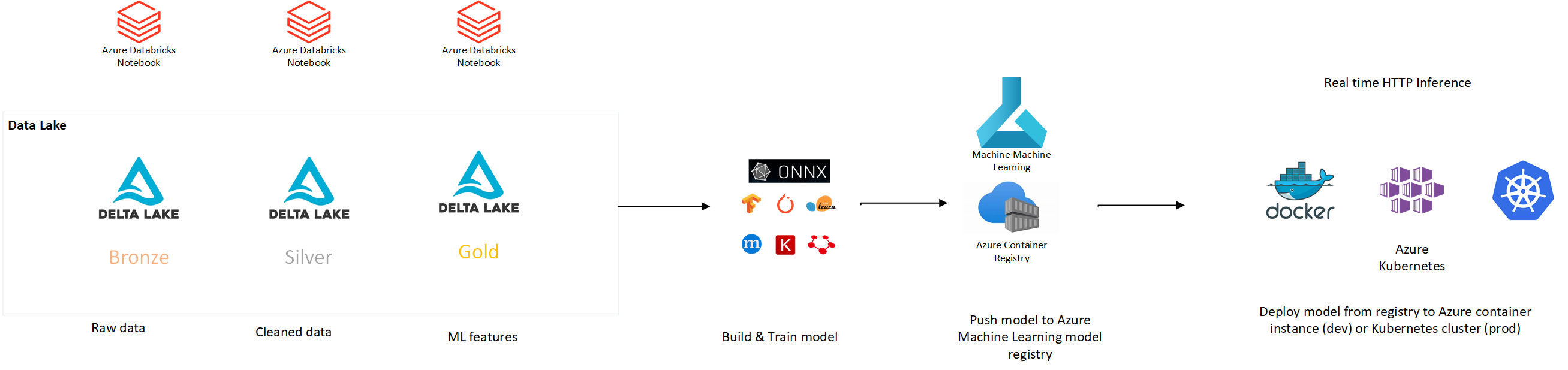

This repo implements MLOps for operationalizing machine learning at scale using Azure Databricks, MLflow, Azure Machine Learning, Azure Container Services and Azure Kubernetes Service. The sample architecture adopted in this project is pictured below:

This repo is configured to serve real-time model inference. Real-time (online) inference provides low-latency scoring, with predictions returned immediately. The model is exposed via a REST API and deployed as a managed web service on an Azure Container Instance (dev/test environment) or an Azure Kubernetes Service cluster (production).
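As a rough illustration of what calling such a REST endpoint can look like, here is a minimal client sketch using only the Python standard library. The function names, the `{"data": [...]}` payload shape and the bearer-key header are assumptions made for this example; the actual request schema is whatever the deployed scoring script expects.

```python
import json
import urllib.request


def build_scoring_request(scoring_uri, rows, api_key=None):
    """Build a POST request for a REST scoring endpoint.

    The {"data": [...]} payload shape is an assumption for illustration.
    """
    body = json.dumps({"data": rows}).encode("utf-8")
    headers = {"Content-Type": "application/json"}
    if api_key:
        # Only needed if key or token authentication is enabled on the endpoint.
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        scoring_uri, data=body, headers=headers, method="POST"
    )


def score(scoring_uri, rows, api_key=None, timeout=10):
    """Send the request and return the decoded JSON response."""
    request = build_scoring_request(scoring_uri, rows, api_key)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

Calling `score(scoring_uri, [[1.0, 2.0]])` with the scoring URI of your deployment would return whatever JSON the service produces.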

You will need the following resources to get started:

In your Azure subscription, create an Azure Databricks workspace in its own resource group.

In your Azure subscription, create an Azure Machine Learning workspace in its own resource group. You do not need to add compute.

In Azure DevOps, create a project to host your MLOps pipeline. Import the code to your DevOps repo.

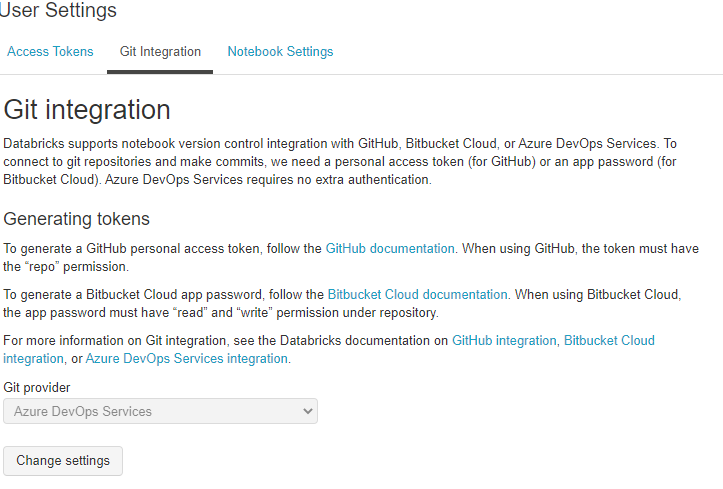

Connect your notebooks to the Azure DevOps repo. To configure this in the Databricks workspace, go to "User Settings" and click "Git Integration".

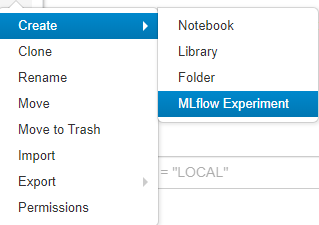

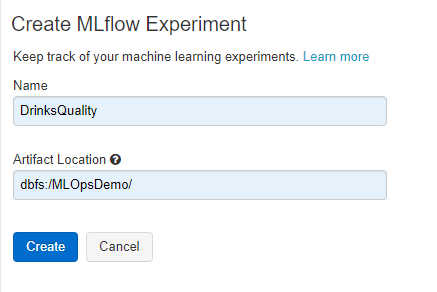

Create the MLflow experiment in the Databricks workspace.
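The experiment is usually created through the workspace UI. If you prefer to script this step, one option is the MLflow REST API that Databricks exposes under `/api/2.0/mlflow`. The sketch below builds and sends that request with the standard library; the function names and the example experiment path are hypothetical, and it assumes you already have a workspace URL and an access token.

```python
import json
import urllib.request


def build_create_experiment_request(host, token, experiment_path):
    """Build the POST request that creates an MLflow experiment.

    host: Databricks workspace URL (assumed to include the https:// scheme).
    experiment_path: workspace path of the experiment (hypothetical example:
    "/Users/someone@example.com/my-experiment").
    """
    url = host.rstrip("/") + "/api/2.0/mlflow/experiments/create"
    body = json.dumps({"name": experiment_path}).encode("utf-8")
    headers = {
        "Authorization": f"Bearer {token}",
        "Content-Type": "application/json",
    }
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


def create_experiment(host, token, experiment_path, timeout=30):
    """Send the request and return the new experiment id."""
    request = build_create_experiment_request(host, token, experiment_path)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))["experiment_id"]
```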

Create a Service Principal in your Azure Active Directory and give it Contributor permissions on the resource group where your Azure Machine Learning workspace is deployed.

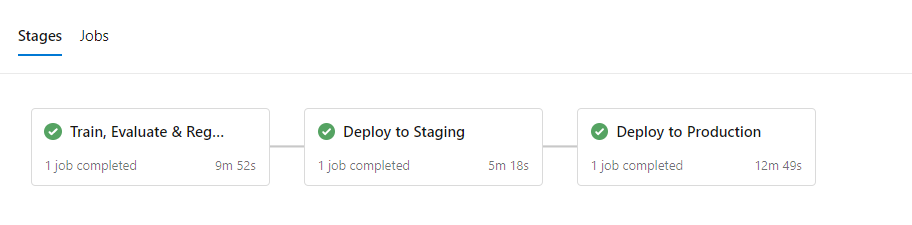

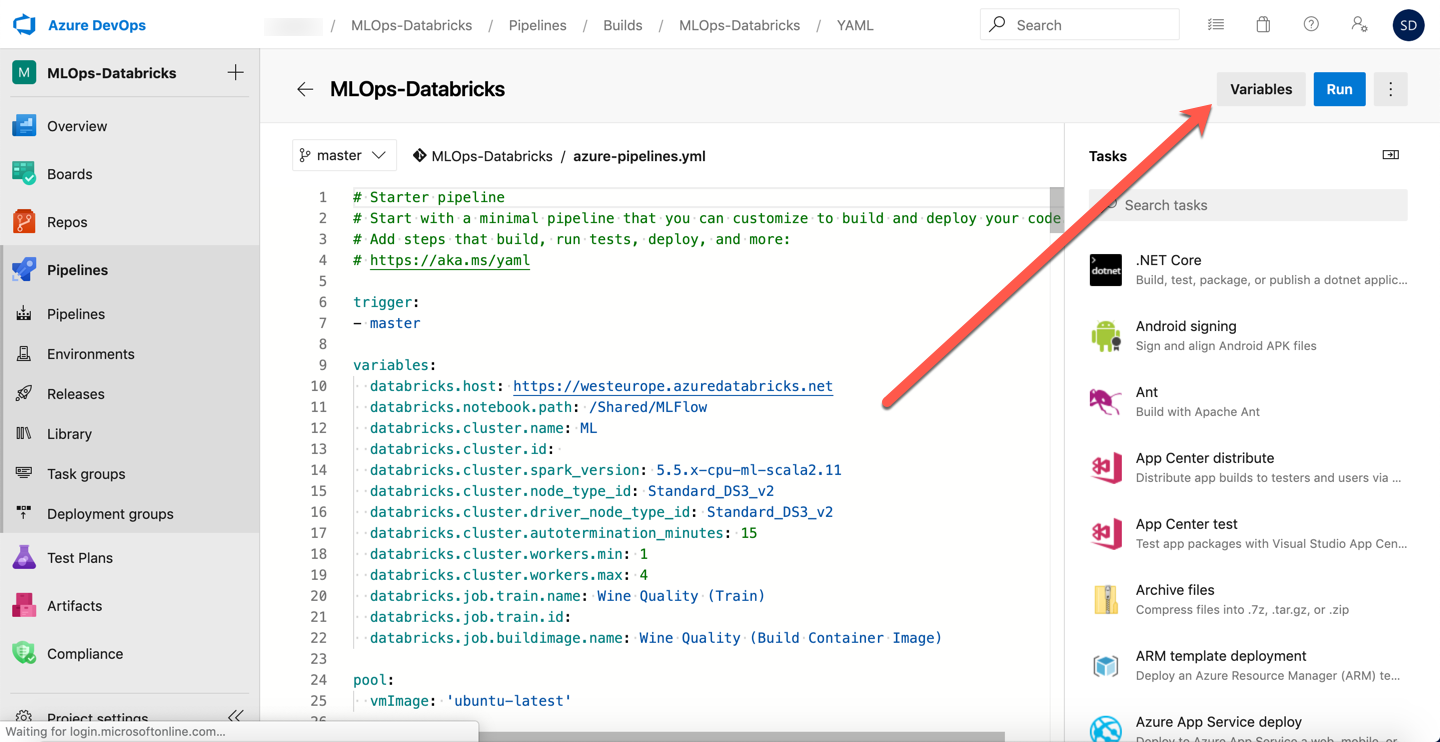
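To show how the service principal's tenant id, client id and secret are used for non-interactive authentication, here is a minimal sketch of the OAuth 2.0 client credentials flow against the Microsoft identity platform token endpoint. The function names are hypothetical, and the pipeline in this repo may authenticate through an SDK rather than a raw HTTP call.

```python
import json
import urllib.parse
import urllib.request


def build_token_request(tenant_id, client_id, client_secret):
    """Build a client-credentials token request for Azure Resource Manager."""
    url = f"https://login.microsoftonline.com/{tenant_id}/oauth2/v2.0/token"
    form = urllib.parse.urlencode(
        {
            "grant_type": "client_credentials",
            "client_id": client_id,
            "client_secret": client_secret,
            # Scope for the Azure management plane.
            "scope": "https://management.azure.com/.default",
        }
    ).encode("ascii")
    headers = {"Content-Type": "application/x-www-form-urlencoded"}
    return urllib.request.Request(url, data=form, headers=headers, method="POST")


def fetch_access_token(tenant_id, client_id, client_secret, timeout=30):
    """Send the request and return the bearer token string."""
    request = build_token_request(tenant_id, client_id, client_secret)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))["access_token"]
```

A successful token request is a quick way to confirm that the three values are correct before storing them as pipeline variables.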

Use the azure-pipelines.yml file to create your Build Pipeline.

This Build Pipeline uses "Multi-Stage Pipelines", so enable this feature in Azure DevOps. The staging step deploys the model to an Azure Container Instance for dev/test purposes. The final step promotes the model to an Azure Kubernetes Service deployment, which represents the production stage.

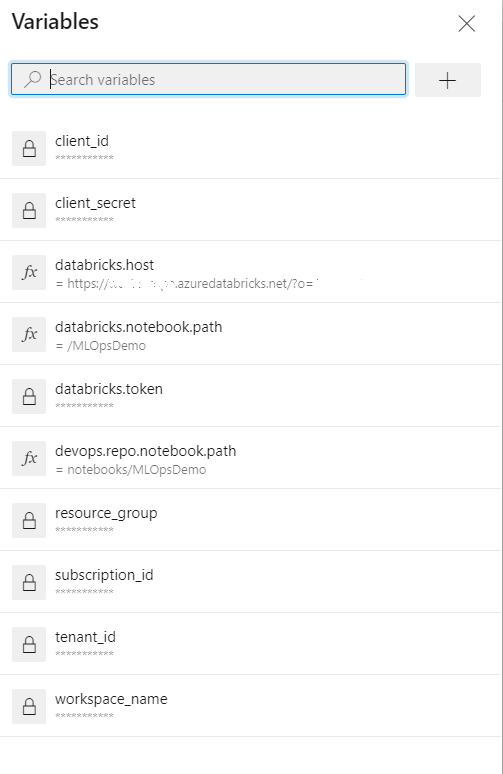

To connect your Azure Databricks workspace with the Build Pipeline, you need to generate an access token in Databricks.

This token must be stored as an encrypted secret named databricks.token in your Azure DevOps Build Pipeline.

List of Pipeline Variables required:

- client_id: The SPN client id

- client_secret: The SPN secret

- tenant_id: The SPN tenant id

- subscription_id: your subscription id

- workspace_location: the region used to deploy your Azure Machine Learning workspace

- workspace_name: your Azure Machine Learning workspace name

- resource_group: your Azure Machine Learning resource group

- databricks.notebook.path: Notebook path in the Databricks workspace

- devops.repo.notebook.path: Notebook path in the DevOps repo

- databricks.token: Databricks access token
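A script step can check up front that all of the variables listed above are available. Azure Pipelines exposes non-secret variables to scripts as environment variables, upper-cased and with dots replaced by underscores; secret variables such as databricks.token have to be mapped explicitly in the step's `env` section. The helper names below are hypothetical, and the sketch assumes every variable has been mapped into the environment.

```python
import os

# Variable names as listed in this README.
REQUIRED_VARIABLES = [
    "client_id",
    "client_secret",
    "tenant_id",
    "subscription_id",
    "workspace_location",
    "workspace_name",
    "resource_group",
    "databricks.notebook.path",
    "devops.repo.notebook.path",
    "databricks.token",
]


def env_name(variable):
    """Map a pipeline variable name to its environment variable name."""
    return variable.replace(".", "_").upper()


def missing_variables(environ=None):
    """Return the pipeline variables that are unset or empty."""
    environ = os.environ if environ is None else environ
    return [name for name in REQUIRED_VARIABLES if not environ.get(env_name(name))]


if __name__ == "__main__":
    missing = missing_variables()
    if missing:
        raise SystemExit("Missing pipeline variables: " + ", ".join(missing))
```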

Note the variables section in the azure-pipelines.yml file. Ensure you set databricks.host to your Databricks workspace URL.
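To illustrate how databricks.host, databricks.token and the two notebook path variables could fit together, the sketch below builds a call to the Databricks Workspace API that imports a notebook source file into the workspace. This is an assumption about one way to use these values, not a description of what azure-pipelines.yml actually runs, and the function name is hypothetical.

```python
import base64
import json
import urllib.request


def build_notebook_import_request(host, token, workspace_path, source, language="PYTHON"):
    """Build a POST request for the workspace import endpoint.

    host: value of databricks.host (assumed to include the https:// scheme).
    workspace_path: value of databricks.notebook.path.
    source: text of the notebook file found at devops.repo.notebook.path.
    """
    url = host.rstrip("/") + "/api/2.0/workspace/import"
    payload = {
        "path": workspace_path,
        "format": "SOURCE",
        "language": language,
        # The API expects the file content base64-encoded.
        "content": base64.b64encode(source.encode("utf-8")).decode("ascii"),
        "overwrite": True,
    }
    headers = {
        "Authorization": f"Bearer {token}",
        "Content-Type": "application/json",
    }
    return urllib.request.Request(
        url, data=json.dumps(payload).encode("utf-8"), headers=headers, method="POST"
    )
```

Passing the returned request to `urllib.request.urlopen` performs the import; an HTTP error at that point usually indicates a wrong host URL or an invalid token.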

Disclaimer: This work is inspired by and based on efforts done by Sascha Dittman & Ahmed Mostafa.