This repository contains the official implementation of the paper:

- *A. Kratimenos, *K. Avramidis, C. Garoufis, A. Zlatintsi, & P. Maragos, "Augmentation Methods on Monophonic Audio for Instrument Classification in Polyphonic Music," 2020 28th European Signal Processing Conference (EUSIPCO), Amsterdam, 2021, pp. 156-160, doi: 10.23919/Eusipco47968.2020.9287745.

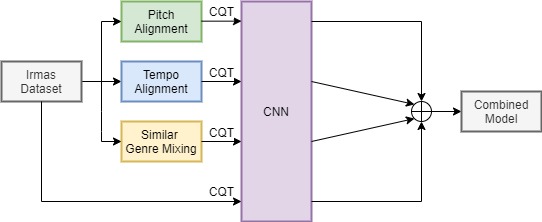

Instrument classification is one of the fields in Music Information Retrieval (MIR) that has attracted a lot of research interest. However, most of that work deals with monophonic music, while efforts on polyphonic material mainly focus on predominant instrument recognition or multi-instrument recognition for entire tracks. We present an approach for instrument classification in polyphonic music using monophonic training data that involves mixing-augmentation methods. Specifically, we experiment with pitch- and tempo-based synchronization, as well as mixes of tracks with similar music genres. Further, we propose a custom CNN model that uses the augmented training data efficiently, and we discuss a range of suitable evaluation metrics. The tempo-sync and genre techniques stand out, achieving a label ranking average precision (LRAP) of 81% while detecting up to 9 instruments in over 2300 testing tracks.
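The mixing idea described above can be illustrated with a minimal sketch. This is not the code from this repository: the function name `mix_monophonic`, the averaging and peak-normalization scheme, and the toy class count are all assumptions made for illustration, and the pitch, tempo, and genre matching steps from the paper are left out entirely. It only shows how several single-instrument clips might be summed into one pseudo-polyphonic training example with a multi-hot label.

```python
import numpy as np


def mix_monophonic(clips, labels, num_classes):
    """Mix equal-length single-instrument clips into one training example.

    Hypothetical helper for illustration only, not the repository's API.
    clips:  list of 1-D float arrays, all with the same length
    labels: list of integer instrument ids, one per clip
    Returns (mixture, multi_hot_target).
    """
    if len(clips) != len(labels):
        raise ValueError("need exactly one label per clip")

    # Stack to shape (n_clips, n_samples); raises if the lengths differ.
    stacked = np.stack([np.asarray(c, dtype=np.float32) for c in clips])

    # Average the waveforms, then peak-normalize (an assumed mixing scheme).
    mixture = stacked.mean(axis=0)
    peak = np.max(np.abs(mixture))
    if peak > 0:
        mixture = mixture / peak

    # Multi-hot target: every instrument present in the mix is marked 1.
    target = np.zeros(num_classes, dtype=np.float32)
    target[list(labels)] = 1.0
    return mixture, target


# Toy usage: two synthetic "instruments" (pure tones), 4 placeholder classes.
sr = 22050
t = np.arange(sr) / sr
clip_a = np.sin(2 * np.pi * 220.0 * t)
clip_b = np.sin(2 * np.pi * 330.0 * t)
mixture, target = mix_monophonic([clip_a, clip_b], [0, 2], num_classes=4)
```

In a real pipeline the clips would first be aligned (for example by tempo or pitch, or grouped by genre, as the paper explores) before being mixed; that step is deliberately omitted here.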

If you find our paper useful in your research, please consider citing:

    @inproceedings{kratimenos2020ic,
      title={Augmentation Methods on Monophonic Audio for Instrument Classification in Polyphonic Music},
      author={Kratimenos, A. and Avramidis, K. and Garoufis, C. and Zlatintsi, A. and Maragos, P.},
      booktitle={2020 28th European Signal Processing Conference (EUSIPCO)},
      pages={156-160},
      year={2020},
      doi={10.23919/Eusipco47968.2020.9287745}
    }