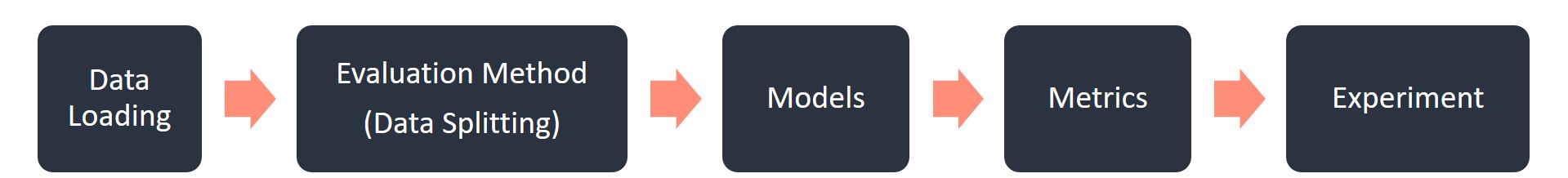

Cornac is a Python recommender system library for easy, effective, and efficient experiments. It is simple and handy, designed from the ground up to faithfully reflect the standard steps researchers take to implement and evaluate personalized recommendation models.

Website | Documentation | Models | Examples | Preferred.AI

Cornac currently supports Python 3 (version 3.6 is recommended). There are several ways to install it:

- From PyPI (you may need a C++ compiler):

```sh
pip3 install cornac
```

- From Anaconda:

```sh
conda install cornac -c conda-forge
```

- From the GitHub source (for latest updates):

```sh
pip3 install Cython
git clone https://github.com/PreferredAI/cornac.git
cd cornac
python3 setup.py install
```

Note:

Additional dependencies required by models are listed here.

Some of the algorithms use OpenMP to support multi-threading. On macOS, to run those algorithms efficiently, you might need to install gcc from Homebrew to get an OpenMP-capable compiler:

```sh
brew install gcc && brew link gcc
```

If you want to utilize your GPUs, you might consider:

- TensorFlow installation instructions.

- PyTorch installation instructions.

- cuDNN (for Nvidia GPUs).
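After installing, a quick sanity check can confirm which of the packages mentioned above are importable in the current environment. The snippet below is a minimal sketch using only the standard library; the helper names `check_optional_packages` and `torch_sees_gpu` are hypothetical and not part of Cornac.

```python
import importlib.util


def check_optional_packages(names=("cornac", "tensorflow", "torch")):
    """Return {package_name: bool} telling whether each package can be found."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


def torch_sees_gpu():
    """Return True only if PyTorch imports cleanly and reports a CUDA device."""
    if importlib.util.find_spec("torch") is None:
        return False
    try:
        import torch
        return bool(torch.cuda.is_available())
    except Exception:
        # A broken or partial installation is treated as "no GPU available".
        return False


if __name__ == "__main__":
    for name, found in check_optional_packages().items():
        print(f"{name}: {'installed' if found else 'not found'}")
    print("PyTorch CUDA available:", torch_sees_gpu())
```

A package reported as "not found" only matters if the model you plan to run depends on it.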

Flow of an Experiment in Cornac

Load the built-in MovieLens 100K dataset (will be downloaded if not cached):

```python
from cornac.datasets import movielens

ml_100k = movielens.load_100k()
```

Split the data based on ratio:

```python
from cornac.eval_methods import RatioSplit

ratio_split = RatioSplit(data=ml_100k, test_size=0.2, rating_threshold=4.0, seed=123)
```

Here we are comparing Biased MF, PMF, and BPR:

```python
from cornac.models import MF, PMF, BPR

mf = MF(k=10, max_iter=25, learning_rate=0.01, lambda_reg=0.02, use_bias=True)
pmf = PMF(k=10, max_iter=100, learning_rate=0.001, lamda=0.001)
bpr = BPR(k=10, max_iter=200, learning_rate=0.001, lambda_reg=0.01)
```

Define metrics used to evaluate the models:

```python
import cornac

mae = cornac.metrics.MAE()
rmse = cornac.metrics.RMSE()
rec_20 = cornac.metrics.Recall(k=20)
ndcg_20 = cornac.metrics.NDCG(k=20)
auc = cornac.metrics.AUC()
```

Put everything together into an experiment and run it:

```python
from cornac import Experiment

exp = Experiment(eval_method=ratio_split,
                 models=[mf, pmf, bpr],
                 metrics=[mae, rmse, rec_20, ndcg_20, auc],
                 user_based=True)
exp.run()
```

Output:

| | MAE | RMSE | Recall@20 | NDCG@20 | AUC | Train (s) | Test (s) |
|---|---|---|---|---|---|---|---|
| MF | 0.7441 | 0.9007 | 0.0622 | 0.0534 | 0.2952 | 0.0791 | 1.3119 |
| PMF | 0.7490 | 0.9093 | 0.0831 | 0.0683 | 0.4660 | 8.7645 | 2.1569 |
| BPR | N/A | N/A | 0.1449 | 0.1124 | 0.8750 | 0.8898 | 1.3769 |
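To make the ranking columns in the table easier to read, here is a minimal sketch of the textbook definitions of Recall@k and NDCG@k with binary relevance, written in plain Python. It is not Cornac's implementation. It assumes that `rating_threshold=4.0` marks held-out ratings of at least 4.0 as relevant; the function names and the toy data are hypothetical.

```python
import math

RATING_THRESHOLD = 4.0  # same value as rating_threshold in the walkthrough

# Hypothetical held-out ratings for one user: item -> rating.
test_ratings = {"a": 5.0, "b": 2.0, "c": 4.0, "e": 4.5, "f": 3.0}

# Hypothetical model output: items ordered from highest to lowest predicted score.
ranked_items = ["a", "b", "c", "d"]

# Assumption: ratings at or above the threshold count as positive feedback.
relevant_items = {item for item, r in test_ratings.items() if r >= RATING_THRESHOLD}


def recall_at_k(ranked, relevant, k):
    """Fraction of relevant items that appear in the top-k of the ranking."""
    if not relevant:
        return 0.0
    hits = sum(1 for item in ranked[:k] if item in relevant)
    return hits / len(relevant)


def ndcg_at_k(ranked, relevant, k):
    """Discounted gain of the top-k, normalized by the best achievable gain."""
    if not relevant:
        return 0.0
    dcg = sum(1.0 / math.log2(rank + 2)
              for rank, item in enumerate(ranked[:k]) if item in relevant)
    ideal_hits = min(k, len(relevant))
    idcg = sum(1.0 / math.log2(rank + 2) for rank in range(ideal_hits))
    return dcg / idcg


print("Recall@3:", round(recall_at_k(ranked_items, relevant_items, 3), 4))
print("NDCG@3:", round(ndcg_at_k(ranked_items, relevant_items, 3), 4))
```

In this toy case two of the three relevant items land in the top 3, so recall is 2/3, while NDCG also rewards placing them near the top of the list.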

For more details, please take a look at our examples.
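The overall flow of the walkthrough (split the data, fit each model, score it with each metric) can also be sketched without Cornac. The code below is a conceptual illustration only: the toy ratings, the two baseline models, and the `ratio_split` and `run_experiment` helpers are all hypothetical stand-ins, not Cornac's `RatioSplit` or `Experiment`.

```python
import math
import random

# Hypothetical toy data as (user, item, rating) triples; not taken from MovieLens.
ratings = [
    ("u1", "i1", 5.0), ("u1", "i2", 3.0), ("u1", "i3", 4.0),
    ("u2", "i1", 4.0), ("u2", "i2", 2.0), ("u2", "i4", 5.0),
    ("u3", "i2", 3.0), ("u3", "i3", 4.0), ("u3", "i4", 4.0),
    ("u4", "i1", 5.0),
]


def ratio_split(data, test_size=0.2, seed=123):
    """Shuffle a copy of the data and hold out a fraction for testing."""
    shuffled = list(data)
    random.Random(seed).shuffle(shuffled)
    n_test = int(round(len(shuffled) * test_size))
    return shuffled[n_test:], shuffled[:n_test]


class GlobalMean:
    """Baseline that predicts the mean training rating for every pair."""
    name = "GlobalMean"

    def fit(self, train):
        self.mean = sum(r for _, _, r in train) / len(train)
        return self

    def score(self, user, item):
        return self.mean


class ItemMean(GlobalMean):
    """Baseline that predicts each item's mean rating, else the global mean."""
    name = "ItemMean"

    def fit(self, train):
        super().fit(train)
        totals = {}
        for _, item, r in train:
            total, count = totals.get(item, (0.0, 0))
            totals[item] = (total + r, count + 1)
        self.item_means = {i: t / c for i, (t, c) in totals.items()}
        return self

    def score(self, user, item):
        return self.item_means.get(item, self.mean)


def mae(errors):
    return sum(abs(e) for e in errors) / len(errors)


def rmse(errors):
    return math.sqrt(sum(e * e for e in errors) / len(errors))


def run_experiment(models, metrics, train, test):
    """Fit every model on train, then compute every metric on test."""
    results = {}
    for model in models:
        model.fit(train)
        errors = [r - model.score(u, i) for u, i, r in test]
        results[model.name] = {name: fn(errors) for name, fn in metrics.items()}
    return results


train_set, test_set = ratio_split(ratings, test_size=0.2, seed=123)
results = run_experiment([GlobalMean(), ItemMean()],
                         {"MAE": mae, "RMSE": rmse}, train_set, test_set)
for model_name, scores in results.items():
    print(model_name, {k: round(v, 4) for k, v in scores.items()})
```

Fixing the seed makes the split reproducible, so every model is compared on the same held-out ratings.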

The recommender models supported by Cornac are listed here. Why don't you join us to lengthen the list?

Your contributions at any level of the library are welcome. If you intend to contribute, please:

- Fork the Cornac repository to your own account.

- Make changes and create pull requests.

You can also post bug reports and feature requests in GitHub issues.