Search and Sample Return Project

This project is modeled after the NASA sample return challenge.

The Simulator

The first step is to download the simulator build that's appropriate for your operating system. Here are the links for Linux, Mac, or Windows.

Dependencies

Python 3 + OpenCV, and Jupyter Notebooks

Computer Vision Analysis

Identifying Sand, Obstacles and Rocks

To identify obstacles in the field of view, we examined several colour spaces and segmented each image by the colour range we were looking for. For example, the rock walls are generally a blackish-brown colour.

These steps are performed in the Different Colour Spaces Thresholds, Sand Threshold, Rock Threshold and Ball Threshold sections of the Jupyter Notebook.

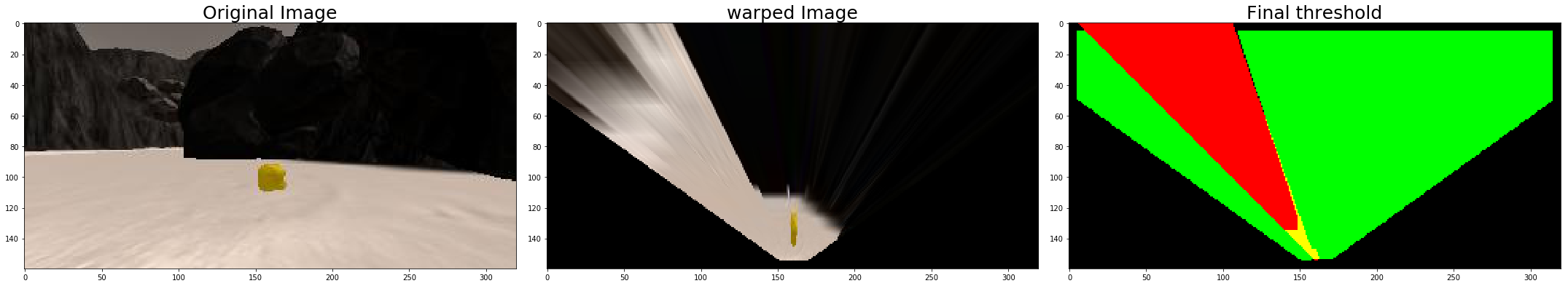
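The thresholding idea can be sketched as follows. This is a minimal illustration rather than the notebook's actual code: the function name `color_thresh` and the RGB bounds in `SAND_RANGE` and `WALL_RANGE` are assumptions chosen only to show the technique, and real bounds would need tuning against simulator images.

```python
import numpy as np

def color_thresh(img, lower, upper):
    """Return a binary mask that is 1 where every channel lies in [lower, upper]."""
    lower = np.asarray(lower)
    upper = np.asarray(upper)
    # A pixel passes only if all three channels fall inside the range
    in_range = np.all((img >= lower) & (img <= upper), axis=2)
    return in_range.astype(np.uint8)

# Hypothetical RGB bounds, for illustration only
SAND_RANGE = ((160, 160, 160), (255, 255, 255))  # bright ground
WALL_RANGE = ((0, 0, 0), (100, 90, 80))          # dark, blackish-brown walls

# A tiny 1x2 image: one bright pixel and one dark brown pixel
demo = np.array([[[200, 190, 180], [60, 40, 30]]], dtype=np.uint8)
sand_mask = color_thresh(demo, *SAND_RANGE)
wall_mask = color_thresh(demo, *WALL_RANGE)
```

Each mask has the same height and width as the input image, so the sand, wall and rock-sample masks can be processed independently afterwards.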

Processing of Images

The first step in processing an incoming image is to apply a perspective transform to get a bird's-eye view of the environment. Next, we apply our three threshold functions to identify the sand, walls and rock samples that are in view.

After finding each of these, we convert the pixel locations to positions in the local rover coordinate system, and then to our global world coordinate system.

Finally, we update our map, marking it with our findings.
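The conversion from rover coordinates to world coordinates and the map update can be sketched like this. The function name `rover_to_world`, the 200x200 map size, the scale of 10 pixels per world cell, and the use of channel 2 for sand are all assumptions made for illustration; the project's own values may differ.

```python
import numpy as np

def rover_to_world(x_pix, y_pix, x_pos, y_pos, yaw_deg, world_size, scale):
    """Rotate rover-centric points by the yaw, then scale and translate them
    into integer world-map indices."""
    yaw = np.deg2rad(yaw_deg)
    # 2D rotation by the rover's yaw angle
    x_rot = x_pix * np.cos(yaw) - y_pix * np.sin(yaw)
    y_rot = x_pix * np.sin(yaw) + y_pix * np.cos(yaw)
    # Scale down to map resolution and shift by the rover's world position
    x_world = np.clip((x_rot / scale + x_pos).astype(int), 0, world_size - 1)
    y_world = np.clip((y_rot / scale + y_pos).astype(int), 0, world_size - 1)
    return x_world, y_world

# Two points straight ahead of a rover that is facing +y (yaw of 90 degrees)
x_pix = np.array([10.0, 20.0])
y_pix = np.array([0.0, 0.0])
x_w, y_w = rover_to_world(x_pix, y_pix, x_pos=100.0, y_pos=50.0,
                          yaw_deg=90.0, world_size=200, scale=10.0)

# Hypothetical map layout: one channel per class, channel 2 = sand
worldmap = np.zeros((200, 200, 3), dtype=float)
worldmap[y_w, x_w, 2] += 1  # mark the observed sand cells
```

Clipping keeps every index inside the map, so a noisy observation near the border cannot raise an indexing error.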

Here is a video of our pipeline on the sample video

Autonomous Navigation and Mapping

In perception_step(), we find the sand, wall and rock-sample pixels and translate them to our world coordinate system for use in the decision_step() of our pipeline.

We also try to correct for the roll of the rover, and we note when either pitch or roll takes an extreme value.

In decision_step(), we build a DFA (deterministic finite automaton) that transitions from state to state based primarily on the size of the sand area and the rock-wall area in front of the rover.

The possible states are:
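One way to flag extreme pitch or roll is sketched below. It covers only the flagging, not the roll correction. It assumes the angles are reported in degrees in the range 0 to 360, so that a value such as 359.5 is only half a degree from level; the helper names and the 1-degree limit are hypothetical.

```python
def angle_from_level(angle_deg):
    """Smallest absolute deviation from 0 degrees for an angle in [0, 360)."""
    a = angle_deg % 360.0
    return min(a, 360.0 - a)

def is_level(roll_deg, pitch_deg, max_tilt_deg=1.0):
    """True when both roll and pitch are within max_tilt_deg of level."""
    return (angle_from_level(roll_deg) <= max_tilt_deg
            and angle_from_level(pitch_deg) <= max_tilt_deg)

# Frames taken while the rover is tilted could be skipped when updating the
# map, since the perspective transform assumes a level camera
print(is_level(359.5, 0.3))  # small tilt on both axes
print(is_level(4.0, 0.0))    # roll is too large
```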

| Possible State |
|---|
| Forward |
| Stuck |
| Rock Ahead |
| Move To Ball |
| Can Pick Up |
| Picking Up |
| Turn Around |
| Open Area |
| Corridor |
| Left Wall |
| Right Wall |
| Front Wall |
| Left Corner |
| Right Corner |
| Full Corner |
| Return Home |
| Completed Challenge |
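A state machine of this kind can be sketched as a pure transition function. The example below models only three of the states listed above, and the pixel thresholds `MIN_SAND` and `MAX_WALL` as well as the transition rules themselves are assumptions for illustration, not the project's actual logic.

```python
from enum import Enum, auto

class State(Enum):
    FORWARD = auto()
    TURN_AROUND = auto()
    MOVE_TO_BALL = auto()

# Hypothetical thresholds on pixel counts in front of the rover
MIN_SAND = 500
MAX_WALL = 2000

def next_state(state, sand_px, wall_px, sample_in_view=False):
    """Return the next state given the current state and what the rover sees."""
    if sample_in_view:
        return State.MOVE_TO_BALL
    path_clear = sand_px >= MIN_SAND and wall_px <= MAX_WALL
    if state is State.FORWARD:
        return State.FORWARD if path_clear else State.TURN_AROUND
    if state is State.TURN_AROUND:
        return State.FORWARD if path_clear else State.TURN_AROUND
    # The sample is no longer visible, so resume normal driving
    return State.FORWARD

state = State.FORWARD
state = next_state(state, sand_px=100, wall_px=100)   # too little sand ahead
state = next_state(state, sand_px=3000, wall_px=100)  # path is clear again
```

Keeping the transition logic in a function with no side effects makes each state change easy to test in isolation from the simulator.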

Autonomous Mode

To launch in autonomous mode, open a terminal and run the drive_rover.py file from the command line like this:

    python drive_rover.py

Then launch the simulator and choose "Autonomous Mode". The rover should now drive itself!

Results

In autonomous mode, the rover navigates the environment and maps at least 95% of it with a fidelity of about 65%. It finds all of the rock samples, picks them up, and returns home.

The simulator was run at 1024x768 on Good quality at about 26-28 fps. **Note:** running the simulator with different choices of resolution and graphics quality may produce different results!

Issues and Improvements

The pipeline can fail when the rover gets badly stuck in a wall or rock. It can sometimes get unstuck if given enough time. This is due to the edge detection/collision handling of the simulator.

The pipeline could be improved by adding path planning and steering toward unexplored areas.
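As a rough starting point for steering toward unexplored areas, the sketch below picks the heading to the nearest unexplored map cell. It is a straight-line heuristic that ignores obstacles, not a path planner, and the function name and the boolean grid representation are assumptions for illustration.

```python
import math

def heading_to_nearest_unexplored(explored, rover_xy):
    """Return the heading in degrees, in [0, 360), from the rover to the
    nearest unexplored cell, or None if every cell has been explored.

    explored is a 2D list of booleans indexed as explored[y][x].
    """
    rx, ry = rover_xy
    best = None
    for y, row in enumerate(explored):
        for x, seen in enumerate(row):
            if not seen:
                dist = math.hypot(x - rx, y - ry)
                if best is None or dist < best[0]:
                    best = (dist, x, y)
    if best is None:
        return None
    _, x, y = best
    return math.degrees(math.atan2(y - ry, x - rx)) % 360.0

# The only unexplored cell is directly along +x from the rover
grid = [
    [True, True, True],
    [True, True, False],
    [True, True, True],
]
heading = heading_to_nearest_unexplored(grid, rover_xy=(1, 1))
```

A full solution would search over navigable cells only, for example with breadth-first search or A*, so that the chosen target is actually reachable.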