Libify makes it easy to import notebooks in Databricks. Notebook imports can also be nested to build complex workflows. Libify supports Databricks Runtime version 5.5 and above.

Installation

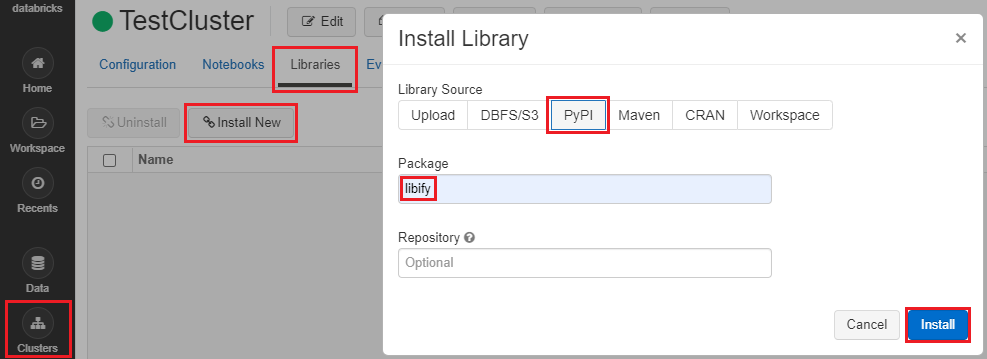

- Click the Clusters icon in the sidebar

- Click a cluster name (make sure the cluster is running)

- Click the Libraries tab

- Click Install New

- Under Library Source, choose PyPI

- Under Package, enter `libify`

- Click Install
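Once the library shows as installed, a quick check from a notebook cell can confirm that the package is visible to Python. The following is a minimal sketch using only the standard library; the helper name `is_installed` is hypothetical and is not part of libify.

```python
import importlib.util


def is_installed(package_name):
    """Return True if the named top-level package can be found by Python."""
    return importlib.util.find_spec(package_name) is not None


# Report whether libify is importable in the current environment.
if is_installed("libify"):
    print("libify is available")
else:
    print("libify not found; check the cluster's Libraries tab")
```

If the check reports that the package is missing, revisiting the installation steps above on the running cluster is a reasonable first step.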

Typical Usage

After installing the package, add the following code snippets to your notebooks:

- In the importee notebook (the notebook to be imported), add the following cell at the end of the notebook. Make sure that `dbutils.notebook.exit` is not used anywhere in the notebook and that the last cell contains exactly the following snippet and nothing else:

      import libify
      libify.exporter(globals())

- In the importer notebook (the notebook that imports other notebooks), first import `libify`:

      import libify

  and then use the following code to import the notebook(s) of your choice:

      mod1 = libify.importer(globals(), '/path/to/importee1')
      mod2 = libify.importer(globals(), '/path/to/importee2')

Everything defined in `importee1` and `importee2` is now contained in the namespaces `mod1` and `mod2` respectively, and can be accessed using dot notation, e.g. `x = mod1.function_defined_in_importee1()`.
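To illustrate what "contained in a namespace" means here, the sketch below wraps a dictionary of names in a module-like object so that its entries can be reached with dot notation. This is only an analogy that runs outside Databricks: it does not show how libify is implemented, and `namespace_from_globals` and `fake_importee_globals` are hypothetical names introduced for illustration.

```python
import types


def namespace_from_globals(exported, name="importee"):
    """Wrap a dict of names (similar to a notebook's globals()) in a module-like object."""
    mod = types.ModuleType(name)
    for key, value in exported.items():
        # Skip dunder entries such as __name__ or __builtins__.
        if not key.startswith("__"):
            setattr(mod, key, value)
    return mod


# Stand-in for something an importee notebook might define.
def function_defined_in_importee1():
    return 42


# Stand-in for the importee's globals(); a real notebook would hold many more names.
fake_importee_globals = {
    "function_defined_in_importee1": function_defined_in_importee1,
    "SOME_CONSTANT": "hello",
}

mod1 = namespace_from_globals(fake_importee_globals, "importee1")
x = mod1.function_defined_in_importee1()
```

Keeping each imported notebook behind its own name (`mod1`, `mod2`) avoids collisions when two notebooks define a function or variable with the same name.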

Databricks Community Cloud (https://community.cloud.databricks.com) does not allow calling one notebook from another notebook, but notebooks can still be imported using the following workaround. Note that both of the following steps must be repeated each time a cluster is created or restarted.

-

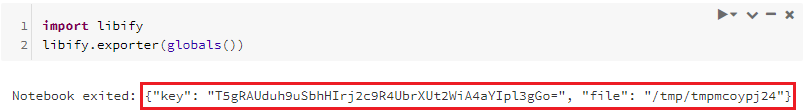

Run step 1 from above (Typical Usage). Make a note of the output of the last cell (only the part marked below):

- In the importer notebook, call `libify.importer` with the `config` parameter set to the dictionary obtained from the previous step:

      import libify
      mod1 = libify.importer(globals(), config={"key": "T5gRAUduh9uSbhHIrj2c9R4UbrXUt2WiA4aYIpl3gGo=", "file": "/tmp/tmpmcoypj24"})
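Because the dictionary has to be copied by hand after every cluster restart, it may help to validate the copied text before passing it on. The sketch below assumes the noted output is JSON-style text with the `key` and `file` entries shown in the example above; the helper `parse_noted_config` is hypothetical and not part of libify.

```python
import json

# Entries that appear in the example config dictionary above.
REQUIRED_KEYS = {"key", "file"}


def parse_noted_config(noted_output):
    """Turn text copied from the importee's last cell into a config dict.

    Assumes the copied text is JSON-style, as in the example above.
    """
    config = json.loads(noted_output)
    if not isinstance(config, dict):
        raise ValueError("expected a dictionary, got {}".format(type(config).__name__))
    missing = REQUIRED_KEYS - set(config)
    if missing:
        raise ValueError("config is missing keys: {}".format(sorted(missing)))
    return config


noted = '{"key": "T5gRAUduh9uSbhHIrj2c9R4UbrXUt2WiA4aYIpl3gGo=", "file": "/tmp/tmpmcoypj24"}'
config = parse_noted_config(noted)

# In the importer notebook on Databricks, the result would then be passed as:
#     mod1 = libify.importer(globals(), config=config)
```

A check like this catches a partially copied dictionary early, before the import itself is attempted.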