Open Dream AI is a deep learning-based image generation platform that uses Stable Diffusion to generate high-quality images from text prompts. This repository contains the source code for the Open Dream AI platform.

To install the Open Dream AI platform, follow these steps:

- Clone this repository to your local machine.

- Install the required dependencies by running

pip install -r requirements.txt

- Configure the platform by editing the app/core/config.py file. The configuration file contains settings for the device to use (CPU or GPU), the project name, the server name, and the output folder to use for storing generated images.

- Start the API server by running

uvicorn app.main:app --reload

This will start the API server on port 8000.

To run the platform on CPU with Docker, follow these steps:

- Clone this repository to your local machine.

- (Optional) Configure the platform by creating a .env file from .env.sample. The configuration file contains settings for the device to use (DEVICE should be set to cpu, MIXED_PRECISION should be set to an empty value).

- Build and start the service using

docker compose -f docker-compose-cpu.yml up

Requirements

- NVIDIA GPU with the NVIDIA driver installed.

- Docker installed on your system.

For instructions on getting started with the NVIDIA Container Toolkit, refer to the installation guide.

Docker-compose:

- Clone this repository to your local machine.

- Configure the platform by creating a .env file from .env.sample. The configuration file contains settings for the device to use (DEVICE should be set to cuda).

- Build and start the service using

docker compose -f docker-compose-cuda.yml up

OR use native Docker commands:

- Clone this repository to your local machine.

- Configure the platform by creating a .env file from .env.sample. The configuration file contains settings for the device to use (CPU or GPU), the project name, the server name, and the output folder to use for storing generated images (DEVICE should be set to cuda).

- Build the CUDA Docker image via

docker build -t open-dream-ai-cuda -f ./Dockerfile.cuda .

- Start the Docker container by running

docker run --gpus all -p 8000:80 open-dream-ai-cuda

This will start the API server on port 8000.

Swagger can be found at http://127.0.0.1:8000/docs/open-dream-ai

REST API documentation can be found at http://127.0.0.1:8000/redoc/open-dream-ai
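A quick way to confirm that the server is up is to request one of the documentation pages listed above. The following is a minimal sketch using only the Python standard library. The helper names `docs_urls` and `is_reachable` are hypothetical, and the base URL assumes the server is running locally on port 8000 as described in the setup steps.

```python
import urllib.error
import urllib.request

BASE_URL = "http://127.0.0.1:8000"  # assumption: server runs locally on port 8000


def docs_urls(base_url: str = BASE_URL) -> dict:
    """Return the two documentation URLs listed in this README."""
    base = base_url.rstrip("/")
    return {
        "swagger": f"{base}/docs/open-dream-ai",
        "redoc": f"{base}/redoc/open-dream-ai",
    }


def is_reachable(url: str, timeout: float = 5.0) -> bool:
    """Return True if a GET request to `url` answers with HTTP 200."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status == 200
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, or an HTTP error status.
        return False


# Usage (requires a running server):
# for name, url in docs_urls().items():
#     print(name, url, "up" if is_reachable(url) else "not reachable")
```

If both pages are reported as not reachable, check that the port mapping (for example `-p 8000:80` in the Docker command) matches the base URL you are using.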

To generate images from text prompts using the Open Dream AI platform, follow these steps:

- Send a POST request to the v1/open-dream-ai/txt2img/ endpoint with a JSON payload containing the text prompt and other configuration options.

- The API will generate one or more images from the text prompt using the Stable Diffusion pipeline.

- The API will return a JSON response containing the filenames of the generated images.

For example, to generate a single image from the prompt "Beautiful floor paving", you could use the following curl command:

curl -X 'POST' \

'http://127.0.0.1:8000/v1/open-dream-ai/txt2img/' \

-H 'accept: application/json' \

-H 'Content-Type: application/json' \

-d '{

"prompt": [

"Beautiful floor paving"

]

}'

This will generate an image and return a response like the following:

{

"images": [

"01FEH7W9N9MH84QJN65F2C8Y26.png"

],

"info": "Your request was placed in background. It will be available shortly."

}

The generation takes some time and runs in the background. You can check progress and retrieve the generated images by passing this filename to the Get Image endpoint.
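The same txt2img request can be sent from Python. This is a minimal sketch that mirrors the curl example using only the standard library; the function names `build_txt2img_request` and `submit` are hypothetical, and only the `prompt` field shown in the curl example is sent.

```python
import json
import urllib.request

BASE_URL = "http://127.0.0.1:8000"  # assumption: server runs locally on port 8000


def build_txt2img_request(prompt: str, base_url: str = BASE_URL) -> urllib.request.Request:
    """Build the same POST request as the curl example (prompt sent as a list)."""
    payload = {"prompt": [prompt]}
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/v1/open-dream-ai/txt2img/",
        data=json.dumps(payload).encode("utf-8"),
        headers={"accept": "application/json", "Content-Type": "application/json"},
        method="POST",
    )


def submit(request: urllib.request.Request, timeout: float = 30.0) -> dict:
    """Send the request and decode the JSON body of the response."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


# Usage (requires a running server):
# result = submit(build_txt2img_request("Beautiful floor paving"))
# print(result["images"])  # filenames to pass to the Get Image endpoint
```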

To generate images from an existing image using the Open Dream AI platform, follow these steps:

- Send a POST request to the v1/open-dream-ai/img2img/ endpoint with a multipart/form-data payload containing the image file, the text prompt, and other configuration options.

- The API will generate one or more images from the input image using the Stable Diffusion pipeline.

- The API will return a JSON response containing the filenames of the generated images.

For example, to generate a single image using an existing image and the prompt "Dog sitting on a bench in winter", you could use the following curl command:

curl -X 'POST' \

'http://127.0.0.1:8000/v1/open-dream-ai/img2img/' \

-H 'accept: application/json' \

-H 'Content-Type: multipart/form-data' \

-F 'image=@doc_images/dog_on_a_bench.png;type=image/png' \

-F 'prompt=Dog sitting on a bench in winter' \

-F 'number_of_images=1' \

-F 'steps=100' \

-F 'guidance_scale=7.5' \

-F 'strength=0.7'

This will generate an image and return a response like the following:

{

"images": [

"01FEH7W9N9MH84QJN65F2C8Y26.png"

],

"info": "Your request was placed in background. It will be available shortly."

}

The generation takes some time and runs in the background. You can check progress and retrieve the generated images by passing this filename to the Get Image endpoint.
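The image-based endpoints take multipart/form-data rather than JSON, as the curl example shows. The sketch below builds such a request with the Python standard library only. The helpers `encode_multipart` and `build_img2img_request` are hypothetical names, and the field values are copied from the curl example rather than from any documented defaults.

```python
import mimetypes
import urllib.request
import uuid
from pathlib import Path

BASE_URL = "http://127.0.0.1:8000"  # assumption: server runs locally on port 8000


def encode_multipart(fields: dict, files: dict) -> tuple:
    """Encode text fields and files as a multipart/form-data body.

    `files` maps a form field name to (filename, raw bytes).
    Returns (body bytes, Content-Type header value).
    """
    boundary = uuid.uuid4().hex
    parts = []
    for name, value in fields.items():
        parts.append(
            f'--{boundary}\r\nContent-Disposition: form-data; name="{name}"\r\n\r\n{value}\r\n'.encode("utf-8")
        )
    for name, (filename, content) in files.items():
        mime = mimetypes.guess_type(filename)[0] or "application/octet-stream"
        header = (
            f"--{boundary}\r\n"
            f'Content-Disposition: form-data; name="{name}"; filename="{filename}"\r\n'
            f"Content-Type: {mime}\r\n\r\n"
        )
        parts.append(header.encode("utf-8") + content + b"\r\n")
    parts.append(f"--{boundary}--\r\n".encode("utf-8"))
    return b"".join(parts), f"multipart/form-data; boundary={boundary}"


def build_img2img_request(image_path: str, prompt: str, base_url: str = BASE_URL) -> urllib.request.Request:
    """Build the img2img POST request with the form fields used in the curl example."""
    image = Path(image_path)
    fields = {
        "prompt": prompt,
        "number_of_images": 1,
        "steps": 100,
        "guidance_scale": 7.5,
        "strength": 0.7,
    }
    body, content_type = encode_multipart(fields, {"image": (image.name, image.read_bytes())})
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/v1/open-dream-ai/img2img/",
        data=body,
        headers={"accept": "application/json", "Content-Type": content_type},
        method="POST",
    )


# Usage (requires a running server and the sample image):
# req = build_img2img_request("doc_images/dog_on_a_bench.png", "Dog sitting on a bench in winter")
# with urllib.request.urlopen(req) as response:
#     print(response.read().decode("utf-8"))
```

The same encoder should work for the other multipart endpoints by adding further entries to `files`, for example a `mask_image` entry for inpainting.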

To inpaint an image using the Open Dream AI platform, follow these steps:

- Send a POST request to the v1/open-dream-ai/inpaint/ endpoint with a multipart/form-data payload containing the image file, the mask image file, the text prompt, and other configuration options.

- The API will generate one or more images from the input image using the Stable Diffusion pipeline.

- The API will return a JSON response containing the filenames of the generated images.

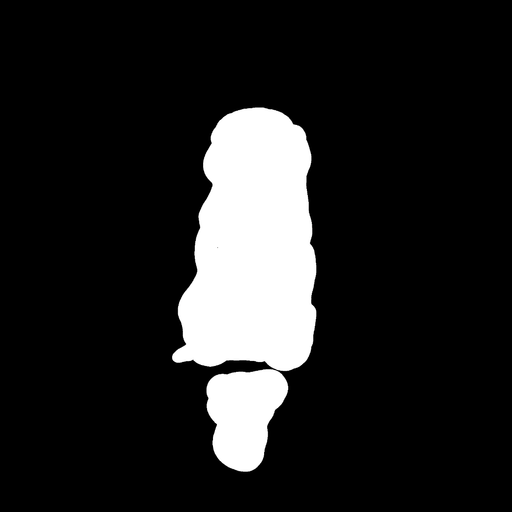

For example, to remove a dog from a bench in a photo, send the photo of the dog, a mask that specifies which area of the image should be changed, and the prompt "An empty bench". You could use the following curl command:

curl -X 'POST' \

'http://127.0.0.1:8000/v1/open-dream-ai/inpaint/' \

-H 'accept: application/json' \

-H 'Content-Type: multipart/form-data' \

-F 'image=@doc_images/dog_on_a_bench.png;type=image/png' \

-F 'mask_image=@doc_images/inpaint_mask.png;type=image/png' \

-F 'prompt=An empty bench' \

-F 'number_of_images=1' \

-F 'steps=100' \

-F 'guidance_scale=7.5'

This will generate an image and return a response like the following:

{

"images": [

"01FEH7W9N9MH84QJN65F2C8Y26.png"

],

"info": "Your request was placed in background. It will be available shortly."

}

The generation takes some time and runs in the background. You can check progress and retrieve the generated images by passing this filename to the Get Image endpoint.
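If you do not have a mask image at hand, a simple rectangular one can be generated in code. The sketch below writes an 8-bit grayscale PNG using only the Python standard library. It assumes the common Stable Diffusion inpainting convention that white pixels mark the region to repaint and black pixels are kept; this README does not state the mask polarity, so verify it against your results. The function name `make_mask_png` and the example sizes are hypothetical.

```python
import struct
import zlib


def _chunk(kind: bytes, data: bytes) -> bytes:
    """Build one PNG chunk: length, type, data, CRC over type + data."""
    crc = zlib.crc32(kind + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + kind + data + struct.pack(">I", crc)


def make_mask_png(width: int, height: int, box: tuple) -> bytes:
    """Return an 8-bit grayscale PNG that is black except for a white box.

    `box` is (left, top, right, bottom) in pixels; right and bottom are exclusive.
    Assumption: white marks the area the inpaint endpoint should change.
    """
    left, top, right, bottom = box
    rows = bytearray()
    for y in range(height):
        rows.append(0)  # filter type 0 (None) at the start of each scanline
        for x in range(width):
            inside = left <= x < right and top <= y < bottom
            rows.append(255 if inside else 0)
    # IHDR: width, height, bit depth 8, color type 0 (grayscale),
    # compression 0, filter 0, interlace 0.
    ihdr = struct.pack(">IIBBBBB", width, height, 8, 0, 0, 0, 0)
    return (
        b"\x89PNG\r\n\x1a\n"
        + _chunk(b"IHDR", ihdr)
        + _chunk(b"IDAT", zlib.compress(bytes(rows)))
        + _chunk(b"IEND", b"")
    )


# Usage: write a 512x512 mask with a white rectangle in the middle.
# with open("inpaint_mask.png", "wb") as f:
#     f.write(make_mask_png(512, 512, (128, 192, 384, 448)))
```

The mask would normally have the same dimensions as the input image, which is also an assumption here.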

To generate an image from an existing image using its depth information with the Open Dream AI platform, follow these steps:

- Send a POST request to the v1/open-dream-ai/depth2img/ endpoint with a multipart/form-data payload containing the image file, the text prompt, and other configuration options.

- The API will generate one or more images from the input image using the Stable Diffusion pipeline.

- The API will return a JSON response containing the filenames of the generated images.

For example, to generate a single image using an existing image and the prompt "Old man", you could use the following curl command:

curl -X 'POST' \

'http://127.0.0.1:8000/v1/open-dream-ai/depth2img/' \

-H 'accept: application/json' \

-H 'Content-Type: multipart/form-data' \

-F 'image=@doc_images/barack_obama.jpg;type=image/jpeg' \

-F 'prompt=Old man' \

-F 'number_of_images=1' \

-F 'steps=100' \

-F 'guidance_scale=7.5' \

-F 'strength=0.7'

This will generate an image and return a response like the following:

{

"images": [

"01FEH7W9N9MH84QJN65F2C8Y26.png"

],

"info": "Your request was placed in background. It will be available shortly."

}

The generation takes some time and runs in the background. You can check progress and retrieve the generated images by passing this filename to the Get Image endpoint.

To get a generated image by filename, send a GET request to the v1/open-dream-ai/image/ endpoint with the filename parameter set to an image filename returned by one of the generation endpoints.

For example:

curl -X 'GET' \

'http://127.0.0.1:8000/v1/open-dream-ai/image?filename=YOUR-FILENAME.png' \

-H 'accept: application/json'
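Because generation runs in the background, a client usually repeats this request until the image is ready. The following is a minimal polling sketch in Python. It assumes that the endpoint answers with a JSON progress object while generation is running and with the raw image bytes once it has finished, and it tells the two apart by the response Content-Type, which is an assumption. The names `parse_progress`, `http_fetch`, and `wait_for_image` are hypothetical.

```python
import json
import time
import urllib.parse
import urllib.request
from typing import Callable, Optional

BASE_URL = "http://127.0.0.1:8000"  # assumption: server runs locally on port 8000


def parse_progress(value: str) -> int:
    """Convert a progress string such as "44%" into the integer 44."""
    return int(value.strip().rstrip("%"))


def http_fetch(filename: str, base_url: str = BASE_URL):
    """GET the image endpoint; return a dict (progress) or bytes (image)."""
    query = urllib.parse.urlencode({"filename": filename})
    url = f"{base_url.rstrip('/')}/v1/open-dream-ai/image?{query}"
    with urllib.request.urlopen(url, timeout=30) as response:
        body = response.read()
        # Assumption: progress arrives as JSON, the finished image as raw bytes.
        if response.headers.get_content_type() == "application/json":
            return json.loads(body.decode("utf-8"))
        return body


def wait_for_image(
    fetch: Callable,
    filename: str,
    interval: float = 2.0,
    max_attempts: int = 150,
    sleep: Callable = time.sleep,
) -> Optional[bytes]:
    """Poll `fetch(filename)` until it returns image bytes; None if attempts run out."""
    for _ in range(max_attempts):
        result = fetch(filename)
        if isinstance(result, (bytes, bytearray)):
            return bytes(result)
        print(f"{filename}: {parse_progress(result['progress'])}% done")
        sleep(interval)
    return None


# Usage (requires a running server):
# data = wait_for_image(http_fetch, "YOUR-FILENAME.png")
# if data is not None:
#     with open("result.png", "wb") as f:
#         f.write(data)
```

Passing `fetch` and `sleep` as arguments keeps the loop easy to test without a server.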

If the generation process is not yet finished, you will receive a response that looks like this:

{

"filename": "01FEH7W9N9MH84QJN65F2C8Y26.png",

"progress": "44%"

}

If the generation is finished and the image is ready, the image itself is returned as the response instead.

To fine-tune a model using LoRA, send a POST request to the v1/open-dream-ai/lora endpoint with JSON data that contains the fine-tuning arguments.

For example:

curl --location 'http://127.0.0.1:8000/v1/open-dream-ai/lora/' \

--header 'Content-Type: application/json' \

--data '{

"pretrained_model_name_or_path": "CompVis/stable-diffusion-v1-4",

"dataset_name": "lambdalabs/pokemon-blip-captions",

"resolution": 512,

"random_flip": true,

"train_batch_size": 1,

"num_train_epochs": 100,

"checkpointing_steps": 1000,

"learning_rate": 0.0001,

"lr_scheduler": "constant",

"lr_warmup_steps": 0,

"seed": 42

}'

The response is the process name, like the following:

"2F01GX6WTWSVZ9SZX2CXAQFE7DCA"

You can use this process name to monitor fine-tuning progress using the Get Progress endpoint. Once the process is finished, you can use the generated LoRA weights by passing the same process name as the "lora_weights" parameter on the image generation endpoints.
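To use fine-tuned LoRA weights, the process name returned by the fine-tuning endpoint is passed as the "lora_weights" parameter of an image generation request. The sketch below builds such a payload for the txt2img endpoint. The helper name `txt2img_payload` and the sample prompt are hypothetical, and the exact placement of `lora_weights` alongside `prompt` in the JSON body is an assumption based on the description in this README.

```python
import json


def txt2img_payload(prompt: str, lora_weights: str = "") -> dict:
    """Build a txt2img JSON payload, optionally selecting fine-tuned LoRA weights.

    `lora_weights` is the process name returned by the LoRA fine-tuning endpoint.
    Assumption: it is sent as a top-level field next to `prompt`.
    """
    payload = {"prompt": [prompt]}
    if lora_weights:
        payload["lora_weights"] = lora_weights
    return payload


# Usage: the resulting JSON string can be sent as the body of the txt2img request.
# body = json.dumps(txt2img_payload("A cute dragon creature", "YOUR-PROCESS-NAME"))
# print(body)
```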

To get the progress of a LoRA fine-tuning process, send a GET request to the v1/open-dream-ai/lora/progress endpoint with the process_name parameter set to the process name returned by the LoRA fine-tuning endpoint.

For example:

curl -X 'GET' \

'http://127.0.0.1:8000/v1/open-dream-ai/lora/progress?process_name=YOUR-PROCESS-NAME' \

-H 'accept: application/json'

If the fine-tuning process is not yet finished, you will receive a response that looks like this:

{

"process_name": "01FEH7W9N9MH84QJN65F2C8Y26",

"progress": "34%"

}

To get a list of all generated images, send a GET request to the v1/open-dream-ai/image/list endpoint.

For example:

curl -X 'GET' \

'http://127.0.0.1:8000/v1/open-dream-ai/image/list' \

-H 'accept: application/json'

Response:

[

"01GYFAY3JWS2446491KG2DKZC8.png",

"01GYFAZFN2QTGV5XJK752Z3Q3A.png",

"01GYFC1APG5JQ50H0HSX9FZPEG.png",

"01GYFC1APGEQ7ECKDG2HGBCJN8.png",

"01GYFVDMV34E55DZEXQFPDDVQ7.png",

"01GYFXA7XQQ0W2A037HZ9PZJHS.png",

"01GYFXFB8GCB5FZHA0H1KABPV9.png"

]

To get a list of all fine-tuned LoRA weights, send a GET request to the v1/open-dream-ai/lora/lora_models endpoint.

For example:

curl -X 'GET' \

'http://127.0.0.1:8000/v1/open-dream-ai/lora/lora_models' \

-H 'accept: application/json'

Response:

[

"01GYFAY3JWS2446491KG2DKZC8",

"01GYFAZFN2QTGV5XJK752Z3Q3A",

"01GYFC1APG5JQ50H0HSX9FZPEG"

]

We welcome contributions to the Open Dream AI platform! To contribute, follow these steps:

- Fork this repository to your own account.

- Create a new feature branch for your changes.

- Make your changes and commit them to your feature branch.

- Push your feature branch to your fork of the repository.

- Submit a pull request from your feature branch to the main branch of this repository.

Please make sure that your changes follow the PEP 8 style guide.

The Open Dream AI platform is released under the MIT License. See the LICENSE file for more details.