
Currently, the setup can only be done on a single machine.

You will need a Google Cloud Platform (GCP) account to set up BigQuery, along with the credentials it needs to work.

You will need Python and a dependency manager such as pip to install the required Python libraries. I use Poetry, which can be installed with:

$ curl -sSL https://raw.githubusercontent.com/python-poetry/poetry/master/get-poetry.py | python -

Clone the repo:

$ git clone https://github.com/koksang/test.git

To install the dependencies and activate the virtual environment with Poetry:

$ poetry install
$ poetry shell

Set up the environment

Store the configuration in a .env file, replacing each <...> placeholder with your own value, then export the variables into the current shell:

$ touch .env
$ echo "AIRFLOW_HOME=<your AIRFLOW_HOME path>" >> .env
$ echo "DBT_PROJECT_DIR=<your DBT_PROJECT_DIR>" >> .env
$ echo "DBT_PROFILES_DIR=<your DBT_PROFILES_DIR>" >> .env
$ echo "PROJECT_ID=<your GCP PROJECT_ID>" >> .env
$ echo "BUCKET=<your GCS BUCKET>" >> .env
$ set -a && source .env
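Before starting Airflow, it can help to confirm that .env actually defines all five variables. The sketch below is a hypothetical Python helper (parse_env_text, parse_env_file and missing_keys are illustrative names, not part of the repo); it only understands simple KEY=VALUE lines with optional quotes and is not a full dotenv parser.

```python
"""Hypothetical helper: check that .env defines every variable the setup step asks for."""
from __future__ import annotations

from pathlib import Path

# Variable names come from the setup step; the helper itself is an assumption.
REQUIRED_KEYS = ("AIRFLOW_HOME", "DBT_PROJECT_DIR", "DBT_PROFILES_DIR", "PROJECT_ID", "BUCKET")


def parse_env_text(text: str) -> dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    values: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop surrounding whitespace and one layer of quotes, as a shell would.
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def parse_env_file(path: Path) -> dict[str, str]:
    """Read a .env file from disk and parse it."""
    return parse_env_text(path.read_text())


def missing_keys(values: dict[str, str]) -> list[str]:
    """Return the required keys that are absent or have an empty value."""
    return [key for key in REQUIRED_KEYS if not values.get(key)]
```

For example, calling missing_keys(parse_env_file(Path(".env"))) from the repo root returns an empty list when every variable has a value, and otherwise lists the ones still to fill in.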

Start a local Airflow cluster

Create the Airflow metadata database and an admin user:

$ airflow db init
$ airflow users create -u admin -r Admin --password admin -e admin -f admin -l admin

Start the Airflow webserver:

$ airflow webserver

In a separate terminal (with the same environment loaded), start the Airflow scheduler:

$ airflow scheduler

Go to the Airflow UI (http://localhost:8080 by default) and log in with username admin and password admin.

To exit the Poetry shell:

$ exit

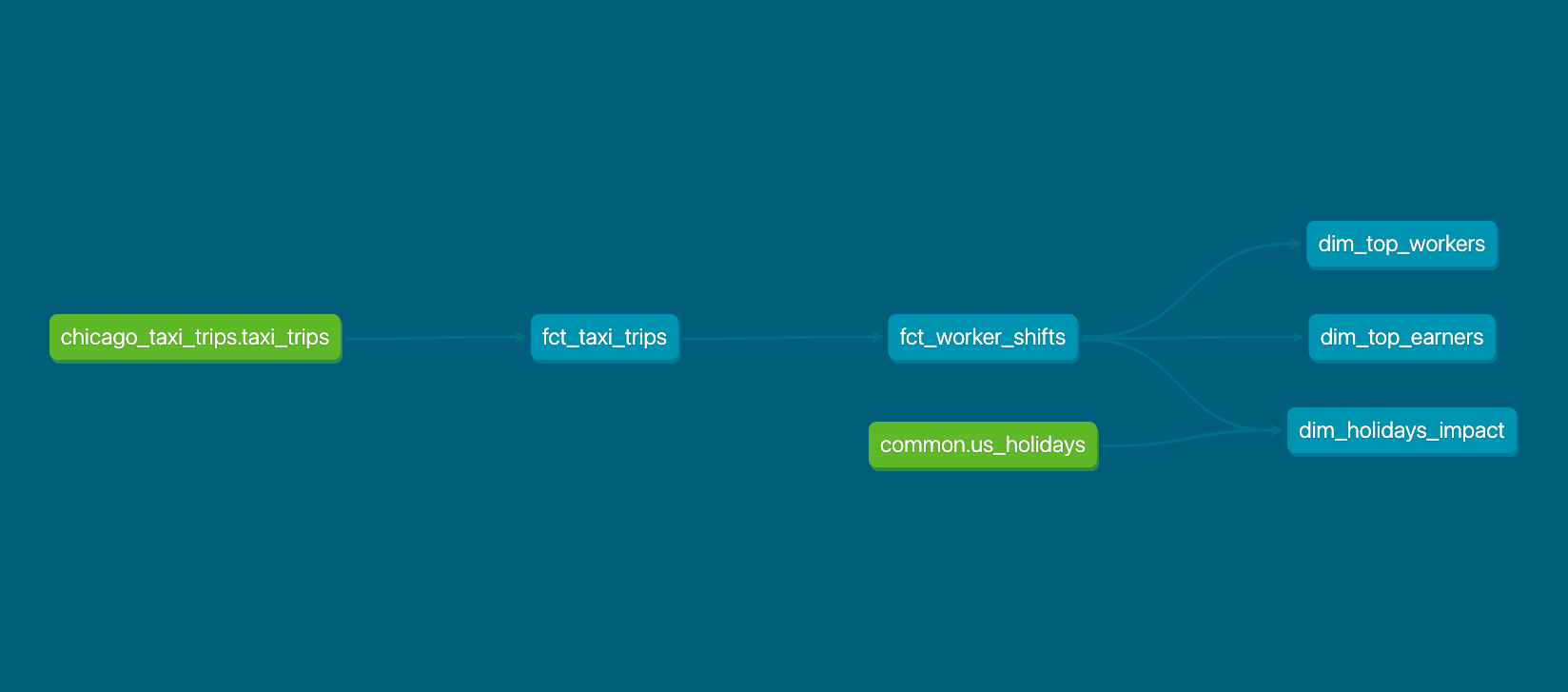

A taxi driver can have at most one shift per day.

A shift spans from the driver's earliest trip_start_timestamp to their latest trip_end_timestamp within a single day.
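The two rules above can be illustrated with a small Python sketch that collapses each driver's trips into one shift per day. It assumes a hypothetical taxi_id field identifying the driver and assigns each trip to the calendar day of its trip_start_timestamp; the project itself may compute shifts differently (for example in dbt SQL on BigQuery), so treat this as an illustration of the rule rather than the pipeline's actual code.

```python
"""Sketch: derive at most one shift per taxi driver per day from trip records."""
from __future__ import annotations

from dataclasses import dataclass
from datetime import date, datetime


@dataclass(frozen=True)
class Trip:
    taxi_id: str  # assumed identifier for the driver/taxi
    trip_start_timestamp: datetime
    trip_end_timestamp: datetime


@dataclass(frozen=True)
class Shift:
    taxi_id: str
    day: date
    shift_start: datetime  # earliest trip_start_timestamp that day
    shift_end: datetime  # latest trip_end_timestamp that day


def compute_shifts(trips: list[Trip]) -> list[Shift]:
    """Collapse trips into one shift per (taxi_id, day).

    Assumption: a trip belongs to the calendar day of its trip_start_timestamp.
    """
    bounds: dict[tuple[str, date], tuple[datetime, datetime]] = {}
    for trip in trips:
        key = (trip.taxi_id, trip.trip_start_timestamp.date())
        if key in bounds:
            start, end = bounds[key]
            bounds[key] = (
                min(start, trip.trip_start_timestamp),
                max(end, trip.trip_end_timestamp),
            )
        else:
            bounds[key] = (trip.trip_start_timestamp, trip.trip_end_timestamp)
    # Sort by (taxi_id, day) so the output order is deterministic.
    return [
        Shift(taxi_id, day, start, end)
        for (taxi_id, day), (start, end) in sorted(bounds.items())
    ]
```

Because trips are grouped by start date in this sketch, a trip that crosses midnight extends that day's shift rather than opening a new one.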