All models are trained on 4 GPUs with a minibatch size of 128. Testing is turned off during training due to memory limits (at least 12 GB is required). The LMDB data is obtained from the official Caffe ImageNet tutorial.
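As a rough sanity check on the batch size, the sketch below splits a total minibatch across a comma-separated GPU list. It assumes that 128 is the total across all 4 GPUs; stock Caffe applies the `batch_size` in the net prototxt to each GPU, so the prototxt value would be the per-GPU number. The helper names `count_gpus` and `per_gpu_batch` are hypothetical and not part of this repository.

```shell
#!/bin/sh
# Count the devices in a comma-separated GPU list such as "0,1,2,3".
count_gpus() {
    echo "$1" | tr ',' '\n' | grep -c .
}

# Per-GPU batch size, ASSUMING the total minibatch is split evenly
# across the listed GPUs (integer division).
per_gpu_batch() {
    total_batch=$1
    gpus=$(count_gpus "$2")
    echo $(( total_batch / gpus ))
}

# 128 images in total over GPUs 0,1,2,3; prints 32
per_gpu_batch 128 0,1,2,3
```

If 128 is instead the per-GPU value, the effective minibatch on 4 GPUs would be 512, so it is worth checking the prototxt before comparing runs.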

To train a network, use train.sh. For example, to train ResNet-50 on GPUs 0, 1, 2 and 3:

#set caffe path in train.sh

mkdir resnet_50/logs

mkdir resnet_50/snapshot

./train.sh 0,1,2,3 resnet_50 resnet_50_

For better training results, please install my Caffe fork, since the official Caffe ImageData layer doesn't support the original paper's augmentation (resize the shorter side to 256, then crop to 224x224). Use my 224x224 mean image, bgr.binaryproto, accordingly.

See the ImageData layer in resnet_50/ResNet-50-test.prototxt for details.
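To make the resize-then-crop geometry concrete, here is a minimal sketch of the arithmetic. It is not the fork's implementation: the rounding to the nearest pixel is an assumption, the helper names `resize_shorter_side` and `center_crop_offset` are hypothetical, and a centered crop is used only for illustration (training would typically pick the crop position at random).

```shell
#!/bin/sh
# Resize so the shorter side equals `target`, keeping the aspect ratio.
# Prints "new_width new_height", rounded to the nearest pixel (assumed).
resize_shorter_side() {
    w=$1; h=$2; target=$3
    if [ "$w" -le "$h" ]; then
        new_w=$target
        new_h=$(( (h * target + w / 2) / w ))
    else
        new_h=$target
        new_w=$(( (w * target + h / 2) / h ))
    fi
    echo "$new_w $new_h"
}

# Top-left corner "x y" of a centered crop x crop window.
center_crop_offset() {
    w=$1; h=$2; crop=$3
    echo "$(( (w - crop) / 2 )) $(( (h - crop) / 2 ))"
}

# A 500x375 image: the shorter side (375) is scaled to 256.
dims=$(resize_shorter_side 500 375 256)
echo "resized: $dims"            # prints "resized: 341 256"

# Word splitting of $dims is intentional: it supplies width and height.
center_crop_offset $dims 224     # prints "58 16"
```

The 224x224 crop always fits, because after resizing both sides are at least 256 pixels.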

If you find the code useful in your research, please consider citing:

@InProceedings{He_2017_ICCV,

author = {He, Yihui and Zhang, Xiangyu and Sun, Jian},

title = {Channel Pruning for Accelerating Very Deep Neural Networks},

booktitle = {The IEEE International Conference on Computer Vision (ICCV)},

month = {Oct},

year = {2017}

}

Use resnet_50/ResNet-50-test.prototxt for training and validation.

(new) We've released a 2x accelerated ResNet-50 caffemodel using channel pruning.

This is a bottleneck architecture.

Since there is no strong data augmentation or 10-crop testing in Caffe, the results may be a bit low.

Test accuracy: accuracy@1 = 0.67892, accuracy@5 = 0.88164
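ImageNet results are often quoted as error rates rather than accuracies. The following sketch converts the two numbers above, assuming they are fractions in [0, 1] measured on a single crop; the helper name `error_percent` is hypothetical.

```shell
#!/bin/sh
# Convert an accuracy in [0, 1] to an error rate in percent.
error_percent() {
    awk -v acc="$1" 'BEGIN { printf "%.3f\n", (1 - acc) * 100 }'
}

echo "top-1 error: $(error_percent 0.67892)%"   # prints "top-1 error: 32.108%"
echo "top-5 error: $(error_percent 0.88164)%"   # prints "top-5 error: 11.836%"
```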

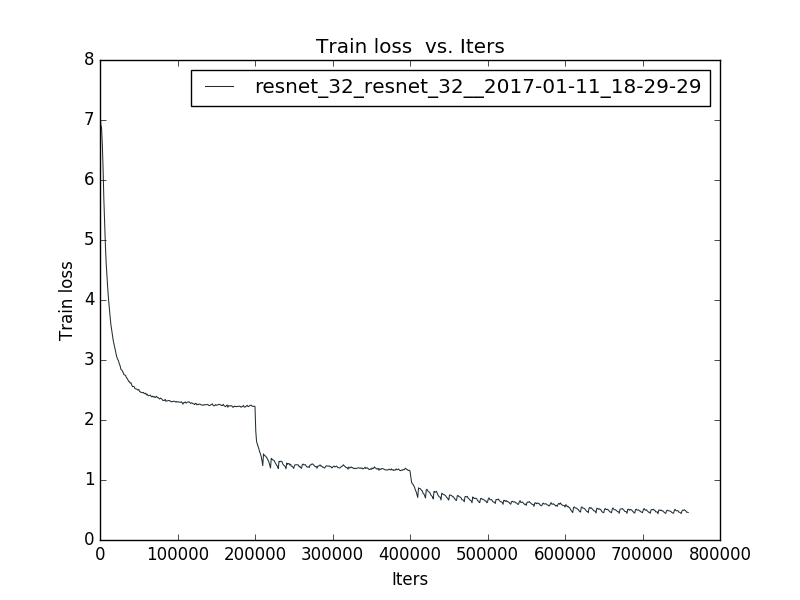

training loss for resnet-32 is shown below:

the trained model is provided in release